2026-02-01 09:23:01

A few weeks ago I built David Snyder's Diretta project for a ROON endpoint consisting of 2 Raspberry Pis running Audiolinux. Here is a description of the project and a picture of the experimental setup.

https://blog.watzmann.…

2026-01-01 19:51:11

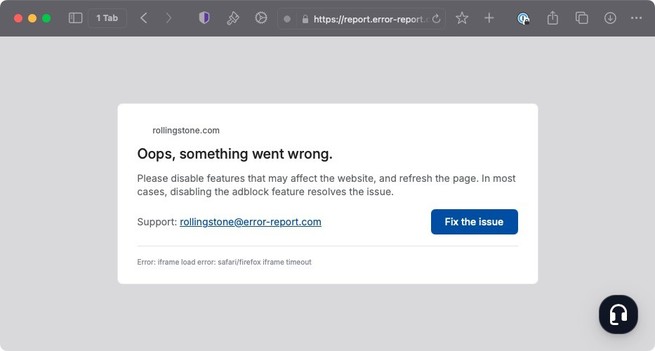

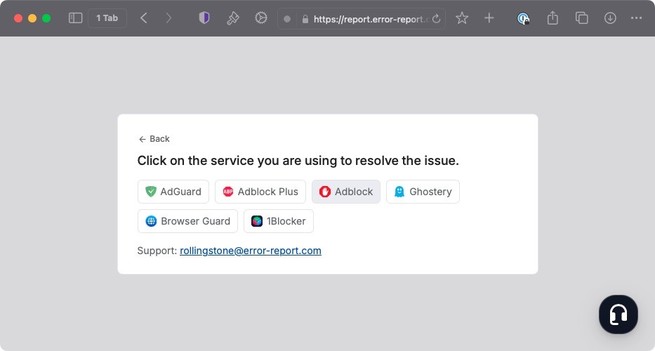

Wow, seems the people farmers of surveillance capitalism have fully embraced scammer techniques now.

Ran into this new flow on a number of sites just now (Indiewire, Variety, and RollingStone) delivered by the colossal douchebags at html-load.com who run report-error.com.

They make it look like a browser error has occurred and then tell you to disable your tracker/ad blocker.

To the asshole developers who built this for them instead of refusing: Fuck you for making everyone…

2025-12-02 07:20:45

Whoa, Bärbel Bas remembers the "S" in #SPD:

(And DER SPIEGEL only reports on it because the employers think it sucks. Capitalist Realism in action ...)

2026-02-01 17:29:54

Joining the #fosdem translations room today was fun, also spontaneously taking over A/V duty for the room was a nice experience. @… brought a great energy into the room despite the very hot air and the locked windows. Thank you!

2026-02-01 14:15:53

In the #Sauerland, an entire ski area (#Skigebiet) is up for sale: the long-established #Sahnehang.

The offer includes slopes,

2026-01-31 21:41:01

An interview with Nvidia's senior VP of hardware engineering, Andrew Bell, on continuing to provide Shield Android TV software updates a decade after its launch (Ryan Whitwam/Ars Technica)

https://arstechnica.com/gadgets/2026/0

2026-02-02 05:47:12

"This also changes what an open-source project actually is. It is no longer perceived as a socio-technical fabric in which code, documentation, community, and funding relate to one another, but as a mere data supplier for downstream systems. The project becomes invisible while its products continue to circulate."

#oss

2026-01-31 20:57:15

The new Dutch coalition agreement talks a lot about nitrogen. By delaying measures for many years, we've now run into severe limitations on nitrogen deposition in nature areas. And the outgoing right-far-right coalition only made matters worse.

But: I'm not so sure we're taking the next big environmental problem seriously. The EU Water Framework Directive has 2027 goals for water quality, and - guess what - the Netherlands is not on track to meet those.

2026-01-31 20:21:45

Incredible. I love the idea of taking checkpoints and the "papers please" mentality, and turning it around on the nazis.

#FuckICE #Minnesota (and yes, this is very much also #TacticalUrbanism)

2026-03-01 13:02:30

"We know where this leads, after all"

Sure, when it comes to current political slogans, it is important to point to the horrors of the extermination camps, of the world war, of the criminal totalitarian regime.

At the same time, we should be ashamed that we as a society do not simply recognize and reject contempt for humanity regardless of whether it will lead to even more extreme horrors in the future, simply because inhumane …