2025-11-24 02:42:47

Rampart Talks

Exclusive interviews with the most intriguing businesspeople Australia has going for it...

Great Australian Pods Podcast Directory: https://www.greataustralianpods.com/rampart-talks/

2025-10-24 12:49:30

Bills getting defensive reinforcements in hopes to avoid 3-game slide https://www.espn.com/nfl/story/_/id/46700557/buffalo-bills-defensive-reinforcements-michael-hoecht-larry-ogunjob-week-8

2026-01-21 11:51:00

2025-12-22 01:17:06

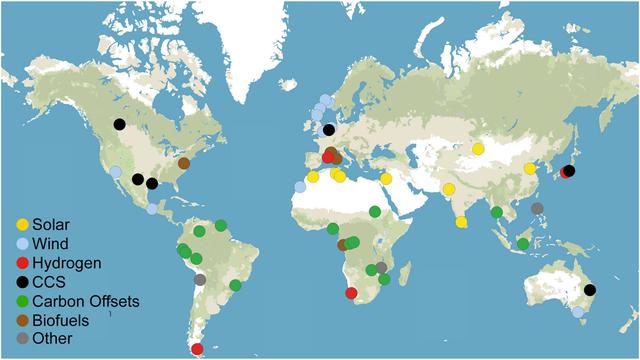

Fossil fuel industry's 'climate false solutions' reinforce its power, aggravate environmental injustice, study suggests https://phys.org/news/2025-12-fossil-fuel-industry-climate-false.html

2025-11-22 21:19:13

🇺🇦 Now playing on radioeins...

AFI:

🎵 Behind the Clock

#NowPlaying #AFI

https://runforcoverrecords.bandcamp.com/track/behind-the-clock

https://open.spotify.com/track/7x3ir1NFIt7z5Sy1rJTHLJ

2025-12-24 19:12:26

Russian troops step up pressure on Mirnohrad as Ukraine reinforces defenses and supply corridors: https://benborges.xyz/2025/12/24/russian-troops-step-up-pressure.html

2025-11-23 12:00:09

"From fossil fuel roadmaps to rainforest finance: The 10 biggest stories from COP30"

#FossilFuels #COP30 #ClimateSummit

2026-01-24 13:15:21

(€) In the Neudegger Siedlung in #Donauwörth, a review is under way of how the heat supply can become less dependent on #Erdgas (natural gas) in the future.

The plan is to use #Großwärmepumpen (large-scale heat pumps)

2026-01-21 21:17:00

Blue Origin is also building a satellite network: Terawave

The space company Blue Origin also wants to set up a large satellite constellation. The second tier is expected to reach up to 6 terabit/s.

https://www.

2026-01-24 18:43:26

Russia's strikes on the energy sector – Ukraine requests urgent reinforcement of air defense #shorts: https://benborges.xyz/2026/01/24/russias-strikes-on-the-energy.html