Climate justice took center stage at Pre-COP30 as African and Global South advocates made their case clear: adaptation finance isn't charity—it's survival.

CSOs pushed for locally-rooted indicators in the Global Goal on Adaptation, fair compensation for communities displaced by green projects, and recognition of food sovereignty in climate policy.

h…

When the Trump administration began freezing federal funding for climate and ecosystem research, one of the programs hit hard was ours: the U.S. Geological Survey’s Climate Adaptation Science Centers.

These nine regional centers help fish, wildlife, water, land – and, importantly, people – adapt to rising global temperatures and other climate shifts.

The centers have been helping to track invasive species, protect water supplies and make agriculture more sustainable in the face of…

An hour-long piece on how the adaptation of "The Wizard of Oz" to an #immersive show in the #Sphere is a glorious failure (artistically at least): #fulldome planetarium production.

Line L: adjusted service. On 2 January 2026, train service on your line is adjusted. To plan your journey, check your timetable before heading to the station on the Ile-de-France Mobilités app, the Transilien.com website, SNCF Connect or your mobility app.

Reason: difficulties due to a staff shortage.

🤖 02/01 05:00

Ruri Dragon Manga by Masaoki Shindo gets TV Anime Adaptation

#RuriDragon

Treating emergent traits of the microbiome like quantitative genetic traits of the host reveals how environmentally acquired symbionts can contribute to hosts' adaptation

https://doi.org/10.1093/evolut/qpaf171

Mitigating Forgetting in Low Rank Adaptation

Joanna Sliwa, Frank Schneider, Philipp Hennig, Jose Miguel Hernandez-Lobato

https://arxiv.org/abs/2512.17720 https://arxiv.org/pdf/2512.17720 https://arxiv.org/html/2512.17720

arXiv:2512.17720v1 Announce Type: new

Abstract: Parameter-efficient fine-tuning methods, such as Low-Rank Adaptation (LoRA), enable fast specialization of large pre-trained models to different downstream applications. However, this process often leads to catastrophic forgetting of the model's prior domain knowledge. We address this issue with LaLoRA, a weight-space regularization technique that applies a Laplace approximation to Low-Rank Adaptation. Our approach estimates the model's confidence in each parameter and constrains updates in high-curvature directions, preserving prior knowledge while enabling efficient target-domain learning. By applying the Laplace approximation only to the LoRA weights, the method remains lightweight. We evaluate LaLoRA by fine-tuning a Llama model for mathematical reasoning and demonstrate an improved learning-forgetting trade-off, which can be directly controlled via the method's regularization strength. We further explore different loss landscape curvature approximations for estimating parameter confidence, analyze the effect of the data used for the Laplace approximation, and study robustness across hyperparameters.
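To make the idea in the abstract concrete, here is a minimal sketch of a curvature-weighted quadratic penalty of the kind a Laplace approximation suggests. It is not the paper's implementation. The diagonal curvature, the flat parameter lists, and the names `laplace_penalty`, `laplace_penalty_grad` and `regularized_step` are all assumptions made for illustration.

```python
# Illustrative sketch only: a diagonal, Laplace-style quadratic penalty.
# All names and the diagonal-curvature choice are assumptions, not LaLoRA's code.

def laplace_penalty(theta, theta_ref, curvature, strength):
    """Return 0.5 * strength * sum_i h_i * (theta_i - theta_ref_i)^2."""
    return 0.5 * strength * sum(
        h * (t - r) ** 2 for t, r, h in zip(theta, theta_ref, curvature)
    )


def laplace_penalty_grad(theta, theta_ref, curvature, strength):
    """Gradient of the penalty with respect to each entry of theta."""
    return [strength * h * (t - r) for t, r, h in zip(theta, theta_ref, curvature)]


def regularized_step(theta, task_grad, theta_ref, curvature, strength, lr):
    """One gradient-descent step on task loss plus penalty."""
    pen_grad = laplace_penalty_grad(theta, theta_ref, curvature, strength)
    return [t - lr * (g + p) for t, g, p in zip(theta, task_grad, pen_grad)]


if __name__ == "__main__":
    theta = [1.0, 1.0]        # current (hypothetical) adapter weights
    theta_ref = [0.0, 0.0]    # weights the penalty pulls back towards
    curvature = [10.0, 0.1]   # high curvature = high confidence in that weight
    print(laplace_penalty(theta, theta_ref, curvature, strength=1.0))
    print(regularized_step(theta, [0.0, 0.0], theta_ref, curvature, 1.0, lr=0.05))
```

In this toy setup the first coordinate, which has the larger curvature, is pulled back towards its reference value much harder than the second. Raising `strength` tightens that pull, which is one simple way to picture the learning-forgetting trade-off the abstract mentions.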

toXiv_bot_toot

Here's my take on the adaptation of Wicked from stage to screen in my latest Human Meme podcast episode:

https://humanmeme.com/wicked-the-bespoke-voice-and-the-echo-of-the-ghost

Line L: adjusted service. On 2 January 2026, train service on your line is adjusted. To plan your journey, check your timetable before heading to the station on the Ile-de-France Mobilités app, the Transilien.com website, SNCF Connect or your mobility app.

Reason: difficulties due to a staff shortage.

🤖 31/12 17:00

Just finished "The Raven Boys," a graphic novel adaptation of a novel by Maggie Stiefvater (adaptation written by Stephanie Williams and illustrated by Sas Milledge).

I haven't read the original novel, and because of that, this version felt way too dense, having to fit huge amounts of important details into not enough pages. The illustrations are gorgeous and the writing is fine; the setting and plot have some pretty interesting aspects... It's just too hard to follow a lot of the threads, or things we're supposed to care about aren't given the time/space to feel important.

The other thing that I didn't like: one of the central characters is rich, and we see this reflected in several ways, but we're clearly expected to ignore/excuse the class differences within the cast because he's a good guy. At this point in my life, I'm simply no longer very interested in stories about good rich guys. It's become clear to me how in real life, we constantly get the perspectives of the rich, and rarely if ever hear the perspectives of the poor (same applies across racial and gender gradients, among others). Why then in fiction should I get more of the same, spending my mental bandwidth building empathy for yet another dilettante who somehow has a heart of gold? I'm tired of that.

#AmReading #ReadingNow

#Page42

"Splendid adaptation of Jack London"

(about "The Sea Wolf" by Michael Curtiz)

From "50 ans de Cinéma Américain" by Jean-Pierre Coursodon and Bertrand Tavernier, published by Omnibus

Young people aren't just worried about climate change—they're actively solving it.

Across communities worldwide, youth are designing innovative adaptation solutions focused on justice and inclusion. They're mobilizing action at scale, demanding accessible finance, and ensuring no one gets left behind in the climate transition.

The message to decision-makers is clear: partner with young leaders and invest in youth-led adaptation now.

I've re-watched the BBC's Fortunes of War, for research purposes. I saw it as a kid but it's much tougher to watch as an adult.

Credit to the writer/screen adaptation, it does show characters criticising colonialism, and from a variety of perspectives.

The polite put-downs the British use when jockeying for position in the class system are also painful to watch.

“For millions, adaptation is not an abstract goal,”

“It is the difference between rebuilding and being swept away,

between replanting and starving,

between staying on ancestral land or losing it forever.”

UN Secretary General

Guterres also supported a just transition, to ensure those working in the fossil fuel industry are supported into new livelihoods.

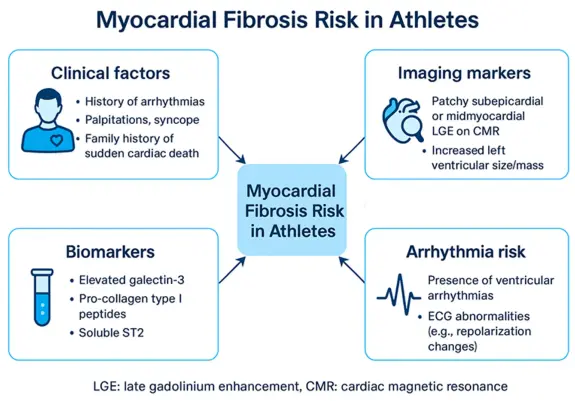

🫀 Myocardial Fibrosis in Athletes: Risk Marker or Physiological Adaptation?

#heart

Extreme heat is becoming deadlier, but we already have solutions that work.

The Heat Is On campaign showcased proven approaches—early warning systems and nature-based solutions—that have saved lives and protected communities worldwide. At COP30, this momentum paid off: adaptation finance is set to triple under the Belém Package, finally putting climate adaptation on equal footing with mitigation.

How can we make sustainability part of everything we do?

That question led to the creation of SIG-Sustainability, a new community space within the GÉANT Community Programme (GCP).

Launched in 2024, the group brings together members from across our community to exchange ideas and tools, from reducing carbon footprint to promoting inclusivity & climate adaptation.

📖 Read the full interview with the SIG-Sustainability Coordinator Judith Six (Jisc) in

Easy Adaptation: An Efficient Task-Specific Knowledge Injection Method for Large Models in Resource-Constrained Environments

Dong Chen, Zhengqing Hu, Shixing Zhao, Yibo Guo

https://arxiv.org/abs/2512.17771 https://arxiv.org/pdf/2512.17771 https://arxiv.org/html/2512.17771

arXiv:2512.17771v1 Announce Type: new

Abstract: While the enormous parameter scale endows Large Models (LMs) with unparalleled performance, it also limits their adaptability across specific tasks. Parameter-Efficient Fine-Tuning (PEFT) has emerged as a critical approach for effectively adapting LMs to a diverse range of downstream tasks. However, existing PEFT methods face two primary challenges: (1) High resource cost. Although PEFT methods significantly reduce resource demands compared to full fine-tuning, it still requires substantial time and memory, making it impractical in resource-constrained environments. (2) Parameter dependency. PEFT methods heavily rely on updating a subset of parameters associated with LMs to incorporate task-specific knowledge. Yet, due to increasing competition in the LMs landscape, many companies have adopted closed-source policies for their leading models, offering access only via Application Programming Interface (APIs). Whereas, the expense is often cost-prohibitive and difficult to sustain, as the fine-tuning process of LMs is extremely slow. Even if small models perform far worse than LMs in general, they can achieve superior results on particular distributions while requiring only minimal resources. Motivated by this insight, we propose Easy Adaptation (EA), which designs Specific Small Models (SSMs) to complement the underfitted data distribution for LMs. Extensive experiments show that EA matches the performance of PEFT on diverse tasks without accessing LM parameters, and requires only minimal resources.


Line L: adjusted service. During the school holidays from 25 December 2025 to 1 January 2026, train service on your line is adjusted: some trains will not run. To plan your journey, check your timetable before heading to the station on the Ile-de-France Mobilités app, the Transilien.com website, SNCF Connect or your mobility app.

🤖 25/12 03:00

Replaced article(s) found for math.GN. https://arxiv.org/list/math.GN/new

[1/1]:

- An Adaptation of the Vietoris Topology for Ordered Compact Sets

Christopher Caruvana, Jared Holshouser

https://arxiv.org/abs/2507.17936 https://mastoxiv.page/@arXiv_mathGN_bot/114912817437529340

- No Nowhere Constant Continuous Function Maps All Non-Normal Numbers to Normal Numbers

Chokri Manai

https://arxiv.org/abs/2506.15422 https://mastoxiv.page/@arXiv_mathNT_bot/114709233846620023


RE: #Climate change mitigation is cheaper than #adaptation, but we need both 👇. It's rarely 'either/or' in this space, almost always 'both/and'. Which raises the question of how to pay.

After ending #fossilfuel subsidies (innovations like any UK #tax on airline fuel🤯) I come back to #CarbonCurrency as an idea that could work at the required, gigantic scale. HT Delton Chen, Kim Stanley Robinson etc.

#solarpunk #CarbonCoin

Поступова трансформація медичних діагнозів у лайку — на жаль, поширене явище. Цікаво, що "аутист" мігрує в іншому напрямку і може тепер стати компліментом.

Цікаво, як так сталося.

Цікаво, чи можливо відтворити цей процес.

...А спонсор цього поста — Уляна. Уляна: пиряй в блекаут з укулеле.

---

The gradual transformation of medical diagnoses into slurs is, unfortunately, quite ubiquitous. With this in mind, a reverse migration of "autistic" is all…

The Theory of Strategic Evolution: Games with Endogenous Players and Strategic Replicators

Kevin Vallier

https://arxiv.org/abs/2512.07901 https://arxiv.org/pdf/2512.07901 https://arxiv.org/html/2512.07901

arXiv:2512.07901v1 Announce Type: new

Abstract: This paper develops the Theory of Strategic Evolution, a general model for systems in which the population of players, strategies, and institutional rules evolve together. The theory extends replicator dynamics to settings with endogenous players, multi level selection, innovation, constitutional change, and meta governance. The central mathematical object is a Poiesis stack: a hierarchy of strategic layers linked by cross level gain matrices. Under small gain conditions, the system admits a global Lyapunov function and satisfies selection, tracking, and stochastic stability results at every finite depth. We prove that the class is closed under block extension, innovation events, heterogeneous utilities, continuous strategy spaces, and constitutional evolution. The closure theorem shows that no new dynamics arise at higher levels and that unrestricted self modification cannot preserve Lyapunov structure. The theory unifies results from evolutionary game theory, institutional design, innovation dynamics, and constitutional political economy, providing a general mathematical model of long run strategic adaptation.
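For readers unfamiliar with the starting point the abstract builds on, here is a minimal sketch of the textbook replicator equation, x_i' = x_i (f_i - f_mean) with f = A x, integrated by a plain Euler step. It does not model the paper's Poiesis stack or endogenous players. The function name `replicator_step`, the payoff matrix and the step size are assumptions chosen for illustration.

```python
# Illustrative sketch only: textbook replicator dynamics with a fixed payoff
# matrix. This is not the paper's model; names and values are assumptions.

def replicator_step(shares, payoff, dt):
    """Advance strategy shares by one Euler step of the replicator equation."""
    # Fitness of each strategy against the current population mix.
    fitness = [sum(a * s for a, s in zip(row, shares)) for row in payoff]
    # Population-average fitness.
    mean_fitness = sum(s * f for s, f in zip(shares, fitness))
    # Shares grow in proportion to their fitness advantage over the average.
    updated = [s + dt * s * (f - mean_fitness) for s, f in zip(shares, fitness)]
    # Renormalize to guard against floating-point drift.
    total = sum(updated)
    return [u / total for u in updated]


if __name__ == "__main__":
    payoff = [[2.0, 2.0], [1.0, 1.0]]  # strategy 0 always earns more
    shares = [0.5, 0.5]
    for _ in range(100):
        shares = replicator_step(shares, payoff, dt=0.1)
    print(shares)
```

With this payoff matrix the first strategy always earns more than the second, so its share grows at every step until it takes over almost the whole population.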


KENT's manga Daikaijuu Gaea-tima (The Great Gaea-Tima) will be made into an anime.

Currently in production.

#manga #DaikaijuuGaeaTima #anime

"Migration, Adaptation and Memory" - 9th International Interdisciplinary Conference

https://ift.tt/FgKbpik

updated: Friday, January 23, 2026 - 4:19pm

full name / name of organization: InMind Support

contact…

via Input 4 RELCFP

The UN is looking for a new home for its Climate Technology Centre—the hub that helps developing nations access critical climate tech for adaptation and mitigation.

This is a major opportunity: the hosting mandate runs until 2041, positioning the selected organization at the center of global climate technology cooperation.

Proposals due March 16, 2026.

🇺🇦 #NowPlaying on BBCRadio3's #WordsAndMusic

Florence Price & New York Youth Symphony Orchestra:

🎵 Ethiopia's Shadow in America III: His Adaptation

#FlorencePrice #NewYorkYouthSymphonyOrchestra

Text and Texture: Rethinking Materiality in Adaptation Studies

https://ift.tt/B6wLm2N

updated: Tuesday, January 6, 2026 - 10:50pm

full name / name of organization: Assoc. Prof. Gülden…

via Input 4 RELCFP