2026-02-16 10:12:03

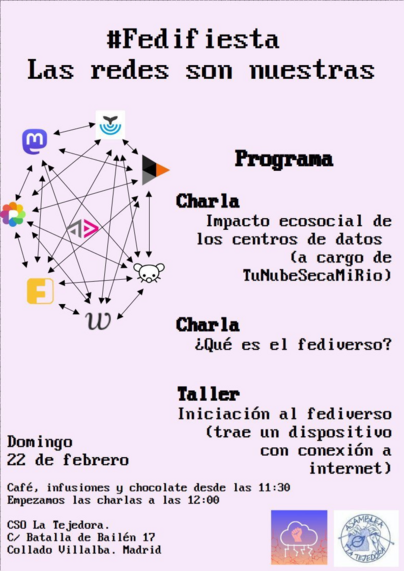

Reminder that this Sunday is the #fedifiesta in #ColladoVillalba, #Madrid, with the star appearance of @…

2026-01-07 22:17:00

Series D, Episode 12 - Warlord

TARRANT: Who's with you? I repeat, who's that with you, Lodestar?

[Zukan's space ship]

ZUKAN: This is Zukan, President of Betafarl, come to attend the conference of the non-aligned planets.

https://blake.torpidity.net/m/412/27 B7B6

2025-12-05 17:08:44

None of the terminals that claim to be the fastest beat Xfce4-terminal's speed on any of my computers. Ghostty, however, does.

It bothers me that I don't know why, but for now I'm going to switch to this terminal emulator and see how it goes.

https://ghostty.org/

2025-11-26 21:17:31

Someone has encouraged me to get involved in party politics by joining a political party. I am against it, however, because political parties often represent interests that conflict with my own values and beliefs.

Rather than aligning myself with parties whose agendas are incompatible with my views, I want to organize my local community, prioritizing grassroots involvement and direct democratic participation over representative party politics.

As a syndicalist, I firmly believe tha…

2026-02-03 07:44:55

Genus-0 Surface Parameterization using Spherical Beltrami Differentials

Zhehao Xu, Lok Ming Lui

https://arxiv.org/abs/2602.01589 https://arxiv.org/pdf/2602.01589 https://arxiv.org/html/2602.01589

arXiv:2602.01589v1 Announce Type: new

Abstract: Spherical surface parameterization is a fundamental tool in geometry processing and imaging science. For a genus-0 closed surface, many efficient algorithms can map the surface to the sphere; consequently, a broad class of task-driven genus-0 mapping problems can be reduced to constructing a high-quality spherical self-map. However, existing approaches often face a trade-off between satisfying task objectives (e.g., landmark or feature alignment), maintaining bijectivity, and controlling geometric distortion. We introduce the Spherical Beltrami Differential (SBD), a two-chart representation of quasiconformal self-maps of the sphere, and establish its correspondence with spherical homeomorphisms up to conformal automorphisms. Building on the Spectral Beltrami Network (SBN), we propose a neural optimization framework BOOST that optimizes two Beltrami fields on hemispherical stereographic charts and enforces global consistency through explicit seam-aware constraints. Experiments on large-deformation landmark matching and intensity-based spherical registration demonstrate the effectiveness of our proposed framework. We further apply the method to brain cortical surface registration, aligning sulcal landmarks and jointly matching cortical sulci depth maps, showing improved task fidelity with controlled distortion and robust bijective behavior.
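The "two-chart" representation in the abstract rests on ordinary stereographic projection. As an illustration only (not the authors' BOOST code), the sketch below projects the southern hemisphere from the north pole and the northern hemisphere from the south pole. Each hemisphere lands in the closed unit disk, the two chart coordinates satisfy w = 1/z, and on the equator (the seam) this reduces to w = conj(z). All function names are hypothetical.

```python
import math


def chart_from_north(x: float, y: float, t: float) -> complex:
    """Stereographic projection from the north pole (0, 0, 1).

    Points with t <= 0 (the southern hemisphere) land in the closed unit disk.
    """
    return complex(x, y) / (1.0 - t)


def chart_from_south(x: float, y: float, t: float) -> complex:
    """Stereographic projection from the south pole (0, 0, -1), conjugated so
    that the transition map between the two charts is w = 1/z (holomorphic).

    Points with t >= 0 (the northern hemisphere) land in the closed unit disk.
    """
    return complex(x, -y) / (1.0 + t)


def seam_mismatch(theta: float) -> float:
    """On the equator the charts should agree via w = conj(z); return the gap."""
    x, y, t = math.cos(theta), math.sin(theta), 0.0
    z = chart_from_north(x, y, t)
    w = chart_from_south(x, y, t)
    return abs(w - z.conjugate())


if __name__ == "__main__":
    # A unit-sphere point off the poles: 0.48^2 + 0.6^2 + 0.64^2 = 1.
    p = (0.48, 0.6, 0.64)
    z, w = chart_from_north(*p), chart_from_south(*p)
    print(abs(z * w - 1) < 1e-12)  # the charts are related by w = 1/z
    print(max(seam_mismatch(k * 0.1) for k in range(63)) < 1e-12)
```

A seam-aware constraint, in this picture, would penalize disagreement between the two hemispherical maps along |z| = 1; how the paper formulates that penalty is not stated in the abstract.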

toXiv_bot_toot

2026-01-21 18:40:51

I've been getting some really weird calls here at home. A robot says it has a confidential message for my mother and asks for a taxpayer number.

I tell it to piss off and hang up. It reeks of fraud. But when I search for the number, I can't find any of the warnings that usually turn up online.

Does anyone have any idea?

2025-11-27 09:02:26

Photo-induced carrier dynamics in InSb probed with broadband THz spectroscopy based on BNA crystals

Elodie Iglesis, Alexandr Alekhin, Maximilien Cazayous, Alain Sacuto, Yann Gallais, Sarah Houver

https://arxiv.org/abs/2511.20896

2025-11-25 10:53:53

MOCLIP: A Foundation Model for Large-Scale Nanophotonic Inverse Design

S. Rodionov, A. Burguete-Lopez, M. Makarenko, Q. Wang, F. Getman, A. Fratalocchi

https://arxiv.org/abs/2511.18980 https://arxiv.org/pdf/2511.18980 https://arxiv.org/html/2511.18980

arXiv:2511.18980v1 Announce Type: new

Abstract: Foundation models (FM) are transforming artificial intelligence by enabling generalizable, data-efficient solutions across different domains for a broad range of applications. However, the lack of large and diverse datasets limits the development of FM in nanophotonics. This work presents MOCLIP (Metasurface Optics Contrastive Learning Pretrained), a nanophotonic foundation model that integrates metasurface geometry and spectra within a shared latent space. MOCLIP employs contrastive learning to align geometry and spectral representations using an experimentally acquired dataset with a sample density comparable to ImageNet-1K. The study demonstrates MOCLIP inverse design capabilities for high-throughput zero-shot prediction at a rate of 0.2 million samples per second, enabling the design of a full 4-inch wafer populated with high-density metasurfaces in minutes. It also shows generative latent-space optimization reaching 97 percent accuracy. Finally, we introduce an optical information storage concept that uses MOCLIP to achieve a density of 0.1 Gbit per square millimeter at the resolution limit, exceeding commercial optical media by a factor of six. These results position MOCLIP as a scalable and versatile platform for next-generation photonic design and data-driven applications.
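The name MOCLIP suggests CLIP-style contrastive pretraining. Assuming (this is not confirmed by the abstract) that it uses an objective similar to CLIP's, the sketch below shows the standard symmetric InfoNCE loss: paired geometry and spectrum embeddings are L2-normalized, compared by cosine similarity divided by a temperature, and each row and column of the similarity matrix is trained to pick out its own partner. The temperature of 0.07 and all names are illustrative.

```python
import math
from typing import List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    norm = math.sqrt(sum(a * a for a in v))
    return [a / norm for a in v]


def cross_entropy_row(logits: Vector, target: int) -> float:
    # Log-sum-exp with max subtraction for numerical stability.
    m = max(logits)
    lse = m + math.log(sum(math.exp(l - m) for l in logits))
    return lse - logits[target]


def clip_loss(geom: List[Vector], spec: List[Vector], temperature: float = 0.07) -> float:
    """Symmetric InfoNCE: the i-th geometry embedding should match the i-th spectrum."""
    g = [normalize(v) for v in geom]
    s = [normalize(v) for v in spec]
    n = len(g)
    # logits[i][j] = cosine(geometry i, spectrum j) / temperature
    logits = [[sum(a * b for a, b in zip(g[i], s[j])) / temperature
               for j in range(n)] for i in range(n)]
    geom_to_spec = sum(cross_entropy_row(logits[i], i) for i in range(n)) / n
    spec_to_geom = sum(cross_entropy_row([logits[i][j] for i in range(n)], j)
                       for j in range(n)) / n
    return 0.5 * (geom_to_spec + spec_to_geom)


if __name__ == "__main__":
    geom = [[1.0, 0.0], [0.0, 1.0]]
    aligned = [[1.0, 0.0], [0.0, 1.0]]
    swapped = [[0.0, 1.0], [1.0, 0.0]]
    print(clip_loss(geom, aligned) < clip_loss(geom, swapped))
```

Once such a shared latent space is trained, zero-shot inverse design can be framed as retrieving the geometry whose embedding is closest to a target spectrum's embedding; the abstract does not describe MOCLIP's exact retrieval procedure.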

toXiv_bot_toot

2025-12-22 10:34:10

Exploiting ID-Text Complementarity via Ensembling for Sequential Recommendation

Liam Collins, Bhuvesh Kumar, Clark Mingxuan Ju, Tong Zhao, Donald Loveland, Leonardo Neves, Neil Shah

https://arxiv.org/abs/2512.17820 https://arxiv.org/pdf/2512.17820 https://arxiv.org/html/2512.17820

arXiv:2512.17820v1 Announce Type: new

Abstract: Modern Sequential Recommendation (SR) models commonly utilize modality features to represent items, motivated in large part by recent advancements in language and vision modeling. To do so, several works completely replace ID embeddings with modality embeddings, claiming that modality embeddings render ID embeddings unnecessary because they can match or even exceed ID embedding performance. On the other hand, many works jointly utilize ID and modality features, but posit that complex fusion strategies, such as multi-stage training and/or intricate alignment architectures, are necessary for this joint utilization. However, underlying both these lines of work is a lack of understanding of the complementarity of ID and modality features. In this work, we address this gap by studying the complementarity of ID- and text-based SR models. We show that these models do learn complementary signals, meaning that either should provide performance gain when used properly alongside the other. Motivated by this, we propose a new SR method that preserves ID-text complementarity through independent model training, then harnesses it through a simple ensembling strategy. Despite this method's simplicity, we show it outperforms several competitive SR baselines, implying that both ID and text features are necessary to achieve state-of-the-art SR performance but complex fusion architectures are not.
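The abstract does not say which ensembling rule the authors use. As one plausible instance, offered only as an assumption, the sketch below blends next-item probabilities from an independently trained ID-based model and a text-based model with a weighted average of their softmax outputs, then ranks the result. The function names and the weight `alpha` are hypothetical.

```python
import math
from typing import Dict, List


def softmax(scores: Dict[str, float]) -> Dict[str, float]:
    m = max(scores.values())
    exps = {item: math.exp(s - m) for item, s in scores.items()}
    total = sum(exps.values())
    return {item: e / total for item, e in exps.items()}


def ensemble_top_k(id_scores: Dict[str, float], text_scores: Dict[str, float],
                   alpha: float = 0.5, k: int = 3) -> List[str]:
    """Blend next-item scores from an ID-based and a text-based model.

    The two models are trained separately; only their output distributions
    are combined. alpha weights the ID model, (1 - alpha) the text model.
    Both dicts are assumed to score the same candidate items.
    """
    p_id = softmax(id_scores)
    p_text = softmax(text_scores)
    blended = {item: alpha * p_id[item] + (1 - alpha) * p_text[item] for item in p_id}
    return sorted(blended, key=blended.get, reverse=True)[:k]


if __name__ == "__main__":
    id_scores = {"a": 5.0, "b": 4.9, "c": 0.0}
    text_scores = {"a": 0.0, "b": 4.9, "c": 5.0}
    print(ensemble_top_k(id_scores, text_scores, k=1))
```

In this toy case neither model ranks item "b" first on its own, but the blend does, which is the kind of complementary signal the abstract describes.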

toXiv_bot_toot

2026-01-27 19:07:50

- Mixed use. 70% offices/business and 30% housing is justified in the hyper-centre. On the other hand, only 20% public-interest housing falls short of Lausanne's commitments. If we want to reach 30% across the city, we have to demand it from big developers like this one too (it isn't a technical constraint, it's a question of capitalist realism). That's where we need to renegotiate.

3/3