2026-01-18 14:00:55

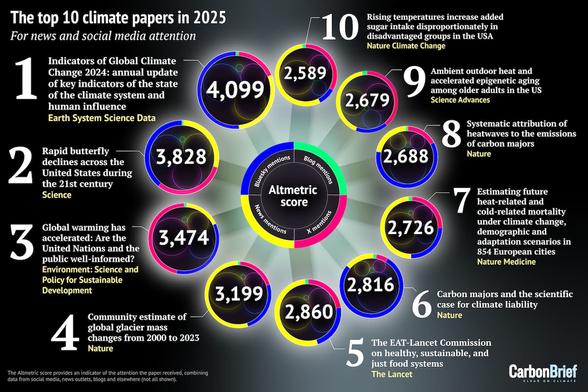

"Analysis: The climate papers most featured in the media in 2025"

#Climate #ClimateChange

https:/…

2025-12-17 20:25:19

Let's Encrypt Ending TLS Client Authentication Certificate Support in 2026

#news

2025-12-17 23:30:52

Trump administration plans to break up largest federal climate research center (NBC News)

https://www.nbcnews.com/science/climate-change/trump-administration-break-climate-research-center-ncar-rcna249668

http://www.memeorandum.com/251217/p128#a251217p128

2025-11-18 13:00:08

"Analysis: Seven charts showing how the $100bn climate-finance goal was met"

#Climate #ClimateChange

2025-12-18 13:00:06

"‘Hot droughts’ could push the Amazon into a hypertropical climate by 2100 - and trees won’t survive"

#Amazon #Climate #ClimateChange

2026-01-17 16:16:09

How Wall Street Turned Its Back on Climate Change (David Gelles/New York Times)

https://www.nytimes.com/2026/01/17/climate/how-wall-street-turned-its-back-on-climate-change.html

http://www.memeorandum.com/260117/p22#a260117p22

2025-11-17 11:00:44

"Climate Plans Focus More on Planting Trees Than on Protecting Forests, Experts Warn"

#Trees #Climate #ClimateChange

2025-11-18 01:50:43

Pope Leo XIV calls for urgent climate action and says God's creation is 'crying out' (Anton L. Delgado/Associated Press)

https://apnews.com/article/pope-climate-talk-video-climate-change-0b33de0843ee2fe7c19e13aeeb6e6d93

http://www.memeorandum.com/251117/p151#a251117p151

2025-12-18 17:00:05

"Polar bears may be adapting to survive warmer climates thanks to their ‘jumping genes’"

#PolarBears #Climate #ClimateChange

2025-11-18 11:00:08

"Fossil fuel lobbyists outnumber most delegations at COP30 climate talks in Brazil"

#Brazil #FossilFuels #Climate