2026-01-02 20:05:31

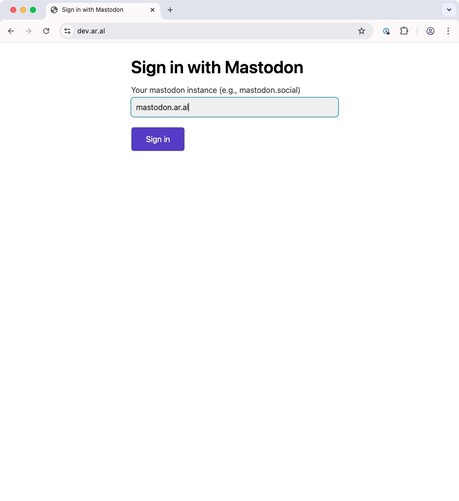

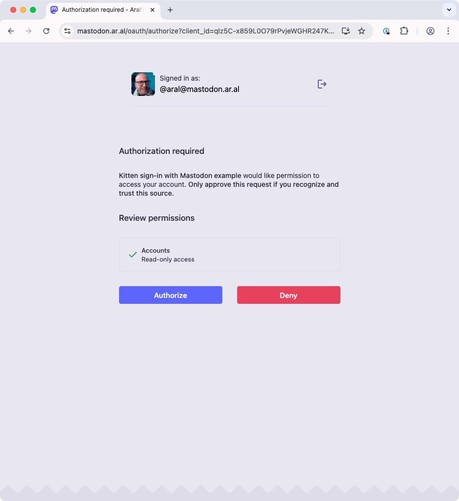

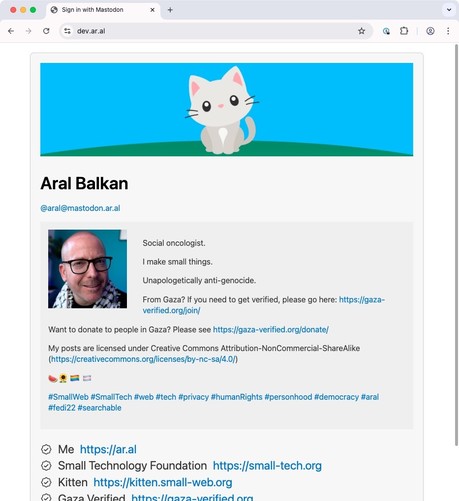

Just added a “Sign in with Mastodon” example to Kitten’s¹ examples:

https://codeberg.org/kitten/app/src/branch/main/examples/sign-in-with-mastodon

If I have time at some point, I might make it into a tutorial.

Enjoy!

:kitten:💕