2026-01-22 17:26:04

Inside the turmoil at Thinking Machines; sources say Meta discussed buying TML, and CTO Barret Zoph had been in talks since October 2025 about an OpenAI return (New York Times)

https://www.nytimes.com/2026/01/22/technology/thinking-machines-ai-startup-o…

2026-01-23 20:14:05

For #FootpathFriday I chose this one.

It's been a nice walk around this hill with the little church on top.

I'm missing this right now. White snow would be nice, or green grass. Yet we are currently stuck in the season in between.

But for such cases, we have photos. Photos to revive memories, to make us smile, to make us dream. Dream of the experience.

This …

2025-11-21 17:05:14

🇺🇦 #NowPlaying on KEXP's #MorningShow

Duran Duran:

🎵 The Wild Boys

#DuranDuran

https://studiomasters.bandcamp.com/track/duran-duran-the-wild-boys-remix-124-bpm

https://open.spotify.com/track/23M7cQkNJLiddeubvVgaQl

2026-01-23 18:42:03

from my link log —

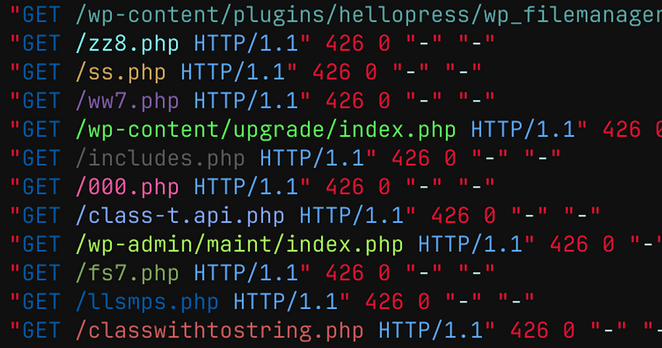

Selectively disabling HTTP/1.0 and HTTP/1.1

https://markmcb.com/web/selectively_disabling_http_1/

saved 2026-01-23 https://

2025-12-20 23:22:58

So in another dream I just woke up from, I was talking to someone about "the idea problem" (that it's becoming harder to monetize ideas, from a Vox article written by an AI-cooked reporter).

https://www.iheart.com/podcast/105-it-could-happen-here-30717896/episode/executive-disorder-white-house-weekly-46-313675864/

Basically, I was arguing that the majority of inventions target men because patriarchy puts economic control in men's hands. As men have started to help more with childcare, there have been more inventions related to childcare. (I don't have any idea if this is true. Seems legit, but I'm just relating my dream. I think I was also oversimplifying a bit to "men" and "women" because of my audience, but anyway it was a dream.) There's actually more low-hanging fruit, I pointed out, related to making care work easier.

So I argued that the real problem was a failure to invest in research into solving that problem. Today there are all these boondoggles built around killing people. What if, instead of all this government research into killing people, we dumped a ton of money into making it easier to support a household? That would be great for the economy. (Being asleep, I seem to have forgotten that working people need money.)

In the blur of being just awake I started thinking about how you could kickstart the US economy by taking the money from the AI boondoggle and other autonomous murder bots and creating something like a program to build robots for housekeepers. You'd still be funding tech with government money, so the same horrible people get paid, but you're now actually solving real problems. It wouldn't even matter if it was a boondoggle, honestly. Just dumping money into something other than murdering people is good enough.

First I imagined a program to fund a robot housecleaner, like a robot dog with some AI laundry pickup, that would be provided, free of charge, to help people with children. It would work the same as the military boondoggle where a private company makes the government buy a piece of hardware from them and then also pay them to service it for some number of years. But instead of that hardware sitting around waiting to kill someone, it would be getting brought to people's houses to help them.

Then I thought, hey, you could even boost the economy more if you just had government funding for doulas and housecleaners and paid them a living wage. Hey, you could really kickstart the economy by nationalizing healthcare and including doula support as part of all births. Oh, and you could also just include the optional household help for families with children until the kids turn 18.

None of this is perfect (I don't actually think most of this is possible from any state), but the point is that it's actually wildly easy to figure out all kinds of ways to invest in the economy and monetize ideas as long as you aren't entirely focused on the same old "make money from spying on people and killing them." Funny that. Like they said in the podcast, maybe "finding ideas" isn't the problem.

Hope you enjoyed the weird semi-awake brain dump/rant.

2025-12-19 13:02:42

So my car has been complaining I should put some exhaust emissions neutralizing fluid in it. A thing they call AdBlue I believe.

Bought a big 10l drum of the stuff ready to fill up.

But when I look at where I expect to find the hole for filling it, I just find a capped-off tube and a warning sticker "See the GM Citroën Berlingo Blaze manual" for the AdBlue refilling hole.

Only user manual I have is the one telling me to expect it there.

The car was converted for wheelchair access at some point in its life. I think they are referring to the wheelchair-adaption manual, which the seller did not give me.

🤔

Have been looking around the car as much as I can for a couple of hours this morning to no avail. Where have they hidden this hole to fill up AdBlue?

Maybe it's under the engine or something now and you have to put the thing on stilts to find it?

🤷

Asked my mechanic about it and he says to bring it in on Monday. Gonna be a pain if I have to rip up the floorboards or something every year to refill that.

#mechanic #car #diesel

2025-12-22 10:34:50

Regularized Random Fourier Features and Finite Element Reconstruction for Operator Learning in Sobolev Space

Xinyue Yu, Hayden Schaeffer

https://arxiv.org/abs/2512.17884 https://arxiv.org/pdf/2512.17884 https://arxiv.org/html/2512.17884

arXiv:2512.17884v1 Announce Type: new

Abstract: Operator learning is a data-driven approximation of mappings between infinite-dimensional function spaces, such as the solution operators of partial differential equations. Kernel-based operator learning can offer accurate, theoretically justified approximations that require less training than standard methods. However, they can become computationally prohibitive for large training sets and can be sensitive to noise. We propose a regularized random Fourier feature (RRFF) approach, coupled with a finite element reconstruction map (RRFF-FEM), for learning operators from noisy data. The method uses random features drawn from multivariate Student's $t$ distributions, together with frequency-weighted Tikhonov regularization that suppresses high-frequency noise. We establish high-probability bounds on the extreme singular values of the associated random feature matrix and show that when the number of features $N$ scales like $m \log m$ with the number of training samples $m$, the system is well-conditioned, which yields estimation and generalization guarantees. Detailed numerical experiments on benchmark PDE problems, including advection, Burgers', Darcy flow, Helmholtz, Navier-Stokes, and structural mechanics, demonstrate that RRFF and RRFF-FEM are robust to noise and achieve improved performance with reduced training time compared to the unregularized random feature model, while maintaining competitive accuracy relative to kernel and neural operator tests.
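To make the core idea in the abstract a bit more concrete, here is a minimal sketch of random Fourier features with a frequency-weighted Tikhonov (ridge) penalty, applied to plain scalar regression. It is not the authors' implementation: the cosine feature map, the penalty weight `(1 + |omega|)**power`, the degrees of freedom `df`, and all function names are assumptions chosen for illustration, and the finite element reconstruction step is left out entirely.

```python
import numpy as np


def student_t_frequencies(rng, n_features, dim, df=3.0, scale=1.0):
    """Draw frequencies from a multivariate Student's t distribution.

    Uses the standard construction: a Gaussian vector divided by
    sqrt(chi-square / df). df and scale are illustrative choices.
    """
    gauss = rng.standard_normal((n_features, dim))
    chi2 = rng.chisquare(df, size=(n_features, 1))
    return scale * gauss / np.sqrt(chi2 / df)


def feature_matrix(x, omega, phase):
    """Cosine random features: A[i, j] = cos(<x_i, omega_j> + phase_j)."""
    return np.cos(x @ omega.T + phase)


def fit_weighted_ridge(A, y, omega, lam=1e-3, power=1.0):
    """Solve min_c ||A c - y||^2 + lam * ||diag(w) c||^2.

    The weights w_j = (1 + |omega_j|)**power grow with frequency, so
    high-frequency coefficients are penalized more (assumed weight form).
    """
    w = (1.0 + np.linalg.norm(omega, axis=1)) ** power
    lhs = A.T @ A + lam * np.diag(w ** 2)
    return np.linalg.solve(lhs, A.T @ y)


def demo(seed=0, m=50, n_features=200, noise=0.1):
    """Fit a noisy sine curve and return the RMSE against the clean signal."""
    rng = np.random.default_rng(seed)
    x = np.linspace(0.0, 1.0, m)[:, None]
    clean = np.sin(2.0 * np.pi * x[:, 0])
    y = clean + noise * rng.standard_normal(m)

    omega = student_t_frequencies(rng, n_features, dim=1, scale=5.0)
    phase = rng.uniform(0.0, 2.0 * np.pi, size=n_features)
    A = feature_matrix(x, omega, phase)
    coeffs = fit_weighted_ridge(A, y, omega, lam=1e-2)
    return float(np.sqrt(np.mean((A @ coeffs - clean) ** 2)))
```

Calling `demo()` returns the error of the fit against the noise-free sine. Raising `lam` or `power` damps the high-frequency features more strongly, which is the mechanism the abstract credits for noise robustness.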

toXiv_bot_toot

2026-01-20 10:35:20

2026-01-15 21:27:10

Source: at least two more TML employees are expected to join OpenAI soon; some AI researchers say they are exhausted by the constant drama in their industry (Wired)

https://www.wired.com/story/inside-openai-raid-on-thinking-machines-lab/

2026-01-21 04:11:15

Barret Zoph says Thinking Machines Lab fired him only after learning he was leaving, and at no time did the company cite his performance or unethical conduct (Wall Street Journal)

https://www.wsj.com/tech/ai/the-messy-h…