2025-11-23 17:15:40

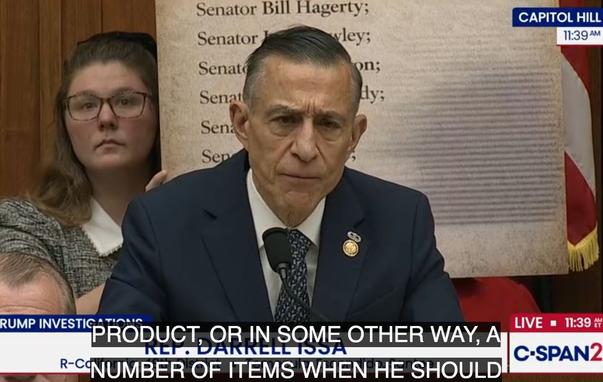

"Groceries," and Other Secrets of Managing Donald Trump (emptywheel)

https://www.emptywheel.net/2025/11/23/groceries-and-other-secrets-of-managing-donald-trump/

http://www.memeorandum.com/251123/p40#a251123p40

2026-01-23 17:22:34

"Empty Hands" is the latest from the surprising and multifaceted Poppy.

#Poppy

2026-01-21 12:21:05

Ukrainian-founded language learning marketplace Preply raised a $150M Series D led by WestCap at a $1.2B valuation; the startup has a 150-person office in Kyiv (Anna Heim/TechCrunch)

https://techcrunch.com/2026/01/21/langu…

2025-12-22 10:34:10

Exploiting ID-Text Complementarity via Ensembling for Sequential Recommendation

Liam Collins, Bhuvesh Kumar, Clark Mingxuan Ju, Tong Zhao, Donald Loveland, Leonardo Neves, Neil Shah

https://arxiv.org/abs/2512.17820 https://arxiv.org/pdf/2512.17820 https://arxiv.org/html/2512.17820

arXiv:2512.17820v1 Announce Type: new

Abstract: Modern Sequential Recommendation (SR) models commonly utilize modality features to represent items, motivated in large part by recent advancements in language and vision modeling. To do so, several works completely replace ID embeddings with modality embeddings, claiming that modality embeddings render ID embeddings unnecessary because they can match or even exceed ID embedding performance. On the other hand, many works jointly utilize ID and modality features, but posit that complex fusion strategies, such as multi-stage training and/or intricate alignment architectures, are necessary for this joint utilization. However, underlying both these lines of work is a lack of understanding of the complementarity of ID and modality features. In this work, we address this gap by studying the complementarity of ID- and text-based SR models. We show that these models do learn complementary signals, meaning that either should provide performance gain when used properly alongside the other. Motivated by this, we propose a new SR method that preserves ID-text complementarity through independent model training, then harnesses it through a simple ensembling strategy. Despite this method's simplicity, we show it outperforms several competitive SR baselines, implying that both ID and text features are necessary to achieve state-of-the-art SR performance but complex fusion architectures are not.

#toXiv_bot_toot

2026-01-22 22:51:08

He is NOT aging well. Get him a whole-body MRI to find the tumors. Or remove the tapeworm. Or the liver fluke. @… https://<…

2025-11-19 06:01:55

xAI's Grok 4.1 tops benchmarks in emotional intelligence, while its model card also shows a marked increase in sycophancy compared to Grok 4 (Christopher Ort/i10X)

https://i10x.ai/news/grok-4-1-eq-bench-empathy-sycophancy-paradox

2025-12-23 02:55:58

The Pentagon partners with xAI to embed the company's frontier AI systems, based on the Grok family of models, directly into GenAI.mil as soon as early 2026 (Bonny Chu/Fox News)

https://www.foxnews.com/politics/pentagon-