2026-01-05 15:05:08

»Rue instead of Rust. Just as safe, but more accessible:

The programming language Rue combines the advantages of Rust with a simpler syntax. The compiler for it is being developed by the AI model Claude.«

At first glance it looks almost like Rust. Let's see whether it catches on, since Rust has already (almost) established itself for many uses.

🧑‍💻

2026-02-04 12:20:50

2025-11-27 05:28:28

Geno Smith sends message to Raiders fans following middle finger incident https://www.sportingnews.com/us/nfl/las-vegas-raiders/news/geno-smith-sends-message-raiders-fans-following-middle-fin…

2025-12-02 14:00:34

Data regulators in Thailand said they are blocking the Sam Altman-founded company Tools for Humanity from collecting iris scans.

"The business model is based on the premise that it will become more difficult to tell humans apart from online AI avatars in the near future."

It sounds like we created a problem and then founded a business to solve it.

2026-01-30 08:28:26

JUST-DUB-IT: Video Dubbing via Joint Audio-Visual Diffusion

Anthony Chen, Naomi Ken Korem, Tavi Halperin, Matan Ben Yosef, Urska Jelercic, Ofir Bibi, Or Patashnik, Daniel Cohen-Or

https://arxiv.org/abs/2601.22143 https://arxiv.org/pdf/2601.22143 https://arxiv.org/html/2601.22143

arXiv:2601.22143v1 Announce Type: new

Abstract: Audio-Visual Foundation Models, which are pretrained to jointly generate sound and visual content, have recently shown an unprecedented ability to model multi-modal generation and editing, opening new opportunities for downstream tasks. Among these tasks, video dubbing could greatly benefit from such priors, yet most existing solutions still rely on complex, task-specific pipelines that struggle in real-world settings. In this work, we introduce a single-model approach that adapts a foundational audio-video diffusion model for video-to-video dubbing via a lightweight LoRA. The LoRA enables the model to condition on an input audio-video while jointly generating translated audio and synchronized facial motion. To train this LoRA, we leverage the generative model itself to synthesize paired multilingual videos of the same speaker. Specifically, we generate multilingual videos with language switches within a single clip, and then inpaint the face and audio in each half to match the language of the other half. By leveraging the rich generative prior of the audio-visual model, our approach preserves speaker identity and lip synchronization while remaining robust to complex motion and real-world dynamics. We demonstrate that our approach produces high-quality dubbed videos with improved visual fidelity, lip synchronization, and robustness compared to existing dubbing pipelines.
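The key ingredient in the abstract is a "lightweight LoRA" on top of a frozen audio-video diffusion model. As a generic illustration of low-rank adaptation (not the paper's code), here is a minimal pure-Python sketch assuming a single frozen linear layer y = Wx, to which a trainable update (alpha / r) · B(Ax) is added. The class name `LoRALinear` and the deterministic initialisation of `A` are hypothetical choices made for readability.

```python
# Minimal LoRA sketch in pure Python (no external libraries).
# Assumption: one frozen linear layer y = W x; LoRA adds a trainable
# low-rank update (alpha / rank) * B (A x). Names are illustrative only
# and are not taken from the JUST-DUB-IT paper.


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    def __init__(self, weight, rank, alpha):
        self.weight = weight  # frozen base weight, shape (d_out, d_in)
        d_out, d_in = len(weight), len(weight[0])
        self.rank = rank
        self.scale = alpha / rank
        # A: (rank, d_in). A deterministic stand-in for the usual small
        # random initialisation, so results are reproducible.
        self.A = [[0.01 * (i + j + 1) for j in range(d_in)] for i in range(rank)]
        # B: (d_out, rank), zero-initialised so the adapted layer starts
        # out identical to the frozen base layer.
        self.B = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.weight, x)                # frozen path
        low_rank = matvec(self.B, matvec(self.A, x))  # trainable path
        return [b + self.scale * l for b, l in zip(base, low_rank)]

    def trainable_params(self):
        # Only A and B are trained: rank * (d_in + d_out) values.
        return self.rank * (len(self.A[0]) + len(self.B))


layer = LoRALinear([[1.0, 0.0], [0.0, 1.0]], rank=1, alpha=1.0)
print(layer.forward([3.0, 4.0]))  # identical to the base layer while B is zero
```

Because `B` starts at zero, the adapted layer initially reproduces the base model, and only rank × (d_in + d_out) parameters are trained. For a 1024 × 1024 layer at rank 8 that is 16,384 values instead of about a million, which is what makes the adapter "lightweight".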

toXiv_bot_toot

2025-12-30 13:37:17

Series B, Episode 02 - Shadow

CALLY: I want you back here. Get ready to teleport.

VILA: Wasting your time, Cally. I'm not wearing a bracelet. I'm not going to be snatched away in the middle of...in the middle of anything. Sightseeing. And you should see some of the sights I'm seeing. No. Perhaps you shouldn't.

https://

2025-12-17 11:50:37

Source: Tencent tells staff that Yao Shunyu, an ex-OpenAI researcher who joined in September, is now its chief AI scientist, reporting to President Martin Lau (Juro Osawa/The Information)

https://www.theinformation.com/briefings/tencent-name…

2025-12-04 07:48:09

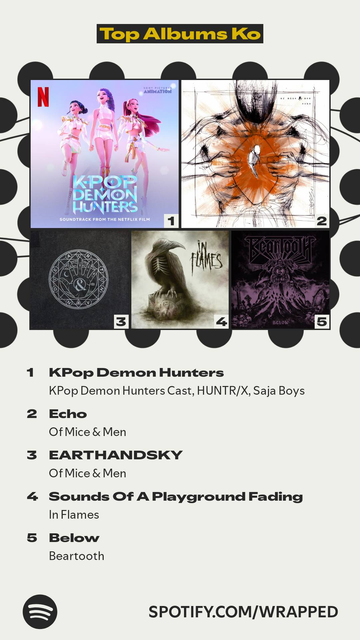

when you're a metalhead but you shared your account with your gf

#kpop #kpopdemonhunters #metal #metalcore

@axbom@axbom.me

2026-01-28 22:12:28

Me: What's my dosage today?

LLM: Today is Tuesday, so your dosage is 2 pills.

Me: Wh…

2026-02-02 08:41:09

Learning to Build Shapes by Extrusion

Thor Vestergaard Christiansen, Karran Pandey, Alba Reinders, Karan Singh, Morten Rieger Hannemose, J. Andreas Bærentzen

https://arxiv.org/abs/2601.22858 https://arxiv.org/pdf/2601.22858 https://arxiv.org/html/2601.22858

arXiv:2601.22858v1 Announce Type: new

Abstract: We introduce Text Encoded Extrusion (TEE), a text-based representation that expresses mesh construction as sequences of face extrusions rather than polygon lists, and a method for generating 3D meshes from TEE using a large language model (LLM). By learning extrusion sequences that assemble a mesh, similar to the way artists create meshes, our approach naturally supports arbitrary output face counts and produces manifold meshes by design, in contrast to recent transformer-based models. The learnt extrusion sequences can also be applied to existing meshes, enabling editing in addition to generation. To train our model, we decompose a library of quadrilateral meshes with non-self-intersecting face loops into constituent loops, which can be viewed as their building blocks, and finetune an LLM on the steps for reassembling the meshes by performing a sequence of extrusions. We demonstrate that our representation enables reconstruction, novel shape synthesis, and the addition of new features to existing meshes.
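The abstract claims that building a mesh from extrusions yields manifold meshes by design. A rough illustration of why: extruding a face of a closed quad mesh replaces it with a cap plus one side wall per edge, so every edge stays shared by exactly two faces. Below is a minimal pure-Python sketch of a single-face extrusion. It is a simplification under stated assumptions: the paper extrudes face loops and uses a text encoding (TEE) whose format is not given here, and the helpers `extrude_face` and `count_edges` are hypothetical.

```python
# Minimal sketch: extrude one polygon face of a closed mesh along its normal.
# Assumptions (not from the paper): vertices are (x, y, z) tuples, faces are
# tuples of vertex indices wound counter-clockwise when seen from outside.


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def face_normal(vertices, face):
    """Unit normal from the first three vertices of a planar face."""
    p0, p1, p2 = (vertices[i] for i in face[:3])
    n = cross(sub(p1, p0), sub(p2, p0))
    length = sum(c * c for c in n) ** 0.5
    return tuple(c / length for c in n)


def extrude_face(vertices, faces, face_index, distance):
    """Push one face out by `distance`, adding a cap and side walls."""
    face = faces[face_index]
    n = face_normal(vertices, face)
    new_vertices = list(vertices)
    top = []
    for i in face:  # one offset copy of each face vertex
        new_vertices.append(tuple(p + distance * c for p, c in zip(vertices[i], n)))
        top.append(len(new_vertices) - 1)
    new_faces = [f for k, f in enumerate(faces) if k != face_index]
    k = len(face)
    for j in range(k):
        a, b = face[j], face[(j + 1) % k]
        ta, tb = top[j], top[(j + 1) % k]
        new_faces.append((a, b, tb, ta))  # side wall keeps the a->b winding
    new_faces.append(tuple(top))          # cap replaces the original face
    return new_vertices, new_faces


def count_edges(faces):
    """Number of distinct undirected edges in a face list."""
    edges = set()
    for f in faces:
        for j in range(len(f)):
            edges.add(frozenset((f[j], f[(j + 1) % len(f)])))
    return len(edges)
```

Extruding the top face of a unit cube (8 vertices, 12 edges, 6 faces) by 0.5 should give 12 vertices, 20 edges and 10 faces, so the Euler characteristic V - E + F stays 2 and every directed edge is matched by its reverse, i.e. the result is still a closed, consistently oriented manifold.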
