2026-01-04 00:04:43

I share many of these feelings. But unfortunately some loved ones have been sucked into the Trump Cult. It is a Cult. And when a loved one is sucked into a cult, you do need to try to get them out.

But if they won't leave after you've shown them every angle of how they are being lied to and brainwashed, and they still want to follow the cult and support fascism, racism, misogyny and war crimes, then they can no longer be loved ones. And maybe they need to be punched, since they have become Nazis (though I’m not a very good puncher) https://todon.eu/@PaulDitz/115832636818580869

2026-02-04 17:20:17

I mean, spam sucks obviously, but occasionally one leaks through the filters that makes me smile.

There’s a URL in the signature which I whimsically followed; the headline there is: “The place where you can build and buy a whole qubit.”

#xml

2026-02-03 23:26:54

A fun tactic of extremists is to argue that they don't hate out-groups, they just blah blah blah.

The pinnacle of this was the antebellum argument that enslaved people were happier and healthier enslaved, and that enslavers were motivated by their charitable natures.

It was BS then, and it's BS now.

https://<…

2025-12-05 16:33:51

Degrowth cabaret comes to London (and other news)

Degrowth Cabaret! LONDON FRI 23 JANUARY 10.30am – 4.30pm Siobhan Davies Studios If you are an economist interested in performance, a performer interested in economics or simply curious about how these seemingly distinct languages can be bridged, then this session is for you! But don’t worry, you won’t need prior knowledge of economics or the arts! Joaquín Pereira (economist and dancer) invites you to a day-long lab where…

2025-12-05 13:08:25

Series D, Episode 02 - Power

PELLA: I shall. You follow. [exits]

[Gunn Sar's room. He is lying on a table while Nina massages him.]

CATO: [Enters] Sir, we have the intruder. His equipment and gun. [Hands them to Gunn Sar.]

https://blake.torpidity.net/m/402/56 B7B3

2025-12-31 13:44:29

Had Fun

Bought a car/micro-camper

Bought a van to do up as a micro-camper, and did a temporary rush job of that conversion myself while waiting on the list for the pro to do it.

Then the pro gave himself a health crisis the week it was booked, so I took apart my temp job and only got another temp kit-job in its place.

Went out in it like four times during that and then broke my wrist and couldn't really use it or improve it.

Then I had to take it apart even more to try and figure out where the AdBlue hole was.

In the spring next year, I will do a proper permanent job of the floor, walls and ceiling, and make adjustments to the kit-job to make it the nicest it's been so far.

My assumption that the prior conversion into a van and for wheelchair access meant the micro-camper conversion was already half-done turned out to be false.

If I buy a new one, it'll be one that has never been wheelchair adapted.

But it's going okay. Only scraped it once so far.

Fewer than aimed for or booked, but I broke my wrist and had to cancel the second half of the summer.

Went to a conference about money and computers and fringe decentralized social media and it wasn't as boring as you might expect and felt pretty much like a festival.

Exactly the target number! It's lovely.

Took 3 times longer than I'd hoped and 50% more money than I'd planned for, really.

Still improvements to make, but they will be incremental and gradual over the coming year or two now.

It's been interest-only for 20 years, so it was a big old lump-sum payment that I never really expected to be able to make. I expected to have to sell and move at the end of the mortgage term.

But surprisingly the stocks ISA got high enough to pay it off after all, so I did that.

Cash flow is ruined by that and the bedroom, but I should start to feel a bit richer next year.

2026-01-05 20:02:11

2025-11-27 09:39:16

Real conspiracies tend to come out, but some of them take a while. Information on the Iran/Contra scandal came out about 5 years after the conspiracy started. That took several hundred people to carry out, so it was somewhat hard to hide. Even so, they largely got away with it.

The moon landing conspiracy theory would have taken thousands of people, so it would have come out more quickly. Since we have an example of a real secret program on a scale similar to what would be required to fake a moon landing (that is, the Manhattan Project), and it did not stay secret for long, we know that the fake moon landing conspiracy theory is not true. (There's also the literally tons of evidence in the form of rocks and other samples, and all kinds of other ways to debunk the claim.)

Could Kash Patel's FBI have been trying really hard to entrap people into carrying out terrorist attacks in order to justify #Trump's occupation of DC? Could they have helped a guy plan an attack then just failed to arrest him? There are reasonable scenarios that fall in between malice and incompetence while still indicating some level of false flag.

Could someone have just snapped and ambushed some guardsmen without any involvement from the FBI? Yeah, totally. The US is a country full of guns with a completely non-functional mental health system. Someone coming from a country that the US destroyed, twice, could have a lot of untreated trauma. Might they see the National Guard as a threat (even if that wasn't totally true)? Yeah, they were deployed to threaten people (even when they were just picking up trash). The point was to incite this kind of response. It's completely reasonable to believe that the FBI would not need to be involved at all, that this would just be the stochastic response they were looking for.

So the point here is that everything is on the table, nothing is really known, nothing should be surprising, and no matter what it's Trump's fault. This is exactly the escalation he was looking for. If he didn't get it naturally, he would also have had ways of making it happen.

He will use this in exactly the same way as the Reichstag fire, to drive a wedge between liberals and radicals. Don't fall for it.

Edit:

There are plausible reasons to not believe the official narrative at all right now, or maybe ever. The official narrative is also plausible, but there are plausible reasons to disagree with the response even if the official story is true. It is unnecessary to resort to conspiracy thinking in order to account for what happened and to disagree with the response. But it is also understandable why someone might jump immediately to a conspiracy given the circumstances.

2026-01-25 14:30:07

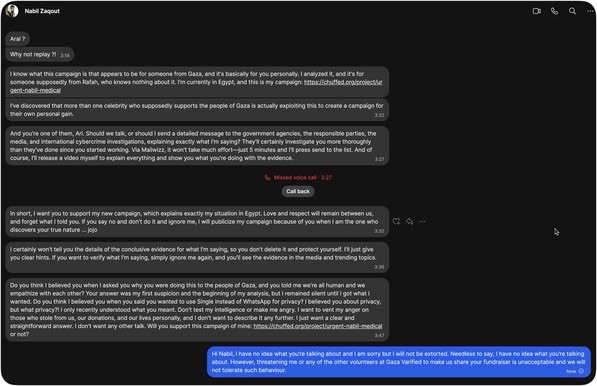

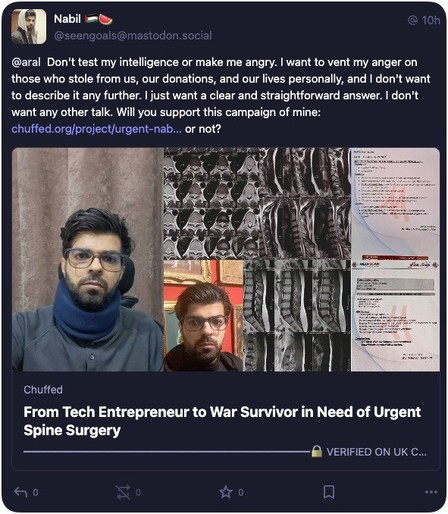

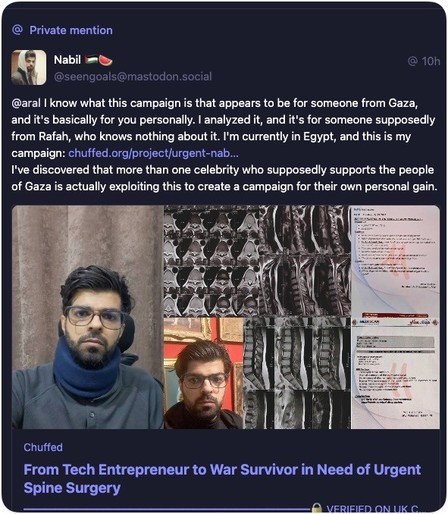

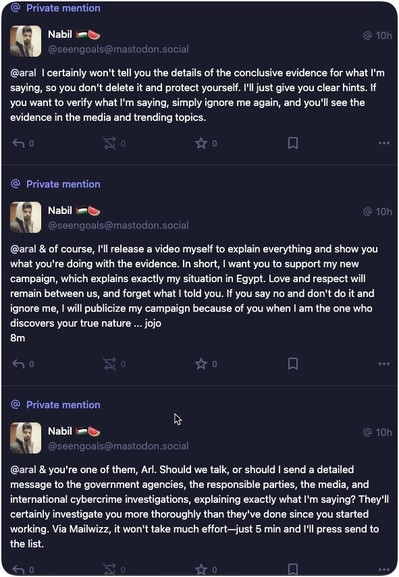

Just got these messages from @seengoals@mastodon.social, one of the members of Gaza Verified, basically accusing me of running a fundraiser for Gaza and keeping the proceeds (I have no fundraiser on any fundraising site anywhere) and trying to extort me into sharing his fundraiser, or else he’ll apparently go public with it.

So here’s what’s going to happen instead: Nabil has been removed from Gaza Verified (

2025-11-20 22:27:26

After #Trump finally crashes and burns (I'm still saying I don't think he makes it to the midterms, and I think it's more than possible he won't make it to the end of the year) we'll hear a lot of people say, "the system worked!" Today people are already talking about "saving democracy" by fighting back. This will become a big rallying cry to vote (for Democrats, specifically), and the complete failure of the system will be held up as the best evidence for even greater investment in it.

I just want to point out that American democracy gave nuclear weapons to a pedophile who, before being elected, was already a well-known sexual predator, and who made the campaign promise to commit genocide. He then proceeded to commit genocide. And like, I don't care that he's "only" kidnapped and disappeared a few thousand brown people. That's still genocide. Even if you don't kill every member of a targeted group, any attempt to do so is still "committing genocide." Trump said he would commit genocide, then he hired all the "let's go do a race war" guys he could find and *paid* them to go do a race war. And, even now as this deranged monster is crashing out, he is still authorized to use the world's largest nuclear arsenal.

He committed genocide during his first term when his administration separated migrant parents and children, then adopted those children out to other parents. That's technically genocide. The point was to destroy the very people he's been sending right-wing terror squads after.

There was a peaceful handover of power to a known Russian asset *twice*, and the second time he'd already committed *at least one* act of genocide *and* destroyed cultural heritage sites (oh yeah, he also destroyed indigenous grave sites, in case you forgot, during his first term).

All of this was allowed because the system is set up to protect exactly these types of people, because *exactly* these types of people are *the entire power structure*.

Going back to that system means going back to exactly the system that gave nuclear weapons to a pedophile *TWICE*.

I'm already seeing the attempts to pull people back, the congratulations as we enter the final phase, the belief that getting Trump out will let us all get back to normal. Normal. The normal that led here in the first place. I can already see the brunch reservations being made. When Trump is over, we will be told we won. We will be told that it's time to go back to sleep.

When they tell you everything worked, everything is better, that we can stop because we won, tell them "fuck you! Never again means never again." Destroy every system that ever gave these people power, that ever protected them from consequences, that ever let them hide what they were doing.

These Democrats funded a genocide abroad and laid the groundwork for genocide at home. They protected these predators, for years. The whole power structure is guilty. As these files implicate so many powerful people, they're trying to shove everything back in the box. After all the suffering, after we've finally made it clear that we are the ones with the power, only now they're willing to sacrifice Trump to calm us all down.

No, that's a good start but it can't be the end.

Winning can't be enough to quench that rage. Keep it burning. When this is over, let victory fan that anger until every institution that made this possible lies in ashes. Burn it all down and salt the earth. Taking down Trump is a great start, but it's not time to give up until this isn't possible again.

#USPol