2025-10-13 06:57:46

Day 19 (a bit late): Alice Oseman

As I said, I've got 14 authors to fit into two days. Probably just going to extend to 30? But Oseman gets this spot as an absolute legend of queer fiction in both novel & graphic novel form, and an excellent example of the many truths queer writers have to share with non-queer people that can make everyone's lives better. Her writing is very kind, despite often dealing with some dark stuff.

I started out on Heartstopper, which is just so lovely and fun to read, and then made my way through several of her novels. The one I'll highlight here, which I think is her greatest triumph, is "Loveless", which is semi-autobiographical and was my first (but no longer only) experience with the "platonic romance" sub-genre. It not only helped me work through some crufty internal doubts about aro/ace identities that I'd never really examined, but in the process helped improve my understanding of friendship, period. Heck, it's probably a nice novel for anyone questioning any sort of identity or dealing with loneliness, and it's just super-enjoyable as a story regardless of the philosophical value.

To cheat a bit more here on my author count, I recently read "Dear Wendy" by Ann Zhao, which shouts out "Loveless" and offers a more expository exploration of aro/ace identities. But "Loveless" is a book with more heart and better writing overall, including neat plotting and great pacing. I think there are also parallels with Becky Albertalli's work, though I think I like Oseman slightly more. Certainly both excel at writing queer romance (and romance-adjacent) stuff with happy endings (#OwnVoices wins again with all three authors).

In any case, Oseman is excellent, and if you're not up for reading a novel, Heartstopper is a graphic novel series that's easy to jump into and very kind to its adorable main characters.

I think I've now decided to continue to 30, which is a relief, so I'm tagging this (and the next post that rounds out 20) two ways.

#20AuthorsNoMen

#30AuthorsNoMen

2025-10-09 07:42:40

Crossing Domains without Labels: Distant Supervision for Term Extraction

Elena Senger, Yuri Campbell, Rob van der Goot, Barbara Plank

https://arxiv.org/abs/2510.06838 https://…

2025-11-05 09:21:57

#DrumpfAdmin #FAA Blame #Democrats As They Announce Intention Of Taking Some Air Travel Routes Off Limits Temporarily Due To Overworked & Unpaid Air Traffic Controller Shortages ...

2025-11-24 00:44:21

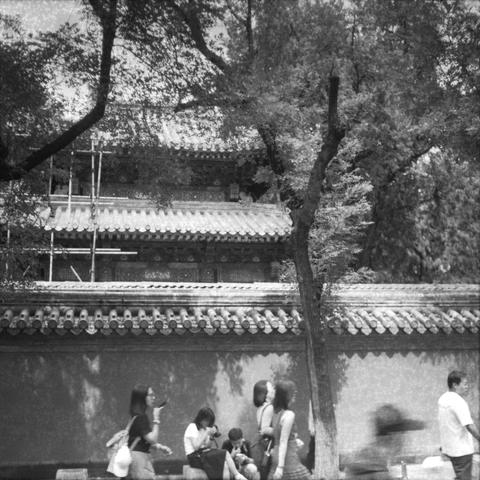

Moody Urbanity - Past II 📴

情绪化城市 - 过去 II 📴

📷 Zeiss Ikon Super Ikonta 533/16

🎞️ Ilford HP5, expired 1993

#filmphotography #Photography #blackandwhite

2025-09-23 12:48:40

Intra-Cluster Mixup: An Effective Data Augmentation Technique for Complementary-Label Learning

Tan-Ha Mai, Hsuan-Tien Lin

https://arxiv.org/abs/2509.17971 https://

2025-09-20 16:31:13

Mass killings

Was looking through Wikipedia's list of mass killings in America (#guns #GunViolence #Shooting

2025-09-19 10:15:51

Pseudo-Label Enhanced Cascaded Framework: 2nd Technical Report for LSVOS 2025 VOS Track

An Yan, Leilei Cao, Feng Lu, Ran Hong, Youhai Jiang, Fengjie Zhu

https://arxiv.org/abs/2509.14901

2025-10-02 09:47:01

Anisotropic linear magnetoresistance in Dirac semimetal NiTe2 nanoflakes

Ding Bang Zhou, Kuang Hong Gao, Tie Lin, Yang Yang, Meng Fan Zhao, Zhi Yan Jia, Xiao Xia Hu, Qian Jin Guo, Zhi Qing Li

https://arxiv.org/abs/2510.00940