UK AI Security Institute report: AI models are rapidly improving at potentially dangerous biological and chemical tasks, and show fast jumps in self-replication (Shakeel Hashim/Transformer)

https://www.transformernews.ai/p/aisi-ai-s

Credal Transformer: A Principled Approach for Quantifying and Mitigating Hallucinations in Large Language Models

Shihao Ji, Zihui Song, Jiajie Huang

https://arxiv.org/abs/2510.12137

Hybrid Explanation-Guided Learning for Transformer-Based Chest X-Ray Diagnosis

Shelley Zixin Shu, Haozhe Luo, Alexander Poellinger, Mauricio Reyes

https://arxiv.org/abs/2510.12704

GrifFinNet: A Graph-Relation Integrated Transformer for Financial Predictions

Chenlanhui Dai, Wenyan Wang, Yusi Fan, Yueying Wang, Lan Huang, Kewei Li, Fengfeng Zhou

https://arxiv.org/abs/2510.10387

Nvidia launches Nemotron 3, a family of AI models using a hybrid mixture-of-experts architecture and the Mamba-Transformer design, in 30B, 100B, and ~500B sizes (Emilia David/VentureBeat)

https://venturebeat.com/ai/nvidia-debuts-nemotron-3-w…

Efficient Autoregressive Inference for Transformer Probabilistic Models

Conor Hassan, Nasrulloh Loka, Cen-You Li, Daolang Huang, Paul E. Chang, Yang Yang, Francesco Silvestrin, Samuel Kaski, Luigi Acerbi

https://arxiv.org/abs/2510.09477

"Für jede Trafostation eine LEG" [‘A local energy community for every transformer station’]

It is fascinating how local energy communities are encouraged in Switzerland (so not governed by EU law, that also creates that role), and how the (local) utilities are also actively involved in this.

https://www.

Crosslisted article(s) found for cs.AI. https://arxiv.org/list/cs.AI/new

[6/6]:

- Hybrid Explanation-Guided Learning for Transformer-Based Chest X-Ray Diagnosis

Shelley Zixin Shu, Haozhe Luo, Alexander Poellinger, Mauricio Reyes

ILD-VIT: A Unified Vision Transformer Architecture for Detection of Interstitial Lung Disease from Respiratory Sounds

Soubhagya Ranjan Hota, Arka Roy, Udit Satija

https://arxiv.org/abs/2510.11458

VER: Vision Expert Transformer for Robot Learning via Foundation Distillation and Dynamic Routing

Yixiao Wang, Mingxiao Huo, Zhixuan Liang, Yushi Du, Lingfeng Sun, Haotian Lin, Jinghuan Shang, Chensheng Peng, Mohit Bansal, Mingyu Ding, Masayoshi Tomizuka

https://arxiv.org/abs/2510.05213

Latent Representation Learning in Heavy-Ion Collisions with MaskPoint Transformer

Jing-Zong Zhang, Shuang Guo, Li-Lin Zhu, Lingxiao Wang, Guo-Liang Ma

https://arxiv.org/abs/2510.06691

A Scalable AI Driven, IoT Integrated Cognitive Digital Twin for Multi-Modal Neuro-Oncological Prognostics and Tumor Kinetics Prediction using Enhanced Vision Transformer and XAI

Saptarshi Banerjee, Himadri Nath Saha, Utsho Banerjee, Rajarshi Karmakar, Jon Turdiev

https://arxiv.org/abs/2510.05123

LadderSym: A Multimodal Interleaved Transformer for Music Practice Error Detection

Benjamin Shiue-Hal Chou, Purvish Jajal, Nick John Eliopoulos, James C. Davis, George K. Thiruvathukal, Kristen Yeon-Ji Yun, Yung-Hsiang Lu

https://arxiv.org/abs/2510.08580

Locality-Sensitive Hashing-Based Efficient Point Transformer for Charged Particle Reconstruction

Shitij Govil, Jack P. Rodgers, Yuan-Tang Chou, Siqi Miao, Amit Saha, Advaith Anand, Kilian Lieret, Gage DeZoort, Mia Liu, Javier Duarte, Pan Li, Shih-Chieh Hsu

https://arxiv.org/abs/2510.07594

LRQ-Solver: A Transformer-Based Neural Operator for Fast and Accurate Solving of Large-scale 3D PDEs

Peijian Zeng, Guan Wang, Haohao Gu, Xiaoguang Hu, TiezhuGao, Zhuowei Wang, Aimin Yang, Xiaoyu Song

https://arxiv.org/abs/2510.11636

Interplay Between Belief Propagation and Transformer: Differential-Attention Message Passing Transformer

Chin Wa Lau, Xiang Shi, Ziyan Zheng, Haiwen Cao, Nian Guo

https://arxiv.org/abs/2509.15637

Sources: the NY governor proposes a rewrite of the RAISE Act, the AI bill that recently passed NY legislature, with text copied verbatim from California's SB 53 (Shakeel Hashim/Transformer)

https://www.transformernews.ai/p/new-york-governor-hochul-raise-act-sb-5…

ENLighten: Lighten the Transformer, Enable Efficient Optical Acceleration

Hanqing Zhu, Zhican Zhou, Shupeng Ning, Xuhao Wu, Ray Chen, Yating Wan, David Pan

https://arxiv.org/abs/2510.01673

DiTSinger: Scaling Singing Voice Synthesis with Diffusion Transformer and Implicit Alignment

Zongcai Du, Guilin Deng, Xiaofeng Guo, Xin Gao, Linke Li, Kaichang Cheng, Fubo Han, Siyu Yang, Peng Liu, Pan Zhong, Qiang Fu

https://arxiv.org/abs/2510.09016

Crosslisted article(s) found for eess.SP. https://arxiv.org/list/eess.SP/new

[1/1]:

- Soft Graph Transformer for MIMO Detection

Jiadong Hong, Lei Liu, Xinyu Bian, Wenjie Wang, Zhaoyang Zhang

Dual-Path Phishing Detection: Integrating Transformer-Based NLP with Structural URL Analysis

Ibrahim Altan, Abdulla Bachir, Yousuf Parbhulkar, Abdul Muksith Rizvi, Moshiur Farazi

https://arxiv.org/abs/2509.20972

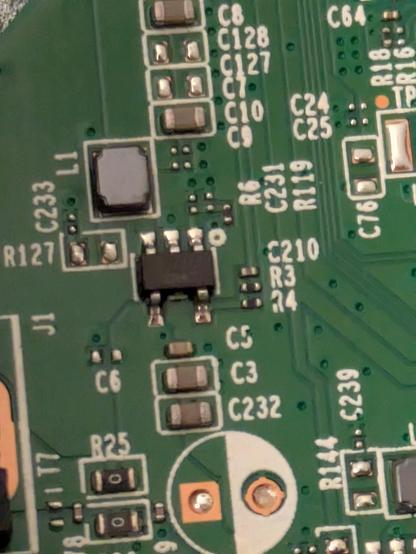

Pretty sure the power supply in this switch is fried. Possibly a short in the coil/transformer next to the hot power regulator.

$8 to replace the entire unit, delivered.

Comparing Symmetrized Determinant Neural Quantum States for the Hubbard Model

Louis Sharma, Ahmedeo Shokry, Rajah Nutakki, Olivier Simard, Michel Ferrero, Filippo Vicentini

https://arxiv.org/abs/2510.11710

A Comparative Analysis of Contextual Representation Flow in State-Space and Transformer Architectures

Nhat M. Hoang, Do Xuan Long, Cong-Duy Nguyen, Min-Yen Kan, Luu Anh Tuan

https://arxiv.org/abs/2510.06640

From Embeddings to Equations: Genetic-Programming Surrogates for Interpretable Transformer Classification

Mohammad Sadegh Khorshidi, Navid Yazdanjue, Hassan Gharoun, Mohammad Reza Nikoo, Fang Chen, Amir H. Gandomi

https://arxiv.org/abs/2509.21341

From Embeddings to Equations: Genetic-Programming Surrogates for Interpretable Transformer Classification

We study symbolic surrogate modeling of frozen Transformer embeddings to obtain compact, auditable classifiers with calibrated probabilities. For five benchmarks (SST2G, 20NG, MNIST, CIFAR10, MSC17), embeddings from ModernBERT, DINOv2, and SigLIP are partitioned on the training set into disjoint, information-preserving views via semantic-preserving feature partitioning (SPFP). A cooperative multi-population genetic program (MEGP) then learns additive, closed-form logit programs over these views…

Transformer Classification of Breast Lesions: The BreastDCEDL_AMBL Benchmark Dataset and 0.92 AUC Baseline

Naomi Fridman (Ariel University), Anat Goldstein (Ariel University)

https://arxiv.org/abs/2509.26440

How Pathway, a startup developing an alternative to the transformer, aims to use its Dragon Hatchling architecture to create a new class of adaptive AI systems (Steven Rosenbush/Wall Street Journal)

https://www.wsj.com/articles/an-ai…

PyramidStyler: Transformer-Based Neural Style Transfer with Pyramidal Positional Encoding and Reinforcement Learning

Raahul Krishna Durairaju (California State University, Fullerton), K. Saruladha (Puducherry Technological University)

https://arxiv.org/abs/2510.01715

This is so much "AI" reporting: Claims about potentials and/or threads. I'd just like to have grown-up conversations about tech again :(

"The actual current user base for evil chatbots is the cyber security vendors, who scaremonger how only their good AI can possibly stop this automated hacker evil!"

(Original title: AI for evil — hacked by WormGPT!)

CosmoUiT: A Vision Transformer-UNet Hybrid for Fast and Accurate Emulation of 21-cm Maps from the Epoch of Reionization

Prasad Rajesh Posture, Yashrajsinh Mahida, Suman Majumdar, Leon Noble

https://arxiv.org/abs/2510.01121

cAItomorph: Transformer-Based Hematological Malignancy Prediction from Peripheral Blood Smears in a Real-Word Cohort

Muhammed Furkan Dasdelen, Ivan Kukuljan, Peter Lienemann, Ario Sadafi, Matthias Hehr, Karsten Spiekermann, Christian Pohlkamp, Carsten Marr

https://arxiv.org/abs/2509.20402

#OTD 2013: A time when Tesla still meant progress. One of the first Tesla Superchargers in Europe, in Zevenaar 🇳🇱. Until then, #EV fast chargers were often single devices, but this was one of the first hubs.

Crosslisted article(s) found for cs.IT. https://arxiv.org/list/cs.IT/new

[1/1]:

- Soft Graph Transformer for MIMO Detection

Jiadong Hong, Lei Liu, Xinyu Bian, Wenjie Wang, Zhaoyang Zhang

Lightweight Transformer for EEG Classification via Balanced Signed Graph Algorithm Unrolling

Junyi Yao, Parham Eftekhar, Gene Cheung, Xujin Chris Liu, Yao Wang, Wei Hu

https://arxiv.org/abs/2510.03027 …

MAST: Multi-Agent Spatial Transformer for Learning to Collaborate

Damian Owerko, Frederic Vatnsdal, Saurav Agarwal, Vijay Kumar, Alejandro Ribeiro

https://arxiv.org/abs/2509.17195

New Machine Learning Approaches for Intrusion Detection in ADS-B

Mika\"ela Ngambo\'e, Jean-Simon Marrocco, Jean-Yves Ouattara, Jos\'e M. Fernandez, Gabriela Nicolescu

https://arxiv.org/abs/2510.08333

Deconstructing Attention: Investigating Design Principles for Effective Language Modeling

Huiyin Xue, Nafise Sadat Moosavi, Nikolaos Aletras

https://arxiv.org/abs/2510.11602 htt…

Replaced article(s) found for cs.CV. https://arxiv.org/list/cs.CV/new

[5/8]:

- Context Guided Transformer Entropy Modeling for Video Compression

Junlong Tong, Wei Zhang, Yaohui Jin, Xiaoyu Shen

Replaced article(s) found for cs.LG. https://arxiv.org/list/cs.LG/new

[9/14]:

- Hyper-STTN: Hypergraph Augmented Spatial-Temporal Transformer Network for Trajectory Prediction

Weizheng Wang, Baijian Yang, Sungeun Hong, Wenhai Sun, Byung-Cheol Min

Crosslisted article(s) found for eess.IV. https://arxiv.org/list/eess.IV/new

[1/1]:

- 3D Reconstruction from Transient Measurements with Time-Resolved Transformer

Yue Li, Shida Sun, Yu Hong, Feihu Xu, Zhiwei Xiong

Replaced article(s) found for cs.AI. https://arxiv.org/list/cs.AI/new

[2/9]:

- Spatial-Functional awareness Transformer-based graph archetype contrastive learning for Decoding ...

Yueming Sun, Long Yang

IIET: Efficient Numerical Transformer via Implicit Iterative Euler Method

Xinyu Liu, Bei Li, Jiahao Liu, Junhao Ruan, Kechen Jiao, Hongyin Tang, Jingang Wang, Xiao Tong, Jingbo Zhu

https://arxiv.org/abs/2509.22463

Towards fairer public transit: Real-time tensor-based multimodal fare evasion and fraud detection

Peter Wauyo, Dalia Bwiza, Alain Murara, Edwin Mugume, Eric Umuhoza

https://arxiv.org/abs/2510.02165

TrackFormers Part 2: Enhanced Transformer-Based Models for High-Energy Physics Track Reconstruction

Sascha Caron, Nadezhda Dobreva, Maarten Kimpel, Uraz Odyurt, Slav Pshenov, Roberto Ruiz de Austri Bazan, Eugene Shalugin, Zef Wolffs, Yue Zhao

https://arxiv.org/abs/2509.26411

Accent-Invariant Automatic Speech Recognition via Saliency-Driven Spectrogram Masking

Mohammad Hossein Sameti, Sepehr Harfi Moridani, Ali Zarean, Hossein Sameti

https://arxiv.org/abs/2510.09528

Transformer-Based Rate Prediction for Multi-Band Cellular Handsets

Ruibin Chen, Haozhe Lei, Hao Guo, Marco Mezzavilla, Hitesh Poddar, Tomoki Yoshimura, Sundeep Rangan

https://arxiv.org/abs/2509.25722

Google debuts Titans, an architecture combining RNN speed with transformer performance for real-time learning, able to scale effectively to a 2M context window (Google Research)

https://research.google/blog/titans-miras-helping-ai-have-long-term-memory/

DiT-VTON: Diffusion Transformer Framework for Unified Multi-Category Virtual Try-On and Virtual Try-All with Integrated Image Editing

Qi Li, Shuwen Qiu, Julien Han, Xingzi Xu, Mehmet Saygin Seyfioglu, Kee Kiat Koo, Karim Bouyarmane

https://arxiv.org/abs/2510.04797

AWARE, Beyond Sentence Boundaries: A Contextual Transformer Framework for Identifying Cultural Capital in STEM Narratives

Khalid Mehtab Khan, Anagha Kulkarni

https://arxiv.org/abs/2510.04983

GastroViT: A Vision Transformer Based Ensemble Learning Approach for Gastrointestinal Disease Classification with Grad CAM & SHAP Visualization

Sumaiya Tabassum, Md. Faysal Ahamed, Hafsa Binte Kibria, Md. Nahiduzzaman, Julfikar Haider, Muhammad E. H. Chowdhury, Mohammad Tariqul Islam

https://arxiv.org/abs/2509.26502

The Potential of Second-Order Optimization for LLMs: A Study with Full Gauss-Newton

Natalie Abreu, Nikhil Vyas, Sham Kakade, Depen Morwani

https://arxiv.org/abs/2510.09378 https…

Replaced article(s) found for cs.AI. https://arxiv.org/list/cs.AI/new

[6/13]:

- LIAM: Multimodal Transformer for Language Instructions, Images, Actions and Semantic Maps

Yihao Wang, Raphael Memmesheimer, Sven Behnke

Domain-Adapted Pre-trained Language Models for Implicit Information Extraction in Crash Narratives

Xixi Wang, Jordanka Kovaceva, Miguel Costa, Shuai Wang, Francisco Camara Pereira, Robert Thomson

https://arxiv.org/abs/2510.09434

HRTFformer: A Spatially-Aware Transformer for Personalized HRTF Upsampling in Immersive Audio Rendering

Xuyi Hu, Jian Li, Shaojie Zhang, Stefan Goetz, Lorenzo Picinali, Ozgur B. Akan, Aidan O. T. Hogg

https://arxiv.org/abs/2510.01891

Augmented Intelligence, which is building neuro-symbolic AI models, raised $20M in a bridge SAFE round at a $750M valuation, bringing its total funding to ~$60M (Carl Franzen/VentureBeat)

https://venturebeat.com/ai/the-beginning-of-the-end…

Conv-like Scale-Fusion Time Series Transformer: A Multi-Scale Representation for Variable-Length Long Time Series

Kai Zhang, Siming Sun, Zhengyu Fan, Qinmin Yang, Xuejun Jiang

https://arxiv.org/abs/2509.17845

IBM releases Granite 4.0, an open source "enterprise-ready" LLM family with a hybrid architecture, claiming it uses significantly less RAM than traditional LLMs (Carl Franzen/VentureBeat)

https://venturebeat.com/ai/western-qwe…

HART: Human Aligned Reconstruction Transformer

Xiyi Chen, Shaofei Wang, Marko Mihajlovic, Taewon Kang, Sergey Prokudin, Ming Lin

https://arxiv.org/abs/2509.26621 https://…

SINAI at eRisk@CLEF 2025: Transformer-Based and Conversational Strategies for Depression Detection

Alba Maria Marmol-Romero, Manuel Garcia-Vega, Miguel Angel Garcia-Cumbreras, Arturo Montejo-Raez

https://arxiv.org/abs/2509.19861

Replaced article(s) found for cs.LG. https://arxiv.org/list/cs.LG/new

[6/8]:

- Rethinking Decoders for Transformer-based Semantic Segmentation: A Compression Perspective

Qishuai Wen, Chun-Guang Li

To Sink or Not to Sink: Visual Information Pathways in Large Vision-Language Models

Jiayun Luo, Wan-Cyuan Fan, Lyuyang Wang, Xiangteng He, Tanzila Rahman, Purang Abolmaesumi, Leonid Sigal

https://arxiv.org/abs/2510.08510

GraDeT-HTR: A Resource-Efficient Bengali Handwritten Text Recognition System utilizing Grapheme-based Tokenizer and Decoder-only Transformer

Md. Mahmudul Hasan, Ahmed Nesar Tahsin Choudhury, Mahmudul Hasan, Md. Mosaddek Khan

https://arxiv.org/abs/2509.18081

Grouped Differential Attention

Junghwan Lim, Sungmin Lee, Dongseok Kim, Wai Ting Cheung, Beomgyu Kim, Taehwan Kim, Haesol Lee, Junhyeok Lee, Dongpin Oh, Eunhwan Park

https://arxiv.org/abs/2510.06949

AI-Driven Radiology Report Generation for Traumatic Brain Injuries

Riadh Bouslimi, Houda Trabelsi, Wahiba Ben Abdssalem Karaa, Hana Hedhli

https://arxiv.org/abs/2510.08498 https…

Heptapod: Language Modeling on Visual Signals

Yongxin Zhu, Jiawei Chen, Yuanzhe Chen, Zhuo Chen, Dongya Jia, Jian Cong, Xiaobin Zhuang, Yuping Wang, Yuxuan Wang

https://arxiv.org/abs/2510.06673

Bayesian Modelling of Multi-Year Crop Type Classification Using Deep Neural Networks and Hidden Markov Models

Gianmarco Perantoni, Giulio Weikmann, Lorenzo Bruzzone

https://arxiv.org/abs/2510.07008

Optimizing Inference in Transformer-Based Models: A Multi-Method Benchmark

Siu Hang Ho, Prasad Ganesan, Nguyen Duong, Daniel Schlabig

https://arxiv.org/abs/2509.17894 https://…

Quantized Visual Geometry Grounded Transformer

Weilun Feng, Haotong Qin, Mingqiang Wu, Chuanguang Yang, Yuqi Li, Xiangqi Li, Zhulin An, Libo Huang, Yulun Zhang, Michele Magno, Yongjun Xu

https://arxiv.org/abs/2509.21302

Lung Infection Severity Prediction Using Transformers with Conditional TransMix Augmentation and Cross-Attention

Bouthaina Slika, Fadi Dornaika, Fares Bougourzi, Karim Hammoudi

https://arxiv.org/abs/2510.06887

Crosslisted article(s) found for cs.LG. https://arxiv.org/list/cs.LG/new

[1/8]:

- ReplaceMe: Network Simplification via Depth Pruning and Transformer Block Linearization

Shopkhoev, Ali, Zhussip, Malykh, Lefkimmiatis, Komodakis, Zagoruyko

PS3: A Multimodal Transformer Integrating Pathology Reports with Histology Images and Biological Pathways for Cancer Survival Prediction

Manahil Raza, Ayesha Azam, Talha Qaiser, Nasir Rajpoot

https://arxiv.org/abs/2509.20022

EfficienT-HDR: An Efficient Transformer-Based Framework via Multi-Exposure Fusion for HDR Reconstruction

Yu-Shen Huang, Tzu-Han Chen, Cheng-Yen Hsiao, Shaou-Gang Miaou

https://arxiv.org/abs/2509.19779 …

Visual Representations inside the Language Model

Benlin Liu, Amita Kamath, Madeleine Grunde-McLaughlin, Winson Han, Ranjay Krishna

https://arxiv.org/abs/2510.04819 https://