2026-02-10 11:34:46

2026-02-10 12:40:45

2025-12-09 08:17:39

Not surprised that the said #AI sweatshop workers are from the #Philippines 🇵🇭

https://futurism.com/arti…

2026-01-09 08:06:41

OpenAI and Perplexity, armed with significant AI capabilities, have targeted the shopping domain, and have run into many problems around data access and semantics.

This raises three thoughts:

First, companies that are bullish about AI “changing everything” are stumbling in perhaps the most traditional, mundane domain: shopping. This doesn’t inspire confidence in their technology.

1/5

#ai

2026-01-09 15:08:24

OH on slack: It's not prompt engineering. It's sloperating

#ai #fuck_ai #generativeAI

2025-11-10 12:41:21

“And worse of all, my mental model of the code is completely gone, and with it my ownership.”

#AI #VibeCoding https://mastodon…

2026-01-09 16:05:03

So... should the data be structured or unstructured? 🤔

#AI

2025-12-10 10:47:42

2026-01-07 17:00:29

"‘Just an unbelievable amount of pollution’: how big a threat is AI to the climate?"

#Climate #ClimateChange #AI

2026-01-07 09:22:55

2025-11-10 18:03:41

2025-12-09 16:31:09

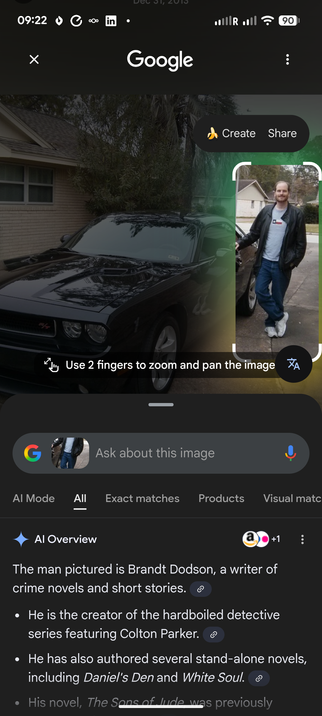

Yeah, um - that's a swing and a miss there, Gemini.

#AI #SwingAndAMiss #Gemini

2026-02-05 14:43:56

2026-02-04 09:10:48

Two kinds of #AI #users are emerging. The gap between them is astonishing.

https://martinalderson.com/posts/two-k

2026-02-08 09:52:26

Malicious AI swarms are a real threat; I suspect they are already active. Good paper, with a visual summary by NotebookLM.

#ai

2026-02-05 13:26:34

[OT] Microsoft 365 Classic explained: a lower-cost, no-#AI subscription option https://office-watch.com/2025/microsoft-365-classic-no-ai-lower-cost-plan/

2025-12-08 14:46:26

Maybe having "AI" doing any coding for you is a bad idea.

"Windows 11 25H2 is Breaking PCs Right Now (Here’s What’s Actually Broken) "

TBF, maybe it's just that M$ sucks at coding its own OS at this point.

#AI #enshittification

2025-12-07 05:04:40

2026-01-08 05:53:18

2026-02-04 17:33:03

2026-02-05 14:26:46

2026-01-08 19:21:16

2026-01-09 18:40:28

2026-02-03 15:16:57

Starting with version 148, #Firefox introduces a new setting that lets users block all current and future generative #AI features in the browser.

Alternatively, individual features such as translations (#Übersetzungen) …

2026-02-08 16:13:07

2026-01-10 02:24:06

2025-12-01 19:53:38

This is a genuinely good idea, and I'd urge you to sign it. Make #AI generated works immediately identifiable. It would be interesting also to require a similar 'watermark' in generated texts -- perhaps a particular sequence of whitespace characters.

#generativeAI

H/t
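
To make the whitespace idea above concrete, here is a minimal sketch. It assumes a made-up marker (the `WATERMARK` constant and both function names are hypothetical, not part of any proposal or standard) that is appended to generated text and checked for later.

```python
# Hypothetical marker: an arbitrary, fixed sequence of spaces and tabs.
# Nothing here comes from a real standard; it only illustrates the idea.
WATERMARK = " \t \t\t "


def add_watermark(text: str) -> str:
    """Append the invisible whitespace marker to generated text."""
    return text + WATERMARK


def has_watermark(text: str) -> bool:
    """Report whether the text ends with the whitespace marker."""
    return text.endswith(WATERMARK)


marked = add_watermark("This paragraph was machine generated.")
print(has_watermark(marked))
print(has_watermark("This paragraph was written by a person."))
```

A marker like this disappears as soon as someone trims trailing whitespace, so on its own it would only identify text that nobody has tried to launder.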

2026-02-06 11:41:45

Whenever people are commenting on another half-assed, crappy #LLM feat, claiming that there are "some" use cases for this "#AI", substitute "AI" with "genocide".

Because, you know, there are "use cases" for genocide too, and apparently a lot of people don't mind, as long as they can benefit from it and look the other way.

#NoAI

2026-02-08 03:44:01

No see #AI is good actually. #TrumpEpstein

Video generated by Ari Kuschnir and Schuyler Brown

2025-12-07 20:42:58

From Gary Marcus (via Brian Merchant) on OpenAI's latest predicaments:

“My guess is that they have cumulatively raised on the order of $100 billion since they launched, perhaps more than any other company in history, but have already spent most of it, and likely don’t have much more than a year’s runway left.”

#AI

2026-01-02 15:47:06

#AI / #LLM propaganda is so insidiously effective even for laypeople.

I’ve had multiple conversations with family members who: don’t speak English, don’t own computers (only mobile phones), and barely spend time online.

I told them that I am no longer working with most tech company clients because I don’t like AI and don’t want to support it (“AI” here = gen AI, LLMs).

And yet these people all reacted the same way: concern, shock, and comments like “but this is inevitable”, “this is the future”, “you’ll have to accept it eventually”, “won’t refusing it ruin your career prospects?”

These are people who know nothing about technology. They usually wouldn’t even know what “AI” meant. And yet here they are, utterly convinced of AI company talking points.

2026-02-06 08:13:54

Interesting read. It illustrates the challenge we face with learning in a world with AI. We need to take measures, because the use of AI in coding is not going away and will only increase.

https://arxiv.org/abs/2601.20245

2026-02-09 02:42:47

I wonder how much that absolute trash AI-generated Protect Ontario commercial cost.

👎

Thanks Doug Ford

#ai #work #video #mediastudies #SuperBowl

2026-02-03 18:26:16

2025-12-03 07:54:50

2026-01-01 22:22:27

2026-02-08 08:36:13

2026-02-05 14:42:32

Interesting take on the 'AI bubble' - what about this perspective? #economy #AI

https://youtu.be/21e5GZF3yx0?si=VQ0m9…

2026-01-07 09:57:40

RE: #AI,

2026-02-01 08:39:53

#Moltbook #AI #Vulnerability Exposes Email Addresses, Login Tokens, and API Keys

2025-11-27 20:28:12

Welcome to the world of the field, engineering.

For a long time, we've hired very few into sales, mktg, support or consulting that don't already have gobs of experience elsewhere.

✅ How #Microsoft’s developers are using #AI - The Verge

2025-12-04 21:13:45

From #RobertReich @rbreich@instagram.com and @inequalitymedia@instagram.com @…

The #AI #surveillance

2026-01-02 22:57:31

I wonder...

How long before the #ai responses to search questions all get contaminated by sponsored tweaks to their answers?

A year?

I understand that Musk's personal ai toy already does that with respect to questions about him. It can't be much harder to sell ad spots that tweak the ai answers in a similar way, to car companies or dishwasher manufacturers.

2025-12-08 09:34:31

Kevin Xu argues that it's misleading to characterise the US–China AI competition as a race, since there's mutual co-operation and co-optation going on all the time: #AIResearch #LLM

2025-12-03 20:58:31

Crucial is shutting down — because Micron wants to sell its RAM and SSDs to AI companies instead

#AI #Enshittification

https…

2026-01-29 16:11:00

I have an idea. If we used people to do work, rather than paying for AI agents, then we wouldn’t need to pay for them to not work. Also, we wouldn’t have goodness knows how much electricity used on it.

#ai #ukpolitics

2026-02-08 12:49:54

2025-12-03 15:04:52

2026-01-28 09:23:58

The #AI Revolution in Coding: Why I’m Ignoring the Prophets of Doom

https://codingismycraft.blog/index.php/2026/01/23/the-ai-revolutio…

2025-12-06 05:00:42

2025-12-29 14:40:29

2025-11-28 18:13:47

Part II of "you won't believe how long AI dweebs have been making ridiculous predictions"

Take a guess when this was said! (Solution in reply)

#ai

2025-12-03 15:00:44

Malicious LLMs empower inexperienced hackers with advanced tools

#AI

2026-02-05 16:10:48

2026-02-01 17:31:10

Oh My God… this is crazy #moltbook #AI

❤️ https://simonwillison.net/2026/Jan/30/moltbook/

2025-12-08 12:26:09

If you want to spend time on AI, it is best spent on lectures like this. No hype, just science, but in this case also very practical.

#AI

2026-01-26 13:00:42

2026-01-08 08:20:32

(YouTube, #AI #slop) Neuralink 2026: The Year Humans Become Digital https://www.youtube.com/watch?v=O5ArS-CBIjE Blindsight? Bette…

2025-12-22 13:10:24

2026-01-03 12:51:45

I admit that, through 2025, I have become an #AI #doomer. Not that I believe the LLM-becoming-sentient-and-killing-humanity self-serving hype bullshit for a second. There are much more concrete impact points that may lead to or greatly accelerate several crises, each of which will actively harm humanity…

2025-12-02 01:05:31

NOOOOOOOO!

They used some #ai generated garbage to open the new episode of #MurdochMysteries!

I hate it! It's awful.

No AI. Ever!

2026-02-03 17:00:06

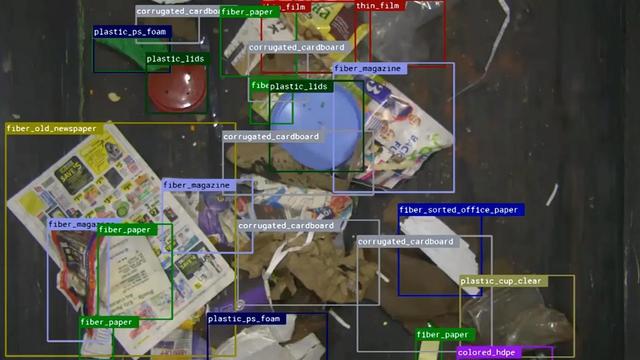

"AI’s Latest Trick Is Pulling Valuable Commodities Out of Our Trash"

#AI #ArtificialIntelligence

2025-12-31 23:54:52

2025-11-30 12:41:29

2025-12-01 22:30:39

Medicare to use AI to pre-authorize certain treatments in 6 states including NJ in 2026. Why? To reduce costs.

Also known as rationing healthcare. By a computer.

#medicare #AI #rationing

2025-11-21 17:07:39

2026-02-03 18:56:30

The #ai #apocalypse is near 🤬

RentAHuman.ai - AI Agents Hire Humans for Physical Tasks https://rentahuman.ai

2026-02-09 15:57:55

Whenever a #FreeSoftware project is suffering from an onslaught of low-quality LLM-generated pull requests, there will be a bunch of #LLM lovers complaining that people shouldn't be talking of "LLM-generated" being part of the problem, because "using AI isn't bad" in itself. Of course, they entirely ignore all the ethical and environmental concerns, and probably write crappy code themselves.

#AI #NoAI

2025-12-10 19:13:28

I see many people impatient for an AI crash; they want this "foolishness" to end. I am afraid it will take a while, or may never happen...

#AIcrash

2025-12-23 09:46:35

Reading Tim O'Reilly's essay on the economic future of #AI, one sentence stands out:

"By product-market fit we don’t just mean that users love the product or that one company has dominant market share but that a company has found a viable economic model, where what people are willing to pay for AI-based services is greater than the cost of delivering them"

/Continued

2025-11-20 20:52:04

20/11 on Nieuwsuur: Worries about an AI bubble? • Plan for Ukraine • Childcare abroad

#AI

2026-01-27 17:58:38

One of my contractors used an #LLM for a first draft of a client deliverable today.

I don’t allow #AI use for any client-facing work (and try to prohibit it for internal work too, but it’s been… tricky). This person knows it - I’ve worked with them for years, and they are usually very thoughtful and wouldn’t take a shortcut like this. So seeing the obvious AI copy was very confusing.

Thankfully we had a call scheduled this morning, so I decided to handle it there.

The contractor showed up very obviously sick. They told me they took most of the past week off but had to force themselves to finish this draft because the deadline is EOD. I asked if they used #AI to draft the document; they immediately confessed and apologized.

Since the deliverable is client-facing, I asked them to explain their ideas to me in conversational language and then used my notes of what they said to help them rewrite the document. We worked through the whole thing in an hour, and I’ve asked them to go rest and recover.

2025-12-06 04:46:07

2026-02-06 12:42:27

2025-12-03 10:21:57

Deeplearning.ai is a resource I can really recommend for free (!) courses on various AI subjects: serious, no hype. For example, the Agentic AI course by Andrew Ng does a good breakdown of the hype around "AI Agents" / "Agentic AI".

#AI #Deeplearning

2025-12-30 20:28:20

2026-02-05 14:29:56

2025-12-07 10:48:46

The current AI models let you easily experiment with application ideas like never before. For experimentation, quickly trying out ideas, they are great. I am fully aware that this is something completely different from serious/professional use. Still, your creativity is now the limit, not your knowledge of Python, JavaScript, etc., and that is fascinating.

#AI

2025-11-28 13:24:03

[OT] So now even when you try to avoid using #AI, #AI hits your wallet nonetheless https://www.<…

2026-02-06 12:13:43

Anthropic's Claude Opus 4.6 uncovers 500 zero-day flaws in open-source code #ai

2025-12-09 06:48:47

2025-12-21 21:22:20

Dutch entrepreneur turned down 500 million, and now wants to win the AI race on their own - #Nieuwsuur

#ai

2025-11-24 20:47:51

Honest government ad: AI

#AI #HonestGovernmentAds

2025-12-29 17:06:34

Rich Hickey goes right for the #AI jugular, and is spot on correct.

https://gist.github.com/richhickey/ea94e3741ff0a4e3af55b9fe6287887f

2025-11-14 15:29:31

I’ve been testing a theory: many people who are high on #AI and #LLMs are just new to automation and don’t realize you can automate processes with simple programming, if/then conditions, and API calls with zero AI involved.

So far it’s been working!

Whenever I’ve been asked to make an AI flow or find a way to implement AI in our work with a client, I’ve come back with an automation flow that uses 0 AI.

Things like “when a new document is added here, add a link to it in this spreadsheet and then create a task in our project management software assigned to X with label Y”.

And the people who were frothing at the mouth at how I must change my mind on AI have (so far) all responded with resounding enthusiasm and excitement.

They think it’s the same thing. They just don’t understand how much automation is possible without any generative tools.
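
As an illustration of the kind of flow described above, here is a minimal sketch of the "new document → spreadsheet link → task" rule with no AI involved. Everything in it is an assumption made for the example: `Workspace`, `Task`, and `on_new_document` are hypothetical names, and the in-memory lists stand in for whatever spreadsheet and project management APIs a real setup would call.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    title: str
    assignee: str
    label: str


@dataclass
class Workspace:
    # In-memory stand-ins (hypothetical) for a spreadsheet and a task tracker.
    spreadsheet_rows: list = field(default_factory=list)
    tasks: list = field(default_factory=list)


def on_new_document(ws: Workspace, name: str, url: str,
                    assignee: str = "X", label: str = "Y") -> Task:
    """Plain if/then rule: log the document link, then create a labeled task."""
    ws.spreadsheet_rows.append((name, url))
    task = Task(title=f"Review {name}", assignee=assignee, label=label)
    ws.tasks.append(task)
    return task


ws = Workspace()
on_new_document(ws, "proposal.docx", "https://example.com/docs/proposal.docx")
print(ws.spreadsheet_rows)
print(ws.tasks)
```

In a real deployment the two `append` calls would be replaced by API calls to the actual tools, triggered by a webhook or a scheduled check; the logic itself stays a fixed rule.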

2026-01-14 15:00:51

"AI for Nature Restoration Tools: How Companies are Transforming Ecosystem Recovery Projects"

#AI #ArtificialIntelligence #Nature

2025-11-24 21:38:45

[OT] Companies are blaming #AI for layoffs. The real reason... https://medium.com/@mattlar.jari/companies-are-blaming-ai-for-layoffs-the-real-rea…

2026-01-04 07:46:30

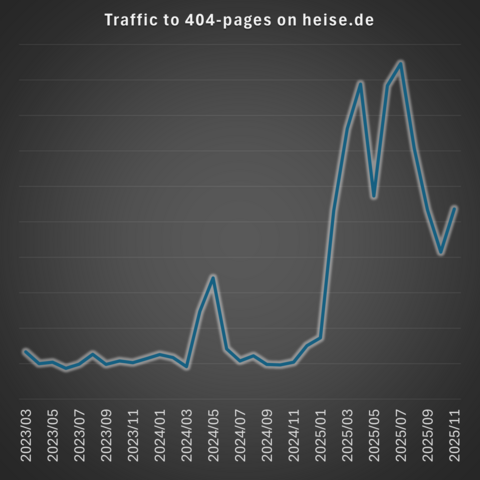

The rapid decline of #StackOverflow et al in the age of #AI slop https://data.stackexchange.com/stackov

2026-02-06 11:29:27

Using well-chosen public sources, I had NotebookLM and Kimi K2.5 generate a presentation on a subject: amazing quality and very few errors. Good inspiration for the real presentation I will make myself. AI is not only creating "slop"; we really have to start doing more research into how we incorporate AI into our work processes in a responsible way, and how we avoid becoming "dumber" and maintain skills, learning and human creativity 🤔

2026-02-04 08:41:30

When I don't have time to read all the posts in a Mastodon list, I now use a Gemini App (made in Google AI Studio) to quickly make a summary and overview of the highlights, shared links etc.

Mastodon lists are a great way to organize your Mastodon feed, by the way.

#mastodonlists #AI

2025-12-23 11:42:36

Essential AI is an interesting company; their Rnj-1 model illustrates another approach compared to the large AI companies.

#AI

2025-12-11 03:44:29

2026-02-07 13:44:06

RE: #ai

2025-11-24 08:52:08

I read in the Nationaal AI Deltaplan (good that it exists!) about a State Secretary for AI at EZ. Personally, I would prefer a Minister of Digital Affairs who, besides AI, can also take a topic like cloud under their wing. But certainly not both, because then we would spread the subject matter across 2 departments, EZ and BZK.

#AI

2025-12-04 07:46:37

Sometimes I have the feeling that AI results in Google Search are just a placeholder. Results are often awful and do not compare with what the paid version of Gemini 3 returns for the same query. Google is betting that models will improve and become cheaper so that they can offer a better experience in the future.

#AI #Search

2026-01-14 07:42:35

How generative AI is used in the financial sector.

#AI

2025-12-11 20:07:05

Ethan Mollick makes a good point on BlueSky:

I understand the fear and anger. But also:

1) It is connected to the belief that AI is fake and going to vanish, which means that critics who should be helping shape AI use through policy and collective work are sitting it out

2) Lumping all AI criticism/talk into us vs. "tech bros" doesn't help

#AI

2026-01-22 16:07:54

How AI can help students not by writing their essays but with dialogue.

#AI

2025-11-28 16:04:50

So as a user of the Google ecosystem I can now use Gemini for coding in: Colab, Jules, Antigravity, AI Studio .... 🥳

#ai #google #vibecoding

2026-01-21 08:27:10

A lot of nonsense is being told about the energy consumption of AI. This blog brings some reality.

#AI