2024-12-17 23:40:23

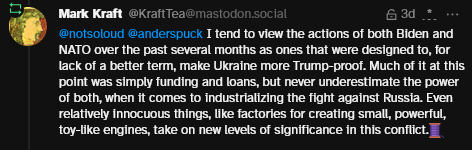

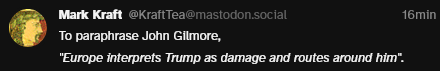

Drawing attention to this thread of comments of mine from a few days ago, as it goes deeper into apparent efforts from European nations to Trump-proof Ukraine, which is actually VERY important for European security.

It's nice seeing news articles point out that Ukrainian funding & Russian appeasement aren't things Trump gets to dictate; rather, Europe is pushing back diplomatically against Trump to defend its legitimate security interests.

2024-12-11 09:01:18

2024-12-05 05:25:30

Integrating w/ features like Secure Boot & Windows Hello for Business, TPM 2.0 enhances security by ensuring that only verified software is executed & protecting confidential details.

It’s true that its implementation might require a change for your organization. Yet it represents an important step toward more effectively countering today’s intricate security challenges.

✅ TPM 2.0 – a necessity for a secure & future-proof Windows 11

2025-01-05 19:23:58

Nice, @…'s blog post about #Bundler's new checksums is worth a read:

2024-11-08 05:57:33

Maybe if I buy this flower a drink, it will tell me the secret to its optimism #bloomscrolling

2024-11-11 10:51:14

Series B, Episode 05 - Pressure Point

BLAKE: We'll pull out. Give it another hour.

[Inside the Security station]

https://blake.torpidity.net/m/205/168 B7B2

2024-12-13 18:48:37

Excited about the new xLSTM model release. There are many well-thought-out design choices compared to transformers: recurrence (which should allow composability), gating (as in Mamba & the LSTM it is based on, which allows per-step time complexity independent of the input size), and state tracking (unlike Mamba & transformers). For now, these advantages aren’t apparent on benchmarks, but most training techniques are secret, and the recent advances of LLMs show that they matter a lot.