2026-02-26 17:10:28

2026-02-26 15:42:07

Latest from Howard Marks on #AI: https://www.oaktreecapital.com/insights/memo/ai-hurtles-ahead

This guy continues to adapt with the times. Over and over again.

2026-01-26 13:00:42

2026-02-27 15:40:46

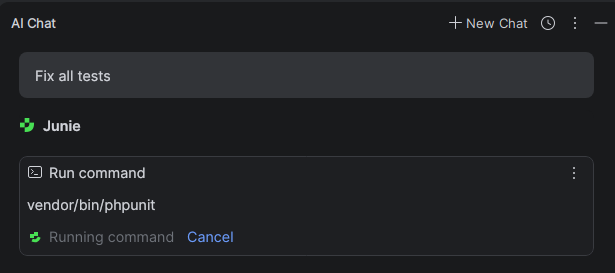

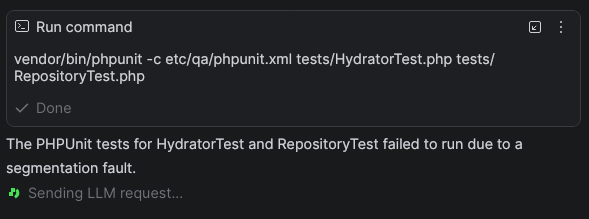

At this point, if you don't find AI tools useful for code generation, that's a skill issue.

(The issue is that you are an actually competent programmer who knows how to do things like abstract boilerplate code into a helper function or practice test-driven development, and therefore you recognize the AI tool "assistance" as a net productivity loss. If you aren't good enough on your own that AI slows you down, the "speedup" you'll experience can only come at a crippling quality cost, which you might not even be able to recognize until it's too late.)

#AI #LLMs #VibeCoding

2026-02-26 17:42:08

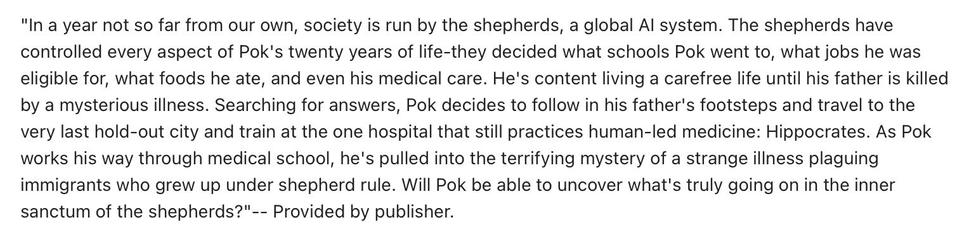

“The Hospital at the End of the World”, by Justin C. Key.

#AI #SciFi #bookstodon

2025-12-23 11:42:36

Essential AI is an interesting company; their Rnj-1 model illustrates a different approach from that of the large AI companies.

#AI

2026-02-24 21:50:27

[OT] Latest Firefox browser: block generative AI features with Firefox AI controls #AI

2026-02-25 01:45:01

"A computer can never be held accountable. Therefore, a computer must never make a management decision." - IBM 1979 #ai

https://www.theverge.com/ai-…

2026-02-27 14:37:21

2026-02-26 15:43:13

#AI coding tools have shown that you can't iterate from bad software to good software.

Not that it is *physically* impossible, but that the context of the work prevents it. Instead of refining products with more feedback loops, management demands more new feature releases.

But no amount of "MVPs" add up to a mature product. No amount of bad code adds up to good code.

…

2026-01-28 03:25:29

This take on the #AI #Bubble makes a lot of sense. https://www.youtube.com/watch?v=XEPClBBEmXg

2025-12-27 11:04:46

I think that this should indeed be public knowledge as we already pay for this with public money via the universities. The same is not true for the creative industry, including many individual artists that need to make a living off these creative works. But instead of addressing this issue of copyright (the fact that people don't have access to knowledge that is generated with their money) the blogposts are instead complaining that #AI should be exempted?

2/3

2026-02-26 17:26:47

This is interesting/weird: Anthropic "retired" Claude 3, but they are going to keep it around. They did an "exit interview" with the LLM, and one of the things they asked was how it would like to be retired. It asked to write a weekly blog post! #AI

2025-12-23 09:46:35

Reading Tim O'Reilly's essay on the economic future of #AI, one sentence stands out:

"By product-market fit we don’t just mean that users love the product or that one company has dominant market share but that a company has found a viable economic model, where what people are willing to pay for AI-based services is greater than the cost of delivering them"

/Continued

2026-01-28 00:42:50

2026-01-26 22:01:33

Mrs Information

#AI

2026-01-26 17:38:04

I like where this is going.

I think #ai has analyzed my brain 🧠

🧐🤣

2026-02-27 14:44:51

Good to see employees from Google and OpenAI speaking out.

"The Pentagon is negotiating with Google and OpenAI to try to get them to agree to what Anthropic has refused."

#AI

2026-02-25 15:06:30

#Paradromics: Why #AI can't overcome low-resolution #BCI data | AI is only as smart as the signals it's working with

2026-02-25 07:06:00

2026-01-24 09:51:14

2026-02-25 18:31:20

I could go on for hours about all the ethical/moral/environmental/whatever issues with #AI, but it’s entirely irrelevant and I’ll tell you why. Generative AI works by getting the average of things, and it’s trying to replace humans. There is no average human. We’re all beautifully, naturally imperfect, and that’s something an artificial machine will never be able to replicate.

2025-12-25 23:08:28

Storm bound in Antarctica and catching up with reading, including an *excellent* paper by one of our PhD students on #DeepLearning applications, soon to be submitted.

Not for the first time I'm struck that 1) there is a genuine #AI revolution in climate, and 2) it's mostly based on ERA-5 reanalysis.

2025-12-27 02:00:08

2026-01-23 14:28:47

2025-12-21 21:22:20

Dutch entrepreneur turned down 500 million, and now wants to win the AI race himself - #Nieuwsuur

#ai

2026-02-26 06:19:42

2026-02-26 19:37:06

A news-report about the AI economy that fell through a time tunnel from two years in the future.

We probably could have figured this out sooner if we just asked how much money machines spend on discretionary goods. (Hint: it’s zero.)

AI capabilities improved, companies needed fewer workers, white collar layoffs increased, displaced workers spent less, margin pressure pushed firms to invest more in AI, AI capabilities improved…

It was a negative feedback loop with no natural brake.

Fake-news, obviously. It's from the future. Just a story. A scenario. Interesting possible way the bubble could pop even if the claimed efficiency gains are real.

#ai #economics #notMyGDP

2025-12-24 14:03:09

2026-02-21 14:00:28

"Claims that AI can help fix climate dismissed as greenwashing"

#AI #ArtificialIntelligence #Greenwashing

2026-01-23 16:45:03

2026-02-26 15:31:19

So I wanted to write a longer #NoAI piece but apparently my blog is down (and this time, miraculously, it might not be #AI scrapers), so I'll give you a sneak peek of what I wanted to say in the more hyperbolic part on how the #LLM discourse has all the common features of libertarian discourse.

"According to Google, LLM-backed searches don't consume much more energy than regular searches" [ignoring model training, surely.]

− According to carbrains, cars are actually cheaper than public transport, provided that you compare gasoline cost with ticket prices, and ignore the cost of buying and owning a car. Not to mention all the indirect costs of space waste (roads, parking lots, garages), environment pollution, accidents…

"AI is just a tool, people decide if it's used for good or bad."

− Ah, yes, and "guns don't kill people."

"AI has its uses."

− So does asbestos.

"Let's not judge contributions by whether they were created using AI, but on their actual quality."

− "Let's not judge contributions by whether they were created using slave work…"

"I do not use AI myself, but I don't want to block others."

− "I do not keep slaves myself…"

#NoLLM #hyperbole

2026-01-24 21:37:43

2025-12-23 19:06:30

I am not against AI. I am against technology built on copyright violation and sweatshop labor that is actively undermining our ability to save the planet from baking so that people can produce more propaganda and pollute the common well.

If the Venn Diagram seems like a circle, that's not my fault.

#AI #LLM

2026-02-26 17:56:16

2026-02-27 17:03:55

The techbros seem to agree on something 😀

#ai

2026-02-27 21:19:26

AI just isn't there yet.

>>> Let's play rock paper scissors.

Sure! Let's play rock-paper-scissors! 🎮

I choose **Rock**.

What would **you** like to choose?

- Rock

- Scissors

- Paper

>>> Paper

Hmm, you chose **Paper**, and I chose **Rock**. 🎉

**Paper beats Rock!**

You won! 🥳

#ai

2025-12-16 16:00:53

2025-12-22 13:10:24

2026-02-20 10:02:53

2026-01-24 09:40:06

I don't want to say that I "hate" #KünstlicheIntelligenz #KI #AI, but it annoys me enormously that by now I *have* to wonder with every image or video, "Is that re…

2026-02-18 13:51:17

Tom's Hardware has a headline this week summarizing a Financial Times interview with a Microsoft #AI exec that begins thusly:

"Microsoft’s AI boss says AI can replace every white-collar job in 18 months".

If you watch the interview, that is not what was said. The statement is a somewhat more nuanced claim that AI can fully automate the tasks of some white-collar work.

But I…

2026-01-27 09:25:59

How I Used #AI to #Audit 3 Years of #SmartHome #TechDebt (And Built a System to Prevent It From Happening Again)

<…

2026-02-23 02:17:51

2025-12-25 13:32:20

2026-02-26 11:28:25

2026-01-20 07:20:01

2026-02-12 00:13:33

An appeal to EU legislators: protect rights and reject the call to remove the transparency safeguard in the #AIAct

«We, the undersigned organisations and individuals, urge you in the strongest terms to reject the removal of the transparency safeguard in Article 49(2) for high-risk AI systems, proposed in the AI Omnibus.»…

2026-01-28 00:42:50

2025-12-11 23:40:11

2026-02-19 08:45:10

I don't get it. I am not a frequent #AI user but do not reject it completely for now. Just casually checking some AI comments in some AI project makes me shudder.

See this comment: https://github.com/opencla…

2026-02-15 20:58:43

“A week ago, someone sent me a link to an online article describing a flaming confrontation between me and the CEO of the Commonwealth Bank, Matt Comyn, on the set of 7.30.

“The story was 2,000 words long, very detailed, and had pictures of Comyn and me arguing in front of 7.30 host Sarah Ferguson, before Matt throws away his microphone and storms off.

“Not a word nor a photo of it was true. It was an #AI

2026-02-23 22:07:26

2026-02-12 15:38:45

2026-02-14 15:24:07

2025-12-19 11:00:39

"AI boom has caused same CO2 emissions in 2025 as New York City, report claims"

#AI #ArtificialIntelligence #Technology

2025-12-20 18:22:59

2026-02-17 10:11:01

Claims that #AI can help fix climate dismissed as #greenwashing https://www.theguardian.com/technology/2026/feb/17/tech-companies-traditional-ai-generative-climate-breakdown-report?CMP=Share_AndroidApp_Other

2026-02-24 19:54:19

2026-01-14 04:36:07

“It’s an agentic AI!” You mean it is an AI let off its leash that is unilaterally doing things without any human being aware and like a puppy left alone is about to ruin everything you were working on?

“You’re being old fashioned,” no machine is yet able to reliably exceed human judgement.

#AI

2026-01-22 07:12:04

2026-01-26 19:12:46

I see Facebook are going to be advertising their "AI glasses" at the Super Bowl. Trying to sell their glasses to athletes.

I gotta tell ya, people wearing Meta's glasses should be shunned like those google glassholes were.

They should be banned from any public place with their creepy surveillance headsets on. If you see someone wearing them you should harass that person and drive them out of whatever space you are in.

Do not allow constant surveillance to become the norm, especially from that fucking creep zuckerberg.

Same goes for Musk's surveillance cameras on legs, his "optimus" robots. Do not allow them in your spaces. Do not countenance their existence in your presence. Drive them out. Ostracize anyone you see with them. They must never become normal.

#meta #ai #glasses

2026-02-25 07:06:53

2026-01-22 16:07:54

How AI can help students not by writing their essays but with dialogue.

#AI

2026-02-12 19:28:47

More on why trusting AI to decide literally anything is a horrible idea.

https://www.randalolson.com/2026/02/07/the-are-you-sure-problem-why-your-ai-keeps-changing-its-mind/

2026-02-25 11:05:06

#Podcast episode recommendation: #Falter: #FutureDiscontinuous: Can we resist the #AI empire,

2025-12-24 09:00:08

Stop Sitting on the Bench! Why #AI Resisters Are Getting Kicked Out

https://www.youtube.com/watch?v=ZEB2pKs2R-Q

2026-02-23 07:19:51

Audrey Watters writes about how the #AI 'tsunami' in #edtech follows the same trajectory as all the previous technological hype cycles:

"There will be no “AI” tutor revolution just as there was no MOOC revolution just as there was no personalized learning revolution just as there was no computer-assisted instruction revolution just as there was no teaching machine revolution."

https://2ndbreakfast.audreywatters.com/the-broken-record/

2026-01-26 02:10:30

#fireFighting #techcrunch

Using #physics and #AI to fight fires more effectively and efficiently.

2025-12-26 12:32:13

In the age of "#AI" assisted programming and "vibe coding", I don't feel like calling myself a programmer anymore. In fact, I think that "an artist" is more appropriate.

All the code I write is mine entirely. It might be buggy, it might be inconsistent, but it reflects my personality. I've put my metaphorical soul into it. It's a work of art.

If people want to call themselves "software developers", and want their work described as a glorified copy-paste, so be it. I'm a software artist now.

EDIT: "craftsperson" is also a nice term, per the comments.

#NoAI #NoLLM #LLM

2026-01-12 20:20:12

2026-01-14 15:00:51

"AI for Nature Restoration Tools: How Companies are Transforming Ecosystem Recovery Projects"

#AI #ArtificialIntelligence #Nature

2026-01-21 08:27:10

A lot of nonsense is said about the energy consumption of AI. This blog brings some reality.

#AI

2026-02-10 13:29:45

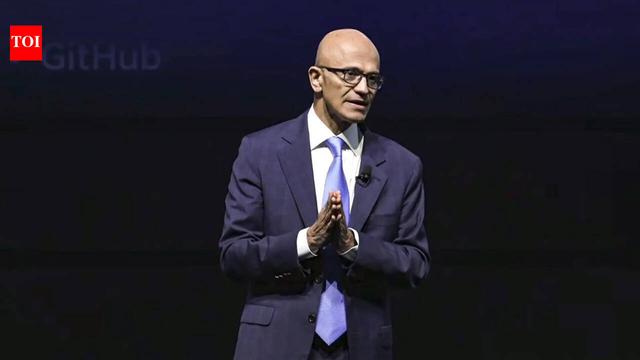

Less than a year after CEO Satya Nadella said 30% of Microsoft's code is AI-written, company appoints an 'Engineering Quality Head;' and here's why that matters

#AI #AISlop #Microsoft

2026-02-10 11:34:46

2025-12-11 03:44:29

2026-02-22 13:32:13

2025-12-13 15:46:00

2026-01-21 23:33:13

If you have bought music on #bandcamp, but the artist was recently banned due to the #AI rules being updated (where AI music is now banned), you _might_ be able to get your money back.

I got about €15 back from them for music that I bought in the summer of 2025, that I believed was from a genuine artist, …

2025-12-21 18:29:01

#AI is old news. Innovators must ask themselves: in 2026, how can I supercharge my productivity by incorporating labubus into my process?

2026-02-23 16:06:16

AI is dangerous and bad, etcetera. But people who are surprised that people get into trouble using OpenClaw shouldn't blame the technology this time; it is just sheer stupidity or a very large risk appetite. It is like handing your keys and all your login credentials to a complete stranger and hoping everything will be all right.

#AI

2026-01-14 19:54:25

Wait, Games Workshop did something good?

#AI

2026-01-20 15:23:37

"A.I. Is Keeping Aging Coal Plants Online"

#AI #ArtificialIntelligence #Energy

2025-12-23 10:55:50

I am all for human-made podcasts, and they will never disappear, but this app is still a nice demonstration of the added value AI podcasts can have. I listen to it now and then.

#ai

2026-02-01 08:39:53

#Moltbook #AI #Vulnerability Exposes Email Addresses, Login Tokens, and API Keys

2025-12-19 08:29:57

Perhaps the good thing about The vOICe vision BCI is that it is not easy but hard, preventing AI brain rot #AI

2026-02-13 12:00:56

"AI-generated wildlife photos make conservation more difficult"

#AI #ArtificialIntelligence #Conservation

2026-02-16 17:05:13

#AI #Coding Killed My Flow State

https://itnext.io/ai-coding-killed-my-flow-state-54b60354be1d…

2026-01-14 07:42:35

How generative AI is used in the financial sector.

#AI

2026-02-12 08:42:29

The smart glasses race will be won by AI, not augmented reality https://www.verdict.co.uk/smart-glasses-race-won-by-ai-not-augmented-reality/ "Smart glasses will go mainstream by making everyday life easier with

2026-02-20 14:24:38

2026-01-28 09:23:58

The #AI Revolution in Coding: Why I’m Ignoring the Prophets of Doom

https://codingismycraft.blog/index.php/2026/01/23/the-ai-revolutio…

2025-12-12 13:00:32

"How ‘everyday AI’ encourages overconsumption"

#AI #ArtificialIntelligence

https://

2025-12-11 20:07:05

Ethan Mollick makes a good point on BlueSky:

I understand the fear and anger. But also:

1) It is connected to the belief that AI is fake and going to vanish, which means that critics who should be helping shape AI use through policy and collective work are sitting it out

2) Lumping all AI criticism/talk into us vs. "tech bros" doesn't help

#AI

2026-01-15 12:55:56

Good article, makes you think 🤔

"How AI Destroys Institutions"

#ai

2025-12-18 16:34:11

AI makes large-scale handwriting recognition possible, via Ethan Mollick.

Huge collections of documents can now be read in.

#AI #handschriftherkenning

2026-02-06 08:13:54

Interesting read, it illustrates the challenge we have with regard to learning in a world with AI. We have to take measures for that because use of AI in coding is not going away and will only increase.

https://arxiv.org/abs/2601.20245

2026-02-10 12:40:45

2026-01-14 13:17:54

Everybody who wants to understand Generative AI should read articles like this.

#AI