2026-01-04 08:34:12

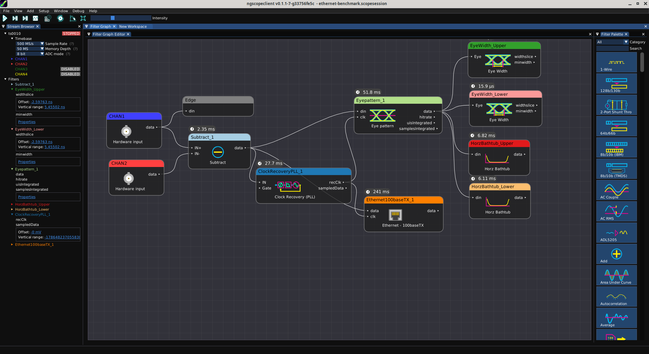

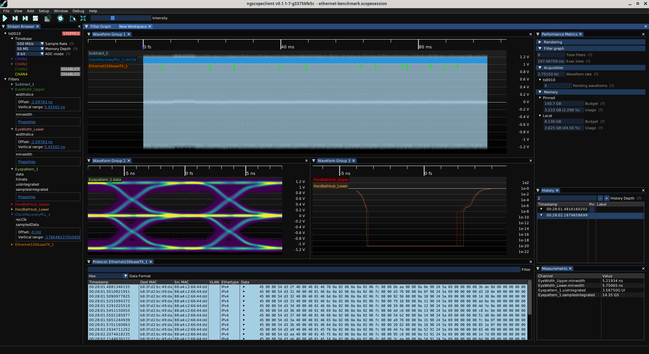

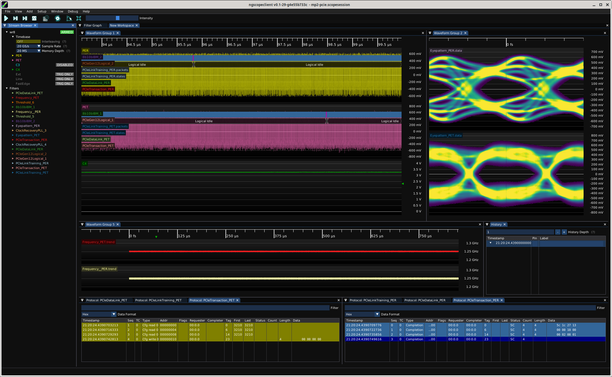

So, tonight's goal is to continue with ngscopeclient performance work.

I started out by doubling the speed of the eye pattern *again* by moving index buffer calculation from CPU to GPU.

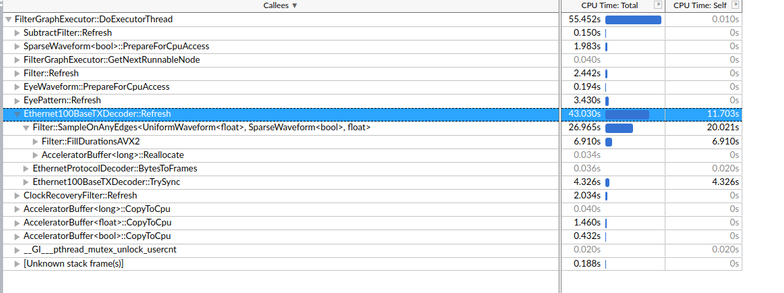

Next up is getting the 100baseTX decoder to not be so slow. Right now, of the 43 seconds of CPU time in the current 1-minute benchmark, 26.9 are spent sampling the MLT-3 waveform on rising edges of the recovered clock.
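For context, a minimal sketch of what "sampling on rising edges of the recovered clock" means. This is not ngscopeclient's actual code: `SampleOnRisingEdges` is a hypothetical helper, and it assumes the clock and data have already been resampled onto the same uniform time grid, which real waveforms generally are not.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical helper (not an ngscopeclient API).
// Returns data[i] for every index i where the clock is low at i-1 and high at i.
// Assumes clock and data share the same uniform sample grid.
std::vector<float> SampleOnRisingEdges(
    const std::vector<bool>& clock,
    const std::vector<float>& data)
{
    std::vector<float> out;
    const size_t n = std::min(clock.size(), data.size());
    for(size_t i = 1; i < n; i++)
    {
        // Rising edge: previous sample low, current sample high
        if(clock[i] && !clock[i - 1])
            out.push_back(data[i]);
    }
    return out;
}
```

Even in this simplified form the loop touches every clock sample just to find the edges, which is the kind of work that adds up on long captures.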

The thing is, we already *know* the sample values at the re…

2026-02-04 14:01:02

Ikea Grejsimojs: New Bluetooth speaker for 15 euros

Ikea now offers a child-friendly Bluetooth speaker. The device can be connected to other Ikea speakers.

https://www.

2025-11-05 03:25:14

A new ICE proposal outlines a 24/7 transport operation

run by armed contractors

—turning Texas into the logistical backbone of an industrialized deportation machine.

https://www.wired.com/story/ice-is-building-a-24-7-shadow-transporta…

2026-01-03 20:07:38

…it’s a mistake to •stop• the analysis there. This is not the same old US imperialism as ever. The false wisdom of “They’re all the same” is as always a giant intellectual trap for the left. This is not Iraq again. This is not Chile again. This is not a rerun.

The salty anger of @… is appropriate here; see posts below.

US imperialism was and is a terrible thing with a long history. Also, we are now in uncharted waters. Both these things are true.

https://jorts.horse/@AnarchoNinaWrites/115832859270597712

https://jorts.horse/@AnarchoNinaWrites/115832871321693950

https://jorts.horse/@AnarchoNinaWrites/115832717964769375

2025-12-03 16:34:53

Well, here it is: the first motorized vehicle I have ever owned, an ICE Adventure, bought second-hand at Tempelman in Dronten. Apart from the test ride, I have ridden one tiny lap on it, here in the park next to the house, and it feels like, if I live long enough, I am going to get a lot of enjoyment out of it for a long time, or that I will like it so much I will even go for a luxury successor. We'll see.

2026-01-03 18:42:07

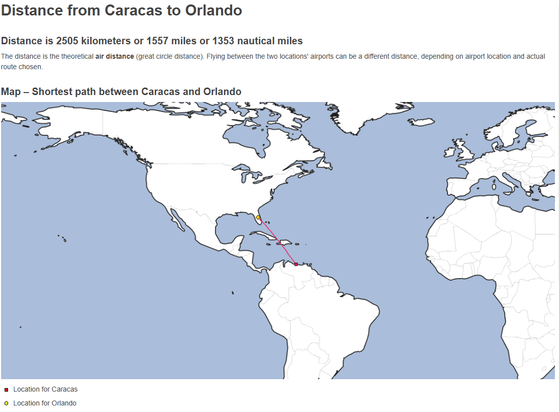

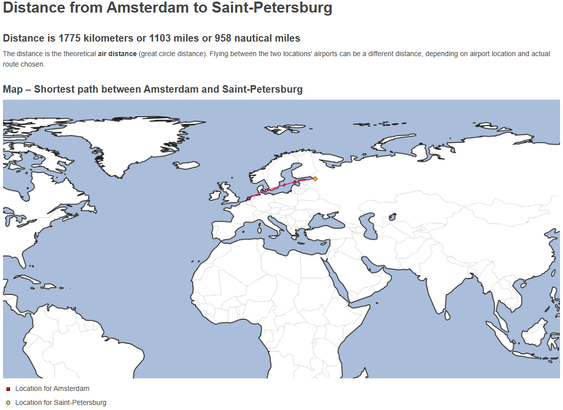

I was thinking a bit further about Trump's remark that the US has the right to 'surround itself with friendly neighbors' within its 'sphere of influence'.

The distance between the US and Venezuela is about 2,500 km.

The distance between Amsterdam and Saint Petersburg is less than 1,800 km. And that is leaving Kaliningrad (much closer) aside.

In short, according to Trump, we lie within Putin's sphere of influence.

Just so we know.

2025-12-04 05:24:39

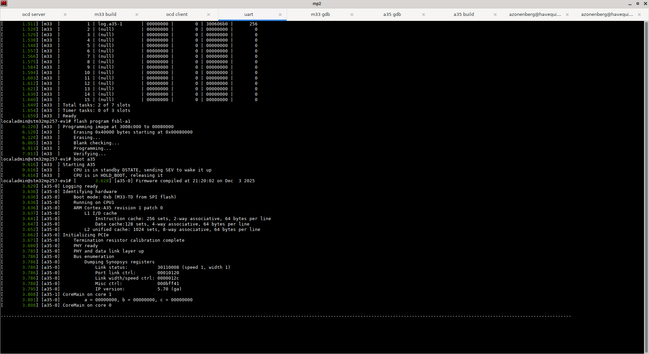

I think this is progress.

I fixed the typoed atu_unr_limit and now the ATU is working, translating accesses to the 0x1000_0000 AXI space into configuration reads and writes on the PCIe bus.

But the data is always zero. I'm trying to write 0x41410000 to the BAR and it comes out as zeroes, and while the protocol analyzer shows me successfully reading 1327:1c5c which is the VID/PID of my test SSD, it comes out as zero on the AXI side.
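As a reference point for what the AXI side should return, here is a small sketch of how the first configuration dword splits into IDs. It assumes the analyzer's `1327:1c5c` corresponds to the 32-bit value 0x13271c5c at config offset 0x00 (that reading is an assumption); `PciId` and `DecodeIdDword` are hypothetical names for illustration. Per the PCI configuration header layout, the Vendor ID sits in bits 15:0 and the Device ID in bits 31:16.

```cpp
#include <cstdint>

// Hypothetical helper types for illustration only
struct PciId
{
    uint16_t vendor;    // config offset 0x00, bits 15:0 of dword 0
    uint16_t device;    // config offset 0x02, bits 31:16 of dword 0
};

// Split config-space dword 0 into Vendor ID and Device ID
inline PciId DecodeIdDword(uint32_t dword0)
{
    PciId id;
    id.vendor = static_cast<uint16_t>(dword0 & 0xffff);
    id.device = static_cast<uint16_t>(dword0 >> 16);
    return id;
}
```

Under that assumption, a correct read through the ATU window would decode to vendor 0x1c5c and device 0x1327, while the all-zero data seen on the AXI side decodes to 0000:0000.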

2025-12-03 05:21:56

“This is a 6-year-old who should be in school, not living in detention,” said Spector.

“And certainly not being separated from his father,

who is a caring, intelligent person who takes good care of him.

They should not be separating families.”

https://www.thecity.n…

2026-02-03 07:07:00

2026-01-05 00:24:45

MERCENARIES is a video and poster campaign to counter ICE recruitment.

The distribution model for this campaign is both decentralized and autonomous.

They ask, "Please help us circulate these everywhere that people are at risk."

https://crimethinc.com/zines/8-things-