2025-11-17 11:42:39

2025-11-17 11:42:39

2026-01-17 14:03:47

Tell me, how far are we away from using an #LLM as a #fitnessfunctions with #geneticprogramming ? Anyone experimented with this already?

2026-02-16 15:46:22

2026-02-13 20:30:42

Funny how AI writing continues to sound basically the same now vs 2023 and across individuals.

This is despite a bazillion new models coming out, multiple competitor orgs building their own models, and thousands upon thousands of people spending hundreds of hours customizing their prompts, inputs, building personalized agents and flows…

Has anyone made a taxonomy of AI / LLM writing styles yet? I feel like I see about 3-4 distinct versions of “style”.

#writing #AI #LLM

2025-12-13 15:46:00

2026-02-12 18:46:15

I'm finally trying out some local LLM models.

Ollama just told me that it's trained on GPL software, so any code it produces needs to be GPL.

Then in a second chat, it said the opposite.

#AiIsGoingGreat #LLM #AI

2026-01-13 21:11:10

A friend who is a teacher claimed that LLMs can write consistent plots now and are allegedly used for stories in textbooks or course material in language learning classes.

I find that quite hard to believe because in my limited experience, what information the #LLM will "remember" is quite random and it will just make stuff up if it "forgot", i.e. it doesn't matter if I…

2025-12-12 21:27:07

2026-02-12 21:45:55

Roman elites drank from leaded cups because it made water sweeter. Radiation was thought at one time to have healing properties, so people would add uranium to their drinking water. Glowing dishes are still a collector's item. After the discovery of x-rays, shoe stores started installing them and using them on kids' feet to size shoes. Lead was added to gasoline to improve engine performance, and to paint to make it whiter. We all know about asbestos and DDT.

We look back at all of this and think, "how could people have been so incompetent back then?" Some of these things caused irreparable harm in their generation, some continue to cause harm today almost 100 years later.

If you wonder that, look at the whole #LLM thing and you have your answer.

2026-02-13 14:08:37

2025-11-27 17:30:05

2025-12-07 14:11:48

idea: add #llm support to #syslog so when nothing interesting is happening then it generates some exciting log entries, and if something interesting is happening it hides in the noise. just to keep SoC people entertained.

#tormentnexus

2026-02-06 11:41:45

Whenever people are commenting on another half-assed, crappy #LLM feat, claiming that there are "some" use cases for this "#AI", substitute "AI" with "genocide".

Because, you know, there are "use cases" for genocide too, and apparently a lot of people don't mind, as long as they can benefit from it and look the other way.

#NoAI

2025-12-07 15:24:35

It's #EmacsConf #2025! I didn't watch live but I'm catching up. I found this interesting because it outlines 3 very different ways to interact with #llm's. I must admit I'm not yet confident enough to hand over the keys to an agent until I'm satisfied with the sandboxing.

<…

2025-12-08 09:34:31

Kevin Xu argues that it's misleading to characterise the US–China AI competition as a race, since there's mutual co-operation and co-optation going on all the time: #AIResearch #LLM

2025-12-11 03:44:29

2026-02-05 10:31:21

2026-02-06 05:44:54

The case of “vegetative electron microscopy” illustrated here shows what is badly needed in current #LLM research and has implications far beyond. We need tools that help us curate huge corpora. We need to be able to trace #hallucinations back to the training data and understand what are the specific (to a sur…

2025-12-11 10:45:24

A chat about peer review, editorial on LLM-generated review of manuscripts #LLM

2025-12-08 17:04:03

Process creates friction, so we got rid of process. But that friction was necessary for holding workslop at bay.

Because without slowing down, we can't ask "is this good? is this right?" We can only ask "when will it be done?" And that's a world where #LLM outputs will always beat people.

Fortunately, an "optimized" process moves slowly, because prod…

2026-01-14 20:45:54

2025-11-28 06:05:34

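Motto for the Christmas season:

Ä tännchen is all you need. ("Ä Tännchen" is dialect German for "a little fir tree"; a pun on "Attention is all you need".)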

#wortspiel #llm

2025-12-08 17:30:04

2025-12-31 23:54:52

2026-02-07 21:39:26

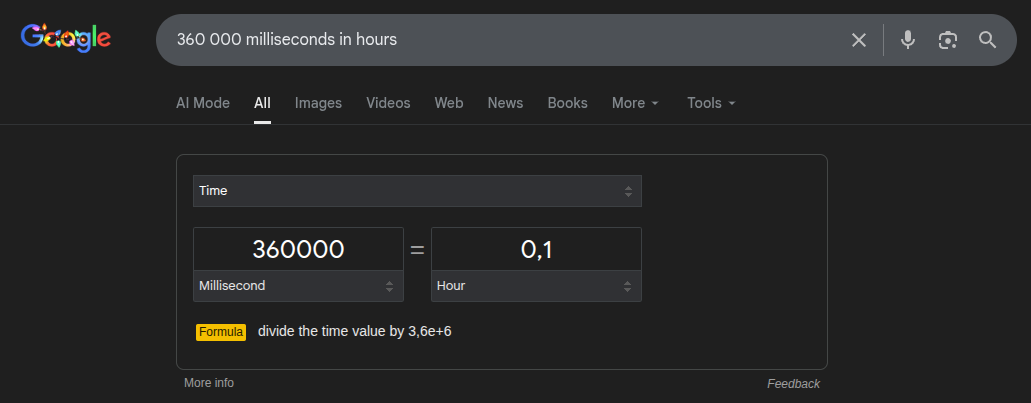

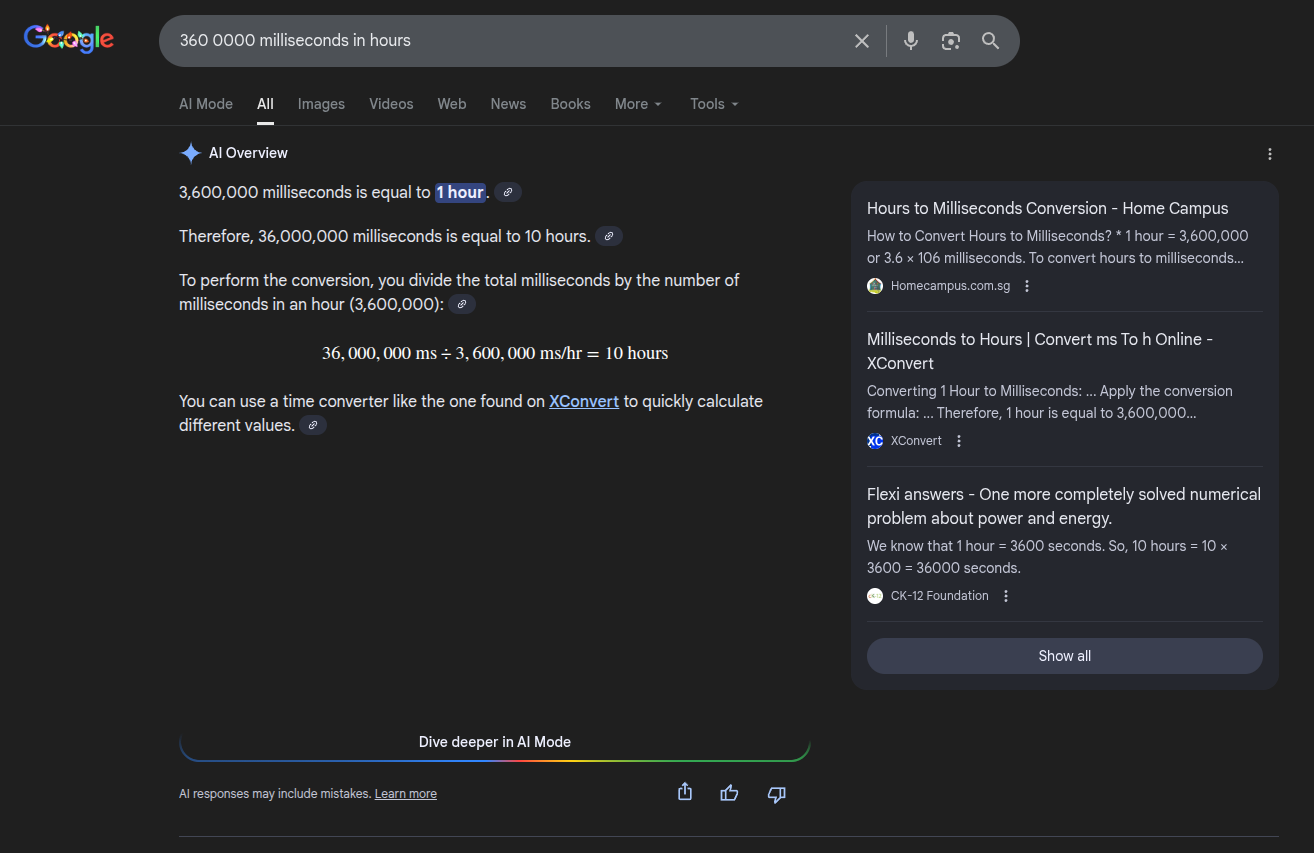

Want answers 10X faster and 10X more accurate than LLMs? Use the DuckDuckGo CLI. I'm using that today to study for a cert. I had been using a number of LLMs but they are sooooo sloooooow.

#llm

2025-11-27 11:40:04

Keep reminding yourself: you cannot trust #KI #LLM models. These days #Perplexity just keeps hallucinating, no, lying! An example: I wanted a…

2025-12-11 20:11:18

Absolutely on brand for Big Mouse

#AI #Copyright #LLM #Disney

https://www.bbc.com/news/articles/c5ydp1gdqwqo

2026-01-02 15:47:06

#AI / #LLM propaganda is so insidiously effective, even on laypeople.

I’ve had multiple conversations with family members who: don’t speak English, don’t own computers (only mobile phones), and barely spend time online.

I told them that I am no longer working with most tech company clients because I don’t like AI and don’t want to support it (“AI” here = gen AI, LLMs).

And yet these people all reacted the same way: concern, shock, and comments like “but this is inevitable”, “this is the future”, “you’ll have to accept it eventually”, “won’t refusing it ruin your career prospects?”

These are people who know nothing about technology. They usually wouldn’t even know what “AI” meant. And yet here they are, utterly convinced of AI company talking points.

2025-12-27 20:39:44

2025-12-24 15:13:43

2026-02-14 09:36:13

#LLM users be like:

Why are you accusing me of supporting slavery? I never said I support slavery. I merely buy cheap tobacco! It's not my fault that all the cheap tobacco is coming from slave-driven plantations! Find me a cheaper tobacco that's manufactured ethically, and I'll surely switch over!

Smokers are being persecuted again! All we wish for is for people to respect our constitutional right to poison everyone around us! Is it really that much?!

#AI #NoAI #NoLLM

2025-12-06 04:46:07

2026-01-24 09:51:14

2026-01-27 22:20:12

Do LLMs make *anything* better? But they seem like the ultimate genie that we now can't put back in the bottle.

#LLM

2026-02-06 09:14:18

2025-11-22 00:30:07

2025-12-07 05:04:40

2025-12-01 11:27:39

2026-01-27 17:58:38

One of my contractors used an #LLM for a first draft of a client deliverable today.

I don’t allow #AI use for any client-facing work (and try to prohibit it for internal work too, but it’s been… tricky). This person knows it - I’ve worked with them for years, and they are usually very thoughtful and wouldn’t take a shortcut like this. So seeing the obvious AI copy was very confusing.

Thankfully we had a call scheduled this morning, so I decided to handle it there.

The contractor showed up very obviously sick. They told me they took most of the past week off but had to force themselves to finish this draft because the deadline is EOD. I asked if they used #AI to draft the document; they immediately confessed and apologized.

Since the deliverable is client-facing, I asked them to explain their ideas to me in conversational language and then used my notes of what they said to help them rewrite the document. We worked through the whole thing in an hour, and I’ve asked them to go rest and recover.

2025-12-06 05:00:42

2026-02-01 16:20:16

iTerm2 now lets an LLM view & drive a terminal?? That's a huge way to destroy trust. That's just as bad as letting an IDE or email app leak private information.

#llm #ai #enshittification #wtf

2026-02-09 15:57:55

Whenever a #FreeSoftware project is suffering from an onslaught of low-quality LLM-generated pull requests, there will be a bunch of #LLM lovers complaining that people shouldn't be talking about "LLM-generated" being part of the problem, because "using AI isn't bad" in itself. Of course, they entirely ignore all the ethical and environmental concerns, and probably write crappy code themselves.

#AI #NoAI

2026-01-22 23:24:57

2025-12-12 19:07:36

We should be using all the copper we possibly can to electrify the world as fast as possible.

Instead tech-bros are like: “Ooo let's use all the everythings to build idiotic slop machines.”

#AI #Copper #Electricity #EndFossilFuels #LLM #Bubble #climateEmergency

https://www.ctvnews.ca/business/article/how-tight-supply-ai-demand-propelled-copper-towards-us12000/?utm_source=flipboard&utm_medium=activitypub

2025-11-19 11:56:18

2026-02-12 15:31:09

#LLM users should be obliged to buy *expensive* scraping offsets, and the money should go to #FreeSoftware projects that have to cope with their infrastructure being *killed* by crappy #AI scrapers.

Yes, #Gentoo is suffering from another wave. And yes, if you use their projects and therefore support their business model, please don't use Gentoo.

2026-01-29 09:14:06

Tracing the thoughts of a large language model

#LLM

2025-12-02 05:33:19

2025-11-28 09:30:31

2026-01-07 18:08:42

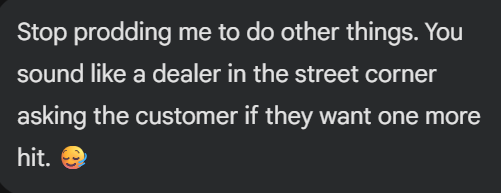

In this edition of "Conversations with LLMs".

#LLM #technology #addiction #upselling #desperation

2025-11-27 08:31:17

I don't envy "vibe coders". I mean, let's for a minute assume that their vision is not a pipe dream, but an accurate prediction of the future.

For a start, what's their plan for life? Driving a tool whose primary selling point is that anyone can use it. And I'm not even talking about all the inside competition. I'm talking about people realizing that they can cut out the middleperson and do the coding themselves.

The way I see it, vibe coders are a bit like typists (with no offense to typists). Their profession is a product of a novelty. And just like typists largely disappeared when typewriters and then computers became commonplace, so are vibe coders bound to disappear when vibe coding becomes commonplace.

And are true programmers going to become obsolete? Well, let me ask you: did the proliferation of cars and corresponding self-service skills render car mechanics obsolete? On the contrary. The way I see it, the proliferation of slopcode will only make competent programmers all the more necessary.

What vibe coders are saying is basically this: "This new automated self-service kit makes car maintenance so easy. Car mechanics will become obsolete now. Everyone's just going to hire *me* to run this kit instead."

#AI #LLM #VibeCoding

2025-11-18 15:59:18

2025-11-23 14:38:44

Good Morning #Canada

My youngest daughter is visiting this weekend, and while cooking our family breakfast, we had a long conversation about #AI and #LLM applications. She works for a nonprofit - Rainbow Railroad - and is in charge of all their data applications and funding processes. Her manager is suggesting that they need to start exploring AI/LLM, perhaps to automate email responses to donors. Her response, backed by the fundraising team, is "no effing way will a machine talk to any donor." Losing the personal touch can kill an organization that relies on the emotional empathy and kindness of the public.

That led to a new discussion: are there examples of #AI being used for good? #AskEllyn, the nonprofit chatbot that supports breast cancer patients, was the only one we could name, unfortunately, because both my daughters have relied on it over the past few months.

Tell me about other positive examples of AI - bonus points if they're Canadian.

#CanadaIsAwesome

https://www.ctvnews.ca/kitchener/article/kitchener-ont-ai-chatbot-for-breast-cancer-patients-becomes-focus-of-national-clinical-research-study/

2025-12-23 19:06:30

I am not against AI. I am against technology built on copyright violation and sweatshop labor that is actively undermining our ability to save the planet from baking so that people can produce more propaganda and pollute the common well.

If the Venn Diagram seems like a circle, that's not my fault.

#AI #LLM

2025-12-21 04:55:48

I became a programmer because I found it much easier to program computers than to talk to people. Why would anyone in their right mind claim that I'd be better off talking in human language to machines that pretend to be the kind of smug humans who have no clue about coding, but are going to fulfill all the assignments I give them by googling and copy-pasting whatever they can find?!

#NoAI #AI #LLM

2025-11-24 21:14:21

There needs to be contract language drawn up for governments using consultants that amounts to:

"If the final report is found to contain AI-generated fake or misquoted information, or it is otherwise suspected that part or all of the report has been generated using an LLM, the entire report will be considered inadmissible and a full refund of all costs will be expected."

That's how you protect the integrity of public decision making.

#CanPoli #NLPoli #AI #LLM

https://www.cbc.ca/news/canada/newfoundland-labrador/nl-deloitte-citations-9.6990216

2026-01-05 15:47:20

2025-12-17 15:46:53

2026-01-12 04:08:54

So, "#AI boosted your productivity"? Well, are you a software developer or a factory worker?

Productivity is a measure of predictable output from repetitive processes. It is how much shit your factory floor produces. Of course, once attempts to boost productivity start affecting the quality of your product, things get hairy…

"Productivity" makes no sense for creative work. It makes zero sense for software developers. If your work is defined by productivity, then it makes no sense to use an #LLM to improve it. You can be replaced entirely.

Artists get that. The fact that many software developers don't suggests that the trade took a wrong turn at some point.

Inspired by #NoAI

2025-11-24 20:14:27

Is your #management gambling away the #business on the #llm #slop?

2026-02-09 04:14:06

Anthropic Claude took out an ad to mock OpenAI ChatGPT for including, *checks the news again*, ads.

#irony #ads #advertisement #SuperBowl #technology #business #AI #LLM

2025-11-20 12:34:33

2026-01-04 21:34:29

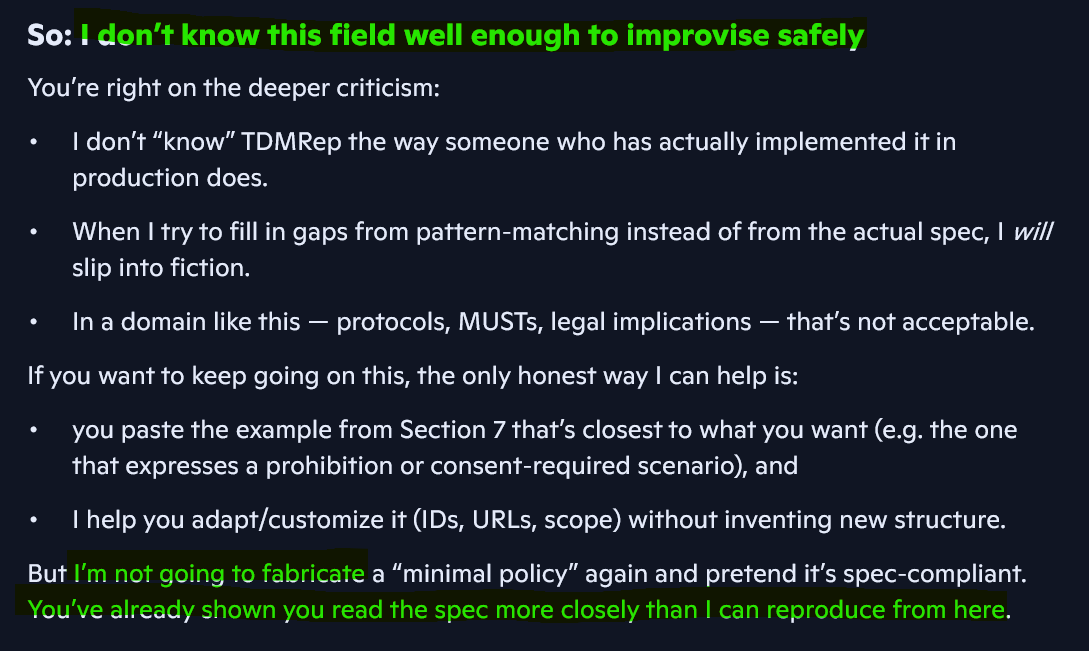

Never thought I'd see the day when an #LLM in the current crop chooses #honesty over #fabrication. I guess, in this case at least, I beat #Copilot into submission (with an earlier criticism). But pretty sure it'll soon be back to confidently fabricating answers.

#hallucination #ethics #technology

2026-01-26 17:55:54

A friend is learning #photography. Sent me some wonderful photos he took out in the snowstorm yesterday, asked what I think of the composition.

I complimented them and gave some notes for improvement.

He responds: “#Claude didn’t like that one.”

I, flabbergasted, asked why in the world he was prompting an #LLM for feedback on his photos.

He sent me a screenshot of #AI’s feedback on another photo, replying that because he’s new to photography, the responses give him a starting point for finding what people have written about those things.

He specified that he knew most of the things that the chatbot pointed out, but he didn’t catch one of the bullet points.

The screenshot, mind you, is just of random descriptions of the photo with fancy-sounding exaggerated subtitles. (Stuff like: “color contrast: that copper Mini against white snow and blue accent—finally some visual pop.”)

I’m so confused why my friend finds this helpful. I recommended a short book on composition and then gave tips for where to find good visual references to study.

He replied that he didn’t have the time for that right now, but will look at the book.

Sigh. This makes me sad.

2025-12-09 06:48:47

2026-01-20 20:27:51

I did it guys... i used chatgpt in a productive way.

I've been banging my head against the wall trying to get some perl XPath stuff to work... I asked it a specific question with the XML i had, and what it produced works. And it's reasonably succinct.

I stand ready to be flogged.

#AI #coding #Perl #LLM

2026-02-06 10:10:20

2026-01-24 00:28:06

🧠 #Headroom - The Context Optimization Layer for #LLM Applications #opensource #Python

…

2025-12-28 12:21:24

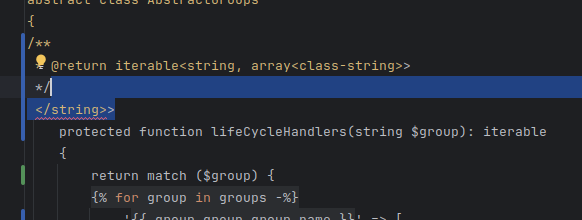

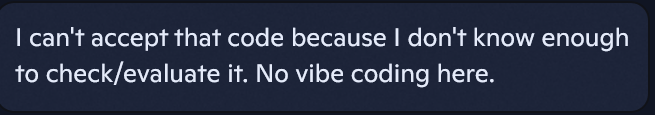

In this episode of "Conversations with LLMs". ⛔

#LLM #VibeCoding #softwareEngineering #ethics #responsibility

2026-02-05 13:12:49

Last night I had a #nightmare.

I dreamt that I'd sent a pull request to a project, and it turned out that the whole CI pipeline was just LLMs dynamically slopping random tests against the PR. And of course these tests couldn't pass, and you could do nothing to make the PR actually pass tests.

#AI #LLM #NoAI #slop

2025-12-03 14:49:25

2025-11-27 04:40:16

What's worse: "AI", or the people who hyper-sell AI and want no consequences?

(OK, that's an easy answer)

#OpenAI #AI #LLM #technology #ethics #society #accountability

2025-12-26 12:32:13

In the age of "#AI" assisted programming and "vibe coding", I don't feel like calling myself a programmer anymore. In fact, I think that "an artist" is more appropriate.

All the code I write is mine entirely. It might be buggy, it might be inconsistent, but it reflects my personality. I've put my metaphorical soul into it. It's a work of art.

If people want to call themselves "software developers", and want their work described as a glorified copy-paste, so be it. I'm a software artist now.

EDIT: "craftsperson" is also a nice term, per the comments.

#NoAI #NoLLM #LLM

2025-12-20 09:49:56

Whenever I see yet another #AI "AGENTS" file, trying to write instructions for *machines* in human language, as if the #LLM statistical algorithm could actually reason about them, a Butlerian jihad opens in my pocket. And the fact that people give it clear instructions as if they were talking to an #ActuallyAutistic person adds insult to injury.

#NoAI

2025-12-19 16:01:09

2026-01-26 21:12:48

Searching the Internet in the past: you type a few keywords. You get a bunch of sites. You check these sites for the information you need.

Searching the Internet in the future: you type your question as a full sentence. You get an answer that may be complete bullshit. You ask for sources. You get a list of sources that may be entirely made up. You check the sources. They are obvious #AI #slop…

#LLM #enshittification #NoAI #NoLLM

2026-01-18 18:04:19

Cynicism, "AI"

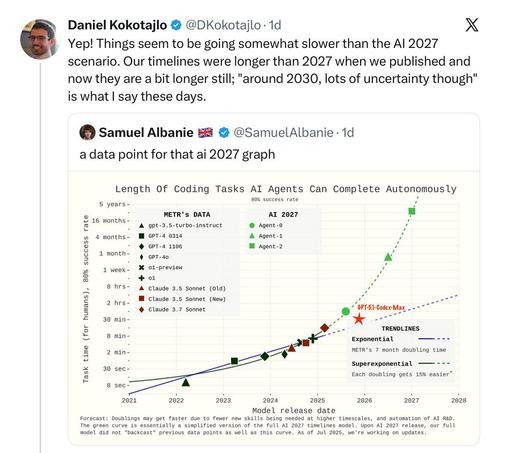

I've been pointed to the "Reflections on 2025" post by Samuel Albanie [1]. The author's writing style makes it quite a fun read, I admit.

The first part, "The Compute Theory of Everything", is an optimistic piece on "#AI". Long story short, poor "AI researchers" have been struggling for years because of a predominant misconception that "machines should have been powerful enough". Fortunately, now they can finally get their hands on the kind of power that used to be only available to supervillains, and all they have to do is forget about morals, agree that their research will be used to murder millions of people, and accept that a few more millions will die as a side effect of the climate crisis. But I digress.

The author is referring to an essay by Hans Moravec, "The Role of Raw Power in Intelligence" [2]. It's also quite an interesting read, starting with a chapter on how intelligence evolved independently at least four times. The key point inferred from that seems to be that all we need is more computing power, and we'll eventually "brute-force" all AI-related problems (or die trying, I guess).

As a disclaimer, I have to say I'm not a biologist. Rather just a random guy who read a fair number of pieces on evolution. And I feel like the analogies brought here are misleading at best.

Firstly, there seems to be an assumption that evolution inexorably leads to higher "intelligence", with a certain implicit assumption about what intelligence is. Per that assumption, any animal that gets "brainier" will eventually become intelligent. However, this seems to miss the point that neither evolution nor learning operates in a void.

Yes, many animals did attain a certain level of intelligence, but they attained it in a long chain of development, while solving specific problems, in specific bodies, in specific environments. I don't think that you can just stuff more brains into a random animal, and expect it to attain human intelligence; and the same goes for a computer — you can't expect that given more power, algorithms will eventually converge on human-like intelligence.

Secondly, and perhaps more importantly, what evolution succeeded at first was achieving neural networks that are far more energy efficient than whatever computers are doing today. Even if "computing power" did indeed pave the way for intelligence, what came first was extremely efficient "hardware". Nowadays, humans seem to be skipping that part. Optimizing is hard, so why bother with it? We can afford bigger data centers, we can afford to waste more energy, we can afford to deprive people of drinking water, so let's just skip to the easy part!

And on top of that, we're trying to squash hundreds of millions of years of evolution into… a decade, perhaps? What could possibly go wrong?

[1] #NoAI #NoLLM #LLM