2026-02-15 20:53:36

2026-02-17 06:42:41

Finally put together type stubs for an old (last release 2017!) Python library I've been depending on for years. And now I'm wondering whether it would have been easier to just contribute types to the library directly. I thought it was abandoned, but then the maintainer responded on an unrelated ticket.

(Though there's no CI infra actually working anymore. Makes testing contributions painful. Wonder whether building that first would be worthwhile & welcome...)

#python #packaging

2026-01-13 16:57:16

Only $1.5mil?

#Python #Anthropic

2026-02-16 14:57:12

I made a utility to bulk-upload calendar entries to a #CalDAV server from a JSON file:

https://pypi.org/project/caldav-event-pusher/

2025-12-11 06:07:02

#Steady

How can inverter data (#Wechselrichterdaten) from the APsystems EZ1 be stored locally?

For statistical analysis, all data has to be stored continuously. How to do that with the help of #Python

2025-12-10 16:58:59

I rewrote a data analysis pipeline, moving it from #python to #julialang. I am now in love with the threading support in Julia.

The task is very parallelizable but each thread needs random read access to a tens-of-GB dataset. In Python (with multiprocessing, shared stores, etc) data bookkeeping was a nightmar…

2025-12-03 05:05:25

BREAKING: #CPython 3.13.10 and 3.14.1 changed the multiprocessing message format in a patch release. As a result, programs using multiprocessing may break randomly if they are running while #Python is upgraded (i.e. they need restarting).

But apparently it's not a big deal, since all the cool kids are running Python in containers, and nobody is using Python for system tools anymore. Everything has been RIIR-ed and Python is only omnipresent in some backwaters like #Gentoo.

https://github.com/python/cpython/issues/142206

2026-01-02 10:53:39

I just found a Starter Pack called Women in #Python: https://fedidevs.com/s/NjY3/

Created by Python Core Developer @…

2025-12-30 09:00:09

4 months of intensive #Python: my feedback on the language

https://mcorbin.fr/posts/2025-12-26-python-langage/

2025-12-12 13:37:50

2026-01-09 05:43:08

Santa #Python came super early in 2026!

With build 1.4.0, it is now possible to easily dump effective package metadata!

So getting the version of a package in the current directory is now as easy as `pipx run build --metadata 2>/dev/null | jq -r .version`.

This is NOT like parsing pyproject.toml or whatever. It builds the package and looks at the result. So it works even with…
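The same lookup can be scripted from Python instead of `jq`. This is a minimal sketch, assuming only what the one-liner above implies: that `build --metadata` prints a JSON object with a `version` key on stdout. The function names `version_from_metadata` and `project_version` are hypothetical helpers, not part of build.

```python
import json
import subprocess


def version_from_metadata(metadata_json: str) -> str:
    """Extract the version from the JSON text printed by `build --metadata`.

    Assumption: the output is a JSON object with a "version" key,
    which is what the `jq -r .version` filter relies on.
    """
    return json.loads(metadata_json)["version"]


def project_version(project_dir: str = ".") -> str:
    """Run the command from the post in project_dir and return the version.

    stderr is captured separately, so build logs do not pollute the JSON
    (the shell one-liner discards them with 2>/dev/null).
    """
    result = subprocess.run(
        ["pipx", "run", "build", "--metadata"],
        cwd=project_dir,
        check=True,
        capture_output=True,
        text=True,
    )
    return version_from_metadata(result.stdout)
```

Calling `project_version()` needs pipx and build 1.4.0 or newer; `version_from_metadata` can be tried on any JSON string on its own.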

2025-11-17 22:11:27

2026-01-15 15:58:32

> Concretely, we expect to add ML-KEM and ML-DSA APIs that are only available with #LibreSSL/#BoringSSL/AWS-LC, and not with #OpenSSL.

(from #Cryptography and #Qt simultaneously, right? (Qt rejected LibreSSL support.)

#Python

2025-11-30 14:02:44

2025-11-19 14:21:15

Any #Python newbies out there? (Or experts that need to teach Python)

Would you have a specific online tutorial to recommend for someone who wants to learn Python without any prior programming experience? One that also explains how to install it?

I was thinking of something like this:

2025-12-21 09:30:04

2025-12-29 19:22:52

2026-01-05 15:52:34

how did i not know about tox’s version range syntax!? (i.e., 3{9-14} == 3{9,10,11,12,13,14}) 😍

(added in tox 4.25.0 on 2025-03-27) #python
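To make the equivalence concrete, here is a small sketch of what the range expands to. It is an illustration only, not tox's own implementation; `expand_range` is a hypothetical helper and it handles just a single closed, ascending `{a-b}` range.

```python
import re

# Matches one closed numeric range such as "{9-14}".
_RANGE = re.compile(r"\{(\d+)-(\d+)\}")


def expand_range(factor: str) -> list:
    """Expand one '{a-b}' range in a tox-style factor string.

    Strings without a range are returned unchanged (as a one-item list).
    """
    match = _RANGE.search(factor)
    if match is None:
        return [factor]
    start, end = int(match.group(1)), int(match.group(2))
    prefix, suffix = factor[: match.start()], factor[match.end():]
    return [f"{prefix}{n}{suffix}" for n in range(start, end + 1)]


print(expand_range("3{9-14}"))
```

So `3{9-14}` yields the same six factors as writing `3{9,10,11,12,13,14}` by hand.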

2025-11-19 22:55:05

My very first experience with Python was generating programmatic art using the Turtle module.

Don’t let anyone tell you coding is only for pros, it can be an incredibly fun and liberating experience.

#PythonIsForEveryone #Python

2026-01-07 07:12:33

2026-01-23 17:45:15

In #Python, you could write sensible and transparent code, like this:

if (curNode):

    curNode = curNode.next

But if you prefer something that is functionally identical, but harder to read, try this:

curNode and (curNode := curNode.next)

Follow me for more great tips on how to make life hell for the next person working with your code (which could be you).
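For anyone who wants to check that the two forms really behave the same, here is a small sketch. The `Node` class and the two function names are made up for the demonstration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """Minimal singly linked list node (hypothetical, for the demo only)."""
    value: int
    next: Optional["Node"] = None


def advance_plain(cur_node):
    # The readable version: step forward only if there is a node.
    if cur_node:
        cur_node = cur_node.next
    return cur_node


def advance_walrus(cur_node):
    # The "clever" version: `and` short-circuits on None, otherwise
    # the walrus operator rebinds cur_node to the next node.
    cur_node and (cur_node := cur_node.next)
    return cur_node
```

Both functions return the next node for a non-empty input and `None` for `None`, so the only difference is how long the next reader stares at the line.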

2025-11-21 06:28:44

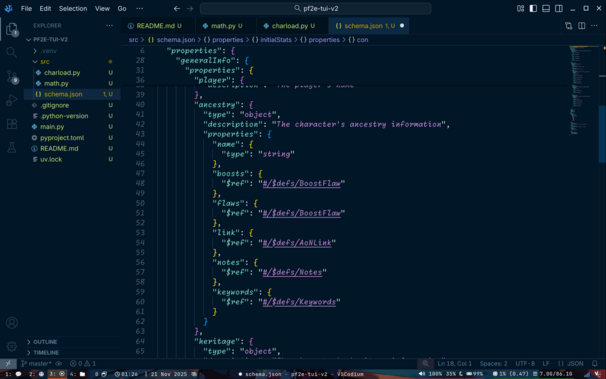

The #Pathfinder2E character data structure is in progress, figured a good ol'-fashioned JSON schema would be the way to go. Still, progress continues.

#python #characterSheet

2026-01-19 11:00:01

Video tutorials for modern ideas and open source tools. #python

2025-12-15 04:47:34

Totally normal #Python upstream attitude:

1. Ignore a reply on a bug report for 3 years.

2. Install a #StaleBot in the middle of the night.

3. 7 minutes after the bug is marked stale, claim that you "never heard back on this" and that "the issue was somewhere downstream", without even checking another linked issue.

#Matplotlib

2026-01-21 00:19:52

📋 #SkillSeekers - #Python tool automatically transforms documentation, #GitHub repos & PDFs into production-ready AI skills

2025-12-31 09:19:35

2026-02-14 02:42:36

2025-12-23 06:48:38

2025-12-12 05:17:12

2026-01-02 04:23:29

Yeah, why not neglect all the good recommendations in the #Python ecosystem, and instead fork your own C extension package, force people to build it with #ZigLang (it's still C), add an unconditional dependency on that, and on top of that, refuse to publish wheels, "allowing for optimised compilation according to your machine's specific architecture and capabilities, instead of some (low performance) common denominator."

Fortunately, looks like #Gentoo can just ignore all the fancy crap and compile it with GCC.

https://pypi.org/project/ruamel.yaml.clibz/

[UPDATE: didn't last long: https://sourceforge.net/p/ruamel-yaml/code/ci/6846cf136e775ed0052cdef6ff02330269c86011/]

2026-01-22 10:16:03

MapToPoster is a beautiful utility to create minimalist posters of the (water)ways of a city.

If you have uv installed, it takes a moment to get going

#python

2026-01-03 17:24:22

2025-11-29 10:11:58

#Python "do not pin your new dependencies to a version that's already obsolete by the time you release your package" challenge.

This one also has difficulty: impossible.

#packaging

2026-01-11 22:35:27

Binary partitioning with k-d trees is silly amazing. And with OSM data in radians and pretending the planet is a perfect sphere, scikit-learn's BallTree works wonderfully for quickly finding things nearby some reference point.

Like how far away the nearest bicycle_parking is for every building in a city.

#IMadeAThing #python #osm #overpassql
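For reference, this is the distance the "perfect sphere" assumption boils down to. The sketch below is a plain brute-force stand-in using only the standard library, not the BallTree itself; scikit-learn's `BallTree` with `metric="haversine"` answers the same nearest-neighbour question much faster and, like this code, works on `[lat, lon]` pairs in radians. The function names and the Earth radius constant are assumptions chosen for illustration.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; assumes a perfect sphere


def haversine_rad(a, b):
    """Great-circle distance in radians between two (lat, lon) points.

    Both points must already be in radians.
    """
    lat1, lon1 = a
    lat2, lon2 = b
    h = (
        math.sin((lat2 - lat1) / 2) ** 2
        + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2
    )
    return 2 * math.asin(math.sqrt(h))


def nearest_km(reference, candidates):
    """Return (index, distance_km) of the candidate closest to reference.

    Brute force: checks every candidate, which is exactly the work
    a tree structure avoids on large datasets.
    """
    best = min(
        range(len(candidates)),
        key=lambda i: haversine_rad(reference, candidates[i]),
    )
    return best, haversine_rad(reference, candidates[best]) * EARTH_RADIUS_KM
```

With `reference` as a building and `candidates` as the bicycle_parking nodes, `nearest_km` gives the per-building answer; the tree matters once there are many buildings and many candidates.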

2025-12-29 17:48:33

When you notice twine uploading a 400M sdist for a tiny #Python package, you know something went wrong.

2026-01-22 08:35:08

What’s your go-to #python or #rstats tool(chain) for splitting #German compounds? I’ve tried a few but was not really satisfied. Maybe I missed something. #NLP #linguistics

2025-12-20 07:40:05

2025-11-26 12:40:38

📦 #Copyparty - Turn Any Device Into a Feature-Rich File Server #opensource #selfhosted #Python

2026-02-01 15:34:36

Ah yes, brew upgraded some ssl-related .so file and broke the python binaries linked against it. Again. So many venvs now lie in ruins.

There has got to be a better way.

(Why do pyenv-built pythons seem to habitually get built using the brew-provided libraries? There's no way for brew to know about that dependency.)

#brew #python #macdev #complaining #askfedi

2026-02-08 19:27:45

I hear that #Python folk are going to enjoy their Monday.

#setuptools removed pkg_resources.

Thanks to Eli Schwartz for the advance warning. We're going to mask it in #Gentoo.

2026-01-24 00:28:06

🧠 #Headroom - The Context Optimization Layer for #LLM Applications #opensource #Python

…

2025-12-09 06:48:47

2026-01-07 04:46:42

Let's get this straight: it is entirely normal for an #OpenSource project to accumulate bug reports over time. They're not a thing to be ashamed of.

On the contrary, if you see a nontrivial project with a very small number of bug reports, it usually means one of the following:

a. you've hit a malicious fake,

b. the project is very young and it doesn't have many users (so it's likely buggy),

c. the project is actively shoving issues under the carpet.

None of that is a good sign. You don't want to use that (except for b., if you're ready to be the beta tester).

#FreeSoftware #Gentoo #GitHub #Python

2025-12-20 11:48:43

#Sphinx joined the list of packages dropping #Python 3.11 (and therefore #PyPy) support. Of course, we could just go through the effort of dropping it from the respective packages in #Gentoo, given it's not technically that common… but honestly, at this point I have zero motivation to put in the extra effort for this, just to learn that next month some core package starts requiring Python 3.12.

So, would anyone really mind if I removed Python 3.11 and PyPy support completely from Gentoo packages?

2026-01-07 03:55:58

How to absolutely *not* do #OpenSource: require people to commit to work on other issues with your project in order to file bugs. So, sorry, #Typer, I won't be filing bugs. You figure out how you messed up your release yourself.

Also, please don't use #FastAPI. They are clearly bothered by the fact that people dare use their projects and waste their precious time with support requests.

#Python #FreeSoftware #Gentoo

2025-11-28 02:53:54

"Do not introduce #NIH #RustLang dependencies in your #Python package when there's no performance, security or any other benefit to it, and it just limits portability and creates more work for packagers" challenge.

Difficulty: impossible.

#packaging

2026-01-27 09:49:48