2025-07-13 22:33:29

Two wildfires are burning at or near the North Rim, known as the White Sage Fire and the Dragon Bravo Fire. The latter is the one that destroyed the lodge and other structures. The park initially was managing it as a controlled burn but then shifted to suppression as it rapidly grew to 7.8 square miles (20 square kilometers) because of hot temperatures, low humidity and wind, fire officials said.

No injuries have been reported.

Millions of peo…

2025-09-13 23:43:29

TL;DR: what if nationalism, not anarchy, is futile?

Since I had the pleasure of seeing the "what would anarchists do against a warlord?" argument again in my timeline, I'll present again my extremely simple proposed solution:

Convince the followers of the warlord that they're better off joining you in freedom, then kill or exile the warlord once they're alone or vastly outnumbered.

Remember that even in our own historical moment where nothing close to large-scale free society has existed in living memory, the warlord's promise of "help me oppress others and you'll be richly rewarded" is a lie that many understand is historically a bad bet. Many, many people currently take that bet, for a variety of reasons, and they're enough to coerce through fear an even larger number of others. But although we imagine, just as the medieval peasants might have imagined of monarchy, that such a structure is both the natural order of things and much too strong to possibly fail, in reality it takes an enormous amount of energy, coordination, and luck for these structures to persist! Nations crumble every day, and none has survived more than a couple *hundred* years, compared to pre-nation societies which persisted for *tens of thousands of years* if not more. In this bubbling froth of hierarchies, the notion that hierarchy is inevitable is certainly popular, but since there's clearly a bit of an ulterior motive to make (and teach) that claim, I'm not sure we should trust it.

So what I believe could form the preconditions for future anarchist societies to avoid the "warlord problem" is merely: a widespread common-sense belief that letting anyone else have authority over you is morally suspect. Given such a belief, a warlord will have a hard time building any following at all, and their opponents will have an easy time getting their supporters to defect. In fact, we're already partway there, relative to the situation a couple hundred years ago. At that time, someone could claim "you need to obey my orders and fight and die for me because the Queen was my mother" and that was actually a quite successful strategy. Nowadays, this strategy is only still working in a few isolated places, and the idea that one could *start a new monarchy* or even resurrect a defunct one seems absurd. So why can't that same transformation from "this is just how the world works" to "haha, how did anyone ever believe *that*?" also happen to nationalism in general? I don't see an obvious reason why not.

Now I think one popular counterargument to this is: if you think non-state societies can win out with these tactics, why didn't they work for American tribes in the face of the European colonizers? (Or insert your favorite example of colonialism here.) I think I can imagine a variety of reasons, from the fact that many of those societies didn't try this tactic (and/or were hierarchical themselves), to the impacts of disease weakening those societies pre-contact, to the fact that with much-greater communication and education possibilities it might work better now, to the fact that most of those tribes are *still* around, and a future in which they persist longer than the colonist ideologies actually seems likely to me, despite the fact that so much cultural destruction has taken place. In fact, if the modern day descendants of the colonized tribes sow the seeds of a future society free of colonialism, that's the ultimate demonstration of the futility of hierarchical domination (I just read "Theory of Water" by Leanne Betasamosake Simpson).

I guess the TL;DR on this is: what if nationalism is actually as futile as monarchy, and we're just unfortunately living in the brief period during which it is ascendant?

2025-09-05 14:38:01

🔥 Grand Canyon’s Dragon Bravo megafire shows the growing wildfire threat to water systems

https://theconversation.com/grand-canyons-dragon-bravo-megafire-shows-the-growing-wildfire-threat-to-water-systems-263104…

2025-07-22 16:04:54

In the spring, I gave the participants of the fine arts pedagogy course at INN a glimpse into what I’ll be researching this fall. While the connections have developed since then, I still like the map and refer to it regularly.

A brief overview – fredsnotes https://filmschoolteacher.info…

2025-06-28 08:13:00

Abuse in Buddhism: The Law of Silence https://openbuddhism.org/library/videos/2022/elodie-emery-wandrille-lanos-present-buddhism-the-unspeakable-truth/

2025-07-26 10:42:34

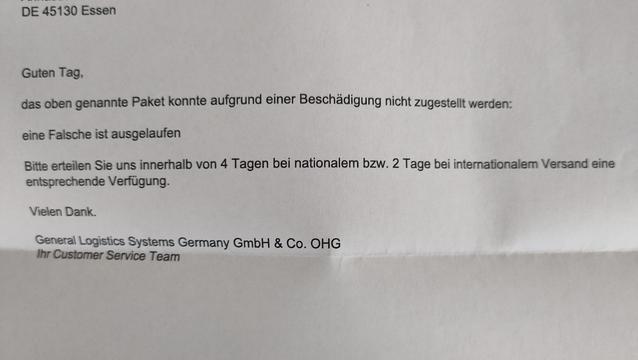

I ordered 6 bottles of syrup from a German seller via Amazon.

The seller sent the wrong product.

I sent it back in the same packaging.

Now GLS has written me a letter saying they couldn't deliver it because one bottle broke. And they want a "Verfügung" (an instruction on what to do with the parcel).

WTH? How am I supposed to issue a "Verfügung"? Does that mean something different in Germany than in Switzerland?

2025-09-04 08:06:01

Non-Lagrangian Construction of Anyons via Flux Quantization in Cohomotopy

Hisham Sati, Urs Schreiber

https://arxiv.org/abs/2509.02577 https://arxiv.org/pdf…

2025-09-05 09:08:31

Hamiltonian Systems as an Example of Invariant Measure

Daniel Ferreira Lopes

https://arxiv.org/abs/2509.04248 https://arxiv.org/pdf/2509.04248

2025-08-17 19:58:48

Dynamic #EV charging prices, following the spot market, and available to all - including direct payment with bank or credit card, without ‘fees’. A big ‘Bravo!’ for enercity and EV-Pay for showing what is possible in Germany, despite all the ‘Eichrecht’ hurdles.

2025-06-30 08:28:20

The First Compute Arms Race: the Early History of Numerical Weather Prediction

Charles Yang

https://arxiv.org/abs/2506.21816 https://…