2025-11-23 07:01:39

An in-depth look at the humanoid robotics industry, which currently relies heavily on hype to rally interest and investors, as AI fuels the humanoid boom (James Vincent/Harper's)

https://harpers.org/archive/2025/12/kicking-robots-james-vincent-humanoids/

2026-01-22 05:48:40

🇺🇦 #NowPlaying on KEXP's #AstralPlane

Vanishing Twin:

🎵 The Conservation of Energy

#VanishingTwin

https://v-twin.bandcamp.com/track/the-conservation-of-energy

https://open.spotify.com/track/5uNXiMHALYL5LPhlUsczpq

2025-12-22 17:15:20

Daniels was looking at just 10 easily quantifiable body measurements. How many important dimensions of variation are there in a human mind? How hard are they to measure? How likely is it that even one single “average” mind exists on Earth?? The odds are vanishingly small.

[Napkin sketch: assume there are a paltry 20 dimensions of brain variation. (Surely that’s low.) Assume there’s a 1 in 5 chance of being completely “normal” in each. (Surely that’s high.) Even that absurd hypothetical gives a 1 in 11,490 chance that a •single• completely average mind exists in a population of 8.3 billion.]
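For anyone who wants to check the napkin math, here is a minimal sketch in Python. It assumes the 20 dimensions are independent, which the post does not state, and the function and parameter names are made up for illustration.

```python
import math

def average_mind_odds(dimensions=20, normal_denominator=5, population=8.3e9):
    """Return (expected_count, p_at_least_one) for the napkin sketch."""
    # Chance that one person is "normal" in every dimension,
    # ASSUMING the dimensions are independent (not stated in the post).
    p_person = 1 / normal_denominator ** dimensions
    # Expected number of fully average minds in the whole population.
    expected_count = population * p_person
    # Chance that at least one exists: 1 - (1 - p)^N, computed stably for tiny p.
    p_at_least_one = -math.expm1(population * math.log1p(-p_person))
    return expected_count, p_at_least_one

expected_count, p_at_least_one = average_mind_odds()
print(f"about 1 in {1 / expected_count:,.0f}")
```

Both the expected count and the at-least-one probability land within a whisker of the 1 in 11,490 figure, because the per-person probability is so tiny that the two are nearly identical.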

5/

2026-02-19 09:25:57

Q&A with Walt Handelsman, who retired at the end of 2025 as an editorial cartoonist at New Orleans' Times-Picayune, on the changes in a dwindling profession (Rob Tornoe/Editor and Publisher)

https://www.editorandpublisher.com/stories/the-vanishing…

2025-12-21 20:09:18

Mage session:

The un-awakened teenager, whose brother had been abducted and imprisoned by their parents, has just spotted them for the first time since her brother vanished. She runs at them, yelling a battle cry at the top of her lungs.

Storyteller: »Is somebody trying to stop her?«

The Euthanatoi social worker: »Can I possess her with a spirit of vengeance?«

#rpg

2026-01-22 22:02:25

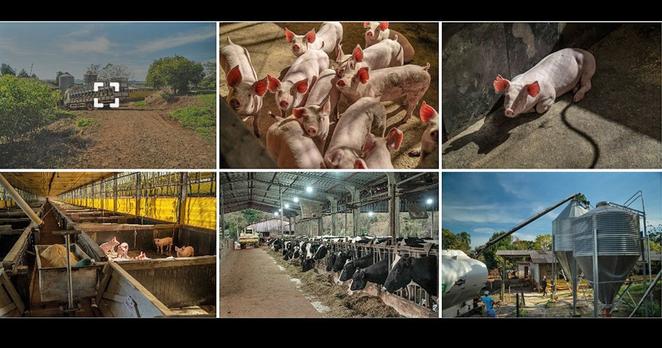

Brazil’s Farmed Animals “Meat Machine” Exposed in Photo Report https://veganfta.com/articles/2025/12/25/brazils-farmed-animals-meat-machine-exposed-in-photo-report/?utm_source=Mastodon

2026-01-22 11:25:13

Sonnet 063 - LXIII

Against my love shall be as I am now,

With Time's injurious hand crush'd and o'erworn;

When hours have drain'd his blood and fill'd his brow

With lines and wrinkles; when his youthful morn

Hath travell'd on to age's steepy night;

And all those beauties whereof now he's king

Are vanishing, or vanished out of sight,

Stealing away the treasure of his spring;

For such a time do I now fortify …

2025-12-23 13:07:04

Click Here: Erased: Silencing a kindergarten

Episode webpage: https://play.prx.org/listen?ge=prx_8376_f7cbcbf4-bf50-49a6-856d-3daf4ba10d91&uf=https://publicfeeds.net/f/8376/clickhere

2025-12-22 09:08:09

A train is stopped at Saint-Mandé, heading toward Château de Vincennes, because of a problem closing the platform doors.

🤖 22/12 10:08

2026-02-21 06:28:10

Service is resuming but remains disrupted between Nation and Château de Vincennes after an alarm signal was activated between Nation and Château de Vincennes.

🤖 21/02 07:28