Believe it or not, I've never actually submitted an academic paper for peer-reviewed publication before, and I'm a lil nail-bitey.

Is there anyone here who's active in ACL (the Association for Computational Linguistics) who wants to swap papers with me for a deep critique of methods/code/conclusions? I have a fun classic ML model that will entertain you, I swear.

Nominations sought for the 2026 Rising Stars in Computational & Data Sciences Workshop to be held April 7-8, 2026, at the Santa Fe Institute in #SantaFe, NM. #NewMexico

h…

Our department at the University of #Lausanne, Switzerland, has an opening for a junior lecturer (#postdoc) in computational humanities: 80%, up to 5 years, starting Feb 1, 2026.

Knowledge of French (~ B2 level) is required for teaching.

Check out the official job posting (in French) …

Our department at the University of #Lausanne, Switzerland, has an opening for a junior lecturer (#postdoc) in computational humanities: 80%, up to 5 years, starting Feb 1, 2026.

Knowledge of French (~ B2 level) is required for teaching.

Check out the official job posting (in French) …

Our department at the University of #Lausanne, Switzerland, has an opening for a junior lecturer (#postdoc) in computational humanities: 80%, up to 5 years, starting Feb 1, 2026.

Knowledge of French (~ B2 level) is required for teaching.

Check out the official job posting (in French) …

In a group of ca. 200 computational linguists, there’s most probably mutual agreement on “the AI bubble will burst.” But there’s only very few people one can bond with over “I hope the AI bubble will burst.” 💥

[2025-10-17 Fri (UTC), 1 new article found for cs.CG Computational Geometry]

toXiv_bot_toot

International postdocs: Come to Bochum for a two-week stay to plan a research project and learn how to apply for funding for it in Germany.

I'm available as a host for any topic related to my research interests: digital forensic linguistics, experimental semantics/pragmatics, emojis, (computational analyses of) harmful language, metaphors, discourse, etc.

https://www.research-academy-ruhr.de/programm/researchexplorer/

Excelsior Sciences, which aims to use AI and robots for small-molecule drug discovery and development, raised a $70M Series A from Khosla Ventures and others (Aayushi Pratap/Chemical & Engineering News)

https://cen.acs.org/physical-chemistry

Computational Social Scientists in the Nordics, unite!

🇩🇰🇫🇮🇳🇴🇸🇪🇮🇸

The brand new Nordic Society for #CSS welcomes all researchers and practitioners based in the Nordics. The Society will promote student mobility, events, and education initiatives.

Join for free: https…

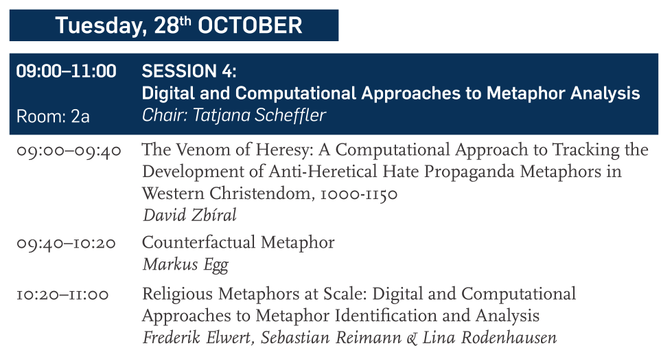

This morning, the #MetaphorsOfReligion conference starts with the panel on “Digital and Computational Approaches to Metaphor Analysis,” including our own presentation “Religious Metaphors at Scale.” I am looking forward to the discussion!

The third edition of the Workshop on Computational Methods in the Humanities #COMHUM2026 will take place on September 9 and 10, 2026 at the University of Lausanne #UNIL.

We invite researchers to submit abstracts of 500 to 1000 words (excluding references).

• Special track: computation …

The third edition of the Workshop on Computational Methods in the Humanities #COMHUM2026 will take place on September 9 and 10, 2026 at the University of Lausanne #UNIL.

We invite researchers to submit abstracts of 500 to 1000 words (excluding references).

• Special track: computation …

The third edition of the Workshop on Computational Methods in the Humanities #COMHUM2026 will take place on September 9 and 10, 2026 at the University of Lausanne #UNIL.

We invite researchers to submit abstracts of 500 to 1000 words (excluding references).

• Special track: computation …

S-D-RSM: Stochastic Distributed Regularized Splitting Method for Large-Scale Convex Optimization Problems

Maoran Wang, Xingju Cai, Yongxin Chen

https://arxiv.org/abs/2511.10133 https://arxiv.org/pdf/2511.10133 https://arxiv.org/html/2511.10133

arXiv:2511.10133v1 Announce Type: new

Abstract: This paper investigates the problems large-scale distributed composite convex optimization, with motivations from a broad range of applications, including multi-agent systems, federated learning, smart grids, wireless sensor networks, compressed sensing, and so on. Stochastic gradient descent (SGD) and its variants are commonly employed to solve such problems. However, existing algorithms often rely on vanishing step sizes, strong convexity assumptions, or entail substantial computational overhead to ensure convergence or obtain favorable complexity. To bridge the gap between theory and practice, we integrate consensus optimization and operator splitting techniques (see Problem Reformulation) to develop a novel stochastic splitting algorithm, termed the \emph{stochastic distributed regularized splitting method} (S-D-RSM). In practice, S-D-RSM performs parallel updates of proximal mappings and gradient information for only a randomly selected subset of agents at each iteration. By introducing regularization terms, it effectively mitigates consensus discrepancies among distributed nodes. In contrast to conventional stochastic methods, our theoretical analysis establishes that S-D-RSM achieves global convergence without requiring diminishing step sizes or strong convexity assumptions. Furthermore, it achieves an iteration complexity of $\mathcal{O}(1/\epsilon)$ with respect to both the objective function value and the consensus error. Numerical experiments show that S-D-RSM achieves up to 2--3$\times$ speedup compared to state-of-the-art baselines, while maintaining comparable or better accuracy. These results not only validate the algorithm's theoretical guarantees but also demonstrate its effectiveness in practical tasks such as compressed sensing and empirical risk minimization.

toXiv_bot_toot

From Euler to Today: Universal Mathematical Fallibility A Large-Scale Computational Analysis of Errors in ArXiv Papers

Igor Rivin

https://arxiv.org/abs/2511.10543 https://

My #AltProcess #Kallitype development/printmaking journey is already showing strong parallels to my software dev experience, i.e. a preference for avoiding monolithic frameworks and building more granular, reliable, understandable & controllable tooling myself, and get much better & mor…

The Probably Approximately Correct Learning Model in Computational Learning Theory

Rocco A. Servedio

https://arxiv.org/abs/2511.08791 https://arxiv.org/pdf…

Self-awareness, bodhisattvic altruism and computational architectures

📣 PhD Position in Computational & Systems Neuroscience (Marseille, France)

⏰ Deadline: January 28, 2026

We are recruiting a PhD student to work on neuromodulatory control of predictive processing in mouse vision, co-supervised by Ede Rancz (INMED) and myself.

This CENTURI project com…

Nobel laureate Sir Roger Penrose dismantles standard cosmology,

arguing the Big Bang wasn't the beginning and quantum mechanics is fundamentally wrong.

He then connects a real, gravitational wave function collapse to the non-computational nature of consciousness

and why today's AI can't truly understand

An inexact semismooth Newton-Krylov method for semilinear elliptic optimal control problem

Shiqi Chen, Xuesong Chen

https://arxiv.org/abs/2511.10058 https://arxiv.org/pdf/2511.10058 https://arxiv.org/html/2511.10058

arXiv:2511.10058v1 Announce Type: new

Abstract: An inexact semismooth Newton method has been proposed for solving semi-linear elliptic optimal control problems in this paper. This method incorporates the generalized minimal residual (GMRES) method, a type of Krylov subspace method, to solve the Newton equations and utilizes nonmonotonic line search to adjust the iteration step size. The original problem is reformulated into a nonlinear equation through variational inequality principles and discretized using a second-order finite difference scheme. By leveraging slanting differentiability, the algorithm constructs semismooth Newton directions and employs GMRES method to inexactly solve the Newton equations, significantly reducing computational overhead. A dynamic nonmonotonic line search strategy is introduced to adjust stepsizes adaptively, ensuring global convergence while overcoming local stagnation. Theoretical analysis demonstrates that the algorithm achieves superlinear convergence near optimal solutions when the residual control parameter $\eta_k$ approaches to 0. Numerical experiments validate the method's accuracy and efficiency in solving semilinear elliptic optimal control problems, corroborating theoretical insights.

toXiv_bot_toot

Riccati-ZORO: An efficient algorithm for heuristic online optimization of internal feedback laws in robust and stochastic model predictive control

Florian Messerer, Yunfan Gao, Jonathan Frey, Moritz Diehl

https://arxiv.org/abs/2511.10473 https://arxiv.org/pdf/2511.10473 https://arxiv.org/html/2511.10473

arXiv:2511.10473v1 Announce Type: new

Abstract: We present Riccati-ZORO, an algorithm for tube-based optimal control problems (OCP). Tube OCPs predict a tube of trajectories in order to capture predictive uncertainty. The tube induces a constraint tightening via additional backoff terms. This backoff can significantly affect the performance, and thus implicitly defines a cost of uncertainty. Optimizing the feedback law used to predict the tube can significantly reduce the backoffs, but its online computation is challenging.

Riccati-ZORO jointly optimizes the nominal trajectory and uncertainty tube based on a heuristic uncertainty cost design. The algorithm alternates between two subproblems: (i) a nominal OCP with fixed backoffs, (ii) an unconstrained tube OCP, which optimizes the feedback gains for a fixed nominal trajectory. For the tube optimization, we propose a cost function informed by the proximity of the nominal trajectory to constraints, prioritizing reduction of the corresponding backoffs. These ideas are developed in detail for ellipsoidal tubes under linear state feedback. In this case, the decomposition into the two subproblems yields a substantial reduction of the computational complexity with respect to the state dimension from $\mathcal{O}(n_x^6)$ to $\mathcal{O}(n_x^3)$, i.e., the complexity of a nominal OCP.

We investigate the algorithm in numerical experiments, and provide two open-source implementations: a prototyping version in CasADi and a high-performance implementation integrated into the acados OCP solver.

toXiv_bot_toot

Multi state neurons

Robert Worden

https://arxiv.org/abs/2512.08815 https://arxiv.org/pdf/2512.08815 https://arxiv.org/html/2512.08815

arXiv:2512.08815v1 Announce Type: new

Abstract: Neurons, as eukaryotic cells, have powerful internal computation capabilities. One neuron can have many distinct states, and brains can use this capability. Processes of neuron growth and maintenance use chemical signalling between cell bodies and synapses, ferrying chemical messengers over microtubules and actin fibres within cells. These processes are computations which, while slower than neural electrical signalling, could allow any neuron to change its state over intervals of seconds or minutes. Based on its state, a single neuron can selectively de-activate some of its synapses, sculpting a dynamic neural net from the static neural connections of the brain. Without this dynamic selection, the static neural networks in brains are too amorphous and dilute to do the computations of neural cognitive models. The use of multi-state neurons in animal brains is illustrated in hierarchical Bayesian object recognition. Multi-state neurons may support a design which is more efficient than two-state neurons, and scales better as object complexity increases. Brains could have evolved to use multi-state neurons. Multi-state neurons could be used in artificial neural networks, to use a kind of non-Hebbian learning which is faster and more focused and controllable than traditional neural net learning. This possibility has not yet been explored in computational models.

toXiv_bot_toot

Replaced article(s) found for cs.GT. https://arxiv.org/list/cs.GT/new

[1/1]:

- Egyptian Ratscrew: Discovering Dominant Strategies with Computational Game Theory

Justin Diamond, Ben Garcia

https://arxiv.org/abs/2304.01007

- Truthful and Almost Envy-Free Mechanism of Allocating Indivisible Goods: the Power of Randomness

Xiaolin Bu, Biaoshuai Tao

https://arxiv.org/abs/2407.13634 https://mastoxiv.page/@arXiv_csGT_bot/112811955506293858

- Learning the Value of Value Learning

Alex John London, Aydin Mohseni

https://arxiv.org/abs/2511.17714 https://mastoxiv.page/@arXiv_csAI_bot/115609411461995995

toXiv_bot_toot

Replaced article(s) found for q-bio.GN. https://arxiv.org/list/q-bio.GN/new

[1/1]:

- Uchimata: a toolkit for visualization of 3D genome structures on the web and in computational not...

David Kou\v{r}il, Trevor Manz, Tereza Clarence, Nils Gehlenborg

DeepSeek researchers detail a new mHC architecture they used to train 3B, 9B, and 27B models, finding it scaled without adding significant computational burden (Vincent Chow/South China Morning Post)

https://www.scmp.com/tech/big-tech/article

i stumbled across a fragment of my online dating profile from two decades ago

I'm into Monk, chaos theory, Björk, Vermeer, Frosted Mini-Wheats, Wallace Stevens, Saint-Saëns, Tim O'Brien, Miro, evolutionary biology, Ravel, Gregory Corso, fresh berries, UFOs, Ellington, Bible comics, computational complexity, Nespresso, The Kinks, Diane DiPrima, fresh-squeezed OJ, Köln's Kompakt music label, Giacometti, Mingus, that sort of thing. I'm very curious and never bored.

s…

A lot of computational effort going into the throwaway gag that is "TV advert for children's toy playset with an unsuitable theme".

https://www.youtube.com/watch?v=FY9pqTgLPfk

The offices at John Quackenbush’s lab at the Harvard T.H. Chan School of Public Health

were once full of postdoctoral fellows, graduate students, and interns.

Young scientists here worked on some of the most cutting-edge computational biology research in the world,

driving new discoveries and the creation of widely used big data tools, including one the National Cancer Institute named among the most important advances of 2024.

Today, the offices are rows of empty comp…

Dispersion-Aware Modeling Framework for Parallel Optical Computing

Ziqi Wei, Yuanjian Wan, Yuhu Cheng, Xiao Yu, Peng Xie

https://arxiv.org/abs/2511.18897 https://arxiv.org/pdf/2511.18897 https://arxiv.org/html/2511.18897

arXiv:2511.18897v1 Announce Type: new

Abstract: Optical computing represents a groundbreaking technology that leverages the unique properties of photons, with innate parallelism standing as its most compelling advantage. Parallel optical computing like cascaded Mach-Zehnder interferometers (MZIs) based offers powerful computational capabilities but also introduces new challenges, particularly concerning dispersion due to the introduction of new frequencies. In this work, we extend existing theories of cascaded MZI systems to develop a generalized model tailored for wavelength-multiplexed parallel optical computing. Our comprehensive model incorporates component dispersion characteristics into a wavelength-dependent transfer matrix framework and is experimentally validated. We propose a computationally efficient compensation strategy that reduces global dispersion error within a 40 nm range from 0.22 to 0.039 using edge-spectrum calibration. This work establishes a fundamental framework for dispersion-aware model and error correction in MZI-based parallel optical computing chips, advancing the reliability of multi-wavelength photonic processors.

toXiv_bot_toot

Spatially-informed transformers: Injecting geostatistical covariance biases into self-attention for spatio-temporal forecasting

Yuri Calleo

https://arxiv.org/abs/2512.17696 https://arxiv.org/pdf/2512.17696 https://arxiv.org/html/2512.17696

arXiv:2512.17696v1 Announce Type: new

Abstract: The modeling of high-dimensional spatio-temporal processes presents a fundamental dichotomy between the probabilistic rigor of classical geostatistics and the flexible, high-capacity representations of deep learning. While Gaussian processes offer theoretical consistency and exact uncertainty quantification, their prohibitive computational scaling renders them impractical for massive sensor networks. Conversely, modern transformer architectures excel at sequence modeling but inherently lack a geometric inductive bias, treating spatial sensors as permutation-invariant tokens without a native understanding of distance. In this work, we propose a spatially-informed transformer, a hybrid architecture that injects a geostatistical inductive bias directly into the self-attention mechanism via a learnable covariance kernel. By formally decomposing the attention structure into a stationary physical prior and a non-stationary data-driven residual, we impose a soft topological constraint that favors spatially proximal interactions while retaining the capacity to model complex dynamics. We demonstrate the phenomenon of ``Deep Variography'', where the network successfully recovers the true spatial decay parameters of the underlying process end-to-end via backpropagation. Extensive experiments on synthetic Gaussian random fields and real-world traffic benchmarks confirm that our method outperforms state-of-the-art graph neural networks. Furthermore, rigorous statistical validation confirms that the proposed method delivers not only superior predictive accuracy but also well-calibrated probabilistic forecasts, effectively bridging the gap between physics-aware modeling and data-driven learning.

toXiv_bot_toot

Reminder: We currently have an opening in our department at the University of #Lausanne #Unil for a junior lecturer (#postdoc) in computational humanities: 80%, up to 5 years, starting Feb 1, 2026.

…

Reminder: We currently have an opening in our department at the University of #Lausanne #Unil for a junior lecturer (#postdoc) in computational humanities: 80%, up to 5 years, starting Feb 1, 2026.

…

Reminder: We currently have an opening in our department at the University of #Lausanne #Unil for a junior lecturer (#postdoc) in computational humanities: 80%, up to 5 years, starting Feb 1, 2026.

…

Experimental insights into data augmentation techniques for deep learning-based multimode fiber imaging: limitations and success

Jawaria Maqbool, M. Imran Cheema

https://arxiv.org/abs/2511.19072 https://arxiv.org/pdf/2511.19072 https://arxiv.org/html/2511.19072

arXiv:2511.19072v1 Announce Type: new

Abstract: Multimode fiber~(MMF) imaging using deep learning has high potential to produce compact, minimally invasive endoscopic systems. Nevertheless, it relies on large, diverse real-world medical data, whose availability is limited by privacy concerns and practical challenges. Although data augmentation has been extensively studied in various other deep learning tasks, it has not been systematically explored for MMF imaging. This work provides the first in-depth experimental and computational study on the efficacy and limitations of augmentation techniques in this field. We demonstrate that standard image transformations and conditional generative adversarial-based synthetic speckle generation fail to improve, or even deteriorate, reconstruction quality, as they neglect the complex modal interference and dispersion that results in speckle formation. To address this, we introduce a physical data augmentation method in which only organ images are digitally transformed, while their corresponding speckles are experimentally acquired via fiber. This approach preserves the physics of light-fiber interaction and enhances the reconstruction structural similarity index measure~(SSIM) by up to 17\%, forming a viable system for reliable MMF imaging under limited data conditions.

toXiv_bot_toot

Replaced article(s) found for cs.LG. https://arxiv.org/list/cs.LG/new

[5/5]:

- CLAReSNet: When Convolution Meets Latent Attention for Hyperspectral Image Classification

Asmit Bandyopadhyay, Anindita Das Bhattacharjee, Rakesh Das

https://arxiv.org/abs/2511.12346 https://mastoxiv.page/@arXiv_csCV_bot/115570753208147835

- Safeguarded Stochastic Polyak Step Sizes for Non-smooth Optimization: Robust Performance Without ...

Dimitris Oikonomou, Nicolas Loizou

https://arxiv.org/abs/2512.02342 https://mastoxiv.page/@arXiv_mathOC_bot/115654870924418771

- Predictive Modeling of I/O Performance for Machine Learning Training Pipelines: A Data-Driven App...

Karthik Prabhakar, Durgamadhab Mishra

https://arxiv.org/abs/2512.06699 https://mastoxiv.page/@arXiv_csPF_bot/115688618582182232

- Minimum Bayes Risk Decoding for Error Span Detection in Reference-Free Automatic Machine Translat...

Lyu, Song, Kamigaito, Ding, Tanaka, Utiyama, Funakoshi, Okumura

https://arxiv.org/abs/2512.07540 https://mastoxiv.page/@arXiv_csCL_bot/115689532163491162

- In-Context Learning for Seismic Data Processing

Fabian Fuchs, Mario Ruben Fernandez, Norman Ettrich, Janis Keuper

https://arxiv.org/abs/2512.11575 https://mastoxiv.page/@arXiv_csCV_bot/115723040285820239

- Journey Before Destination: On the importance of Visual Faithfulness in Slow Thinking

Rheeya Uppaal, Phu Mon Htut, Min Bai, Nikolaos Pappas, Zheng Qi, Sandesh Swamy

https://arxiv.org/abs/2512.12218 https://mastoxiv.page/@arXiv_csCV_bot/115729165330908574

- Non-Resolution Reasoning (NRR): A Computational Framework for Contextual Identity and Ambiguity P...

Kei Saito

https://arxiv.org/abs/2512.13478 https://mastoxiv.page/@arXiv_csCL_bot/115729234145554554

- Stylized Synthetic Augmentation further improves Corruption Robustness

Georg Siedel, Rojan Regmi, Abhirami Anand, Weijia Shao, Silvia Vock, Andrey Morozov

https://arxiv.org/abs/2512.15675 https://mastoxiv.page/@arXiv_csCV_bot/115740141862163631

- mimic-video: Video-Action Models for Generalizable Robot Control Beyond VLAs

Jonas Pai, Liam Achenbach, Victoriano Montesinos, Benedek Forrai, Oier Mees, Elvis Nava

https://arxiv.org/abs/2512.15692 https://mastoxiv.page/@arXiv_csRO_bot/115739947869830764

toXiv_bot_toot