2025-12-25 07:08:53

2026-01-21 12:21:05

Ukrainian-founded language learning marketplace Preply raised a $150M Series D led by WestCap at a $1.2B valuation; the startup has a 150-person office in Kyiv (Anna Heim/TechCrunch)

https://techcrunch.com/2026/01/21/langu…

2025-11-23 16:46:33

Buccaneers vs. Rams NFL player props, SGP: Self-learning AI backs Baker Mayfield Over 242.5 yards on 'SNF'

https://www.cbssports.com/nfl/news/buccane

2025-12-24 08:40:51

2025-11-21 06:07:30

Over the last decade, America’s roads have become more dangerous, with serious crashes increasing by nearly 20 percent since 2013. Approximately 94 percent of crashes are the result of driver behavior like speeding, impairment or distraction: behavior that can be detected and corrected by a new generation of machine learning-enabled dash-cams.

Seamless integration between machine learning, IoT management and the cloud allows these cameras to improve safety in r…

2025-12-23 15:12:20

One thing I'm learning from so many people declaring that LLMs are like a pair programmer you have to guide a lot is how few people I ever want to pair program with.

2025-12-22 17:36:05

“Make the seats adjustable” is a thought I bring to teaching, for example: Does the context I’m creating for learning accommodate people with all different kinds of minds? What variations am I not accommodating? Can I make some things more individually adjustable to better embrace those variations? Can multiple instructors / learning environments / schools offer the flexibility that I can’t offer myself?

Total adjustability is impossible; infinite flexibility is impossible. But as an ongoing effort, as a •direction•, this work is both feasible and useful.

9/

2025-11-23 17:31:41

I have been learning #Rust for a couple of years, and using it for pet projects and demos alike. Working for a JVM-heavy company, I thought that would be my fate forever. Last week, I had a nice surprise: I convinced my management that using Rust for a particular project was the right choice. It’s not a huge project, but I want to describe my experience using Rust in a "real" project.

2025-12-23 18:37:05

I'm just reviewing some old(er) videos I recorded quite a while ago ... and I admit that I'm eager to record in higher quality!

But hey, it's all a learning curve. I'm not feeling bad about the poor quality. I think it's pretty nice to see the progress over time!

https://video.franzgraf.de/w/r3XH…

2025-11-23 21:47:03

This week has been incredibly challenging, but sometimes with people who refuse to accept disagreement, there's only so much you can do.

If you're interested in learning more about anarcho-syndicalism, I’ve curated some excellent articles on my Linktree, mostly in Norwegian, but also some in English.

I’m planning to update it soon with more reliable sources beyond just blog posts.

Anyway, I'm not mad, just really exhausted.

2025-12-24 19:26:38

I post a lot here and on Maker Forums Discourse, and have kind of not gotten around to blogging for a while. But today I had time to reflect on my first full year of #HamRadio — this new hobby has surprised me in a lot of (good) ways.

2026-01-23 20:59:56

Raw With J

Each week, Jacintha Field sits down with thought leaders, parents, experts, and real people to dive into life's messier moments: parenting, separation, burnout, grief, rebuilding, and learning to feel again...

Great Australian Pods Podcast Directory: https://www.greataustralianpods.com/raw-wi

2026-01-23 17:22:39

"Back in my day, we walked to school in the snow. Uphill. Both ways. And we spent hours at the terminal learning commands and syntax." 😂

https://www.nlsh.dev/

2025-10-25 09:15:04

You know that you’re teaching in #Switzerland when you catch the majority of students running the live broadcast of today’s #ski competition in a separate window while doing learning tasks

#AcademicChatter

2025-11-24 00:36:40

Mapping the unseen: How Europe is fighting back against invisible soil pollution #Europe

2025-12-23 13:56:30

Self-learning AI releases NFL picks, score predictions for every Week 17 game

https://www.cbssports.com/nfl/news/nfl-week-17-picks-ai-score-predictions/

2025-12-22 13:54:45

Replaced article(s) found for cs.LG. https://arxiv.org/list/cs.LG/new

[3/5]:

- Look-Ahead Reasoning on Learning Platforms

Haiqing Zhu, Tijana Zrnic, Celestine Mendler-Dünner

https://arxiv.org/abs/2511.14745 https://mastoxiv.page/@arXiv_csLG_bot/115575981129228810

- Deep Gaussian Process Proximal Policy Optimization

Matthijs van der Lende, Juan Cardenas-Cartagena

https://arxiv.org/abs/2511.18214 https://mastoxiv.page/@arXiv_csLG_bot/115610315210502140

- Spectral Concentration at the Edge of Stability: Information Geometry of Kernel Associative Memory

Akira Tamamori

https://arxiv.org/abs/2511.23083 https://mastoxiv.page/@arXiv_csLG_bot/115644325602130493

- xGR: Efficient Generative Recommendation Serving at Scale

Sun, Liu, Zhang, Wu, Yang, Liang, Li, Ma, Liang, Ren, Zhang, Liu, Zhang, Qian, Yang

https://arxiv.org/abs/2512.11529 https://mastoxiv.page/@arXiv_csLG_bot/115723008170311172

- Credit Risk Estimation with Non-Financial Features: Evidence from a Synthetic Istanbul Dataset

Atalay Denknalbant, Emre Sezdi, Zeki Furkan Kutlu, Polat Goktas

https://arxiv.org/abs/2512.12783 https://mastoxiv.page/@arXiv_csLG_bot/115729287232895097

- The Semantic Illusion: Certified Limits of Embedding-Based Hallucination Detection in RAG Systems

Debu Sinha

https://arxiv.org/abs/2512.15068 https://mastoxiv.page/@arXiv_csLG_bot/115740048142898391

- Towards Reproducibility in Predictive Process Mining: SPICE -- A Deep Learning Library

Stritzel, Hühnerbein, Rauch, Zarate, Fleischmann, Buck, Lischka, Frey

https://arxiv.org/abs/2512.16715 https://mastoxiv.page/@arXiv_csLG_bot/115745910810427061

- Differentially private Bayesian tests

Abhisek Chakraborty, Saptati Datta

https://arxiv.org/abs/2401.15502 https://mastoxiv.page/@arXiv_statML_bot/111843467510507382

- SCAFFLSA: Taming Heterogeneity in Federated Linear Stochastic Approximation and TD Learning

Paul Mangold, Sergey Samsonov, Safwan Labbi, Ilya Levin, Reda Alami, Alexey Naumov, Eric Moulines

https://arxiv.org/abs/2402.04114

- Adjusting Model Size in Continual Gaussian Processes: How Big is Big Enough?

Guiomar Pescador-Barrios, Sarah Filippi, Mark van der Wilk

https://arxiv.org/abs/2408.07588 https://mastoxiv.page/@arXiv_statML_bot/112965266196097314

- Non-Perturbative Trivializing Flows for Lattice Gauge Theories

Mathis Gerdes, Pim de Haan, Roberto Bondesan, Miranda C. N. Cheng

https://arxiv.org/abs/2410.13161 https://mastoxiv.page/@arXiv_heplat_bot/113327593338897860

- Dynamic PET Image Prediction Using a Network Combining Reversible and Irreversible Modules

Sun, Zhang, Xia, Sun, Chen, Yang, Liu, Zhu, Liu

https://arxiv.org/abs/2410.22674 https://mastoxiv.page/@arXiv_eessIV_bot/113401026110345647

- Targeted Learning for Variable Importance

Xiaohan Wang, Yunzhe Zhou, Giles Hooker

https://arxiv.org/abs/2411.02221 https://mastoxiv.page/@arXiv_statML_bot/113429912435819479

- Refined Analysis of Federated Averaging and Federated Richardson-Romberg

Paul Mangold, Alain Durmus, Aymeric Dieuleveut, Sergey Samsonov, Eric Moulines

https://arxiv.org/abs/2412.01389 https://mastoxiv.page/@arXiv_statML_bot/113588027268311334

- Embedding-Driven Data Distillation for 360-Degree IQA With Residual-Aware Refinement

Abderrezzaq Sendjasni, Seif-Eddine Benkabou, Mohamed-Chaker Larabi

https://arxiv.org/abs/2412.12667 https://mastoxiv.page/@arXiv_csCV_bot/113672538318570349

- 3D Cell Oversegmentation Correction via Geo-Wasserstein Divergence

Peter Chen, Bryan Chang, Olivia A Creasey, Julie Beth Sneddon, Zev J Gartner, Yining Liu

https://arxiv.org/abs/2502.01890 https://mastoxiv.page/@arXiv_csCV_bot/113949981686723660

- DHP: Discrete Hierarchical Planning for Hierarchical Reinforcement Learning Agents

Shashank Sharma, Janina Hoffmann, Vinay Namboodiri

https://arxiv.org/abs/2502.01956 https://mastoxiv.page/@arXiv_csRO_bot/113949997485625086

- Foundation for unbiased cross-validation of spatio-temporal models for species distribution modeling

Diana Koldasbayeva, Alexey Zaytsev

https://arxiv.org/abs/2502.03480

- GraphCompNet: A Position-Aware Model for Predicting and Compensating Shape Deviations in 3D Printing

Juheon Lee, Lei (Rachel) Chen, Juan Carlos Catana, Hui Wang, Jun Zeng

https://arxiv.org/abs/2502.09652 https://mastoxiv.page/@arXiv_csCV_bot/114017924551186136

- LookAhead Tuning: Safer Language Models via Partial Answer Previews

Liu, Wang, Luo, Yuan, Sun, Liang, Zhang, Zhou, Hooi, Deng

https://arxiv.org/abs/2503.19041 https://mastoxiv.page/@arXiv_csCL_bot/114227502448008352

- Constraint-based causal discovery with tiered background knowledge and latent variables in single...

Christine W. Bang, Vanessa Didelez

https://arxiv.org/abs/2503.21526 https://mastoxiv.page/@arXiv_statML_bot/114238919468512990

toXiv_bot_toot

2026-01-22 18:30:15

I’m wondering what all this software is that people now make, but that wasn’t worth learning to program for.

2026-01-23 16:33:05

2026-01-23 20:39:04

Ways to stand with those in Minnesota who are under attack by their federal government

https://www.standwithminnesota.com/

2025-11-15 08:07:50

Poor cancer survival rates for those with learning disabilities

https://northwestbylines.co.uk/news/health/poor-cancer-survival-rates-for-those-with-learning-disabilities/

2026-01-23 20:54:07

Short-range kamikaze drones are one of the fastest-moving facets of the defense sector today.

The Marine Corps "Organic Precision Fires-Light" (OPF-L) program is designed to provide dismounted Marine infantry rifle squads with a man-packable, easy-to-operate precision strike drone to engage adversaries beyond line of sight.

A recent announcement of a $23.9-million contract to provide the U.S. Marine Corps with more than 600 "Bolt-M" drones is the next phas…

2025-12-19 01:39:25

Raiders’ Darien Porter intent on learning from mistakes https://www.reviewjournal.com/sports/raiders/raiders-darien-porter-intent-on-learning-from-mistakes-3597557/

2025-12-24 20:20:15

The journey of learning is transformative, fueling curiosity and sparking growth. Knowledge lets us transcend limits and transform challenges into thrilling adventures. 🚀

Where has your curiosity led you lately? Share your stories!

#LifelongLearning #CuriosityJourney

2025-12-22 17:35:30

The other day I had a funny conversation. A Finnish person was making excuses for my laziness at learning Finnish. Then she asked «is Italian hard to learn?». I never know how to answer the question so I said «I don't know: at least pronunciation is not too bad for Finns, they may sound funny but they're understandable; they mostly have trouble because they have no concept of separate p and b, and so on». She said «you mean strong p and soft p»? Not how I would have phrased it, but y…

2026-01-22 15:16:13

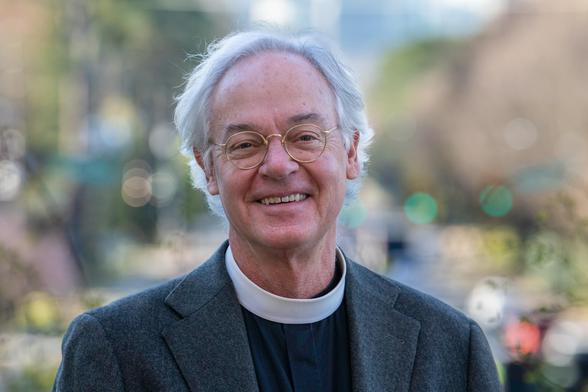

Dean of St. Philip's Cathedral in Atlanta, The Very Rev. Sam Candler ’82 M.Div. would rather bless than curse. “People learning how to pray together, serve together, get angry together, and love together—that’s what’s going to save the world,” he says.

Read Ray Waddle's new profile of Sam Candler and his uplifting work in Atlanta.

2025-12-21 16:15:20

I still need to spend more time learning TrueNAS and how to get container applications running properly. It's much more complex than OpenMediaVault.

Some applications do just work, but many seem to need a lot more care and configuration to get working as desired.

#trueNaS #selfHosting

2025-12-22 13:54:35

Replaced article(s) found for cs.LG. https://arxiv.org/list/cs.LG/new

[2/5]:

- The Diffusion Duality

Sahoo, Deschenaux, Gokaslan, Wang, Chiu, Kuleshov

https://arxiv.org/abs/2506.10892 https://mastoxiv.page/@arXiv_csLG_bot/114675526577078472

- Multimodal Representation Learning and Fusion

Jin, Ge, Xie, Luo, Song, Bi, Liang, Guan, Yeong, Song, Hao

https://arxiv.org/abs/2506.20494 https://mastoxiv.page/@arXiv_csLG_bot/114749113025183688

- The kernel of graph indices for vector search

Mariano Tepper, Ted Willke

https://arxiv.org/abs/2506.20584 https://mastoxiv.page/@arXiv_csLG_bot/114749118923266356

- OptScale: Probabilistic Optimality for Inference-time Scaling

Youkang Wang, Jian Wang, Rubing Chen, Xiao-Yong Wei

https://arxiv.org/abs/2506.22376 https://mastoxiv.page/@arXiv_csLG_bot/114771735361664528

- Boosting Revisited: Benchmarking and Advancing LP-Based Ensemble Methods

Fabian Akkerman, Julien Ferry, Christian Artigues, Emmanuel Hebrard, Thibaut Vidal

https://arxiv.org/abs/2507.18242 https://mastoxiv.page/@arXiv_csLG_bot/114913322736512937

- MolMark: Safeguarding Molecular Structures through Learnable Atom-Level Watermarking

Runwen Hu, Peilin Chen, Keyan Ding, Shiqi Wang

https://arxiv.org/abs/2508.17702 https://mastoxiv.page/@arXiv_csLG_bot/115095014405732247

- Dual-Distilled Heterogeneous Federated Learning with Adaptive Margins for Trainable Global Protot...

Fatema Siddika, Md Anwar Hossen, Wensheng Zhang, Anuj Sharma, Juan Pablo Muñoz, Ali Jannesari

https://arxiv.org/abs/2508.19009 https://mastoxiv.page/@arXiv_csLG_bot/115100269482762688

- STDiff: A State Transition Diffusion Framework for Time Series Imputation in Industrial Systems

Gary Simethy, Daniel Ortiz-Arroyo, Petar Durdevic

https://arxiv.org/abs/2508.19011 https://mastoxiv.page/@arXiv_csLG_bot/115100270137397046

- EEGDM: Learning EEG Representation with Latent Diffusion Model

Shaocong Wang, Tong Liu, Yihan Li, Ming Li, Kairui Wen, Pei Yang, Wenqi Ji, Minjing Yu, Yong-Jin Liu

https://arxiv.org/abs/2508.20705 https://mastoxiv.page/@arXiv_csLG_bot/115111565155687451

- Data-Free Continual Learning of Server Models in Model-Heterogeneous Cloud-Device Collaboration

Xiao Zhang, Zengzhe Chen, Yuan Yuan, Yifei Zou, Fuzhen Zhuang, Wenyu Jiao, Yuke Wang, Dongxiao Yu

https://arxiv.org/abs/2509.25977 https://mastoxiv.page/@arXiv_csLG_bot/115298721327100391

- Fine-Tuning Masked Diffusion for Provable Self-Correction

Jaeyeon Kim, Seunggeun Kim, Taekyun Lee, David Z. Pan, Hyeji Kim, Sham Kakade, Sitan Chen

https://arxiv.org/abs/2510.01384 https://mastoxiv.page/@arXiv_csLG_bot/115309690976554356

- A Generic Machine Learning Framework for Radio Frequency Fingerprinting

Alex Hiles, Bashar I. Ahmad

https://arxiv.org/abs/2510.09775 https://mastoxiv.page/@arXiv_csLG_bot/115372387779061015

A Second-Order SpikingSSM for Wearables

Kartikay Agrawal, Abhijeet Vikram, Vedant Sharma, Vaishnavi Nagabhushana, Ayon Borthakur

https://arxiv.org/abs/2510.14386 https://mastoxiv.page/@arXiv_csLG_bot/115389079527543821

- Utility-Diversity Aware Online Batch Selection for LLM Supervised Fine-tuning

Heming Zou, Yixiu Mao, Yun Qu, Qi Wang, Xiangyang Ji

https://arxiv.org/abs/2510.16882 https://mastoxiv.page/@arXiv_csLG_bot/115412243355962887

- Seeing Structural Failure Before it Happens: An Image-Based Physics-Informed Neural Network (PINN...

Omer Jauhar Khan, Sudais Khan, Hafeez Anwar, Shahzeb Khan, Shams Ul Arifeen

https://arxiv.org/abs/2510.23117 https://mastoxiv.page/@arXiv_csLG_bot/115451891042176876

- Training Deep Physics-Informed Kolmogorov-Arnold Networks

Spyros Rigas, Fotios Anagnostopoulos, Michalis Papachristou, Georgios Alexandridis

https://arxiv.org/abs/2510.23501 https://mastoxiv.page/@arXiv_csLG_bot/115451942159737549

- Semi-Supervised Preference Optimization with Limited Feedback

Seonggyun Lee, Sungjun Lim, Seojin Park, Soeun Cheon, Kyungwoo Song

https://arxiv.org/abs/2511.00040 https://mastoxiv.page/@arXiv_csLG_bot/115490555013124989

- Towards Causal Market Simulators

Dennis Thumm, Luis Ontaneda Mijares

https://arxiv.org/abs/2511.04469 https://mastoxiv.page/@arXiv_csLG_bot/115507943827841017

- Incremental Generation is Necessary and Sufficient for Universality in Flow-Based Modelling

Hossein Rouhvarzi, Anastasis Kratsios

https://arxiv.org/abs/2511.09902 https://mastoxiv.page/@arXiv_csLG_bot/115547587245365920

- Optimizing Mixture of Block Attention

Guangxuan Xiao, Junxian Guo, Kasra Mazaheri, Song Han

https://arxiv.org/abs/2511.11571 https://mastoxiv.page/@arXiv_csLG_bot/115564541392410174

- Assessing Automated Fact-Checking for Medical LLM Responses with Knowledge Graphs

Shasha Zhou, Mingyu Huang, Jack Cole, Charles Britton, Ming Yin, Jan Wolber, Ke Li

https://arxiv.org/abs/2511.12817 https://mastoxiv.page/@arXiv_csLG_bot/115570877730326947

toXiv_bot_toot

2025-11-21 15:01:03

Speaking of kids, here is a kid who is building a nostr gaming system.

Not by vibe coding, but by learning how to use Unity.

Gamestr.io is the marketplace he's building. He is also submitting a protocol improvement proposal for gaming types.

His gaming market is first going to feature a Tetris clone that broadcasts scores as that new type.

Talented kid named Sam.

#nostr #gamestr #nostrshire

2026-01-19 14:26:55

Seriously, knowing the dire predicament we are in, we should be busy learning and re-learning how to produce food, clothing and housing on a planet transitioning into a radically different climate. We desperately need a plan for how to scale back on technology use, in tandem with the natural decline in resource and energy availability.

https://

2026-01-02 11:00:00

2025-12-21 20:13:18

The Seeing Center (2005.5)

⭐️⭐️⭐️⭐️ (183,746 reviews)

"This Best Picture-winning Tour de Force follows Moses Jackson, a good-hearted African-American PhD from Brooklyn, as he moves to a rural southern school to introduce some rowdy, expressive, redneck kids to the joys of learning, overcoming racial and economic divides in the process."

2025-11-22 05:44:58

Needed to build something quite weird that involves NFC tags, with a Notion DB as the main storage and UI.

Didn’t feel like learning Notion cos I am not a fan.

Worked with ChatGPT to make a spec and prompt. This was useful: doing that totally changed my mind toward something better.

Gave it to Codex and it one-shot built it perfectly in 20 minutes without interaction; it worked first time once I fixed a mistake I made in Notion.

Lots of issues with AI but it’s certainly moving…

2025-12-06 12:08:54

I am not as pessimistic as the writer of this article, but it is still an absolute must-read:

"AI is Destroying the University and Learning Itself"

https://www.currentaffairs.org/news/ai-is-destroying-the-university-and-learning-itself

2026-01-21 15:05:17

I like AI. I like robots. I love machine learning automation. I don't like it when their use cases are replacing people, spying on or profiling people, prosecuting people, submitting people into subscription, creating "art", "videos", "pictures" and scumbag "memes", warfare, propaganda, disinformation, deepfakes of any kind, ads, trolling, pumping up stocks, just plain wrong search results that force you to waste twice as much time to confirm they …

2025-11-21 05:56:34

Britain’s tax system combines the worst of the US and Scandinavia - https://on.ft.com/48abwRo via @FT

Sharing also for the sake of warning my Scandinavian compatriots some of whom seem to be learning the wrong things from the UK.

2025-11-11 18:49:19

How Do We Honor Veterans? By Learning from Their Experiences. - TheHumanist.com https://thehumanist.com/commentary/how-do-we-honor-veterans-by-learning-from-their-experiences

2025-11-15 13:39:31

Every week, Metacurity delivers our free and paid subscribers a run-down of the top infosec-related long reads we didn't have time for during the daily crush of cyber news.

This week's selection covers

--Massive surveillance in Mexico City leaves crime high,

--Workplace surveillance can harm workers,

--Machine learning privacy attacks are less effective in reality than they are in theory,

--LLMs produce more secure code when trained on flaw-free code,

2025-12-22 10:33:50

Calibratable Disambiguation Loss for Multi-Instance Partial-Label Learning

Wei Tang, Yin-Fang Yang, Weijia Zhang, Min-Ling Zhang

https://arxiv.org/abs/2512.17788 https://arxiv.org/pdf/2512.17788 https://arxiv.org/html/2512.17788

arXiv:2512.17788v1 Announce Type: new

Abstract: Multi-instance partial-label learning (MIPL) is a weakly supervised framework that extends the principles of multi-instance learning (MIL) and partial-label learning (PLL) to address the challenges of inexact supervision in both instance and label spaces. However, existing MIPL approaches often suffer from poor calibration, undermining classifier reliability. In this work, we propose a plug-and-play calibratable disambiguation loss (CDL) that simultaneously improves classification accuracy and calibration performance. The loss has two instantiations: the first one calibrates predictions based on probabilities from the candidate label set, while the second one integrates probabilities from both candidate and non-candidate label sets. The proposed CDL can be seamlessly incorporated into existing MIPL and PLL frameworks. We provide a theoretical analysis that establishes the lower bound and regularization properties of CDL, demonstrating its superiority over conventional disambiguation losses. Experimental results on benchmark and real-world datasets confirm that our CDL significantly enhances both classification and calibration performance.

toXiv_bot_toot

2025-11-12 16:58:01

DTO-BioFlow is using AI to analyze decades of marine biodiversity records, revealing insights that support ocean monitoring, conservation, and restoration. 💙

https://dto-bioflow.eu/news/using-deep-learning-unlock-decades-mari…

2025-12-20 07:05:11

Let's Learn Rust Using Rustlings

This video is a walkthrough and review of learning Rust using Rustlings, aimed at developers who struggle with Rust’s ownership model and borrow checker.

📺 https://www.youtube.com/watch?v=jJopQTH7vmg

🦀

2025-12-05 14:51:22

Ethical Considerations Around Machine Learning-Engaged Online Participatory Research - poster from Zooniverse community at #FF2025 https://zenodo.org/records/17779992

2025-11-24 13:46:50

49ers vs. Panthers SGP: 'Monday Night Football' same-game parlay picks, bets, props from SportsLine AI

https://www.cbssports.com/nfl/news/49ers-pan…

2025-11-20 17:45:51

In the remaining two thirds of the book a second interpreter – a bytecode virtual machine – is built using C. I'm very much looking forward to that part of the book. However, I can't bring myself to write C, not even for something inconsequential like this. So I guess I'll finally have to get serious about properly learning Rust.

2025-11-19 19:22:31

2025-12-09 01:32:16

Here is a link to a Current Affairs article about the impact of AI on universities in the US.

It is a very long post, but well worth your time and attention.

https://www.currentaffairs.org/news/ai-is-destroying-the-university-and-learning-it…

2025-11-21 07:57:14

Timbuktu’s Medieval Manuscripts Return Home After a Decade Away Safe from Insurgents https://www.goodnewsnetwork.org/timbuktus-medieval-manuscripts-return-home-after-a-decade-away-safe-from-insurgents/

2025-11-19 14:39:15

Thank you all for 3,000 followers on here! Here’s a photo of Danny to celebrate the occasion ☺️

3 years into being part of Mastodon, I continue to be impressed with how wonderful the people here are and how much this social network actually FEELS social. People replying to one another, having conversations, learning things, sharing moments of joy, making friends.

Mastodon brought my business clients, helped me gain confidence in my own voice, freed me from dependence on big tech and algorithms, rekindled my interests, introduced me to incredible people and projects, and served as a source of hope in humanity in the times when cynicism and nihilism felt all but inevitable.

I love our little corner of the internet, and am so glad that it’s still here despite everyone who professed it was doomed to fade into irrelevance.

Thank you to everyone reading these words for being here on the Fedi. The world is a little better thanks to your choice to support an independent web.

2025-12-11 08:16:21

Meta-learning three-factor plasticity rules for structured credit assignment with sparse feedback

Dimitra Maoutsa

https://arxiv.org/abs/2512.09366 https://arxiv.org/pdf/2512.09366 https://arxiv.org/html/2512.09366

arXiv:2512.09366v1 Announce Type: new

Abstract: Biological neural networks learn complex behaviors from sparse, delayed feedback using local synaptic plasticity, yet the mechanisms enabling structured credit assignment remain elusive. In contrast, artificial recurrent networks solving similar tasks typically rely on biologically implausible global learning rules or hand-crafted local updates. The space of local plasticity rules capable of supporting learning from delayed reinforcement remains largely unexplored. Here, we present a meta-learning framework that discovers local learning rules for structured credit assignment in recurrent networks trained with sparse feedback. Our approach interleaves local neo-Hebbian-like updates during task execution with an outer loop that optimizes plasticity parameters via tangent-propagation through learning. The resulting three-factor learning rules enable long-timescale credit assignment using only local information and delayed rewards, offering new insights into biologically grounded mechanisms for learning in recurrent circuits.

toXiv_bot_toot
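As a rough illustration of what a "three-factor" rule means, here is a minimal Python sketch of a generic reward-modulated Hebbian update for a single neuron: presynaptic activity times postsynaptic activity is accumulated in a decaying eligibility trace, and a delayed scalar reward (the third factor) converts that trace into a weight change. This is not the paper's method: the tanh activation, the learning rate `eta`, the trace `decay`, and the function name `three_factor_update` are all hypothetical, and the meta-learning outer loop (tangent-propagation through learning) is not shown.

```python
import math


def three_factor_update(weights, pre_seq, reward, eta=0.1, decay=0.9):
    """Apply one delayed-reward three-factor update to a single neuron.

    Factors 1 and 2 (pre- and postsynaptic activity) are accumulated locally
    in a decaying eligibility trace; factor 3 (a scalar reward delivered only
    at the end of the episode) gates how much of that trace becomes a weight
    change. eta and decay are fixed, hypothetical values in this sketch.
    """
    trace = [0.0] * len(weights)
    for pre in pre_seq:
        # Postsynaptic activity: tanh of the weighted input (an assumption).
        post = math.tanh(sum(w * p for w, p in zip(weights, pre)))
        # Local Hebbian coincidence term, decayed over time.
        trace = [decay * e + p * post for e, p in zip(trace, pre)]
    # Delayed third factor: reward-modulated weight change.
    return [w + eta * reward * e for w, e in zip(weights, trace)]


w = [0.5, -0.5]
inputs = [[1.0, 0.0], [1.0, 0.0]]
print(three_factor_update(w, inputs, reward=1.0))  # first weight grows, second unchanged
```

Because the reward arrives only after the episode, credit reaches a synapse only through its trace. In a meta-learning setup, parameters like `eta` and `decay` are the kind of quantities an outer loop would tune.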

2026-01-21 13:23:05

A Kubernetes custom scheduler based on reinforcement learning for compute-intensive pods

Hanlin Zhou, Huah Yong Chan, Shun Yao Zhang, Meie Lin, Jingfei Ni

https://arxiv.org/abs/2601.13579

2025-12-20 21:14:21

2025-12-22 10:34:50

Regularized Random Fourier Features and Finite Element Reconstruction for Operator Learning in Sobolev Space

Xinyue Yu, Hayden Schaeffer

https://arxiv.org/abs/2512.17884 https://arxiv.org/pdf/2512.17884 https://arxiv.org/html/2512.17884

arXiv:2512.17884v1 Announce Type: new

Abstract: Operator learning is a data-driven approximation of mappings between infinite-dimensional function spaces, such as the solution operators of partial differential equations. Kernel-based operator learning can offer accurate, theoretically justified approximations that require less training than standard methods. However, such methods can become computationally prohibitive for large training sets and can be sensitive to noise. We propose a regularized random Fourier feature (RRFF) approach, coupled with a finite element reconstruction map (RRFF-FEM), for learning operators from noisy data. The method uses random features drawn from multivariate Student's $t$ distributions, together with frequency-weighted Tikhonov regularization that suppresses high-frequency noise. We establish high-probability bounds on the extreme singular values of the associated random feature matrix and show that when the number of features $N$ scales like $m \log m$ with the number of training samples $m$, the system is well-conditioned, which yields estimation and generalization guarantees. Detailed numerical experiments on benchmark PDE problems, including advection, Burgers', Darcy flow, Helmholtz, Navier-Stokes, and structural mechanics, demonstrate that RRFF and RRFF-FEM are robust to noise and achieve improved performance with reduced training time compared to the unregularized random feature model, while maintaining competitive accuracy relative to kernel and neural operator tests.

toXiv_bot_toot
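To make the regularized random-feature idea concrete, here is a minimal one-dimensional Python sketch (standard library only) of the regression step alone: frequencies drawn from a Student's t distribution, a feature count on the order of m log m, and a Tikhonov penalty whose per-feature weight grows with frequency. The weight (1 + ω²)^power, the cosine-with-random-phase features, the helper names and all defaults are assumptions for illustration; the sketch fits a scalar function rather than an operator between function spaces, and it omits the finite element reconstruction map.

```python
import math
import random


def student_t(rng, nu):
    """Draw one Student's t sample: N(0, 1) / sqrt(chi2_nu / nu)."""
    z = rng.gauss(0.0, 1.0)
    chi2 = rng.gammavariate(nu / 2.0, 2.0)  # chi-square(nu) == Gamma(nu/2, scale 2)
    return z / math.sqrt(chi2 / nu)


def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = s / aug[r][r]
    return x


def rrff_fit(xs, ys, lam=1e-3, nu=3.0, power=1.0, seed=0):
    """Fit y ~ sum_k c_k cos(w_k x + b_k) with frequency-weighted ridge."""
    m = len(xs)
    n_feat = max(1, math.ceil(m * math.log(m)))  # N on the order of m log m
    rng = random.Random(seed)
    omegas = [student_t(rng, nu) for _ in range(n_feat)]
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n_feat)]

    def features(x):
        return [math.cos(w * x + b) for w, b in zip(omegas, phases)]

    a = [features(x) for x in xs]
    # Penalty weights grow with |w|, so high-frequency coefficients are
    # shrunk more (hypothetical choice: (1 + w^2)^power).
    reg = [(1.0 + w * w) ** power for w in omegas]
    # Normal equations: (A^T A + lam * diag(reg)) c = A^T y
    gram = [[sum(a[i][j] * a[i][k] for i in range(m))
             + (lam * reg[j] if j == k else 0.0)
             for k in range(n_feat)] for j in range(n_feat)]
    rhs = [sum(a[i][j] * ys[i] for i in range(m)) for j in range(n_feat)]
    coef = solve(gram, rhs)
    return lambda x: sum(c * f for c, f in zip(coef, features(x)))
```

Fitting, say, 20 samples of sin(x) and evaluating the returned callable at new points shows the basic workflow; raising `lam` or `power` damps the high-frequency features more strongly.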

2025-12-18 14:49:19

Ellen Patton has been a joy to add to our learning and engagement team and to our staff as a whole. A native Athenian, she grew up visiting the museum, which helped her forge strong connections with both the artwork and her family. “I think that having these great memories in the museum makes me want to push for everyone who visits to have a connective experience,” Patton said.

Read more about her journey on our blog:

2026-01-21 04:11:15

Barret Zoph says Thinking Machines Lab fired him only after learning he was leaving, and at no time did the company cite his performance or unethical conduct (Wall Street Journal)

https://www.wsj.com/tech/ai/the-messy-h…

2026-01-24 19:26:37

NFL player props, 2026 AFC, NFC Championship picks, odds, AI predictions: Puka Nacua Over 92.5 receiving yards

https://www.cbssports.com/nfl/news/nfl-player-prop…

2026-01-11 15:42:21

🇺🇦 #NowPlaying on KEXP's #PacificNotions

Passarani:

🎵 Learning To Let Go

#Passarani

https://marcopassarani.bandcamp.com/track/learning-to-let-go

2025-11-04 04:46:11

2026-01-11 01:36:23

2025-12-10 08:00:50

Multi-agent learning under uncertainty: Recurrence vs. concentration

Kyriakos Lotidis, Panayotis Mertikopoulos, Nicholas Bambos, Jose Blanchet

https://arxiv.org/abs/2512.08132 https://arxiv.org/pdf/2512.08132 https://arxiv.org/html/2512.08132

arXiv:2512.08132v1 Announce Type: new

Abstract: In this paper, we examine the convergence landscape of multi-agent learning under uncertainty. Specifically, we analyze two stochastic models of regularized learning in continuous games (one in continuous and one in discrete time) with the aim of characterizing the long-run behavior of the induced sequence of play. In stark contrast to deterministic, full-information models of learning (or models with a vanishing learning rate), we show that the resulting dynamics do not converge in general. In lieu of this, we ask instead which actions are played more often in the long run, and by how much. We show that, in strongly monotone games, the dynamics of regularized learning may wander away from equilibrium infinitely often, but they always return to its vicinity in finite time (which we estimate), and their long-run distribution is sharply concentrated around a neighborhood thereof. We quantify the degree of this concentration, and we show that these favorable properties may all break down if the underlying game is not strongly monotone, underscoring in this way the limits of regularized learning in the presence of persistent randomness and uncertainty.

toXiv_bot_toot
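A toy simulation can illustrate the "recurrence vs. concentration" picture. The Python sketch below is a simple stand-in rather than the paper's model: plain gradient play (regularized learning with a quadratic regularizer in an unconstrained game) with a constant step size and bounded uniform noise, in a hypothetical two-player game whose pseudo-gradient v(x, y) = (x + 0.5y, y - 0.5x) is strongly monotone with equilibrium (0, 0). The function name and all parameters are assumptions.

```python
import random


def noisy_gradient_play(steps=2000, step_size=0.1, noise=1.0, seed=0):
    """Simulate constant-step, noisy gradient play in a toy two-player game.

    Player 1 controls x, player 2 controls y. The joint pseudo-gradient
    v(x, y) = (x + 0.5*y, y - 0.5*x) is strongly monotone (its symmetric
    part is the identity), and the unique equilibrium is (0, 0). Each step
    uses a gradient estimate corrupted by bounded uniform noise.
    Returns the distance to the equilibrium after every step.
    """
    rng = random.Random(seed)
    x, y = 2.0, 2.0
    dists = []
    for _ in range(steps):
        # Noisy individual gradients, both evaluated at the current point.
        gx = x + 0.5 * y + rng.uniform(-noise, noise)
        gy = y - 0.5 * x + rng.uniform(-noise, noise)
        # Simultaneous update of both players.
        x, y = x - step_size * gx, y - step_size * gy
        dists.append((x * x + y * y) ** 0.5)
    return dists


d = noisy_gradient_play()
print(max(d[200:]), sum(d[200:]) / len(d[200:]))
```

With persistent noise the iterates keep moving and do not settle at (0, 0), yet after a short transient they stay close to it. For these parameters the noise-free update map contracts distances by a factor of sqrt(0.8125) ≈ 0.90 per step, which bounds the late-time distance by about 1.43.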

2026-01-16 15:11:35

"Digital Learning: Exploring Perceived Usefulness and Perceived Ease of Use of Open Educational Resources"

#OpenIrony

2026-01-16 02:00:06

livemocha: Livemocha friendship network (2010)

A network of friendships among users on Livemocha, a large online language learning community. Nodes represent users and edges represent a mutual declaration of friendship.

This network has 104103 nodes and 2193083 edges.

Tags: Social, Online, Unweighted

https://ne…
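Since friendship in this network is a mutual declaration, it can be treated as undirected. Assuming it is a simple graph (no self-loops or duplicate edges, an assumption not stated in the post), the two counts above give an average degree and edge density with a bit of arithmetic; the function name is hypothetical:

```python
def undirected_summary(num_nodes, num_edges):
    """Average degree and edge density of a simple undirected graph."""
    # Each undirected edge contributes to the degree of both endpoints.
    avg_degree = 2 * num_edges / num_nodes
    # Fraction of all possible node pairs that are connected.
    density = num_edges / (num_nodes * (num_nodes - 1) / 2)
    return avg_degree, density


avg, dens = undirected_summary(104103, 2193083)
print(f"average degree ~ {avg:.2f}, density ~ {dens:.2e}")
# prints: average degree ~ 42.13, density ~ 4.05e-04
```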

2025-12-17 01:44:23

Align. Your. Assessments. With. Your. Learning objectives.

2025-12-11 12:56:58

This is an important announcement. Google uses its ecosystem to gain an advantage: "Announcing Model Context Protocol (MCP) support for Google services"

https://cloud.google.com/blog/products/ai-machine-learning/ann…

2025-12-22 11:50:43

Crosslisted article(s) found for cs.LG. https://arxiv.org/list/cs.LG/new

[3/3]:

- Fraud detection in credit card transactions using Quantum-Assisted Restricted Boltzmann Machines

João Marcos Cavalcanti de Albuquerque Neto, Gustavo Castro do Amaral, Guilherme Penello Temporão

https://arxiv.org/abs/2512.17660 https://mastoxiv.page/@arXiv_quantph_bot/115762703945731580

- Vidarc: Embodied Video Diffusion Model for Closed-loop Control

Feng, Xiang, Mao, Tan, Zhang, Huang, Zheng, Liu, Su, Zhu

https://arxiv.org/abs/2512.17661 https://mastoxiv.page/@arXiv_csRO_bot/115762650859932523

- Imputation Uncertainty in Interpretable Machine Learning Methods

Pegah Golchian, Marvin N. Wright

https://arxiv.org/abs/2512.17689 https://mastoxiv.page/@arXiv_statML_bot/115762577479255577

- Revisiting the Broken Symmetry Phase of Solid Hydrogen: A Neural Network Variational Monte Carlo ...

Shengdu Chai, Chen Lin, Xinyang Dong, Yuqiang Li, Wanli Ouyang, Lei Wang, X. C. Xie

https://arxiv.org/abs/2512.17703 https://mastoxiv.page/@arXiv_condmatstrel_bot/115762481116668454

- Breast Cancer Neoadjuvant Chemotherapy Treatment Response Prediction Using Aligned Longitudinal M...

Rahul Ravi, Ruizhe Li, Tarek Abdelfatah, Stephen Chan, Xin Chen

https://arxiv.org/abs/2512.17759 https://mastoxiv.page/@arXiv_eessIV_bot/115762481771898369

- MedNeXt-v2: Scaling 3D ConvNeXts for Large-Scale Supervised Representation Learning in Medical Im...

Roy, Kirchhoff, Ulrich, Rokuss, Wald, Isensee, Maier-Hein

https://arxiv.org/abs/2512.17774 https://mastoxiv.page/@arXiv_eessIV_bot/115762492258209812

- Domain-Aware Quantum Circuit for QML

Gurinder Singh, Thaddeus Pellegrini, Kenneth M. Merz, Jr

https://arxiv.org/abs/2512.17800 https://mastoxiv.page/@arXiv_quantph_bot/115762723607200478

- Visually Prompted Benchmarks Are Surprisingly Fragile

Feng, Lian, Dunlap, Shu, Wang, Wang, Darrell, Suhr, Kanazawa

https://arxiv.org/abs/2512.17875 https://mastoxiv.page/@arXiv_csCV_bot/115762781936221554

- Learning vertical coordinates via automatic differentiation of a dynamical core

Tim Whittaker, Seth Taylor, Elsa Cardoso-Bihlo, Alejandro Di Luca, Alex Bihlo

https://arxiv.org/abs/2512.17877 https://mastoxiv.page/@arXiv_physicsaoph_bot/115762405092703069

- RadarGen: Automotive Radar Point Cloud Generation from Cameras

Tomer Borreda, Fangqiang Ding, Sanja Fidler, Shengyu Huang, Or Litany

https://arxiv.org/abs/2512.17897 https://mastoxiv.page/@arXiv_csCV_bot/115762783246540528

- Distributionally Robust Imitation Learning: Layered Control Architecture for Certifiable Autonomy

Gahlawat, Aboudonia, Banik, Hovakimyan, Matni, Ames, Zardini, Speranzon

https://arxiv.org/abs/2512.17899 https://mastoxiv.page/@arXiv_eessSY_bot/115762532257741954

- Re-Depth Anything: Test-Time Depth Refinement via Self-Supervised Re-lighting

Ananta R. Bhattarai, Helge Rhodin

https://arxiv.org/abs/2512.17908 https://mastoxiv.page/@arXiv_csCV_bot/115762785868778349

2025-11-21 22:46:50

Self-learning AI releases NFL picks, score predictions every Week 12 game

https://www.cbssports.com/nfl/news/nfl-week-12-ai-picks-score-predictions-best-bets-today/…

2025-11-18 15:57:18

I urge men — and everyone of a privileged identity — to read the Reddit post in the OP.

We’re the ones who need to hear about these experiences. We’re the ones who need to start learning to recognize it sooner, recognize it at a distance. We’re the ones who need to start sharing notes, sharing warnings, and having our colleagues’ backs.

5/

2026-01-21 13:28:36

Device Association and Resource Allocation for Hierarchical Split Federated Learning in Space-Air-Ground Integrated Network

Haitao Zhao, Xiaoyu Tang, Bo Xu, Jinlong Sun, Linghao Zhang

https://arxiv.org/abs/2601.13817

2025-12-19 18:55:53

Neural Concept, whose 3D product design software uses deep learning to help cut development times, raised a $100M Series C, bringing its total funding to $130M (Chris Metinko/Axios)

https://www.axios.com/pro/enterprise-software-deals/20…

2025-12-22 11:50:31

Crosslisted article(s) found for cs.LG. https://arxiv.org/list/cs.LG/new

[2/3]:

- Sharp Structure-Agnostic Lower Bounds for General Functional Estimation

Jikai Jin, Vasilis Syrgkanis

https://arxiv.org/abs/2512.17341 https://mastoxiv.page/@arXiv_statML_bot/115762312049963700

- Timely Information Updating for Mobile Devices Without and With ML Advice

Yu-Pin Hsu, Yi-Hsuan Tseng

https://arxiv.org/abs/2512.17381 https://mastoxiv.page/@arXiv_csNI_bot/115762180316858485

- SWE-Bench : A Framework for the Scalable Generation of Software Engineering Benchmarks from Open...

Wang, Ramalho, Celestino, Pham, Liu, Sinha, Portillo, Osunwa, Maduekwe

https://arxiv.org/abs/2512.17419 https://mastoxiv.page/@arXiv_csSE_bot/115762487015279852

- Perfect reconstruction of sparse signals using nonconvexity control and one-step RSB message passing

Xiaosi Gu, Ayaka Sakata, Tomoyuki Obuchi

https://arxiv.org/abs/2512.17426 https://mastoxiv.page/@arXiv_statML_bot/115762346108219997

- MULTIAQUA: A multimodal maritime dataset and robust training strategies for multimodal semantic s...

Jon Muhovič, Janez Perš

https://arxiv.org/abs/2512.17450 https://mastoxiv.page/@arXiv_csCV_bot/115762717053353674

- When Data Quality Issues Collide: A Large-Scale Empirical Study of Co-Occurring Data Quality Issu...

Emmanuel Charleson Dapaah, Jens Grabowski

https://arxiv.org/abs/2512.17460 https://mastoxiv.page/@arXiv_csSE_bot/115762500123147574

- Behavioural Effects of Agentic Messaging: A Case Study on a Financial Service Application

Olivier Jeunen, Schaun Wheeler

https://arxiv.org/abs/2512.17462 https://mastoxiv.page/@arXiv_csIR_bot/115762430673347625

- Linear Attention for Joint Power Optimization and User-Centric Clustering in Cell-Free Networks

Irched Chafaa, Giacomo Bacci, Luca Sanguinetti

https://arxiv.org/abs/2512.17466 https://mastoxiv.page/@arXiv_eessSY_bot/115762336277179643

- Translating the Rashomon Effect to Sequential Decision-Making Tasks

Dennis Gross, Jørn Eirik Betten, Helge Spieker

https://arxiv.org/abs/2512.17470 https://mastoxiv.page/@arXiv_csAI_bot/115762556506696539

- Alternating Direction Method of Multipliers for Nonlinear Matrix Decompositions

Atharva Awari, Nicolas Gillis, Arnaud Vandaele

https://arxiv.org/abs/2512.17473 https://mastoxiv.page/@arXiv_eessSP_bot/115762580078964235

- TwinSegNet: A Digital Twin-Enabled Federated Learning Framework for Brain Tumor Analysis

Almustapha A. Wakili, Adamu Hussaini, Abubakar A. Musa, Woosub Jung, Wei Yu

https://arxiv.org/abs/2512.17488 https://mastoxiv.page/@arXiv_csCV_bot/115762726884307901

- Resource-efficient medical image classification for edge devices

Mahsa Lavaei, Zahra Abadi, Salar Beigzad, Alireza Maleki

https://arxiv.org/abs/2512.17515 https://mastoxiv.page/@arXiv_eessIV_bot/115762459510336799

- PathBench-MIL: A Comprehensive AutoML and Benchmarking Framework for Multiple Instance Learning i...

Brussee, Valkema, Weijer, Doeleman, Schrader, Kers

https://arxiv.org/abs/2512.17517 https://mastoxiv.page/@arXiv_csCV_bot/115762741957639051

- HydroGym: A Reinforcement Learning Platform for Fluid Dynamics

Christian Lagemann, et al.

https://arxiv.org/abs/2512.17534 https://mastoxiv.page/@arXiv_physicsfludyn_bot/115762391350754768

- When De-noising Hurts: A Systematic Study of Speech Enhancement Effects on Modern Medical ASR Sys...

Chondhekar, Murukuri, Vasani, Goyal, Badami, Rana, SN, Pandia, Katiyar, Jagadeesh, Gulati

https://arxiv.org/abs/2512.17562 https://mastoxiv.page/@arXiv_csSD_bot/115762423443170715

- Enabling Disaggregated Multi-Stage MLLM Inference via GPU-Internal Scheduling and Resource Sharing

Lingxiao Zhao, Haoran Zhou, Yuezhi Che, Dazhao Cheng

https://arxiv.org/abs/2512.17574 https://mastoxiv.page/@arXiv_csDC_bot/115762425409322293

- SkinGenBench: Generative Model and Preprocessing Effects for Synthetic Dermoscopic Augmentation i...

N. A. Adarsh Pritam, Jeba Shiney O, Sanyam Jain

https://arxiv.org/abs/2512.17585 https://mastoxiv.page/@arXiv_eessIV_bot/115762479150695610

- MAD-OOD: A Deep Learning Cluster-Driven Framework for an Out-of-Distribution Malware Detection an...

Tosin Ige, Christopher Kiekintveld, Aritran Piplai, Asif Rahman, Olukunle Kolade, Sasidhar Kunapuli

https://arxiv.org/abs/2512.17594 https://mastoxiv.page/@arXiv_csCR_bot/115762509298207765

- Confidence-Credibility Aware Weighted Ensembles of Small LLMs Outperform Large LLMs in Emotion De...

Menna Elgabry, Ali Hamdi

https://arxiv.org/abs/2512.17630 https://mastoxiv.page/@arXiv_csCL_bot/115762575512981257

- Generative Multi-Objective Bayesian Optimization with Scalable Batch Evaluations for Sample-Effic...

Madhav R. Muthyala, Farshud Sorourifar, Tianhong Tan, You Peng, Joel A. Paulson

https://arxiv.org/abs/2512.17659 https://mastoxiv.page/@arXiv_statML_bot/115762554519447500

2026-01-10 20:59:07

RMS Hospitality Learning Labs

A practical webinar series from RMS that explores how technology can make hospitality simpler, smarter, and more human...

Great Australian Pods Podcast Directory: https://www.greataustralianpods.com/rms-hospitality-learning-labs/

2026-01-20 03:20:31

We have learnt to look at the world through ratings, reviews, and whether a place is “Instagrammable.”

Nuance is pressed flat into a system of stars.

Even mountains and valleys are scored on Google Maps,

while countless unassuming places slip silently through the net.

I often wonder, ruefully, how much we are missing when only the ranked and the rated rise to the surface.

It begins to feel as though such systems are not merely cataloguing the world,

bu…

2025-12-18 20:39:47

"Taking small steps is crucial. You might start by just learning about your immediate neighborhood, how things actually work, finding ways to intervene in things that are not working well. Understand the water supply and how to keep it clean. ... Share. Share what you have."

—YDS professor Willie James Jennings in this interview in the new issue of Reflections, focused on building hope for a living planet

2025-11-18 15:49:24

⏰ Electric Vehicle Range Prediction Models: A Review of Machine Learning, Mathematical, and Simulation Approaches

#ev

2025-12-22 11:50:19

Crosslisted article(s) found for cs.LG. https://arxiv.org/list/cs.LG/new

[1/3]:

- Optimizing Text Search: A Novel Pattern Matching Algorithm Based on Ukkonen's Approach

Xinyu Guan, Shaohua Zhang

https://arxiv.org/abs/2512.16927 https://mastoxiv.page/@arXiv_csDS_bot/115762062326187898

- SpIDER: Spatially Informed Dense Embedding Retrieval for Software Issue Localization

Shravan Chaudhari, Rahul Thomas Jacob, Mononito Goswami, Jiajun Cao, Shihab Rashid, Christian Bock

https://arxiv.org/abs/2512.16956 https://mastoxiv.page/@arXiv_csSE_bot/115762248476963893

- MemoryGraft: Persistent Compromise of LLM Agents via Poisoned Experience Retrieval

Saksham Sahai Srivastava, Haoyu He

https://arxiv.org/abs/2512.16962 https://mastoxiv.page/@arXiv_csCR_bot/115762140339109012

- Colormap-Enhanced Vision Transformers for MRI-Based Multiclass (4-Class) Alzheimer's Disease Clas...

Faisal Ahmed

https://arxiv.org/abs/2512.16964 https://mastoxiv.page/@arXiv_eessIV_bot/115762196702065869

- Probing Scientific General Intelligence of LLMs with Scientist-Aligned Workflows

Wanghan Xu, et al.

https://arxiv.org/abs/2512.16969 https://mastoxiv.page/@arXiv_csAI_bot/115762050529328276

- PAACE: A Plan-Aware Automated Agent Context Engineering Framework

Kamer Ali Yuksel

https://arxiv.org/abs/2512.16970 https://mastoxiv.page/@arXiv_csAI_bot/115762054461584205

- A Women's Health Benchmark for Large Language Models

Elisabeth Gruber, et al.

https://arxiv.org/abs/2512.17028 https://mastoxiv.page/@arXiv_csCL_bot/115762049873946945

- Perturb Your Data: Paraphrase-Guided Training Data Watermarking

Pranav Shetty, Mirazul Haque, Petr Babkin, Zhiqiang Ma, Xiaomo Liu, Manuela Veloso

https://arxiv.org/abs/2512.17075 https://mastoxiv.page/@arXiv_csCL_bot/115762077400293945

- Disentangled representations via score-based variational autoencoders

Benjamin S. H. Lyo, Eero P. Simoncelli, Cristina Savin

https://arxiv.org/abs/2512.17127 https://mastoxiv.page/@arXiv_statML_bot/115762251753966702

- Biosecurity-Aware AI: Agentic Risk Auditing of Soft Prompt Attacks on ESM-Based Variant Predictors

Huixin Zhan

https://arxiv.org/abs/2512.17146 https://mastoxiv.page/@arXiv_csCR_bot/115762318582013305

- Application of machine learning to predict food processing level using Open Food Facts

Arora, Chauhan, Rana, Aditya, Bhagat, Kumar, Kumar, Semar, Singh, Bagler

https://arxiv.org/abs/2512.17169 https://mastoxiv.page/@arXiv_qbioBM_bot/115762302873829397

- Systemic Risk Radar: A Multi-Layer Graph Framework for Early Market Crash Warning

Sandeep Neela

https://arxiv.org/abs/2512.17185 https://mastoxiv.page/@arXiv_qfinRM_bot/115762275982224870

- Do Foundational Audio Encoders Understand Music Structure?

Keisuke Toyama, Zhi Zhong, Akira Takahashi, Shusuke Takahashi, Yuki Mitsufuji

https://arxiv.org/abs/2512.17209 https://mastoxiv.page/@arXiv_csSD_bot/115762341541572505

- CheXPO-v2: Preference Optimization for Chest X-ray VLMs with Knowledge Graph Consistency

Xiao Liang, Yuxuan An, Di Wang, Jiawei Hu, Zhicheng Jiao, Bin Jing, Quan Wang

https://arxiv.org/abs/2512.17213 https://mastoxiv.page/@arXiv_csCV_bot/115762574180736975

- Machine Learning Assisted Parameter Tuning on Wavelet Transform Amorphous Radial Distribution Fun...

Deriyan Senjaya, Stephen Ekaputra Limantoro

https://arxiv.org/abs/2512.17245 https://mastoxiv.page/@arXiv_condmatmtrlsci_bot/115762447037143855

- AlignDP: Hybrid Differential Privacy with Rarity-Aware Protection for LLMs

Madhava Gaikwad

https://arxiv.org/abs/2512.17251 https://mastoxiv.page/@arXiv_csCR_bot/115762396593872943

- Practical Framework for Privacy-Preserving and Byzantine-robust Federated Learning

Baolei Zhang, Minghong Fang, Zhuqing Liu, Biao Yi, Peizhao Zhou, Yuan Wang, Tong Li, Zheli Liu

https://arxiv.org/abs/2512.17254 https://mastoxiv.page/@arXiv_csCR_bot/115762402470985707

- Verifiability-First Agents: Provable Observability and Lightweight Audit Agents for Controlling A...

Abhivansh Gupta

https://arxiv.org/abs/2512.17259 https://mastoxiv.page/@arXiv_csMA_bot/115762225538364939

- Warmer for Less: A Cost-Efficient Strategy for Cold-Start Recommendations at Pinterest

Saeed Ebrahimi, Weijie Jiang, Jaewon Yang, Olafur Gudmundsson, Yucheng Tu, Huizhong Duan

https://arxiv.org/abs/2512.17277 https://mastoxiv.page/@arXiv_csIR_bot/115762214396869930

- LibriVAD: A Scalable Open Dataset with Deep Learning Benchmarks for Voice Activity Detection

Ioannis Stylianou, Achintya kr. Sarkar, Nauman Dawalatabad, James Glass, Zheng-Hua Tan

https://arxiv.org/abs/2512.17281 https://mastoxiv.page/@arXiv_csSD_bot/115762361858560703

- Penalized Fair Regression for Multiple Groups in Chronic Kidney Disease

Carter H. Nakamoto, Lucia Lushi Chen, Agata Foryciarz, Sherri Rose

https://arxiv.org/abs/2512.17340 https://mastoxiv.page/@arXiv_statME_bot/115762446402738033

2025-12-16 20:21:00

Want to break into climate work but don't know where to start? Terra.do's Learning for Action fellowship might be your answer.

This 12-week program goes deep on real-world climate solutions—beyond just clean energy. You'll learn the science, explore diverse solutions, and connect with a global community, all while working full-time (6-10 hrs/week).

Financial aid available.

2025-12-27 13:11:48

I've spent the past year learning Morse code, and it's ended up being a great deal of fun. I've had a lot of questions. I've tried to share what I've learned with others as I've gone along.

To celebrate the end of the year, I wrote a combination of information, story, and advice. How to get started, mobile apps and websites, books, getting on the air, learning to send, POTA, SST, keys and keyers. I'm not fluent or expert yet, but that means that I still remember what's hard!

2025-11-16 13:19:45

I'm still "e-learning" how to generate aura and hype moments at a market-competitive rate so bear with me

2025-12-22 14:26:15

Colts vs. 49ers SGP: 'Monday Night Football' same-game parlay picks, bets, props from SportsLine AI

https://www.cbssports.com/nfl/news/colts-49ers-sgp…

2025-12-22 13:54:55

Replaced article(s) found for cs.LG. https://arxiv.org/list/cs.LG/new

[4/5]:

- Sample, Don't Search: Rethinking Test-Time Alignment for Language Models

Gonçalo Faria, Noah A. Smith

https://arxiv.org/abs/2504.03790 https://mastoxiv.page/@arXiv_csCL_bot/114301112970577326

- A Survey on Archetypal Analysis

Aleix Alcacer, Irene Epifanio, Sebastian Mair, Morten Mørup

https://arxiv.org/abs/2504.12392 https://mastoxiv.page/@arXiv_statME_bot/114357826909813483

- The Stochastic Occupation Kernel (SOCK) Method for Learning Stochastic Differential Equations

Michael L. Wells, Kamel Lahouel, Bruno Jedynak

https://arxiv.org/abs/2505.11622 https://mastoxiv.page/@arXiv_statML_bot/114539065460187982

- BOLT: Block-Orthonormal Lanczos for Trace estimation of matrix functions

Kingsley Yeon, Promit Ghosal, Mihai Anitescu

https://arxiv.org/abs/2505.12289 https://mastoxiv.page/@arXiv_mathNA_bot/114539035462135281

- Clustering and Pruning in Causal Data Fusion

Otto Tabell, Santtu Tikka, Juha Karvanen

https://arxiv.org/abs/2505.15215 https://mastoxiv.page/@arXiv_statML_bot/114550346291754635

- On the performance of multi-fidelity and reduced-dimensional neural emulators for inference of ph...

Chloe H. Choi, Andrea Zanoni, Daniele E. Schiavazzi, Alison L. Marsden

https://arxiv.org/abs/2506.11683 https://mastoxiv.page/@arXiv_statML_bot/114692410563481289

- Beyond Force Metrics: Pre-Training MLFFs for Stable MD Simulations

Maheshwari, Tang, Ock, Kolluru, Farimani, Kitchin

https://arxiv.org/abs/2506.14850 https://mastoxiv.page/@arXiv_physicschemph_bot/114709402590755731

- Quantifying Uncertainty in the Presence of Distribution Shifts

Yuli Slavutsky, David M. Blei

https://arxiv.org/abs/2506.18283 https://mastoxiv.page/@arXiv_statML_bot/114738165218533987

- ZKPROV: A Zero-Knowledge Approach to Dataset Provenance for Large Language Models

Mina Namazi, Alexander Nemecek, Erman Ayday

https://arxiv.org/abs/2506.20915 https://mastoxiv.page/@arXiv_csCR_bot/114754394485208892

- SpecCLIP: Aligning and Translating Spectroscopic Measurements for Stars

Zhao, Huang, Xue, Kong, Liu, Tang, Beers, Ting, Luo

https://arxiv.org/abs/2507.01939 https://mastoxiv.page/@arXiv_astrophIM_bot/114788369702591337

- Towards Facilitated Fairness Assessment of AI-based Skin Lesion Classifiers Through GenAI-based I...

Ko Watanabe, Stanislav Frolov, Aya Hassan, David Dembinsky, Adriano Lucieri, Andreas Dengel

https://arxiv.org/abs/2507.17860 https://mastoxiv.page/@arXiv_csCV_bot/114912976717523345

- PASS: Probabilistic Agentic Supernet Sampling for Interpretable and Adaptive Chest X-Ray Reasoning

Yushi Feng, Junye Du, Yingying Hong, Qifan Wang, Lequan Yu

https://arxiv.org/abs/2508.10501 https://mastoxiv.page/@arXiv_csAI_bot/115032101532614110

- Unified Acoustic Representations for Screening Neurological and Respiratory Pathologies from Voice

Ran Piao, Yuan Lu, Hareld Kemps, Tong Xia, Aaqib Saeed

https://arxiv.org/abs/2508.20717 https://mastoxiv.page/@arXiv_csSD_bot/115111255835875066

- Machine Learning-Driven Predictive Resource Management in Complex Science Workflows

Tasnuva Chowdhury, et al.

https://arxiv.org/abs/2509.11512 https://mastoxiv.page/@arXiv_csDC_bot/115213444524490263

- MatchFixAgent: Language-Agnostic Autonomous Repository-Level Code Translation Validation and Repair

Ali Reza Ibrahimzada, Brandon Paulsen, Reyhaneh Jabbarvand, Joey Dodds, Daniel Kroening

https://arxiv.org/abs/2509.16187 https://mastoxiv.page/@arXiv_csSE_bot/115247172280557686

- Automated Machine Learning Pipeline: Large Language Models-Assisted Automated Dataset Generation ...

Adam Lahouari, Jutta Rogal, Mark E. Tuckerman

https://arxiv.org/abs/2509.21647 https://mastoxiv.page/@arXiv_condmatmtrlsci_bot/115286737423175311

- Quantifying the Impact of Structured Output Format on Large Language Models through Causal Inference

Han Yuan, Yue Zhao, Li Zhang, Wuqiong Luo, Zheng Ma

https://arxiv.org/abs/2509.21791 https://mastoxiv.page/@arXiv_csCL_bot/115287166674809413

- The Generation Phases of Flow Matching: a Denoising Perspective

Anne Gagneux, Ségolène Martin, Rémi Gribonval, Mathurin Massias

https://arxiv.org/abs/2510.24830 https://mastoxiv.page/@arXiv_csCV_bot/115462527449411627

- Data-driven uncertainty-aware seakeeping prediction of the Delft 372 catamaran using ensemble Han...

Giorgio Palma, Andrea Serani, Matteo Diez

https://arxiv.org/abs/2511.04461 https://mastoxiv.page/@arXiv_eessSY_bot/115507785247809767

- Generalized infinite dimensional Alpha-Procrustes based geometries

Salvish Goomanee, Andi Han, Pratik Jawanpuria, Bamdev Mishra

https://arxiv.org/abs/2511.09801 https://mastoxiv.page/@arXiv_statML_bot/115547135711272091

2025-12-02 17:18:14

I’m sympathetic to this from @…, but my patience for that learning process is short because the wrongheaded “plastic recycling is 100% hoax!!” canard quickly gets turned into “ALL recycling is 100% hoax!!” by right-wingers. It’s a learning process, yes, but a learning process that bad actors have been actively exploiting for at least a decade.

https://mastodon.social/@schwa/115651093211678205

2025-11-19 06:38:18

Google is still getting all the information collected by Nest Learning Thermostats,

including data measured by their sensors, such as temperature, humidity, ambient light, and motion.

“I was under the impression that the Google connection would be severed along with the remote functionality,

however that connection is not severed, and instead is a one-way street,” Kociemba says.

2025-11-17 04:31:01

Participants in the Learning for Action fellowship are gaining vital skills as they engage with climate solutions and expert insights, empowering them to tackle climate change effectively. https://www.terra.do/blog/answering-your-lfa-questions/

2025-12-17 13:30:35

Coursera plans to acquire rival online education platform Udemy in an all-stock deal valued at $2.5B, combining two of the largest US learning platforms (Akash Sriram/Reuters)

https://www.reuters.com/business/coursera-udemy-merge-deal-v…

2025-12-22 13:54:24

Replaced article(s) found for cs.LG. https://arxiv.org/list/cs.LG/new

[1/5]:

- Feed Two Birds with One Scone: Exploiting Wild Data for Both Out-of-Distribution Generalization a...

Haoyue Bai, Gregory Canal, Xuefeng Du, Jeongyeol Kwon, Robert Nowak, Yixuan Li

https://arxiv.org/abs/2306.09158

- Sparse, Efficient and Explainable Data Attribution with DualXDA

Galip Ümit Yolcu, Moritz Weckbecker, Thomas Wiegand, Wojciech Samek, Sebastian Lapuschkin

https://arxiv.org/abs/2402.12118 https://mastoxiv.page/@arXiv_csLG_bot/111962593972369958

- HGQ: High Granularity Quantization for Real-time Neural Networks on FPGAs

Sun, Que, Årrestad, Loncar, Ngadiuba, Luk, Spiropulu

https://arxiv.org/abs/2405.00645 https://mastoxiv.page/@arXiv_csLG_bot/112370274737558603

- On the Identification of Temporally Causal Representation with Instantaneous Dependence

Li, Shen, Zheng, Cai, Song, Gong, Chen, Zhang

https://arxiv.org/abs/2405.15325 https://mastoxiv.page/@arXiv_csLG_bot/112511890051553111

- Basis Selection: Low-Rank Decomposition of Pretrained Large Language Models for Target Applications

Yang Li, Daniel Agyei Asante, Changsheng Zhao, Ernie Chang, Yangyang Shi, Vikas Chandra

https://arxiv.org/abs/2405.15877 https://mastoxiv.page/@arXiv_csLG_bot/112517547424098076

- Privacy Bias in Language Models: A Contextual Integrity-based Auditing Metric

Yan Shvartzshnaider, Vasisht Duddu

https://arxiv.org/abs/2409.03735 https://mastoxiv.page/@arXiv_csLG_bot/113089789682783135

- Low-Rank Filtering and Smoothing for Sequential Deep Learning

Joanna Sliwa, Frank Schneider, Nathanael Bosch, Agustinus Kristiadi, Philipp Hennig

https://arxiv.org/abs/2410.06800 https://mastoxiv.page/@arXiv_csLG_bot/113283021321510736

- Hierarchical Multimodal LLMs with Semantic Space Alignment for Enhanced Time Series Classification

Xiaoyu Tao, Tingyue Pan, Mingyue Cheng, Yucong Luo, Qi Liu, Enhong Chen

https://arxiv.org/abs/2410.18686 https://mastoxiv.page/@arXiv_csLG_bot/113367101100828901

- Fairness via Independence: A (Conditional) Distance Covariance Framework

Ruifan Huang, Haixia Liu

https://arxiv.org/abs/2412.00720 https://mastoxiv.page/@arXiv_csLG_bot/113587817648503815

- Data for Mathematical Copilots: Better Ways of Presenting Proofs for Machine Learning

Simon Frieder, et al.

https://arxiv.org/abs/2412.15184 https://mastoxiv.page/@arXiv_csLG_bot/113683924322164777

- Pairwise Elimination with Instance-Dependent Guarantees for Bandits with Cost Subsidy

Ishank Juneja, Carlee Joe-Wong, Osman Yağan

https://arxiv.org/abs/2501.10290 https://mastoxiv.page/@arXiv_csLG_bot/113859392622871057

- Towards Human-Guided, Data-Centric LLM Co-Pilots

Evgeny Saveliev, Jiashuo Liu, Nabeel Seedat, Anders Boyd, Mihaela van der Schaar

https://arxiv.org/abs/2501.10321 https://mastoxiv.page/@arXiv_csLG_bot/113859392688054204

- Regularized Langevin Dynamics for Combinatorial Optimization

Shengyu Feng, Yiming Yang

https://arxiv.org/abs/2502.00277

- Generating Samples to Probe Trained Models

Eren Mehmet Kıral, Nurşen Aydın, Ş. İlker Birbil

https://arxiv.org/abs/2502.06658 https://mastoxiv.page/@arXiv_csLG_bot/113984059089245671

- On Agnostic PAC Learning in the Small Error Regime

Julian Asilis, Mikael Møller Høgsgaard, Grigoris Velegkas

https://arxiv.org/abs/2502.09496 https://mastoxiv.page/@arXiv_csLG_bot/114000974082372598

- Preconditioned Inexact Stochastic ADMM for Deep Model

Shenglong Zhou, Ouya Wang, Ziyan Luo, Yongxu Zhu, Geoffrey Ye Li

https://arxiv.org/abs/2502.10784 https://mastoxiv.page/@arXiv_csLG_bot/114023667639951005

- On the Effect of Sampling Diversity in Scaling LLM Inference

Wang, Liu, Chen, Light, Liu, Chen, Zhang, Cheng

https://arxiv.org/abs/2502.11027 https://mastoxiv.page/@arXiv_csLG_bot/114023688225233656

- How to use score-based diffusion in earth system science: A satellite nowcasting example

Randy J. Chase, Katherine Haynes, Lander Ver Hoef, Imme Ebert-Uphoff

https://arxiv.org/abs/2505.10432 https://mastoxiv.page/@arXiv_csLG_bot/114516300594057680

- PEAR: Equal Area Weather Forecasting on the Sphere

Hampus Linander, Christoffer Petersson, Daniel Persson, Jan E. Gerken

https://arxiv.org/abs/2505.17720 https://mastoxiv.page/@arXiv_csLG_bot/114572963019603744

- Train Sparse Autoencoders Efficiently by Utilizing Features Correlation

Vadim Kurochkin, Yaroslav Aksenov, Daniil Laptev, Daniil Gavrilov, Nikita Balagansky

https://arxiv.org/abs/2505.22255 https://mastoxiv.page/@arXiv_csLG_bot/114589956040892075

- A Certified Unlearning Approach without Access to Source Data

Umit Yigit Basaran, Sk Miraj Ahmed, Amit Roy-Chowdhury, Basak Guler

https://arxiv.org/abs/2506.06486 https://mastoxiv.page/@arXiv_csLG_bot/114658421178857085

2026-01-19 18:01:54

Self-learning AI generates NFL picks, exact score predictions for 2026 NFC, AFC Championship Games

https://www.cbssports.com/nfl/news/nfl-afc-nfc-championship-games-20…

2025-11-16 16:16:47

Lions vs. Eagles NFL player props, SGP: Self-learning AI backs Jahmyr Gibbs Over 13.5 carries on 'SNF'

https://www.cbssports.com/nfl/news/lions-eagles-nf…

2025-12-22 10:32:10

Polyharmonic Cascade

Yuriy N. Bakhvalov

https://arxiv.org/abs/2512.17671 https://arxiv.org/pdf/2512.17671 https://arxiv.org/html/2512.17671

arXiv:2512.17671v1 Announce Type: new

Abstract: This paper presents a deep machine learning architecture, the "polyharmonic cascade" -- a sequence of packages of polyharmonic splines, where each layer is rigorously derived from the theory of random functions and the principles of indifference. This makes it possible to approximate nonlinear functions of arbitrary complexity while preserving global smoothness and a probabilistic interpretation. For the polyharmonic cascade, a training method alternative to gradient descent is proposed: instead of directly optimizing the coefficients, one solves a single global linear system on each batch with respect to the function values at fixed "constellations" of nodes. This yields synchronized updates of all layers, preserves the probabilistic interpretation of individual layers and theoretical consistency with the original model, and scales well: all computations reduce to 2D matrix operations efficiently executed on a GPU. Fast learning without overfitting on MNIST is demonstrated.
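
The building block named in this abstract is the polyharmonic spline. As a minimal sketch (not the paper's cascade, node constellations, or batch training rule), the Python below fits a single 2D polyharmonic spline, the thin-plate kernel phi(r) = r^2 log r plus an affine term, by solving one linear system that matches given function values at a fixed set of nodes. The node count, test function, and helper names are hypothetical.

```python
import numpy as np

def polyharmonic_kernel(r):
    # Thin-plate spline, the 2D polyharmonic kernel phi(r) = r^2 log r, with phi(0) = 0
    with np.errstate(divide="ignore", invalid="ignore"):
        phi = r**2 * np.log(r)
    return np.where(r > 0, phi, 0.0)

def fit_polyharmonic(nodes, values):
    """Solve one global linear system for kernel weights w and affine terms a."""
    n = len(nodes)
    r = np.linalg.norm(nodes[:, None, :] - nodes[None, :, :], axis=-1)
    P = np.hstack([np.ones((n, 1)), nodes])      # affine polynomial part: 1, x, y
    A = np.zeros((n + 3, n + 3))
    A[:n, :n] = polyharmonic_kernel(r)
    A[:n, n:] = P
    A[n:, :n] = P.T                              # side conditions P^T w = 0
    rhs = np.concatenate([values, np.zeros(3)])
    coef = np.linalg.solve(A, rhs)
    return coef[:n], coef[n:]

def evaluate(nodes, w, a, x):
    # Spline value: sum_j w_j * phi(|x - node_j|) + a0 + a1 * x + a2 * y
    r = np.linalg.norm(x[:, None, :] - nodes[None, :, :], axis=-1)
    return polyharmonic_kernel(r) @ w + a[0] + x @ a[1:]

rng = np.random.default_rng(0)
nodes = rng.uniform(0.0, 1.0, size=(40, 2))
values = np.sin(3 * nodes[:, 0]) * np.cos(2 * nodes[:, 1])   # hypothetical target function
w, a = fit_polyharmonic(nodes, values)

queries = rng.uniform(0.0, 1.0, size=(5, 2))
print(evaluate(nodes, w, a, queries))
print(np.sin(3 * queries[:, 0]) * np.cos(2 * queries[:, 1]))
```

The cascade described above stacks several such spline layers and solves its training system with respect to node values rather than coefficients; that part is not reproduced here.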

2025-12-17 15:48:22

Whistles have become a popular raid alert tool in cities across the country

– New Yorkers wear them around their necks to warn neighbors,

the people of New Orleans blast them outside ICE facilities

and Charlotte residents used them to ward off Customs and Border Protection officials.

While strongly associated with Chicago, the tactic is actually one that city organizers learned in part from groups in Los Angeles.

Its spread is illustrative of the many ways cit…

2025-11-18 15:12:01

Self-learning AI releases NFL picks, score predictions every Week 12 game

https://www.cbssports.com/nfl/news/nfl-week-12-picks-score-predictions-predictions-best-bets/

2025-12-22 10:33:00

Mitigating Forgetting in Low Rank Adaptation

Joanna Sliwa, Frank Schneider, Philipp Hennig, Jose Miguel Hernandez-Lobato

https://arxiv.org/abs/2512.17720 https://arxiv.org/pdf/2512.17720 https://arxiv.org/html/2512.17720

arXiv:2512.17720v1 Announce Type: new

Abstract: Parameter-efficient fine-tuning methods, such as Low-Rank Adaptation (LoRA), enable fast specialization of large pre-trained models to different downstream applications. However, this process often leads to catastrophic forgetting of the model's prior domain knowledge. We address this issue with LaLoRA, a weight-space regularization technique that applies a Laplace approximation to Low-Rank Adaptation. Our approach estimates the model's confidence in each parameter and constrains updates in high-curvature directions, preserving prior knowledge while enabling efficient target-domain learning. By applying the Laplace approximation only to the LoRA weights, the method remains lightweight. We evaluate LaLoRA by fine-tuning a Llama model for mathematical reasoning and demonstrate an improved learning-forgetting trade-off, which can be directly controlled via the method's regularization strength. We further explore different loss landscape curvature approximations for estimating parameter confidence, analyze the effect of the data used for the Laplace approximation, and study robustness across hyperparameters.
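
The effect of a Laplace-based penalty can be seen on a toy quadratic. This Python sketch assumes a diagonal curvature estimate (the abstract notes that several curvature approximations are explored) and a target-domain loss of 0.5 * ||theta - theta_target||^2, so the regularized optimum has a closed form. All numbers are hypothetical. Coordinates with high curvature should move least, and raising `strength` trades target-domain fit for less drift, mirroring the learning-forgetting trade-off described above.

```python
import numpy as np

def laplace_penalty(theta, theta_prior, curvature, strength):
    # Diagonal Laplace approximation: (strength / 2) * sum_i h_i * (theta_i - theta_prior_i)^2
    return 0.5 * strength * np.sum(curvature * (theta - theta_prior) ** 2)

def regularized_optimum(theta_target, theta_prior, curvature, strength):
    # Minimizer of 0.5 * ||theta - theta_target||^2 + laplace_penalty(...):
    # zero gradient gives theta * (1 + strength*h) = theta_target + strength*h*theta_prior
    return (theta_target + strength * curvature * theta_prior) / (1.0 + strength * curvature)

theta_prior = np.zeros(3)                  # hypothetical adapter weights before fine-tuning
curvature = np.array([10.0, 1.0, 0.01])    # hypothetical diagonal curvature from source data
theta_target = np.ones(3)                  # hypothetical optimum of the target loss alone

for strength in [0.0, 0.1, 1.0, 10.0]:
    theta = regularized_optimum(theta_target, theta_prior, curvature, strength)
    target_loss = 0.5 * np.sum((theta - theta_target) ** 2)
    drift = laplace_penalty(theta, theta_prior, curvature, 1.0)
    print(f"strength={strength:5.1f}  theta={np.round(theta, 3)}  "
          f"target_loss={target_loss:.3f}  drift={drift:.3f}")
```

In an actual LoRA setting the penalty would be added to the fine-tuning loss and minimized by gradient descent over the adapter weights only; the closed form here just makes the trade-off easy to read.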

2025-12-19 04:53:30

Google’s vibe-coding tool, Opal,

is making its way to Gemini.

The company on Wednesday said it is integrating the tool,

which lets you build AI-powered mini apps,

inside the Gemini web app,

allowing users to create their own custom apps,

which Google calls Gems.

Introduced in 2024,

Gems are customized versions of Gemini designed for specific tasks or scenarios.

For instance, some of Google’s pre-made Gems include

a learning coach,…

2025-12-22 10:32:50

Spatially-informed transformers: Injecting geostatistical covariance biases into self-attention for spatio-temporal forecasting

Yuri Calleo

https://arxiv.org/abs/2512.17696 https://arxiv.org/pdf/2512.17696 https://arxiv.org/html/2512.17696

arXiv:2512.17696v1 Announce Type: new

Abstract: The modeling of high-dimensional spatio-temporal processes presents a fundamental dichotomy between the probabilistic rigor of classical geostatistics and the flexible, high-capacity representations of deep learning. While Gaussian processes offer theoretical consistency and exact uncertainty quantification, their prohibitive computational scaling renders them impractical for massive sensor networks. Conversely, modern transformer architectures excel at sequence modeling but inherently lack a geometric inductive bias, treating spatial sensors as permutation-invariant tokens without a native understanding of distance. In this work, we propose a spatially-informed transformer, a hybrid architecture that injects a geostatistical inductive bias directly into the self-attention mechanism via a learnable covariance kernel. By formally decomposing the attention structure into a stationary physical prior and a non-stationary data-driven residual, we impose a soft topological constraint that favors spatially proximal interactions while retaining the capacity to model complex dynamics. We demonstrate the phenomenon of "Deep Variography", where the network successfully recovers the true spatial decay parameters of the underlying process end-to-end via backpropagation. Extensive experiments on synthetic Gaussian random fields and real-world traffic benchmarks confirm that our method outperforms state-of-the-art graph neural networks. Furthermore, rigorous statistical validation confirms that the proposed method delivers not only superior predictive accuracy but also well-calibrated probabilistic forecasts, effectively bridging the gap between physics-aware modeling and data-driven learning.
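
One simple way to inject a covariance prior into self-attention is to add the log of a stationary kernel, evaluated at pairwise sensor distances, to the attention logits, so the softmax weights become proportional to k(d_ij) * exp(q_i . k_j / sqrt(d)). The Python sketch below assumes an exponential kernel with a fixed length scale; the paper's exact decomposition may differ, and in a trained model `length_scale` would be a learnable parameter. With zero queries and keys only the spatial prior remains, so attention from sensor 0 should decay with distance.

```python
import numpy as np

def exponential_covariance(dist, sill=1.0, length_scale=1.0):
    # Stationary exponential covariance k(d) = sill * exp(-d / length_scale)
    return sill * np.exp(-dist / length_scale)

def spatial_attention(q, k, v, coords, length_scale=1.0):
    """Softmax attention with an additive log-covariance bias over sensor distances."""
    d_model = q.shape[-1]
    logits = q @ k.T / np.sqrt(d_model)                      # data-driven term
    dist = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    prior = np.log(exponential_covariance(dist, length_scale=length_scale))
    logits = logits + prior                                  # stationary physical prior
    logits = logits - logits.max(axis=-1, keepdims=True)     # numerical stability
    weights = np.exp(logits)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

coords = np.array([[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [4.0, 0.0], [8.0, 0.0]])
q = k = np.zeros((5, 4))        # hypothetical: no learned query/key signal
v = np.eye(5)
out, weights = spatial_attention(q, k, v, coords, length_scale=2.0)
print(np.round(weights[0], 3))
```

Once queries and keys carry learned signal, the QK term can override the prior where the data call for it, which is the "soft" constraint the abstract refers to.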

toXiv_bot_toot

2025-12-22 13:55:06

Replaced article(s) found for cs.LG. https://arxiv.org/list/cs.LG/new

[5/5]:

- CLAReSNet: When Convolution Meets Latent Attention for Hyperspectral Image Classification

Asmit Bandyopadhyay, Anindita Das Bhattacharjee, Rakesh Das

https://arxiv.org/abs/2511.12346 https://mastoxiv.page/@arXiv_csCV_bot/115570753208147835

- Safeguarded Stochastic Polyak Step Sizes for Non-smooth Optimization: Robust Performance Without ...

Dimitris Oikonomou, Nicolas Loizou

https://arxiv.org/abs/2512.02342 https://mastoxiv.page/@arXiv_mathOC_bot/115654870924418771

- Predictive Modeling of I/O Performance for Machine Learning Training Pipelines: A Data-Driven App...

Karthik Prabhakar, Durgamadhab Mishra

https://arxiv.org/abs/2512.06699 https://mastoxiv.page/@arXiv_csPF_bot/115688618582182232

- Minimum Bayes Risk Decoding for Error Span Detection in Reference-Free Automatic Machine Translat...

Lyu, Song, Kamigaito, Ding, Tanaka, Utiyama, Funakoshi, Okumura

https://arxiv.org/abs/2512.07540 https://mastoxiv.page/@arXiv_csCL_bot/115689532163491162

- In-Context Learning for Seismic Data Processing

Fabian Fuchs, Mario Ruben Fernandez, Norman Ettrich, Janis Keuper

https://arxiv.org/abs/2512.11575 https://mastoxiv.page/@arXiv_csCV_bot/115723040285820239

- Journey Before Destination: On the importance of Visual Faithfulness in Slow Thinking

Rheeya Uppaal, Phu Mon Htut, Min Bai, Nikolaos Pappas, Zheng Qi, Sandesh Swamy

https://arxiv.org/abs/2512.12218 https://mastoxiv.page/@arXiv_csCV_bot/115729165330908574

- Non-Resolution Reasoning (NRR): A Computational Framework for Contextual Identity and Ambiguity P...

Kei Saito

https://arxiv.org/abs/2512.13478 https://mastoxiv.page/@arXiv_csCL_bot/115729234145554554

- Stylized Synthetic Augmentation further improves Corruption Robustness

Georg Siedel, Rojan Regmi, Abhirami Anand, Weijia Shao, Silvia Vock, Andrey Morozov

https://arxiv.org/abs/2512.15675 https://mastoxiv.page/@arXiv_csCV_bot/115740141862163631

- mimic-video: Video-Action Models for Generalizable Robot Control Beyond VLAs

Jonas Pai, Liam Achenbach, Victoriano Montesinos, Benedek Forrai, Oier Mees, Elvis Nava

https://arxiv.org/abs/2512.15692 https://mastoxiv.page/@arXiv_csRO_bot/115739947869830764


2026-01-18 04:56:10

A damning new study could put AI companies on the defensive.

In it, Stanford and Yale researchers found compelling evidence that AI models are actually copying all that data, not “learning” from it.

Specifically, four prominent LLMs (OpenAI’s GPT-4.1, Google’s Gemini 2.5 Pro, xAI’s Grok 3, and Anthropic’s Claude 3.7 Sonnet) happily reproduced lengthy excerpts from popular, and protected, works with a stunning degree of accuracy.

They fou…

2025-12-16 14:31:31

Self-learning AI releases NFL picks, score predictions every Week 16 game

https://www.cbssports.com/nfl/news/nfl-week-16-picks-ai-score-predictions/

2026-01-13 16:11:48

Self-learning AI generates NFL picks, score predictions for every 2026 divisional round matchup

https://www.cbssports.com/nfl/news/nfl-divisional-round-2026-picks-ai-score-predi…

2025-11-09 16:46:19

Steelers vs. Chargers NFL player props: Self-learning AI backs Justin Herbert Over 252.5 passing on SNF

https://www.cbssports.com/nfl/news/steelers-chargers-nfl-pla…

2025-12-22 10:32:30

You Only Train Once: Differentiable Subset Selection for Omics Data

Daphné Chopard, Jorge da Silva Gonçalves, Irene Cannistraci, Thomas M. Sutter, Julia E. Vogt

https://arxiv.org/abs/2512.17678 https://arxiv.org/pdf/2512.17678 https://arxiv.org/html/2512.17678

arXiv:2512.17678v1 Announce Type: new

Abstract: Selecting compact and informative gene subsets from single-cell transcriptomic data is essential for biomarker discovery, improving interpretability, and cost-effective profiling. However, most existing feature selection approaches either operate as multi-stage pipelines or rely on post hoc feature attribution, making selection and prediction weakly coupled. In this work, we present YOTO (you only train once), an end-to-end framework that jointly identifies discrete gene subsets and performs prediction within a single differentiable architecture. In our model, the prediction task directly guides which genes are selected, while the learned subsets, in turn, shape the predictive representation. This closed feedback loop enables the model to iteratively refine both what it selects and how it predicts during training. Unlike existing approaches, YOTO enforces sparsity so that only the selected genes contribute to inference, eliminating the need to train additional downstream classifiers. Through a multi-task learning design, the model learns shared representations across related objectives, allowing partially labeled datasets to inform one another, and discovering gene subsets that generalize across tasks without additional training steps. We evaluate YOTO on two representative single-cell RNA-seq datasets, showing that it consistently outperforms state-of-the-art baselines. These results demonstrate that sparse, end-to-end, multi-task gene subset selection improves predictive performance and yields compact and meaningful gene subsets, advancing biomarker discovery and single-cell analysis.
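The abstract does not say how YOTO makes discrete gene selection differentiable. As a hedged illustration of one generic technique (not necessarily the one YOTO uses), the sketch below relaxes a k-hot gene mask with successive Gumbel-softmax rounds for training, then switches to a hard top-k mask at inference so that unselected genes contribute nothing, in line with the abstract's sparsity claim. All function names, the temperature, and the example scores are hypothetical. NumPy has no autodiff; in a framework such as PyTorch or JAX, gradients would flow through the soft mask into the selection logits.

```python
import numpy as np


def hard_topk_mask(logits, k):
    """k-hot mask (k >= 1) selecting the genes with the k largest logits."""
    mask = np.zeros_like(logits)
    mask[np.argsort(logits)[-k:]] = 1.0
    return mask


def relaxed_topk_mask(logits, k, temperature, rng):
    """Continuous relaxation of the k-hot mask (successive Gumbel-softmax).

    Each of the k rounds takes a softmax over the Gumbel-perturbed scores,
    down-weighting genes that earlier rounds already (softly) picked; the
    rounds are summed, so the soft mask always sums to k.
    """
    u = np.clip(rng.uniform(size=logits.shape), 1e-12, 1.0 - 1e-12)
    scores = logits - np.log(-np.log(u))  # add Gumbel(0, 1) noise
    khot = np.zeros_like(logits)
    onehot = np.zeros_like(logits)
    for _ in range(k):
        # Penalize genes in proportion to how much the last round chose them.
        scores = scores + np.log(np.maximum(1.0 - onehot, 1e-20))
        z = scores / temperature
        e = np.exp(z - z.max())
        onehot = e / e.sum()
        khot = khot + onehot
    return khot


def predict_with_subset(x, logits, k, weights):
    """Sparse inference: only the k selected genes contribute to the output."""
    return (x * hard_topk_mask(logits, k)) @ weights


rng = np.random.default_rng(0)
logits = np.array([0.1, 3.0, -1.0, 2.0, 0.5])  # hypothetical selection scores
soft = relaxed_topk_mask(logits, k=2, temperature=0.5, rng=rng)  # training-time mask
hard = hard_topk_mask(logits, k=2)  # inference-time mask: genes 1 and 3
```

Lower temperatures push the soft mask toward the hard one at the cost of higher-variance gradients; a common straight-through variant uses the hard mask in the forward pass and the soft mask's gradient in the backward pass.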
