2026-01-22 14:26:00

Collabora ports Debian to the OpenWrt One

The OpenWrt One, the OpenWrt project's first router of its own, now also runs Debian. That turns it into a general-purpose Linux system.

https://www.heise.de/news/Collab…

2025-11-23 19:19:33

"A heinous devil by the name

of RRRobin Hood!"

🏹 😎

#TV #RobinHood

2025-12-22 16:34:20

2025-12-22 10:33:50

Calibratable Disambiguation Loss for Multi-Instance Partial-Label Learning

Wei Tang, Yin-Fang Yang, Weijia Zhang, Min-Ling Zhang

https://arxiv.org/abs/2512.17788 https://arxiv.org/pdf/2512.17788 https://arxiv.org/html/2512.17788

arXiv:2512.17788v1 Announce Type: new

Abstract: Multi-instance partial-label learning (MIPL) is a weakly supervised framework that extends the principles of multi-instance learning (MIL) and partial-label learning (PLL) to address the challenges of inexact supervision in both instance and label spaces. However, existing MIPL approaches often suffer from poor calibration, undermining classifier reliability. In this work, we propose a plug-and-play calibratable disambiguation loss (CDL) that simultaneously improves classification accuracy and calibration performance. The loss has two instantiations: the first one calibrates predictions based on probabilities from the candidate label set, while the second one integrates probabilities from both candidate and non-candidate label sets. The proposed CDL can be seamlessly incorporated into existing MIPL and PLL frameworks. We provide a theoretical analysis that establishes the lower bound and regularization properties of CDL, demonstrating its superiority over conventional disambiguation losses. Experimental results on benchmark and real-world datasets confirm that our CDL significantly enhances both classification and calibration performance.

toXiv_bot_toot

2026-01-20 08:30:02

"'No Einstein', but not dumb: Clever cow grabs tools to scratch itself"

fascinating

https://www.n-tv.de/wissen/Clevere-Kuh-greift-sich-Werkzeuge-um-sich-zu-kratzen-id30260095.html

2026-01-19 15:39:04

Brainwaves meet spatial computing: Cognixion Vision Pro #noninvasive

2026-01-16 23:50:53

California's AG sends a cease-and-desist letter to xAI, demanding the company halt the generation and distribution of non-consensual intimate images and CSAM (Julianna Bragg/Axios)

https://www.axios.com/2026/01/16/xai-california-elon-musk-deepfakes-child…

2025-12-20 04:00:04

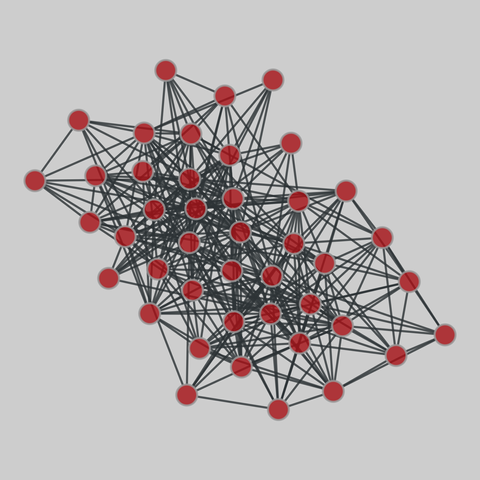

windsurfers: Windsurfers network (1986)

A network of interpersonal contacts among windsurfers in southern California during the fall of 1986. The edge weights indicate perceived social affiliation, measured by tasks in which each individual was asked to sort cards bearing the other surfers' names in order of closeness.

This network has 43 nodes and 336 edges.

Tags: Social, Offline, Weighted
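The node and edge counts above are enough for a quick sanity check of how dense this network is. The sketch below assumes the network is undirected and has no self-loops (the post does not say so explicitly), and the function names are illustrative only.

```python
def density_undirected(n_nodes: int, n_edges: int) -> float:
    """Fraction of possible node pairs that are connected (undirected, no self-loops)."""
    return 2 * n_edges / (n_nodes * (n_nodes - 1))


def mean_degree(n_nodes: int, n_edges: int) -> float:
    """Average number of neighbours per node; each edge contributes to two nodes."""
    return 2 * n_edges / n_nodes


# Counts taken from the post: 43 nodes, 336 edges.
n_nodes, n_edges = 43, 336
density = density_undirected(n_nodes, n_edges)
avg_deg = mean_degree(n_nodes, n_edges)
print(f"density = {density:.3f}, mean degree = {avg_deg:.2f}")
```

Under those assumptions, a bit more than a third of all possible pairs are linked, which is dense for a social network of this size.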

2026-01-19 14:29:06

Speaking as a retired chemist, this is absolutely essential to help maintain California's strength in science and engineering.

On the other hand, the most valuable parts of my education (as opposed to training) came from the humanities. Yet we don't seem to recognize how important and central that non-STEM segment is. Why not?

Wiener pitches $23B ‘science bond’ to backfill Trump research cuts

2025-12-22 10:32:50

Spatially-informed transformers: Injecting geostatistical covariance biases into self-attention for spatio-temporal forecasting

Yuri Calleo

https://arxiv.org/abs/2512.17696 https://arxiv.org/pdf/2512.17696 https://arxiv.org/html/2512.17696

arXiv:2512.17696v1 Announce Type: new

Abstract: The modeling of high-dimensional spatio-temporal processes presents a fundamental dichotomy between the probabilistic rigor of classical geostatistics and the flexible, high-capacity representations of deep learning. While Gaussian processes offer theoretical consistency and exact uncertainty quantification, their prohibitive computational scaling renders them impractical for massive sensor networks. Conversely, modern transformer architectures excel at sequence modeling but inherently lack a geometric inductive bias, treating spatial sensors as permutation-invariant tokens without a native understanding of distance. In this work, we propose a spatially-informed transformer, a hybrid architecture that injects a geostatistical inductive bias directly into the self-attention mechanism via a learnable covariance kernel. By formally decomposing the attention structure into a stationary physical prior and a non-stationary data-driven residual, we impose a soft topological constraint that favors spatially proximal interactions while retaining the capacity to model complex dynamics. We demonstrate the phenomenon of "Deep Variography", where the network successfully recovers the true spatial decay parameters of the underlying process end-to-end via backpropagation. Extensive experiments on synthetic Gaussian random fields and real-world traffic benchmarks confirm that our method outperforms state-of-the-art graph neural networks. Furthermore, rigorous statistical validation confirms that the proposed method delivers not only superior predictive accuracy but also well-calibrated probabilistic forecasts, effectively bridging the gap between physics-aware modeling and data-driven learning.

toXiv_bot_toot
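To make the idea in the spatially-informed transformer abstract concrete, here is a minimal sketch of attention logits split into a data-driven term and a distance-based prior term. It is not the paper's implementation: the exponential covariance kernel, the name `range_param`, and adding the log-kernel to the logits are all assumptions chosen for illustration, and `range_param` is a fixed number here rather than a learned parameter.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def spatial_attention(queries, keys, coords, range_param):
    """Attention weights with an additive spatial bias (illustrative only).

    logits[i][j] = q_i . k_j / sqrt(d)   (data-driven term)
                 + (-dist_ij / range)    (log of an assumed exponential covariance)
    """
    d = len(queries[0])
    weights = []
    for i, q in enumerate(queries):
        logits = []
        for j, k in enumerate(keys):
            data_term = sum(a * b for a, b in zip(q, k)) / math.sqrt(d)
            dist = math.dist(coords[i], coords[j])
            prior_term = -dist / range_param
            logits.append(data_term + prior_term)
        weights.append(softmax(logits))
    return weights


# Three hypothetical sensors on a line; zero queries/keys isolate the prior term.
coords = [(0.0, 0.0), (1.0, 0.0), (5.0, 0.0)]
zeros = [[0.0, 0.0] for _ in coords]
W = spatial_attention(zeros, zeros, coords, range_param=2.0)
for row in W:
    print([round(w, 3) for w in row])
```

With the data term zeroed out, each sensor attends most strongly to itself and then to its nearest neighbour, which is the kind of soft preference for spatially proximal interactions that the abstract describes.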