2026-02-25 10:45:31

Learning from Trials and Errors: Reflective Test-Time Planning for Embodied LLMs

Yining Hong, Huang Huang, Manling Li, Li Fei-Fei, Jiajun Wu, Yejin Choi

https://arxiv.org/abs/2602.21198 https://arxiv.org/pdf/2602.21198 https://arxiv.org/html/2602.21198

arXiv:2602.21198v1 Announce Type: new

Abstract: Embodied LLMs endow robots with high-level task reasoning, but they cannot reflect on what went wrong or why, turning deployment into a sequence of independent trials in which mistakes repeat rather than accumulate into experience. Drawing on the model of the human reflective practitioner, we introduce Reflective Test-Time Planning, which integrates two modes of reflection: reflection-in-action, in which the agent uses test-time scaling to generate multiple candidate actions and score them with internal reflections before execution; and reflection-on-action, in which the agent uses test-time training to update both its internal reflection model and its action policy based on external reflections after execution. We also include retrospective reflection, which lets the agent re-evaluate earlier decisions and update its models with hindsight for proper long-horizon credit assignment. Experiments on our newly designed Long-Horizon Household benchmark and MuJoCo Cupboard Fitting benchmark show significant gains over baseline models, and ablation studies validate the complementary roles of reflection-in-action and reflection-on-action. Qualitative analyses, including real-robot trials, highlight behavioral correction through reflection.
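A rough illustration of how the three reflection modes named in the abstract could fit together, as a minimal Python sketch under loudly stated assumptions. Every name here (reflect_in_action, reflect_on_action, hindsight_credit, the toy proposer and scorer) is hypothetical and not from the paper. Reflection-in-action is modeled as plain best-of-n selection. Reflection-on-action is modeled as a tabular score update standing in for test-time training. Retrospective reflection is modeled as a discounted return-to-go. These are generic stand-ins chosen for clarity, not the authors' method.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence


@dataclass
class Candidate:
    action: str
    score: float


def reflect_in_action(
    propose: Callable[[str, int], Sequence[str]],
    score: Callable[[str, str], float],
    state: str,
    n: int = 4,
) -> Candidate:
    """Best-of-n stand-in for reflection-in-action: sample n candidate actions,
    score each with an internal reflection model, and pick the best before executing."""
    candidates = [Candidate(a, score(state, a)) for a in propose(state, n)]
    if not candidates:
        raise ValueError("proposer returned no candidate actions")
    # max() returns the first candidate among ties
    return max(candidates, key=lambda c: c.score)


def reflect_on_action(
    prefs: Dict[str, float], action: str, feedback: float, lr: float = 0.5
) -> None:
    """Hypothetical stand-in for reflection-on-action: after execution, move the stored
    score for `action` toward the external feedback (a tabular proxy for test-time training)."""
    old = prefs.get(action, 0.0)
    prefs[action] = old + lr * (feedback - old)


def hindsight_credit(step_rewards: List[float], gamma: float = 0.9) -> List[float]:
    """Hypothetical stand-in for retrospective reflection: discounted return-to-go, so an
    outcome observed late in an episode is credited back to the earlier decisions."""
    credits = [0.0] * len(step_rewards)
    running = 0.0
    for t in range(len(step_rewards) - 1, -1, -1):
        running = step_rewards[t] + gamma * running
        credits[t] = running
    return credits


if __name__ == "__main__":
    prefs: Dict[str, float] = {}

    def toy_propose(state: str, n: int) -> List[str]:
        # Toy proposer: n numbered grasp actions for the given object
        return [f"{state}:grasp_{i}" for i in range(n)]

    def toy_score(state: str, action: str) -> float:
        # Toy reflection model: read the learned score, default 0.0
        return prefs.get(action, 0.0)

    # External feedback says grasp_2 worked; the updated score steers the next choice.
    reflect_on_action(prefs, "cup:grasp_2", feedback=1.0)
    best = reflect_in_action(toy_propose, toy_score, "cup", n=4)
    print(best.action, best.score)  # cup:grasp_2 0.5
    print(hindsight_credit([0.0, 0.0, 1.0], gamma=0.5))  # [0.25, 0.5, 1.0]
```

The split into a proposer and a separate scorer mirrors the abstract's distinction between generating candidate actions and judging them with internal reflections. In a real system both would presumably be learned models rather than lookup tables.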


2025-12-09 14:11:46

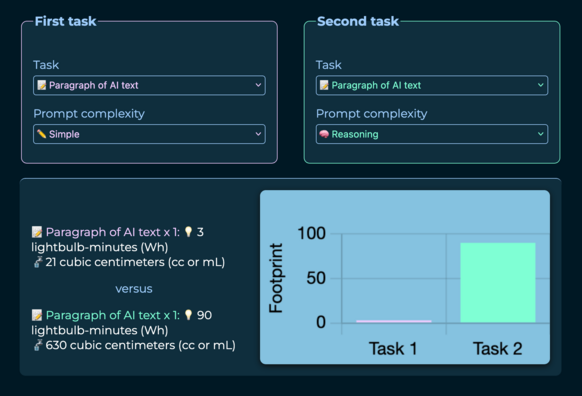

We've updated the What Uses More app to reflect last week's finding by Luccioni and Gamazaychikov that "reasoning" mode increases energy and water usage by 30x. The study casts doubt on the improved efficiency that AI companies are claiming for newer models.

https://www.