2025-12-27 18:59:15

Underlings are so annoying!

Sometimes you can make this problem go away by exploiting weak labor power (see: factories, agriculture, sanitation).

Sometimes you can do it by creating a toxic org culture where information •only• flows down the hierarchy, so no pushback can ever reach your sensitive ears. (Public school administrations are rife with this.)

Sometimes you can do it by making your catastrophic failures look like a string of successes to the people up the chain. (Large corporations are swimming in this.)

5/

2025-09-29 11:16:47

What Is The Political Content in LLMs' Pre- and Post-Training Data?

Tanise Ceron, Dmitry Nikolaev, Dominik Stammbach, Debora Nozza

https://arxiv.org/abs/2509.22367

2025-11-29 02:57:13

Under the Trump regime, if anything can go wrong in this country, it will. When Democrats regain power, they must immediately repeal Murphy's law.

2025-11-26 05:36:56

2025-10-24 09:13:11

This. 100% this: «…the economy itself is driving us into the ditch. It’s based on the creed of cancer — steady growth — and you can’t have endless growth in a finite world. The global economy is far too big, it’s got to shrink, and it’s got to be distributed more equitably around the world.»

The problem is that even the politicians who understand this are helpless to do anything about it. We have surrendered our future to global corporations and the mega-rich and their hand-picked poli…

2025-12-23 14:48:43

Making this a subtoot so I don't come across as smug or condescending...

My decision to stop using GitHub when they started providing services to ICE back in ~2016 felt awkward at times, but in hindsight it feels really good right now.

I see a bunch of people now asking, "Why boycott X company over some 'minor' transgression or political capitulation (or over a 'neutral' stance on LLM code)?" The answer is: it shows what their values are, which predicts their future behavior, especially under the tilted playing field of capitalism. I'm by no means perfect at this, and I don't think shouting at people to boycott is a good idea, for several reasons. People should boycott what they want to, for their own reasons. But I am posting this to try to help others be aware of the upsides of taking action when confronted with "subtle" evidence of corporate unvalues.

2025-11-19 20:31:19

"As political scientist Virginia Eubanks shows in her book 'Automating Inequality', AI systems deployed within the welfare state are used primarily to monitor, assess, and regulate people's access to public resources, rather than to give them better support."

From: Kate Crawford, Atlas der KI (2021/2025)

2025-12-26 06:25:37

“The case for upzoning is relatively solid but deeply underwhelming as a standalone position. The upshot is that everyone is at least partly right: Upzoning can address the shortfall in supply. But it won’t come close to solving the housing crisis alone. Re-enter: public housing.”


2025-11-22 05:31:43

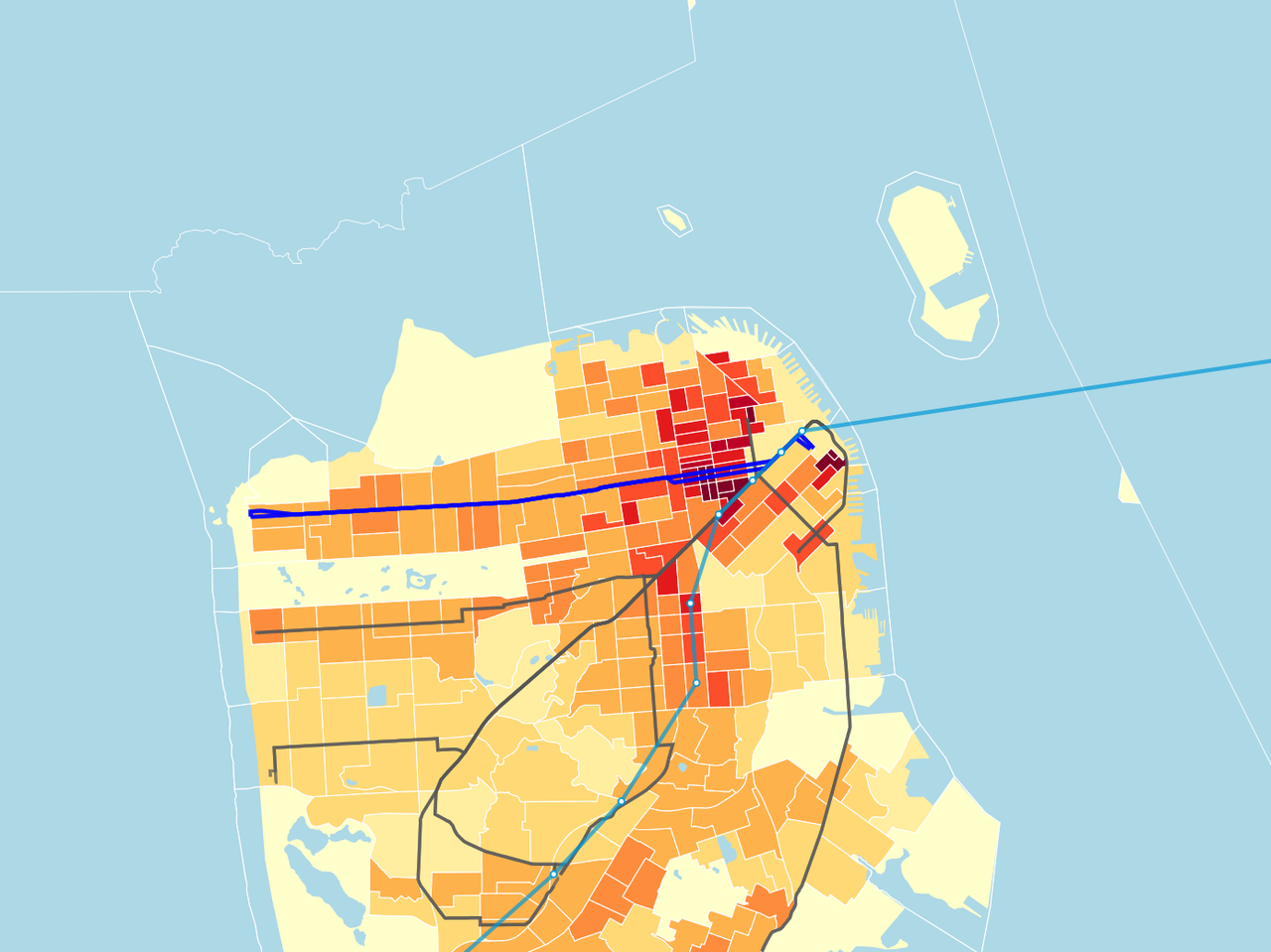

This is a good start but the subway should curve south down 19th Ave, meet up with Daly City BART and continue on the BART tracks down to Millbrae. That part is essential; a branch to Outer Richmond could be added later as a nice-to-have.

https://musubi3.github.io/sfmta-geary-subway…

2025-12-14 23:34:32

"Permitting Parking in Driveways" is at Monday's SFBOS land use committee.

I plan to comment against this. It seems to allow driveways of unlimited width, and to let them remain even after garages are converted to ADUs. This is nakedly an inducement to more driving at the expense of green space, stormwater drainage, active transportation, and public transit.

![[Planning Code - Permitting Parking in Driveways] Sponsors: Mayor; Chen and Melgar. Ordinance amending the Planning Code to permit parking of up to two operable vehicles, not including boats, trailers, recreational vehicles, mobile homes, or buses, in driveways located in required front setbacks, side yards, or rear yards; affirming the Planning Department’s determination under the California Environmental Quality Act; making findings of consistency with the General Plan, and the eight…](https://s3-us-west-1.amazonaws.com/carfreecitymain/media_attachments/files/115/720/537/456/713/276/small/3587a1e38eb0a821.png)