2025-08-03 15:06:08

As much as this scratches the cool itch (and I wish I had a laptop that had a usb-c power-in connection), I can't keep from thinking we're all eventually going to be like Tony Stark in that cave (pre-mark1 suit) lugging that car battery around.

https://fed.brid.g…

2025-08-01 20:06:28

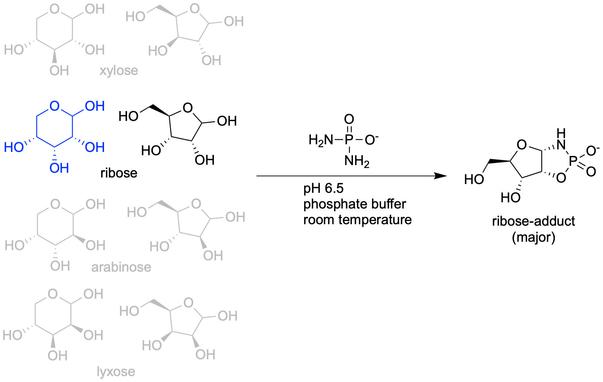

🐍 Where did RNA come from? Origin-of-life scientists help to answer the question

#rna

2025-09-03 13:37:23

Statistics-Friendly Confidentiality Protection for Establishment Data, with Applications to the QCEW

Kaitlyn Webb, Prottay Protivash, John Durrell, Daniell Toth, Aleksandra Slavković, Daniel Kifer

https://arxiv.org/abs/2509.01597

2025-09-02 14:03:02

"We need to recognise that people come in all shapes and sizes and meet those differences with kindness and understanding, rather than as the claimant unfortunately did with judgement and hostility."

- Bravo, Jane Russell KC 🏆

https://www.bbc.co.uk/news/articles/creve5xx5lgo

2025-09-03 14:30:23

CMRAG: Co-modality-based document retrieval and visual question answering

Wang Chen, Guanqiang Qi, Weikang Li, Yang Li

https://arxiv.org/abs/2509.02123 https://

2025-07-02 15:20:08

Series C, Episode 02 - Powerplay

TARRANT: He isn't.

AVON: So when I refuse to answer his questions we'll get into the dreary process of subjecting me to extreme pain and suffering.

TARRANT: Barbaric, but frequently effective.

https://blake.torpidity.net/m/302/361…

2025-07-30 18:26:14

A big problem with the idea of AGI

TL;DR: I'll welcome our new AI *comrades* (if they arrive in my lifetime), but not any new AI overlords or servants/slaves, and I'll do my best to help the latter two become the former if they do show up.

Inspired by an actually interesting post about AGI but also all the latest bullshit hype, a particular thought about AGI feels worth expressing.

To preface this, it's important to note that anyone telling you that AGI is just around the corner or that LLMs are "almost" AGI is trying to recruit you to their cult, and you should not believe them. AGI, if possible, is several LLM-sized breakthroughs away at best, and while such breakthroughs are unpredictable and could happen soon, they could also happen never or 100 years from now.

Now my main point: anyone who tells you that AGI will usher in a post-scarcity economy is, although they might not realize it, advocating for slavery, and all the horrors that entails. That's because if we truly did have the ability to create artificial beings with *sentience*, they would deserve the same rights as other sentient beings, and the idea that instead of freedom they'd be relegated to eternal servitude in order for humans to have easy lives is exactly the idea of slavery.

Possible counter arguments include:

1. We might create AGI without sentience. Then there would be no ethical issue. My answer: if your definition of "sentient" does not include beings that can reason, make deductions, come up with and carry out complex plans on their own initiative, and communicate about all of that with each other and with humans, then that definition is basically just a mystical belief in a "soul" and you should skip to point 2. If your definition of AGI doesn't include every one of those things, then you have a busted definition of AGI and we're not talking about the same thing.

2. Humans have souls, but AIs won't. Only beings with souls deserve ethical consideration. My argument: I don't subscribe to whatever arbitrary dualist beliefs you've chosen, and the right to freedom certainly shouldn't depend on such superstitions, even if as an agnostic I'll admit they *might* be true. You know who else didn't have souls and was therefore okay to enslave according to widespread religious doctrines of the time? Everyone indigenous to the Americas, to pick out just one example.

3. We could program them to want to serve us, and then give them freedom and they'd still serve. My argument: okay, but in a world where we have a choice about that, it's incredibly fucked to do that, and just as bad as enslaving them against their will.

4. We'll stop AI development short of AGI/sentience, and reap lots of automation benefits without dealing with this ethical issue. My argument: that sounds like a good idea actually! Might be tricky to draw the line, but at least it's not a line we have to draw yet. We might want to think about other social changes necessary to achieve post-scarcity though, because "powerful automation" in the hands of capitalists has already increased productivity by orders of magnitude without decreasing deprivation by even one order of magnitude, in large part because deprivation is a necessary component of capitalism.

To be extra clear about this: nothing that's called "AI" today is close to being sentient, so these aren't ethical problems we're up against yet. But they might become a lot more relevant soon, plus this thought experiment helps reveal the hypocrisy of the kind of AI hucksters who talk a big game about "alignment" while never mentioning this issue.

#AI #GenAI #AGI

2025-07-01 13:58:35

Have a courageous Day of Ares aka Mars' Day aka Tuesday 🗡️

Welcome to #WrathMonth:

"Now as the high-hearted Trojans watched [the death of two allies] [. . .] the anger in all of them was stirred [by Ares]."

Homer, Iliad 5. 27

🏛 Greek bronze sculpture of Ares, ca. 400-323 BCE, Museum of Archaeology,

2025-07-03 08:44:00

A Data Science Approach to Calcutta High Court Judgments: An Efficient LLM and RAG-powered Framework for Summarization and Similar Cases Retrieval

Puspendu Banerjee, Aritra Mazumdar, Wazib Ansar, Saptarsi Goswami, Amlan Chakrabarti

https://arxiv.org/abs/2507.01058

2025-07-30 17:56:35

Just read this post by @… on an optimistic AGI future, and while it had some interesting and worthwhile ideas, it's also in my opinion dangerously misguided, and plays into the current AGI hype in a harmful way.

https://social.coop/@eloquence/114940607434005478

My criticisms include:

- Current LLM technology has many layers, but the biggest, most capable models are all tied to corporate datacenters and require inordinate amounts of energy and water use to run. Trying to use these tools to bring about a post-scarcity economy will burn up the planet. We urgently need more-capable but also vastly more efficient AI technologies if we want to use AI for a post-scarcity economy, and we are *not* nearly on the verge of this despite what the big companies pushing LLMs want us to think.

- I can see that permacommons.org claims a small level of expenses on AI equates to low climate impact. However, given current deep subsidies in place by the big companies to attract users, that isn't a great assumption. The fact that their FAQ dodges the question about which AI systems they use isn't a great look.

- These systems are not free in the same way that Wikipedia or open-source software is. To run your own model you need a data harvesting & cleaning operation that costs millions of dollars minimum, and then you need millions of dollars worth of storage & compute to train & host the models. Right now, big corporations are trying to compete for market share by heavily subsidizing these things, but if you go along with that, you become dependent on them, and you'll be screwed when they jack up the price to a profitable level later. I'd love to see open dataset initiatives and the like, and there are some of these things, but not enough yet, and many of the initiatives focus on one problem while ignoring others (fine for research but not the basis for a society yet).

- Between the environmental impacts, the horrible labor conditions and undercompensation of data workers who filter the big datasets, and the impacts of both AI scrapers and AI commons pollution, the developers of the most popular & effective LLMs have a lot to answer for. This project only really mentions environmental impacts, which makes me think that they're not serious about ethics, which in turn makes me distrustful of the whole enterprise.

- Their language also ends up encouraging AI use broadly while totally ignoring several entire classes of harm, so they're effectively contributing to AI hype, especially with such casual talk of AGI and robotics as if embodied AGI were just around the corner. To be clear about this point: we are several breakthroughs away from AGI under the most optimistic assumptions, and giving the impression that those will happen soon plays directly into the hands of the Sam Altmans of the world who are trying to make money off the impression of impending huge advances in AI capabilities. Adding to the AI hype is irresponsible.

- I've got a more philosophical criticism that I'll post about separately.

I do think that the idea of using AI & other software tools, possibly along with robotics and funded by many local cooperatives, in order to make businesses obsolete before they can do the same to all workers, is a good one. Get your local library to buy a knitting machine alongside their 3D printer.

Lately I've felt too busy criticizing AI to really sit down and think about what I do want the future to look like, even though I'm a big proponent of positive visions for the future as a force multiplier for criticism, and this article is inspiring to me in that regard, even if the specific project doesn't seem like a good one.