2025-09-19 00:37:53

I feel like this Masto post will be evidence in yet another claim against Air Canada for wrecking someone’s mobility aid.

https://mastodon.social/@mollyanglin/115228115467133057

2025-07-20 02:00:09

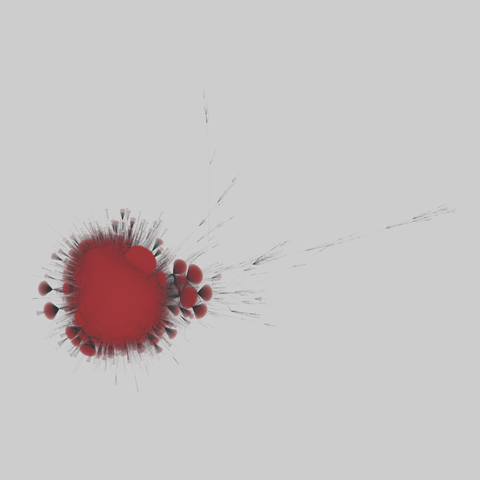

lkml_thread: Linux kernel mailing list

A bipartite network of contributions by users to threads on the Linux kernel mailing list. A left node is a person, and a right node is a thread, and each timestamped edge (i,j,t) denotes that user i contributed to thread j at time t. The date of the snapshot is not given.

This network has 379554 nodes and 1565683 edges.

Tags: Social, Communication, Unweighted, Timestamps
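As a rough illustration of the structure described above, here is a minimal sketch of how timestamped bipartite edges (i,j,t) could be held in memory. The toy edges, the names `build_bipartite`, `threads_by_user`, and `users_by_thread`, and the use of integer timestamps are all assumptions for illustration; none of them come from the dataset or its distribution format.

```python
from collections import defaultdict

# Hypothetical toy edges (user i, thread j, time t); NOT taken from the real dataset.
edges = [
    ("u1", "t1", 1000),
    ("u2", "t1", 1010),
    ("u1", "t2", 1020),
    ("u1", "t1", 1030),  # the same user can contribute to a thread more than once
]

def build_bipartite(edges):
    """Index timestamped edges from both sides of the bipartite graph."""
    threads_by_user = defaultdict(list)  # left node (person) -> [(thread, t), ...]
    users_by_thread = defaultdict(list)  # right node (thread) -> [(user, t), ...]
    # Process edges in time order so each adjacency list is chronological.
    for user, thread, t in sorted(edges, key=lambda e: e[2]):
        threads_by_user[user].append((thread, t))
        users_by_thread[thread].append((user, t))
    return threads_by_user, users_by_thread

threads_by_user, users_by_thread = build_bipartite(edges)

# Distinct contributors to thread "t1", ignoring repeat contributions.
distinct_users_t1 = {user for user, _ in users_by_thread["t1"]}
print(sorted(distinct_users_t1))
```

Because the network is unweighted but timestamped, repeat contributions appear as separate edges here instead of being collapsed into an edge weight.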

2025-09-19 17:46:28

A U.S. judge has temporarily blocked President Donald Trump's administration from stripping 19 mostly Democratic-led states and Washington, D.C., of food stamp benefits funding unless the states hand over data on millions of people who receive them.

U.S. District Judge Maxine Chesney in San Francisco said in a ruling late Thursday

that the states were likely correct that a federal law requiring them to safeguard information obtained from food stamp recipients bars them from d…

2025-07-18 16:47:03

it annoys me when news headlines refer to a government agency as taking a horrific action when it is clearly the work of the unconstitutional attackers of that agency, like saying the "EPA" is shredding environmental protections, but without an institutional opposition that is willing to defend these agencies and hire the ousted administrators to speak on behalf of the "real" agency, i don't see why i should care anymore.

2025-07-19 07:51:05

AI, AGI, and learning efficiency

My 4-month-old kid is not DDoSing Wikipedia right now, nor will they ever do so before learning to speak, read, or write. Their entire "training corpus" will not top even 100 million "tokens" before they can speak & understand language, and do so with real intentionality.

Just to emphasize that point: 100 words-per-minute times 60 minutes-per-hour times 12 hours-per-day times 365 days-per-year times 4 years is a mere 105,120,000 words. That's a ludicrously *high* estimate of words-per-minute and hours-per-day, and 4 years old (the age of my other kid) is well after basic speech capabilities are developed in many children, etc. More likely the available "training data" is at least 1 or 2 orders of magnitude less than this.
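The arithmetic above can be checked with a few lines of Python. This is only a sketch of the same back-of-the-envelope estimate; the function name `words_heard` and the constant names are made up for illustration, and the inputs are the deliberately generous figures quoted in the post.

```python
MINUTES_PER_HOUR = 60
DAYS_PER_YEAR = 365

def words_heard(words_per_minute, hours_per_day, years):
    """Upper-bound estimate of words a child hears, per the post's figures."""
    return words_per_minute * MINUTES_PER_HOUR * hours_per_day * DAYS_PER_YEAR * years

# The post's (intentionally too high) inputs: 100 wpm, 12 hours a day, 4 years.
upper_bound = words_heard(100, 12, 4)
print(f"{upper_bound:,}")  # 105,120,000

# One and two orders of magnitude below the upper bound.
one_order_less = upper_bound // 10
two_orders_less = upper_bound // 100
print(f"{one_order_less:,} to {two_orders_less:,}")
```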

The point here is that large language models, trained as they are on multiple *billions* of tokens, are not developing their behavioral capabilities in a way that's remotely similar to humans, even if you believe those capabilities are similar (they are by certain very biased ways of measurement; they very much aren't by others). This idea that humans must be naturally good at acquiring language is an old one (see e.g. #AI #LLM #AGI

2025-08-18 09:52:20

MM-R1: Unleashing the Power of Unified Multimodal Large Language Models for Personalized Image Generation

Qian Liang, Yujia Wu, Kuncheng Li, Jiwei Wei, Shiyuan He, Jinyu Guo, Ning Xie

https://arxiv.org/abs/2508.11433

2025-07-18 09:40:12

Automating Steering for Safe Multimodal Large Language Models

Lyucheng Wu, Mengru Wang, Ziwen Xu, Tri Cao, Nay Oo, Bryan Hooi, Shumin Deng

https://arxiv.org/abs/2507.13255

2025-08-18 07:32:20

Covering the Euclidean Plane by a Pair of Trees

Hung Le, Lazar Milenković, Shay Solomon, Tianyi Zhang

https://arxiv.org/abs/2508.11507 https://arxiv.…

2025-07-19 18:02:19

Sarah Josepha Hale is called the godmother of Thanksgiving

because for 40 years she wrote and lobbied five presidents and congressmen to establish it as a national holiday.

Hale, who is probably best remembered as the author of “Mary Had a Little Lamb,”

also wrote the novel “Northwood; or Life North and South,”

which argued for the virtue of the North against the evil slave owners of the South.

One of the chapters in her book discussed the importance of Thanks…

2025-09-19 00:40:18

"Our imposed starvation has killed what was left of this world’s humanity."

"The song that is sung cannot be killed."

https://electronicintifada.net/content/what-sung-cannot-be-killed/50942