2025-08-28 04:52:36

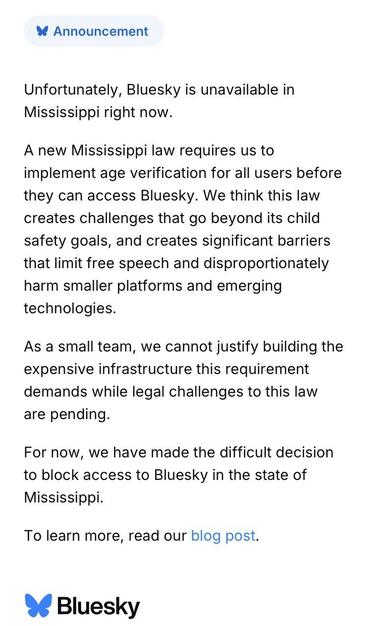

I’m not _in_ Mississippi, goddammit, and thank god. I have an IP with a tn.comcast.net PTR, which comes from a /17 with an ARIN netname of “MEMPHIS9.” Your geolocation provider sucks ass, because _all_ geolocation providers suck ass, which is ultimately your problem and not mine, because now I’m posting somewhere else. And fuck red states for getting us all into this censorious shitshow.

#Bluesky