2025-08-29 19:52:49

Lonely Little Red Dots - Challenges to the Active Galactic Nucleus Nature of #LittleRedDots through Their Clustering and Spectral Energy Distributions: https://iopscience.iop.org/article/10.3847/2041-8213/adf73d -> All Alone With No AGN to Call Home? New Results for Little Red Dots: https://aasnova.org/2025/08/29/all-alone-with-no-agn-to-call-home-new-results-for-little-red-dots/

2025-11-03 03:18:32

Jaguars Players Discuss Win Over Raiders https://www.jaguars.com/video/k000498-jaguars-players-discuss-win-over-raiders-press-conference

2025-10-25 01:37:38

‘94 @ Sue’s in Limehouse w/ Annie. She’s gone now, but she was a true Eastender, born & died. As a little girl during the Blitz, she (along w/ loads of other kids) had been put on a train out of London. The train would make rural stops & local folks could come take a child or 2 home to work on their farms. From her train, she was the last child chosen. She didn’t share that freely or easily, but she told me. I think about that little girl alone on that platform more often than you mi…

2025-09-26 06:43:23

Only Donald Trump would try to prove he wasn’t threatening ABC by threatening ABC.

“You almost have to feel sorry for the people who work for him,

who try to clean up the messes,”

he added. “They go to all these lengths to say,

‘Oh, it wasn’t coercion!

The president was just musing!’

And then the second Trump is alone, he sits on the toilet,

he gets his grubby little thumbs on his phone,

and he immediately blows their excuses to smithereens"…

2025-08-23 23:23:47

Oleander aphids are neat; their bright colors make them look like little alien monsters #bugstodon #bugs

2025-08-22 13:40:35

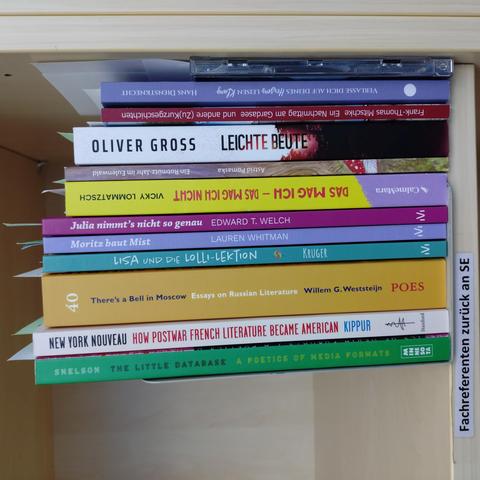

In this week's #verschlagwortung (subject indexing): experimental literary forms ("the little database", https://hbz-ulbms.primo.exlibrisgroup.com…

2025-10-04 13:51:37

🇺🇦 #NowPlaying on BBCRadio3's #RecordReview

Frederick Loewe, Robert Russell Bennett, Alun Armstrong, Sinfonia of London & John Wilson:

🎵 With a little bit of luck (My Fair Lady)

#FrederickLoewe #RobertRussellBennett #AlunArmstrong #SinfoniaofLondon #JohnWilson