2025-09-10 10:20:52

Sources: Rupert Murdoch forced the $3.3B succession deal through by sheer will, placing enormous pressure on his adult children over months of negotiations (Financial Times)

https://www.ft.com/content/cca18dc3-92a6-420e-a5ce-534c0b6a48fa

2025-07-10 09:23:01

Girlhood Feminism as Soft Resistance: Affective Counterpublics and Algorithmic Negotiation on RedNote

Meng Liang, Xiaoyue Zhang, Linqi Ye

https://arxiv.org/abs/2507.07059

2025-07-31 09:11:41

A Node on the Constellation: The Role of Feminist Makerspaces in Building and Sustaining Alternative Cultures of Technology Production

Erin Gatz, Yasmine Kotturi, Andrea Afua Kwamya, Sarah Fox

https://arxiv.org/abs/2507.22329

2025-09-09 10:45:52

Social Dynamics of DAOs: Power, Onboarding, and Inclusivity

Victoria Kozlova, Ben Biedermann

https://arxiv.org/abs/2509.06163 https://arxiv.org/pdf/2509.06…

2025-07-30 19:26:02

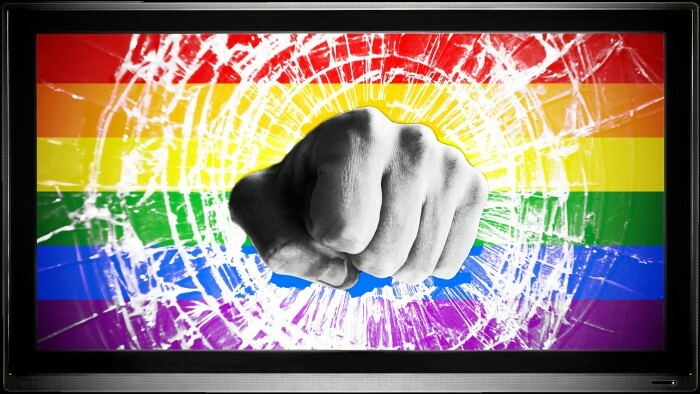

Source: David Ellison plans to "bring back a performance-based culture" to CBS, "Not quotas. Not ideology. Just objective journalism" (Financial Times)

https://www.ft.com/content/b0771100-9180-4a73-adb4-38595aa8c15c

2025-07-19 07:51:05

AI, AGI, and learning efficiency

My 4-month-old kid is not DDoSing Wikipedia right now, nor will they ever do so before learning to speak, read, or write. Their entire "training corpus" will not top even 100 million "tokens" before they can speak & understand language, and do so with real intentionality.

Just to emphasize that point: 100 words-per-minute times 60 minutes-per-hour times 12 hours-per-day times 365 days-per-year times 4 years is a mere 105,120,000 words. That's a ludicrously *high* estimate of words-per-minute and hours-per-day, and 4 years old (the age of my other kid) is well after basic speech capabilities are developed in many children, etc. More likely the available "training data" is at least 1 or 2 orders of magnitude less than this.
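To make that arithmetic easy to check, here's a minimal Python sketch using the same figures as above. The function and parameter names are just illustrative, and the "1 or 2 orders of magnitude less" lines are a plain division of the upper bound, not a measured estimate.

```python
# Rough upper bound on words heard by a given age, using the post's figures.
# All names here are illustrative assumptions, not from any library.

def words_heard(words_per_minute=100, minutes_per_hour=60,
                hours_per_day=12, days_per_year=365, years=4):
    """Multiply the rates together to get a total word count."""
    return (words_per_minute * minutes_per_hour
            * hours_per_day * days_per_year * years)

upper_bound = words_heard()
print(f"Upper bound: {upper_bound:,} words")

# "1 or 2 orders of magnitude less" than the upper bound.
for k in (1, 2):
    print(f"{k} order(s) of magnitude less: {upper_bound // 10**k:,} words")
```

Even the deliberately inflated upper bound sits only just above 100 million words.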

The point here is that large language models, trained as they are on multiple *billions* of tokens, are not developing their behavioral capabilities in a way that's remotely similar to humans, even if you believe those capabilities are similar (they are by certain very biased ways of measurement; they very much aren't by others). This idea that humans must be naturally good at acquiring language is an old one (see e.g. #AI #LLM #AGI

2025-07-21 08:10:36

A profile of Brett Cooper, a conservative YouTuber who uses celebrity news to argue against feminism and abortion rights, as she becomes a Fox News contributor (Jessica Testa/New York Times)

https://www.nytimes.c…

2025-06-18 09:01:28

Major US brands like Bud Light have shifted advertising strategies away from DEI themes, a move sources attribute to fears of a Trump administration backlash (Financial Times)

https://www.ft.com/content/88949dbf-7be3-47ed-a6e2-48064bd3fa26

2025-08-14 10:00:15

"England’s rewilding movement is gaining steam, Ben Goldsmith says"

#UK #UnitedKingdom #Rewilding #Nature