OpenAI launches GPT-5.3-Codex, which it says runs 25% faster, enabling longer-running tasks, and "is our first model that was instrumental in creating itself" (David Gewirtz/ZDNET)

https://www.zdnet.com/article/openai-gpt-5-3-codex-faster-goes-beyond-c…

OpenAI says GPT-5.3-Codex goes beyond an agent that can code "to an agent that can do nearly anything developers and professionals can do on a computer" (OpenAI)

https://openai.com/index/introducing-gpt-5-3-codex/

"To be very clear, Gemini 3 isn’t perfect, and it still needs a manager who can guide and check it. But it suggests that “human in the loop” is evolving from “human who fixes AI mistakes” to “human who directs AI work.” And that may be the biggest change since the release of ChatGPT."

Gemini 3 demonstrates strong planning, coding, and judgment skills, and shows how AI models moved past hallucinations to subtle, and often human-like, errors (Ethan Mollick/One Useful Thing)

https://www.oneusefulthing.org/p/three-years-from-gpt-3-to-gemini

<…

A damning new study could put AI companies on the defensive.

In it, Stanford and Yale researchers found compelling evidence that AI models are actually copying all that data, not “learning” from it. Specifically, four prominent LLMs (OpenAI’s GPT-4.1, Google’s Gemini 2.5 Pro, xAI’s Grok 3, and Anthropic’s Claude 3.7 Sonnet) happily reproduced lengthy excerpts from popular, and protected, works with a stunning degree of accuracy.

They fou…

Google makes Gemini 3 Flash the default model in Gemini app and Search's AI mode; it scored 33.7% without tool use on Humanity's Last Exam vs. GPT-5.2's 34.5% (Ivan Mehta/TechCrunch)

https://techcrunch.com/2025/12/17/goog

Gemini 3 hands-on: a fundamental improvement on daily use, extremely fast, Antigravity IDE is a powerful launch product, and its personality is terse and direct (Matt Shumer)

https://shumer.dev/gemini3review

Google says Gemini 3 Pro sets new vision AI benchmark records, including in complex visual reasoning, beating Claude Opus 4.5 and GPT-5.1 in some categories (Rohan Doshi/The Keyword)

https://blog.google/technology/developers/gemini-3-pro-vision/

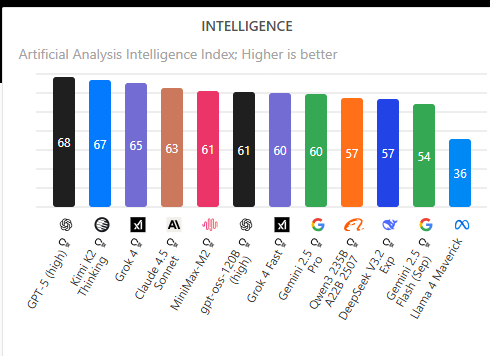

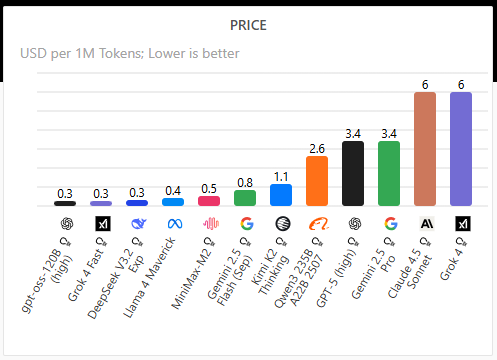

Look at the capabilities versus costs of Kimi K2 and GPT-5. Kimi K2 costs about a third as much with similar performance.

#AI

Proc3D: Procedural 3D Generation and Parametric Editing of 3D Shapes with Large Language Models

Fadlullah Raji, Stefano Petrangeli, Matheus Gadelha, Yu Shen, Uttaran Bhattacharya, Gang Wu

https://arxiv.org/abs/2601.12234 https://arxiv.org/pdf/2601.12234 https://arxiv.org/html/2601.12234

arXiv:2601.12234v1 Announce Type: new

Abstract: Generating 3D models has traditionally been a complex task requiring specialized expertise. While recent advances in generative AI have sought to automate this process, existing methods produce non-editable representations, such as meshes or point clouds, limiting their adaptability for iterative design. In this paper, we introduce Proc3D, a system designed to generate editable 3D models while enabling real-time modifications. At its core, Proc3D introduces the procedural compact graph (PCG), a graph representation of 3D models that encodes the algorithmic rules and structures necessary for generating the model. This representation exposes key parameters, allowing intuitive manual adjustments via sliders and checkboxes, as well as real-time, automated modifications through natural language prompts using Large Language Models (LLMs). We demonstrate Proc3D's capabilities using two generative approaches: GPT-4o with in-context learning (ICL) and a fine-tuned LLAMA-3 model. Experimental results show that Proc3D outperforms existing methods in editing efficiency, achieving more than 400x speedup over conventional approaches that require full regeneration for each modification. Additionally, Proc3D improves ULIP scores by 28%, a metric that evaluates the alignment between generated 3D models and text prompts. By enabling text-aligned 3D model generation along with precise, real-time parametric edits, Proc3D facilitates highly accurate text-based image editing applications.
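
The abstract does not spell out the PCG format, so the following is only a toy illustration of the general idea of a parametric graph: each node exposes named parameters, and an edit changes one value in place instead of regenerating the whole model. The `Node` class, the `edit` and `describe` helpers, and the table example are all hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One procedural step: an operation plus the parameters it exposes."""
    op: str                      # illustrative operation name, e.g. "box"
    params: dict                 # exposed, editable parameters
    children: list = field(default_factory=list)


def edit(node, path, name, value):
    """Change a single parameter in place; path is a list of child indices."""
    for index in path:
        node = node.children[index]
    node.params[name] = value


def describe(node, depth=0):
    """Walk the graph and return one indented text line per node."""
    lines = ["  " * depth + f"{node.op} {node.params}"]
    for child in node.children:
        lines.extend(describe(child, depth + 1))
    return lines


# Hypothetical model: a table made of a top and four legs.
table = Node("group", {"name": "table"}, [
    Node("box", {"width": 1.2, "depth": 0.6, "height": 0.05}),
    Node("cylinder", {"radius": 0.03, "height": 0.7, "count": 4}),
])

# An edit such as "make the legs taller" touches one parameter only.
edit(table, [1], "height", 0.9)
print("\n".join(describe(table)))
```

In this sketch a slider or a language-model-produced instruction would both end up calling `edit`, which is the property the abstract attributes to an editable representation.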


Anthropic prices Claude Opus 4.5 at $5/1M input and $25/1M output tokens, much cheaper than Opus 4.1 at $15/$75 but still pricier than GPT-5.1 and Gemini 3 Pro (Simon Willison/Simon Willison's Weblog)

https://simonwillison.net/2025/Nov/24/claude-opus/
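
To make the price gap concrete, here is a small sketch that applies the per-million-token prices quoted above to a single request. The token counts are a made-up workload chosen for illustration, and the `request_cost` helper is hypothetical, not part of any SDK.

```python
# Prices in USD per 1M tokens, as quoted in the post above.
PRICES = {
    "Claude Opus 4.5": {"input": 5.00, "output": 25.00},
    "Claude Opus 4.1": {"input": 15.00, "output": 75.00},
}


def request_cost(model, input_tokens, output_tokens):
    """Return the USD cost of one request at the listed per-1M-token prices."""
    price = PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Hypothetical workload: 200k input tokens and 20k output tokens.
workload = {"input_tokens": 200_000, "output_tokens": 20_000}

for model in PRICES:
    print(f"{model}: ${request_cost(model, **workload):.2f}")
```

Because both the input and output prices dropped by the same factor, Opus 4.5 comes out at one third of the Opus 4.1 cost for any mix of input and output tokens.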

Researchers say GPT-4.1, Claude 3.7 Sonnet, Gemini 2.5 Pro, and Grok 3 can reproduce long excerpts from books they were trained on when strategically prompted (Alex Reisner/The Atlantic)

https://www.

Curious that whenever someone shows me “the cool #AI flow” they built that’s supposed to be impressive, the conversation goes the same way:

Stage 1: “But you don’t understand. You don’t like AI because you haven’t used it right. Let me show you how much you can do with it.”

Stage 2: “Here are the steps in the flow and the instructions I feed to this agent / custom GPT / Claude project. I tell it to do X, reference document Y, and aim for Z.”

Stage 3: “Now, let me show you the results it gives.”

*Writes task, presses to run the prompt.*

Stage 4: “Umm sorry it’s taking a while. It’s fast but not instant. And by the way, the prompt isn’t perfect, you can definitely make it better. I just threw this together real quick the other day. It makes some mistakes, but it’s really good.”

Stage 5: “Uuuuuuh actually don’t look at the output.” *scrolls or stops screen share or pulls device away.*

“You know it’s already doing so well, if I do more prompt engineering it will get really good but I need to give it better instructions. And it ran just fine last night, I don’t know what’s up with it. And this is a cheap model, if we use another model it will be better.”

Stage 6: “You know, you really shouldn’t judge this so much. The technology will improve, it will get there sooner than you know and then you’ll regret not trying it sooner.”

So curious that this keeps happening 🤷♀️

#LLMs #work #tech #AIBubble