Should we teach vibe coding? Here's why not.

Should AI coding be taught in undergrad CS education?

1/2

I teach undergraduate computer science labs, including for intro and more-advanced core courses. I don't publish (non-negligible) scholarly work in the area, but I've got years of craft expertise in course design, and I do follow the academic literature to some degree. In other words, In not the world's leading expert, but I have spent a lot of time thinking about course design, and consider myself competent at it, with plenty of direct experience in what knowledge & skills I can expect from students as they move through the curriculum.

I'm also strongly against most uses of what's called "AI" these days (specifically, generative deep neutral networks as supplied by our current cadre of techbro). There are a surprising number of completely orthogonal reasons to oppose the use of these systems, and a very limited number of reasonable exceptions (overcoming accessibility barriers is an example). On the grounds of environmental and digital-commons-pollution costs alone, using specifically the largest/newest models is unethical in most cases.

But as any good teacher should, I constantly question these evaluations, because I worry about the impact on my students should I eschew teaching relevant tech for bad reasons (and even for his reasons). I also want to make my reasoning clear to students, who should absolutely question me on this. That inspired me to ask a simple question: ignoring for one moment the ethical objections (which we shouldn't, of course; they're very stark), at what level in the CS major could I expect to teach a course about programming with AI assistance, and expect students to succeed at a more technically demanding final project than a course at the same level where students were banned from using AI? In other words, at what level would I expect students to actually benefit from AI coding "assistance?"

To be clear, I'm assuming that students aren't using AI in other aspects of coursework: the topic of using AI to "help you study" is a separate one (TL;DR it's gross value is not negative, but it's mostly not worth the harm to your metacognitive abilities, which AI-induced changes to the digital commons are making more important than ever).

So what's my answer to this question?

If I'm being incredibly optimistic, senior year. Slightly less optimistic, second year of a masters program. Realistic? Maybe never.

The interesting bit for you-the-reader is: why is this my answer? (Especially given that students would probably self-report significant gains at lower levels.) To start with, [this paper where experienced developers thought that AI assistance sped up their work on real tasks when in fact it slowed it down] (https://arxiv.org/abs/2507.09089) is informative. There are a lot of differences in task between experienced devs solving real bugs and students working on a class project, but it's important to understand that we shouldn't have a baseline expectation that AI coding "assistants" will speed things up in the best of circumstances, and we shouldn't trust self-reports of productivity (or the AI hype machine in general).

Now we might imagine that coding assistants will be better at helping with a student project than at helping with fixing bugs in open-source software, since it's a much easier task. For many programming assignments that have a fixed answer, we know that many AI assistants can just spit out a solution based on prompting them with the problem description (there's another elephant in the room here to do with learning outcomes regardless of project success, but we'll ignore this over too, my focus here is on project complexity reach, not learning outcomes). My question is about more open-ended projects, not assignments with an expected answer. Here's a second study (by one of my colleagues) about novices using AI assistance for programming tasks. It showcases how difficult it is to use AI tools well, and some of these stumbling blocks that novices in particular face.

But what about intermediate students? Might there be some level where the AI is helpful because the task is still relatively simple and the students are good enough to handle it? The problem with this is that as task complexity increases, so does the likelihood of the AI generating (or copying) code that uses more complex constructs which a student doesn't understand. Let's say I have second year students writing interactive websites with JavaScript. Without a lot of care that those students don't know how to deploy, the AI is likely to suggest code that depends on several different frameworks, from React to JQuery, without actually setting up or including those frameworks, and of course three students would be way out of their depth trying to do that. This is a general problem: each programming class carefully limits the specific code frameworks and constructs it expects students to know based on the material it covers. There is no feasible way to limit an AI assistant to a fixed set of constructs or frameworks, using current designs. There are alternate designs where this would be possible (like AI search through adaptation from a controlled library of snippets) but those would be entirely different tools.

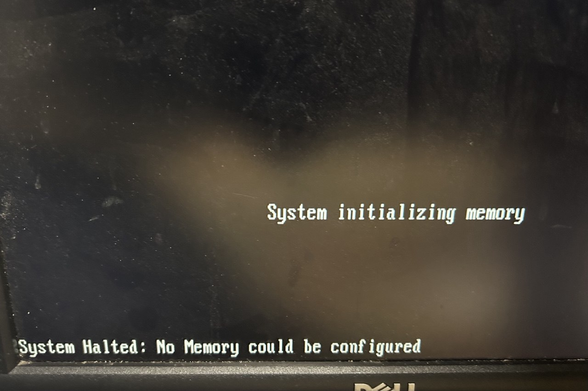

So what happens on a sizeable class project where the AI has dropped in buggy code, especially if it uses code constructs the students don't understand? Best case, they understand that they don't understand and re-prompt, or ask for help from an instructor or TA quickly who helps them get rid of the stuff they don't understand and re-prompt or manually add stuff they do. Average case: they waste several hours and/or sweep the bugs partly under the rug, resulting in a project with significant defects. Students in their second and even third years of a CS major still have a lot to learn about debugging, and usually have significant gaps in their knowledge of even their most comfortable programming language. I do think regardless of AI we as teachers need to get better at teaching debugging skills, but the knowledge gaps are inevitable because there's just too much to know. In Python, for example, the LLM is going to spit out yields, async functions, try/finally, maybe even something like a while/else, or with recent training data, the walrus operator. I can't expect even a fraction of 3rd year students who have worked with Python since their first year to know about all these things, and based on how students approach projects where they have studied all the relevant constructs but have forgotten some, I'm not optimistic seeing these things will magically become learning opportunities. Student projects are better off working with a limited subset of full programming languages that the students have actually learned, and using AI coding assistants as currently designed makes this impossible. Beyond that, even when the "assistant" just introduces bugs using syntax the students understand, even through their 4th year many students struggle to understand the operation of moderately complex code they've written themselves, let alone written by someone else. Having access to an AI that will confidently offer incorrect explanations for bugs will make this worse.

To be sure a small minority of students will be able to overcome these problems, but that minority is the group that has a good grasp of the fundamentals and has broadened their knowledge through self-study, which earlier AI-reliant classes would make less likely to happen. In any case, I care about the average student, since we already have plenty of stuff about our institutions that makes life easier for a favored few while being worse for the average student (note that our construction of that favored few as the "good" students is a large part of this problem).

To summarize: because AI assistants introduce excess code complexity and difficult-to-debug bugs, they'll slow down rather than speed up project progress for the average student on moderately complex projects. On a fixed deadline, they'll result in worse projects, or necessitate less ambitious project scoping to ensure adequate completion, and I expect this remains broadly true through 4-6 years of study in most programs (don't take this as an endorsement of AI "assistants" for masters students; we've ignored a lot of other problems along the way).

There's a related problem: solving open-ended project assignments well ultimately depends on deeply understanding the problem, and AI "assistants" allow students to put a lot of code in their file without spending much time thinking about the problem or building an understanding of it. This is awful for learning outcomes, but also bad for project success. Getting students to see the value of thinking deeply about a problem is a thorny pedagogical puzzle at the best of times, and allowing the use of AI "assistants" makes the problem much much worse. This is another area I hope to see (or even drive) pedagogical improvement in, for what it's worth.

1/2