2025-11-23 16:19:37

Some bots and I made a small Hiera implementation that is focused on being contained in a single file.

I am working, on and off, on super minimal configuration management for Choria Autonomous Agents. I want an agent focused on a single app to fetch its self-contained configuration, including Hiera-like overrides, from a single KV key.

So this is a step toward that: a tiny, narrowly scoped Hiera.
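To make the idea concrete, here is a rough sketch of what resolving a single self-contained document with Hiera-like overrides could look like. This is not the actual implementation or its data format: the `hierarchy`, `data` and `overrides` keys, the `env:{env}` style level names, and the `resolve` and `deep_merge` helpers are all made up for illustration.

```python
import copy


def deep_merge(base, override):
    """Return a new dict where override wins; nested dicts are merged key by key."""
    result = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = deep_merge(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


def resolve(document, facts):
    """Apply matching override sections in hierarchy order; later levels win."""
    resolved = copy.deepcopy(document.get("data", {}))
    for template in document.get("hierarchy", []):
        try:
            key = template.format(**facts)
        except KeyError:
            continue  # a fact needed by this level is missing, so skip the level
        section = document.get("overrides", {}).get(key)
        if section:
            resolved = deep_merge(resolved, section)
    return resolved


# Hypothetical document, as it might be stored under one KV key.
document = {
    "hierarchy": ["env:{env}", "host:{host}"],
    "data": {"log_level": "info", "web": {"port": 8080, "workers": 2}},
    "overrides": {
        "env:prod": {"log_level": "warn", "web": {"workers": 8}},
        "host:web1": {"web": {"port": 9090}},
    },
}

config = resolve(document, {"env": "prod", "host": "web1"})
print(config)
```

With these assumed inputs the defaults in `data` are applied first, then the `env:prod` section, then the `host:web1` section, so the most specific level wins for any key it sets.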

2025-11-22 16:58:13

📢 Climate conference ends with minimal compromise - no phase-out roadmap for fossil fuels

At the close of the 30th World Climate Conference, the delegates agreed on a minimal compromise. The final declaration contains no roadmap for moving away from coal, oil and gas.

➡️ http…

2025-12-20 21:42:02

from my link log —

Minimal Wim: a grid-based typography experiment.

https://raffinaderij.booreiland.amsterdam/minimalwim/

saved 2019-10-28 https…

2025-11-22 18:19:37

It simply makes no sense to expect a unanimous vote for non-smoking when smokers are taking part.

The #COP30 in #Belém ends with a #Minimalkompromiss (minimal compromise): Zwa…

2026-01-22 14:44:36

There are as many desk setups as there are users. I chose minimalism.

https://www-gem.codeberg.page/sys_desk/

2025-12-23 00:30:00

Double Double 🛗

成双 🛗

📷 Nikon F4E

🎞️ Ilford HP5 Plus 400, expired 1993

#filmphotography #Photography #blackandwhite

2025-12-21 01:01:34

2025-12-22 10:33:40

Easy Adaptation: An Efficient Task-Specific Knowledge Injection Method for Large Models in Resource-Constrained Environments

Dong Chen, Zhengqing Hu, Shixing Zhao, Yibo Guo

https://arxiv.org/abs/2512.17771 https://arxiv.org/pdf/2512.17771 https://arxiv.org/html/2512.17771

arXiv:2512.17771v1 Announce Type: new

Abstract: While the enormous parameter scale endows Large Models (LMs) with unparalleled performance, it also limits their adaptability across specific tasks. Parameter-Efficient Fine-Tuning (PEFT) has emerged as a critical approach for effectively adapting LMs to a diverse range of downstream tasks. However, existing PEFT methods face two primary challenges: (1) High resource cost. Although PEFT methods significantly reduce resource demands compared to full fine-tuning, they still require substantial time and memory, making them impractical in resource-constrained environments. (2) Parameter dependency. PEFT methods heavily rely on updating a subset of parameters associated with LMs to incorporate task-specific knowledge. Yet, due to increasing competition in the LMs landscape, many companies have adopted closed-source policies for their leading models, offering access only via Application Programming Interfaces (APIs). Whereas, the expense is often cost-prohibitive and difficult to sustain, as the fine-tuning process of LMs is extremely slow. Even if small models perform far worse than LMs in general, they can achieve superior results on particular distributions while requiring only minimal resources. Motivated by this insight, we propose Easy Adaptation (EA), which designs Specific Small Models (SSMs) to complement the underfitted data distribution for LMs. Extensive experiments show that EA matches the performance of PEFT on diverse tasks without accessing LM parameters, and requires only minimal resources.


2025-12-18 03:45:15

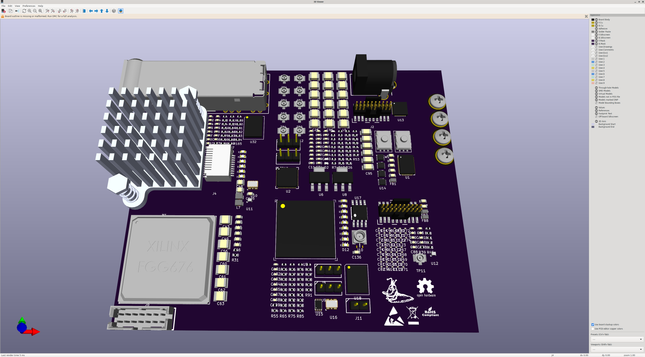

Ok sooo... How minimal do I want to make this STM32MP2 FPGA test board?

I'm tempted to go bare bones: no DDR, minimal BOM, built entirely from parts I have on the shelf to keep costs down.

On one hand it'll be a waste of potential. On the other hand, I have plenty of "real" projects coming up and the main goal is to de-risk them. I'm probably never going to use the DDR, and it would increase layout complexity a lot.