2025-09-13 18:01:37

Mira Murati's Thinking Machines Lab (TML) launches a research blog called Connectionism, and shares its work on resolving nondeterminism and achieving reproducible results from LLMs (Maxwell Zeff/TechCrunch)

https://techcrunch.com/2025/09/10/thinking-machines-lab…

2025-10-13 13:46:47

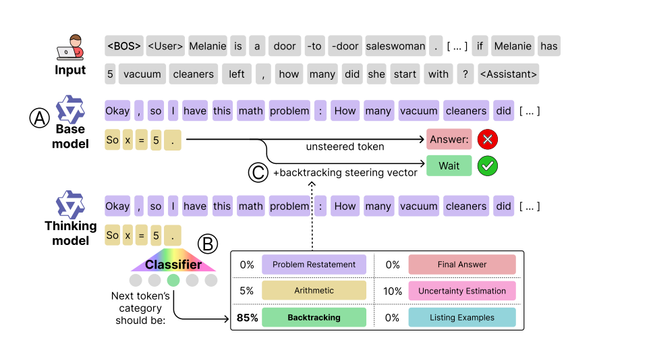

Always fun/challenging to read new AI (pre)papers like this. "Base models know how to reason, thinking models learn when".

#AI #Google #reasoning

2025-10-13 10:41:30

VITA-VLA: Efficiently Teaching Vision-Language Models to Act via Action Expert Distillation

Shaoqi Dong, Chaoyou Fu, Haihan Gao, Yi-Fan Zhang, Chi Yan, Chu Wu, Xiaoyu Liu, Yunhang Shen, Jing Huo, Deqiang Jiang, Haoyu Cao, Yang Gao, Xing Sun, Ran He, Caifeng Shan

https://arxiv.org/abs/2510.09607

2025-08-14 09:41:22

Hybrid Quantum-Classical Latent Diffusion Models for Medical Image Generation

Kübra Yeter-Aydeniz, Nora M. Bauer, Pranay Jain, Max Masnick

https://arxiv.org/abs/2508.09903

2025-08-14 09:48:12

Speed Always Wins: A Survey on Efficient Architectures for Large Language Models

Weigao Sun, Jiaxi Hu, Yucheng Zhou, Jusen Du, Disen Lan, Kexin Wang, Tong Zhu, Xiaoye Qu, Yu Zhang, Xiaoyu Mo, Daizong Liu, Yuxuan Liang, Wenliang Chen, Guoqi Li, Yu Cheng

https://arxiv.org/abs/2508.09834

2025-08-14 12:46:12

The US NSF and Nvidia partner to fund the Open Multimodal Infrastructure to Accelerate Science project, led by Ai2; NSF is contributing $75M and Nvidia $77M (Kyt Dotson/SiliconANGLE)

https://siliconangle.com/2025/08/14/nsf-nvidi…

2025-10-13 10:24:10

Zero-shot image privacy classification with Vision-Language Models

Alina Elena Baia, Alessio Xompero, Andrea Cavallaro

https://arxiv.org/abs/2510.09253

2025-07-14 09:50:42

The Curious Case of Factuality Finetuning: Models' Internal Beliefs Can Improve Factuality

Benjamin Newman, Abhilasha Ravichander, Jaehun Jung, Rui Xin, Hamish Ivison, Yegor Kuznetsov, Pang Wei Koh, Yejin Choi

https://arxiv.org/abs/2507.08371

2025-07-14 09:53:52

Diagnosing Failures in Large Language Models' Answers: Integrating Error Attribution into Evaluation Framework

Zishan Xu, Shuyi Xie, Qingsong Lv, Shupei Xiao, Linlin Song, Sui Wenjuan, Fan Lin

https://arxiv.org/abs/2507.08459

2025-08-11 22:30:41

Nvidia debuts new Omniverse SDKs and Cosmos world foundation models for robotics devs, including Cosmos Reason, a 7B-parameter reasoning vision language model (Rebecca Szkutak/TechCrunch)

https://techcrunch.com/2025/08/11/nvid