2026-01-01 00:30:01

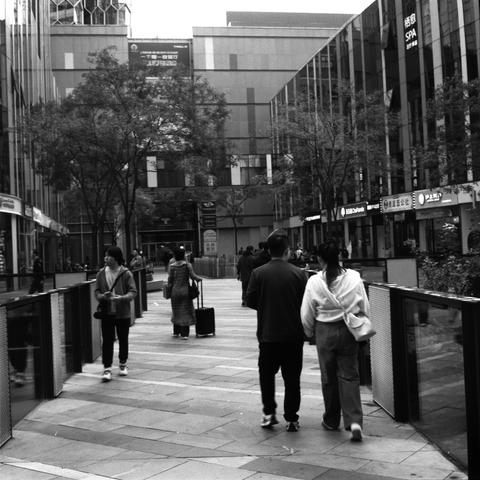

Urban Mirage 👁️🗨️

城市幻境 👁️🗨️

📷 Nikon F4E

🎞️ Ilford HP5 Plus 400, expired 1993

#filmphotography #Photography #blackandwhite

2026-01-04 22:51:40

Perfect day for pond skating! 19°F/-7°C, no wind. This one is the artificial Centennial Lakes Park in #Edina, #Minnesota (a suburb of #Minneapolis). Interestingly, it's set in an office complex. 900 m / half a mile long…

2026-02-05 11:04:04

Also, I haven't added captions yet. I'm planning to do that later today. Apologies for not posting this in a fully accessible way, and thanks for your patience.

Edit:

Updated. It should be more accessible now.

I usually don't add images to my posts, and I realized halfway through that I had a lot more to do. I kind of had to power through before I ran out of dopamine, or I'd never actually post it. Thank you for your patience. I'm working on setting up a good OCR pipeline to make this faster in the future.

Thanks again for your patience, and please feel free to let me know if there are ways I could improve for future posts.

2026-01-04 02:07:20

Un Momento 🕰️

一瞬 🕰️

📷 Zeiss Ikon Super Ikonta 533/16

🎞️ Lucky SHD 400

#filmphotography #Photography #blackandwhite

2025-12-03 14:20:29

I think the root of the “AI” evil goes back to the 1960s, when AI researchers recognized that they had outrageously underestimated the complexity of the human mind.

They were humiliated by their promises that AGI was just a few years away, and then went full goblin mode, which has lasted to this day.

Some of the OG researchers took it quite badly that their work stalled and they weren’t in the limelight anymore.

‣ Marvin Minsky (co-founder of the MIT AI Lab and arguably the most important early AI bro) went on to visit Epstein’s island multiple times.

‣ Karl Steinbuch, who came up with the German term for computer science (“Informatik”) and was also a literal Nazi (and likely war criminal) in World War II, later wrote articles in ultra-right magazines about things like “equal rights rob women of their children”.

‣ John McCarthy (inventor of Lisp and co-author of the document that coined the term “Artificial Intelligence”) was a staunch Republican who years later claimed, in a serious article, that “thermostats have beliefs”.

[one moment, I am receiving more information]

‣ There’s a second Epstein Island AI pioneer? Who was also Chief Learning Officer at… Trump University? That would be Roger Schank (who founded one of the first AI companies during the 1980s AI boom; it even had an IPO. Of course, the 1980s AI bubble burst).

Obviously, all of the above received all the awards in computer science and are highly revered people.

2025-12-06 02:04:35

Sitting on a fancy sofa in a very dim bar, surrounded by a dozen tieflings, sipping NA amari while the DJ spins Camouflage and more modern knock-offs. 14-year-old me is pleased by this. Current me is pleased at how early it is.

2025-12-22 13:54:55

Replaced article(s) found for cs.LG. https://arxiv.org/list/cs.LG/new

[4/5]:

- Sample, Don't Search: Rethinking Test-Time Alignment for Language Models

Gonçalo Faria, Noah A. Smith

https://arxiv.org/abs/2504.03790 https://mastoxiv.page/@arXiv_csCL_bot/114301112970577326

- A Survey on Archetypal Analysis

Aleix Alcacer, Irene Epifanio, Sebastian Mair, Morten Mørup

https://arxiv.org/abs/2504.12392 https://mastoxiv.page/@arXiv_statME_bot/114357826909813483

- The Stochastic Occupation Kernel (SOCK) Method for Learning Stochastic Differential Equations

Michael L. Wells, Kamel Lahouel, Bruno Jedynak

https://arxiv.org/abs/2505.11622 https://mastoxiv.page/@arXiv_statML_bot/114539065460187982

- BOLT: Block-Orthonormal Lanczos for Trace estimation of matrix functions

Kingsley Yeon, Promit Ghosal, Mihai Anitescu

https://arxiv.org/abs/2505.12289 https://mastoxiv.page/@arXiv_mathNA_bot/114539035462135281

- Clustering and Pruning in Causal Data Fusion

Otto Tabell, Santtu Tikka, Juha Karvanen

https://arxiv.org/abs/2505.15215 https://mastoxiv.page/@arXiv_statML_bot/114550346291754635

- On the performance of multi-fidelity and reduced-dimensional neural emulators for inference of ph...

Chloe H. Choi, Andrea Zanoni, Daniele E. Schiavazzi, Alison L. Marsden

https://arxiv.org/abs/2506.11683 https://mastoxiv.page/@arXiv_statML_bot/114692410563481289

- Beyond Force Metrics: Pre-Training MLFFs for Stable MD Simulations

Maheshwari, Tang, Ock, Kolluru, Farimani, Kitchin

https://arxiv.org/abs/2506.14850 https://mastoxiv.page/@arXiv_physicschemph_bot/114709402590755731

- Quantifying Uncertainty in the Presence of Distribution Shifts

Yuli Slavutsky, David M. Blei

https://arxiv.org/abs/2506.18283 https://mastoxiv.page/@arXiv_statML_bot/114738165218533987

- ZKPROV: A Zero-Knowledge Approach to Dataset Provenance for Large Language Models

Mina Namazi, Alexander Nemecek, Erman Ayday

https://arxiv.org/abs/2506.20915 https://mastoxiv.page/@arXiv_csCR_bot/114754394485208892

- SpecCLIP: Aligning and Translating Spectroscopic Measurements for Stars

Zhao, Huang, Xue, Kong, Liu, Tang, Beers, Ting, Luo

https://arxiv.org/abs/2507.01939 https://mastoxiv.page/@arXiv_astrophIM_bot/114788369702591337

- Towards Facilitated Fairness Assessment of AI-based Skin Lesion Classifiers Through GenAI-based I...

Ko Watanabe, Stanislav Frolov, Aya Hassan, David Dembinsky, Adriano Lucieri, Andreas Dengel

https://arxiv.org/abs/2507.17860 https://mastoxiv.page/@arXiv_csCV_bot/114912976717523345

- PASS: Probabilistic Agentic Supernet Sampling for Interpretable and Adaptive Chest X-Ray Reasoning

Yushi Feng, Junye Du, Yingying Hong, Qifan Wang, Lequan Yu

https://arxiv.org/abs/2508.10501 https://mastoxiv.page/@arXiv_csAI_bot/115032101532614110

- Unified Acoustic Representations for Screening Neurological and Respiratory Pathologies from Voice

Ran Piao, Yuan Lu, Hareld Kemps, Tong Xia, Aaqib Saeed

https://arxiv.org/abs/2508.20717 https://mastoxiv.page/@arXiv_csSD_bot/115111255835875066

- Machine Learning-Driven Predictive Resource Management in Complex Science Workflows

Tasnuva Chowdhury, et al.

https://arxiv.org/abs/2509.11512 https://mastoxiv.page/@arXiv_csDC_bot/115213444524490263

- MatchFixAgent: Language-Agnostic Autonomous Repository-Level Code Translation Validation and Repair

Ali Reza Ibrahimzada, Brandon Paulsen, Reyhaneh Jabbarvand, Joey Dodds, Daniel Kroening

https://arxiv.org/abs/2509.16187 https://mastoxiv.page/@arXiv_csSE_bot/115247172280557686

- Automated Machine Learning Pipeline: Large Language Models-Assisted Automated Dataset Generation ...

Adam Lahouari, Jutta Rogal, Mark E. Tuckerman

https://arxiv.org/abs/2509.21647 https://mastoxiv.page/@arXiv_condmatmtrlsci_bot/115286737423175311

- Quantifying the Impact of Structured Output Format on Large Language Models through Causal Inference

Han Yuan, Yue Zhao, Li Zhang, Wuqiong Luo, Zheng Ma

https://arxiv.org/abs/2509.21791 https://mastoxiv.page/@arXiv_csCL_bot/115287166674809413

- The Generation Phases of Flow Matching: a Denoising Perspective

Anne Gagneux, Ségolène Martin, Rémi Gribonval, Mathurin Massias

https://arxiv.org/abs/2510.24830 https://mastoxiv.page/@arXiv_csCV_bot/115462527449411627

- Data-driven uncertainty-aware seakeeping prediction of the Delft 372 catamaran using ensemble Han...

Giorgio Palma, Andrea Serani, Matteo Diez

https://arxiv.org/abs/2511.04461 https://mastoxiv.page/@arXiv_eessSY_bot/115507785247809767

- Generalized infinite dimensional Alpha-Procrustes based geometries

Salvish Goomanee, Andi Han, Pratik Jawanpuria, Bamdev Mishra

https://arxiv.org/abs/2511.09801 https://mastoxiv.page/@arXiv_statML_bot/115547135711272091

toXiv_bot_toot

2026-02-03 03:53:25

A white man in Dillard, Oregon, was arrested for stopping people on the freeway to demand at gunpoint that they tell him whether they were citizens.

He shot at one person and missed, and attempted to get another into his truck to bring them to the police.

Before his spree, he called the local PD to complain about "foreign" people.

http…

2025-12-28 01:15:15

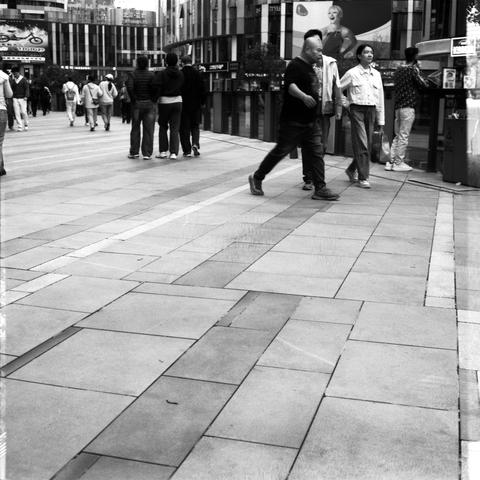

On the Side II 🆓

在边缘 II 🆓

📷 Nikon F4E

🎞️ Ilford HP5 Plus 400, expired 1993

#filmphotography #Photography #blackandwhite

2025-12-25 00:30:02

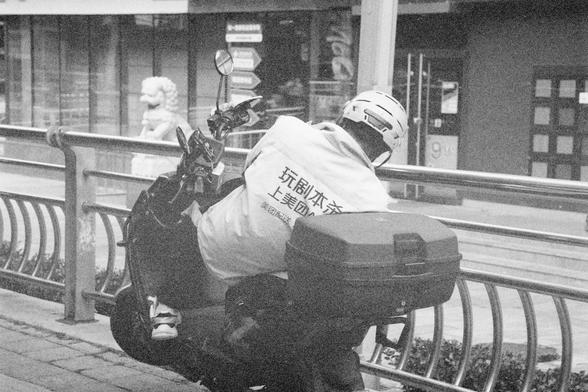

Urban Spots ✴️

城市噪点 ✴️

📷 Nikon F4E

🎞️ Ilford HP5 Plus 400, expired 1993

#filmphotography #Photography #blackandwhite