Ok this is cool. Don't know if this is a studio/publisher thing or Steam is now enforcing this:

>AI Generated Content Disclosure

> We are utilising ElevenLabs' text-to-speech tool to generate voice-over elements within Metro Rivals. All scripts and content are written by Dovetail Games staff, and the voices you hear in-game, which have used ElevenLabs' software, have been licensed by voice actors.

either way, cool!

@…

Understanding the Modality Gap: An Empirical Study on the Speech-Text Alignment Mechanism of Large Speech Language Models

Bajian Xiang, Shuaijiang Zhao, Tingwei Guo, Wei Zou

https://arxiv.org/abs/2510.12116

ParsVoice: A Large-Scale Multi-Speaker Persian Speech Corpus for Text-to-Speech Synthesis

Mohammad Javad Ranjbar Kalahroodi, Heshaam Faili, Azadeh Shakery

https://arxiv.org/abs/2510.10774

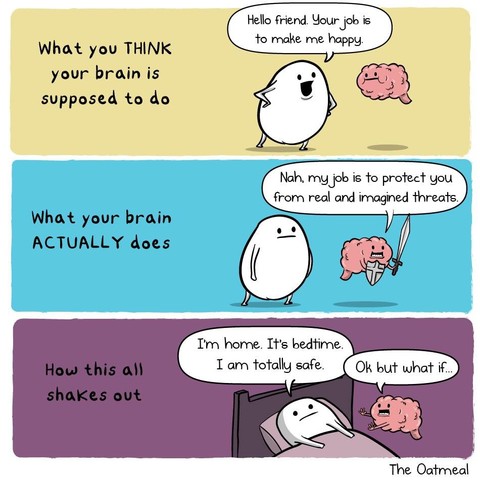

I used a speech to text program today to type “Shirley” and it entered “Surely”

#airplane

BridgeCode: A Dual Speech Representation Paradigm for Autoregressive Zero-Shot Text-to-Speech Synthesis

Jingyuan Xing, Mingru Yang, Zhipeng Li, Xiaofen Xing, Xiangmin Xu

https://arxiv.org/abs/2510.11646

Replaced article(s) found for cs.LG. https://arxiv.org/list/cs.LG/new

[7/7]:

- ParsVoice: A Large-Scale Multi-Speaker Persian Speech Corpus for Text-to-Speech Synthesis

Mohammad Javad Ranjbar Kalahroodi, Heshaam Faili, Azadeh Shakery

WhisTLE: Deeply Supervised, Text-Only Domain Adaptation for Pretrained Speech Recognition Transformers

Akshat Pandey, Karun Kumar, Raphael Tang

https://arxiv.org/abs/2509.10452 …

OKAY IT ONLY JUST OCCURRED TO ME I CAN DO SPEECH TO TEXT FOR ALT TEXT

DiSTAR: Diffusion over a Scalable Token Autoregressive Representation for Speech Generation

Yakun Song, Xiaobin Zhuang, Jiawei Chen, Zhikang Niu, Guanrou Yang, Chenpeng Du, Zhuo Chen, Yuping Wang, Yuxuan Wang, Xie Chen

https://arxiv.org/abs/2510.12210

Here's a great use of AI text-to-speech generation: preparing for a live pitch.

Instead of reading your pitch a hundred times so you can edit it for time, use ElevenLabs.

1. Create an account

2. Find a voice that matches your own cadence

3. Paste your script and have it generate the pitch

You'll immediately see how long the audio file is and can adjust your script for length.

Then you can spend your time **rehearsing** instead of editing.
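Here is a rough sketch of the length check in step 3. It assumes you download or convert the generated audio to an uncompressed WAV file, because Python's standard-library `wave` module cannot read MP3. The helper names `wav_duration_seconds` and `words_to_cut` are hypothetical. The trimming estimate also assumes the voice keeps a roughly constant speaking rate after you shorten the script.

```python
import math
import wave


def wav_duration_seconds(path: str) -> float:
    """Return the playback length of an uncompressed (PCM) WAV file in seconds."""
    with wave.open(path, "rb") as wf:
        # Total sample frames divided by frames per second gives seconds.
        return wf.getnframes() / wf.getframerate()


def words_to_cut(script: str, audio_seconds: float, target_seconds: float) -> int:
    """Hypothetical helper: estimate how many words to trim to fit target_seconds.

    Assumes the synthetic voice speaks at a roughly constant rate, so the
    words-per-second measured on this take also applies to the edited script.
    """
    word_count = len(script.split())
    if audio_seconds <= target_seconds or word_count == 0:
        return 0  # already fits, or there is nothing to trim
    words_per_second = word_count / audio_seconds
    # Round up so the edited script lands at or under the target length.
    return math.ceil((audio_seconds - target_seconds) * words_per_second)
```

For example, a 300-word script that renders at 150 seconds for a two-minute slot gives `words_to_cut(script, 150.0, 120.0) == 60`.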

Perturbation Self-Supervised Representations for Cross-Lingual Emotion TTS: Stage-Wise Modeling of Emotion and Speaker

Cheng Gong, Chunyu Qiang, Tianrui Wang, Yu Jiang, Yuheng Lu, Ruihao Jing, Xiaoxiao Miao, Xiaolei Zhang, Longbiao Wang, Jianwu Dang

https://arxiv.org/abs/2510.11124

I've been meaning to share this for a while, but for any Android users out there who want to use a text-to-speech engine other than Google's, I recommend Sherpa TTS: https://github.com/woheller69/ttsEngine

It's open source, offline, multilingual, and available on F-Droid.

I use text-t…

O_O-VC: Synthetic Data-Driven One-to-One Alignment for Any-to-Any Voice Conversion

Huu Tuong Tu, Huan Vu, cuong tien nguyen, Dien Hy Ngo, Nguyen Thi Thu Trang

https://arxiv.org/abs/2510.09061

The Speech-LLM Takes It All: A Truly Fully End-to-End Spoken Dialogue State Tracking Approach

Nizar El Ghazal, Antoine Caubrière, Valentin Vielzeuf

https://arxiv.org/abs/2510.09424

Unsupervised lexicon learning from speech is limited by representations rather than clustering

Danel Adendorff, Simon Malan, Herman Kamper

https://arxiv.org/abs/2510.09225 https…

DiTReducio: A Training-Free Acceleration for DiT-Based TTS via Progressive Calibration

Yanru Huo, Ziyue Jiang, Zuoli Tang, Qingyang Hong, Zhou Zhao

https://arxiv.org/abs/2509.09748

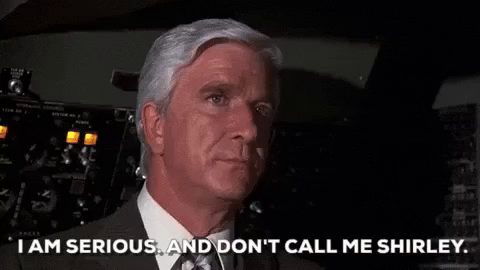

YouTube has some new "feature" that transcribes speech to text (or whatever this is).

While I was watching something about Star Trek and Cardassians, it produced this picture of Kim Kardashian.

I think we shouldn't be afraid of "AI" in any way.😂

Revisiting Direct Speech-to-Text Translation with Speech LLMs: Better Scaling than CoT Prompting?

Oriol Pareras, Gerard I. Gállego, Federico Costa, Cristina España-Bonet, Javier Hernando

https://arxiv.org/abs/2510.03093

M4SER: Multimodal, Multirepresentation, Multitask, and Multistrategy Learning for Speech Emotion Recognition

Jiajun He, Xiaohan Shi, Cheng-Hung Hu, Jinyi Mi, Xingfeng Li, Tomoki Toda

https://arxiv.org/abs/2509.18706

Listening or Reading? Evaluating Speech Awareness in Chain-of-Thought Speech-to-Text Translation

Jacobo Romero-Díaz, Gerard I. Gállego, Oriol Pareras, Federico Costa, Javier Hernando, Cristina España-Bonet

https://arxiv.org/abs/2510.03115

What speech-to-text thinks I'm saying when I say @…

- "are open sigh"

- "our open size"

- "art open side"

Futzing around with Narrator (because of its new Braille viewer, which I am working on testing) and reminded of the new speech log (“Speech Recap”):

Narrator key + Alt + X

Wanted to confirm this is also true with Narrator:

https://adrianroselli.com/2020/08/speech…

ControlAudio: Tackling Text-Guided, Timing-Indicated and Intelligible Audio Generation via Progressive Diffusion Modeling

Yuxuan Jiang, Zehua Chen, Zeqian Ju, Yusheng Dai, Weibei Dou, Jun Zhu

https://arxiv.org/abs/2510.08878

Neuralink plans a US clinical trial in October to test a brain implant that translates thoughts into text, hoping to put its device in a healthy person by 2030 (Ike Swetlitz/Bloomberg)

https://www.bloomberg.com/news/articles/202…

@… I've used Talon, but before I retired I used Dragon Professional on Windows, which I greatly preferred. Now that I'm retired, I don't need speech to text that much. I've only used the free version of Talon, not the "beta", which costs something like $25/month. I found Talon very hard to use, perhaps because I was used to the Dragon way of …

KAME: Tandem Architecture for Enhancing Knowledge in Real-Time Speech-to-Speech Conversational AI

So Kuroki, Yotaro Kubo, Takuya Akiba, Yujin Tang

https://arxiv.org/abs/2510.02327

Towards Responsible Evaluation for Text-to-Speech

Yifan Yang, Hui Wang, Bing Han, Shujie Liu, Jinyu Li, Yong Qin, Xie Chen

https://arxiv.org/abs/2510.06927 https://

Flamed-TTS: Flow Matching Attention-Free Models for Efficient Generating and Dynamic Pacing Zero-shot Text-to-Speech

Hieu-Nghia Huynh-Nguyen, Huynh Nguyen Dang, Ngoc-Son Nguyen, Van Nguyen

https://arxiv.org/abs/2510.02848

IntMeanFlow: Few-step Speech Generation with Integral Velocity Distillation

Wei Wang, Rong Cao, Yi Guo, Zhengyang Chen, Kuan Chen, Yuanyuan Huo

https://arxiv.org/abs/2510.07979 …

A Multilingual Framework for Dysarthria: Detection, Severity Classification, Speech-to-Text, and Clean Speech Generation

Ananya Raghu, Anisha Raghu, Nithika Vivek, Sofie Budman, Omar Mansour

https://arxiv.org/abs/2510.03986

Stream RAG: Instant and Accurate Spoken Dialogue Systems with Streaming Tool Usage

Siddhant Arora, Haidar Khan, Kai Sun, Xin Luna Dong, Sajal Choudhary, Seungwhan Moon, Xinyuan Zhang, Adithya Sagar, Surya Teja Appini, Kaushik Patnaik, Sanat Sharma, Shinji Watanabe, Anuj Kumar, Ahmed Aly, Yue Liu, Florian Metze, Zhaojiang Lin

https://arxiv.…

Emotional Text-To-Speech Based on Mutual-Information-Guided Emotion-Timbre Disentanglement

Jianing Yang, Sheng Li, Takahiro Shinozaki, Yuki Saito, Hiroshi Saruwatari

https://arxiv.org/abs/2510.01722

TMD-TTS: A Unified Tibetan Multi-Dialect Text-to-Speech Synthesis for Ü-Tsang, Amdo and Kham Speech Dataset Generation

Yutong Liu, Ziyue Zhang, Ban Ma-bao, Renzeng Duojie, Yuqing Cai, Yongbin Yu, Xiangxiang Wang, Fan Gao, Cheng Huang, Nyima Tashi

https://arxiv.org/abs/2509.18060

The Unheard Alternative: Contrastive Explanations for Speech-to-Text Models

Lina Conti, Dennis Fucci, Marco Gaido, Matteo Negri, Guillaume Wisniewski, Luisa Bentivogli

https://arxiv.org/abs/2509.26543 …

DialoSpeech: Dual-Speaker Dialogue Generation with LLM and Flow Matching

Hanke Xie, Dake Guo, Chengyou Wang, Yue Li, Wenjie Tian, Xinfa Zhu, Xinsheng Wang, Xiulin Li, Guanqiong Miao, Bo Liu, Lei Xie

https://arxiv.org/abs/2510.08373

DialoSpeech: Dual-Speaker Dialogue Generation with LLM and Flow Matching

Recent advances in text-to-speech (TTS) synthesis, particularly those leveraging large language models (LLMs), have significantly improved expressiveness and naturalness. However, generating human-like, interactive dialogue speech remains challenging. Current systems face limitations due to the scarcity of dual-track data and difficulties in achieving naturalness, contextual coherence, and interactional dynamics, such as turn-taking, overlapping speech, and speaker consistency, in multi-turn co…

Crosslisted article(s) found for cs.LG. https://arxiv.org/list/cs.LG/new

[3/3]:

- VoXtream: Full-Stream Text-to-Speech with Extremely Low Latency

Nikita Torgashov, Gustav Eje Henter, Gabriel Skantze

BatonVoice: An Operationalist Framework for Enhancing Controllable Speech Synthesis with Linguistic Intelligence from LLMs

Yue Wang, Ruotian Ma, Xingyu Chen, Zhengliang Shi, Wanshun Chen, Huang Liu, Jiadi Yao, Qu Yang, Qingxuan Jiang, Fanghua Ye, Juntao Li, Min Zhang, Zhaopeng Tu, Xiaolong Li, Linus

https://arxiv.org/abs/2509.26514…

Evaluating Self-Supervised Speech Models via Text-Based LLMS

Takashi Maekaku, Keita Goto, Jinchuan Tian, Yusuke Shinohara, Shinji Watanabe

https://arxiv.org/abs/2510.04463 https…

Comprehend and Talk: Text to Speech Synthesis via Dual Language Modeling

Junjie Cao, Yichen Han, Ruonan Zhang, Xiaoyang Hao, Hongxiang Li, Shuaijiang Zhao, Yue Liu, Xiao-Ping Zhng

https://arxiv.org/abs/2509.22062

Audio Forensics Evaluation (SAFE) Challenge

Kirill Trapeznikov, Paul Cummer, Pranay Pherwani, Jai Aslam, Michael S. Davinroy, Peter Bautista, Laura Cassani, Matthew Stamm, Jill Crisman

https://arxiv.org/abs/2510.03387

Word-Level Emotional Expression Control in Zero-Shot Text-to-Speech Synthesis

Tianrui Wang, Haoyu Wang, Meng Ge, Cheng Gong, Chunyu Qiang, Ziyang Ma, Zikang Huang, Guanrou Yang, Xiaobao Wang, Eng Siong Chng, Xie Chen, Longbiao Wang, Jianwu Dang

https://arxiv.org/abs/2509.24629

Cross-Attention is Half Explanation in Speech-to-Text Models

Sara Papi, Dennis Fucci, Marco Gaido, Matteo Negri, Luisa Bentivogli

https://arxiv.org/abs/2509.18010 https://

From Text to Talk: Audio-Language Model Needs Non-Autoregressive Joint Training

Tianqiao Liu, Xueyi Li, Hao Wang, Haoxuan Li, Zhichao Chen, Weiqi Luo, Zitao Liu

https://arxiv.org/abs/2509.20072

Beyond Video-to-SFX: Video to Audio Synthesis with Environmentally Aware Speech

Xinlei Niu, Jianbo Ma, Dylan Harper-Harris, Xiangyu Zhang, Charles Patrick Martin, Jing Zhang

https://arxiv.org/abs/2509.15492

Speak, Edit, Repeat: High-Fidelity Voice Editing and Zero-Shot TTS with Cross-Attentive Mamba

Baher Mohammad, Magauiya Zhussip, Stamatios Lefkimmiatis

https://arxiv.org/abs/2510.04738

UniVoice: Unifying Autoregressive ASR and Flow-Matching based TTS with Large Language Models

Wenhao Guan, Zhikang Niu, Ziyue Jiang, Kaidi Wang, Peijie Chen, Qingyang Hong, Lin Li, Xie Chen

https://arxiv.org/abs/2510.04593

Speech-to-See: End-to-End Speech-Driven Open-Set Object Detection

Wenhuan Lu, Xinyue Song, Wenjun Ke, Zhizhi Yu, Wenhao Yang, Jianguo Wei

https://arxiv.org/abs/2509.16670 https:…

Sidon: Fast and Robust Open-Source Multilingual Speech Restoration for Large-scale Dataset Cleansing

Wataru Nakata, Yuki Saito, Yota Ueda, Hiroshi Saruwatari

https://arxiv.org/abs/2509.17052

VSSFlow: Unifying Video-conditioned Sound and Speech Generation via Joint Learning

Xin Cheng, Yuyue Wang, Xihua Wang, Yihan Wu, Kaisi Guan, Yijing Chen, Peng Zhang, Xiaojiang Liu, Meng Cao, Ruihua Song

https://arxiv.org/abs/2509.24773

Preservation of Language Understanding Capabilities in Speech-aware Large Language Models

Marek Kubis, Paweł Skórzewski, Iwona Christop, Mateusz Czyżnikiewicz, Jakub Kubiak, Łukasz Bondaruk, Marcin Lewandowski

https://arxiv.org/abs/2509.12171

Group Relative Policy Optimization for Text-to-Speech with Large Language Models

Chang Liu, Ya-Jun Hu, Ying-Ying Gao, Shi-Lei Zhang, Zhen-Hua Ling

https://arxiv.org/abs/2509.18798

DiaMoE-TTS: A Unified IPA-Based Dialect TTS Framework with Mixture-of-Experts and Parameter-Efficient Zero-Shot Adaptation

Ziqi Chen, Gongyu Chen, Yihua Wang, Chaofan Ding, Zihao chen, Wei-Qiang Zhang

https://arxiv.org/abs/2509.22727

Eliminating stability hallucinations in llm-based tts models via attention guidance

ShiMing Wang, ZhiHao Du, Yang Xiang, TianYu Zhao, Han Zhao, Qian Chen, XianGang Li, HanJie Guo, ZhenHua Ling

https://arxiv.org/abs/2509.19852

SpeechOp: Inference-Time Task Composition for Generative Speech Processing

Justin Lovelace, Rithesh Kumar, Jiaqi Su, Ke Chen, Kilian Q Weinberger, Zeyu Jin

https://arxiv.org/abs/2509.14298

Direct Simultaneous Translation Activation for Large Audio-Language Models

Pei Zhang, Yiming Wang, Jialong Tang, Baosong Yang, Rui Wang, Derek F. Wong, Fei Huang

https://arxiv.org/abs/2509.15692

Direct Preference Optimization for Speech Autoregressive Diffusion Models

Zhijun Liu, Dongya Jia, Xiaoqiang Wang, Chenpeng Du, Shuai Wang, Zhuo Chen, Haizhou Li

https://arxiv.org/abs/2509.18928

From Fuzzy Speech to Medical Insight: Benchmarking LLMs on Noisy Patient Narratives

Eden Mama, Liel Sheri, Yehudit Aperstein, Alexander Apartsin

https://arxiv.org/abs/2509.11803

DAIEN-TTS: Disentangled Audio Infilling for Environment-Aware Text-to-Speech Synthesis

Ye-Xin Lu, Yu Gu, Kun Wei, Hui-Peng Du, Yang Ai, Zhen-Hua Ling

https://arxiv.org/abs/2509.14684

Crosslisted article(s) found for cs.SD. https://arxiv.org/list/cs.SD/new

[1/1]:

- Data-efficient Targeted Token-level Preference Optimization for LLM-based Text-to-Speech

Rikuto Kotoge, Yuichi Sasaki

SENSE models: an open source solution for multilingual and multimodal semantic-based tasks

Salima Mdhaffar, Haroun Elleuch, Chaimae Chellaf, Ha Nguyen, Yannick Estève

https://arxiv.org/abs/2509.12093

Discrete Diffusion for Generative Modeling of Text-Aligned Speech Tokens

Pin-Jui Ku, He Huang, Jean-Marie Lemercier, Subham Sekhar Sahoo, Zhehuai Chen, Ante Jukić

https://arxiv.org/abs/2509.20060

CS-FLEURS: A Massively Multilingual and Code-Switched Speech Dataset

Brian Yan, Injy Hamed, Shuichiro Shimizu, Vasista Lodagala, William Chen, Olga Iakovenko, Bashar Talafha, Amir Hussein, Alexander Polok, Kalvin Chang, Dominik Klement, Sara Althubaiti, Puyuan Peng, Matthew Wiesner, Thamar Solorio, Ahmed Ali, Sanjeev Khudanpur, Shinji Watanabe, Chih-Chen Chen, Zhen Wu, Karim Benharrak, Anuj Diwan, Samuele Cornell, Eunjung Yeo, Kwanghee Choi, Carlos Carvalho, Karen Rosero

Semantic-VAE: Semantic-Alignment Latent Representation for Better Speech Synthesis

Zhikang Niu, Shujie Hu, Jeongsoo Choi, Yushen Chen, Peining Chen, Pengcheng Zhu, Yunting Yang, Bowen Zhang, Jian Zhao, Chunhui Wang, Xie Chen

https://arxiv.org/abs/2509.22167

Canary-1B-v2 & Parakeet-TDT-0.6B-v3: Efficient and High-Performance Models for Multilingual ASR and AST

Monica Sekoyan, Nithin Rao Koluguri, Nune Tadevosyan, Piotr Zelasko, Travis Bartley, Nick Karpov, Jagadeesh Balam, Boris Ginsburg

https://arxiv.org/abs/2509.14128

Exploring Fine-Tuning of Large Audio Language Models for Spoken Language Understanding under Limited Speech data

Youngwon Choi, Jaeyoon Jung, Hyeonyu Kim, Huu-Kim Nguyen, Hwayeon Kim

https://arxiv.org/abs/2509.15389

TICL: Text-Embedding KNN For Speech In-Context Learning Unlocks Speech Recognition Abilities of Large Multimodal Models

Haolong Zheng, Yekaterina Yegorova, Mark Hasegawa-Johnson

https://arxiv.org/abs/2509.13395

MBCodec:Thorough disentangle for high-fidelity audio compression

Ruonan Zhang, Xiaoyang Hao, Yichen Han, Junjie Cao, Yue Liu, Kai Zhang

https://arxiv.org/abs/2509.17006 https://…

Deep Dubbing: End-to-End Auto-Audiobook System with Text-to-Timbre and Context-Aware Instruct-TTS

Ziqi Dai, Yiting Chen, Jiacheng Xu, Liufei Xie, Yuchen Wang, Zhenchuan Yang, Bingsong Bai, Yangsheng Gao, Wenjiang Zhou, Weifeng Zhao, Ruohua Zhou

https://arxiv.org/abs/2509.15845

Cross-Lingual F5-TTS: Towards Language-Agnostic Voice Cloning and Speech Synthesis

Qingyu Liu, Yushen Chen, Zhikang Niu, Chunhui Wang, Yunting Yang, Bowen Zhang, Jian Zhao, Pengcheng Zhu, Kai Yu, Xie Chen

https://arxiv.org/abs/2509.14579

DIVERS-Bench: Evaluating Language Identification Across Domain Shifts and Code-Switching

Jessica Ojo, Zina Kamel, David Ifeoluwa Adelani

https://arxiv.org/abs/2509.17768 https:/…

Do You Hear What I Mean? Quantifying the Instruction-Perception Gap in Instruction-Guided Expressive Text-To-Speech Systems

Yi-Cheng Lin, Huang-Cheng Chou, Tzu-Chieh Wei, Kuan-Yu Chen, Hung-yi Lee

https://arxiv.org/abs/2509.13989

Nord-Parl-TTS: Finnish and Swedish TTS Dataset from Parliament Speech

Zirui Li, Jens Edlund, Yicheng Gu, Nhan Phan, Lauri Juvela, Mikko Kurimo

https://arxiv.org/abs/2509.17988 h…

Audiobook-CC: Controllable Long-context Speech Generation for Multicast Audiobook

Min Liu, JingJing Yin, Xiang Zhang, Siyu Hao, Yanni Hu, Bin Lin, Yuan Feng, Hongbin Zhou, Jianhao Ye

https://arxiv.org/abs/2509.17516

A Lightweight Pipeline for Noisy Speech Voice Cloning and Accurate Lip Sync Synthesis

Javeria Amir, Farwa Attaria, Mah Jabeen, Umara Noor, Zahid Rashid

https://arxiv.org/abs/2509.12831

Crosslisted article(s) found for cs.SD. https://arxiv.org/list/cs.SD/new

[1/1]:

- Emotion-Aligned Generation in Diffusion Text to Speech Models via Preference-Guided Optimization

Jiacheng Shi, Hongfei Du, Yangfan He, Y. Alicia Hong, Ye Gao

![Speech to text by youtube (picture from Youtube "rewrite" feature):

Drago 4 features an unusually large temperate zone.

However, it is within three light years of Kardashian space.

[Explanation under Kardashian word]:

Kim Kardashian

Kim Kardashian is american well known model and business woman... [for more pres]](https://cdn.social.linux.pizza/system/media_attachments/files/115/611/173/013/020/200/small/2c163e7a807234a3.png)