2025-12-31 06:00:16

amazon_copurchases: Amazon co-purchasing network (2003)

Network of items for sale on amazon.com in 2003 and the items they "recommend" (via the "Customers Who Bought This Item Also Bought" feature). If one item is frequently co-purchased with another, then the first item recommends the second.

This network has 403394 nodes and 3387388 edges.

Tags: Economic, Commerce, Unweighted
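A minimal Python sketch of the structure described above, assuming each stated edge is one directed "recommends" link (u -> v meaning item u recommends item v). The input format (an iterable of item-ID pairs) and the function names are hypothetical, because the post does not say how the dataset is stored. Under that assumption, the stated counts give a mean of roughly 8.4 recommendations per item.

```python
from collections import defaultdict


def build_recommendation_graph(copurchase_pairs):
    """Build a directed graph where an edge u -> v means item u recommends item v.

    copurchase_pairs is a hypothetical iterable of (u, v) item IDs.
    """
    graph = defaultdict(set)
    for u, v in copurchase_pairs:
        graph[u].add(v)             # unweighted: repeated pairs collapse into one edge
        graph.setdefault(v, set())  # register items that only receive recommendations
    return graph


def mean_out_degree(num_nodes, num_edges):
    # Each directed edge adds exactly one to some node's out-degree.
    return num_edges / num_nodes


# Toy example with hypothetical item IDs ("A", "B" appears twice on purpose).
toy = build_recommendation_graph([("A", "B"), ("A", "C"), ("B", "C"), ("A", "B")])
print(sorted(toy["A"]))                       # ['B', 'C']
print(sum(len(out) for out in toy.values()))  # 3

# Node and edge counts stated in the post.
print(round(mean_out_degree(403394, 3387388), 2))  # 8.4
```

Storing each item's recommendations as a set matches the "Unweighted" tag: an edge either exists or it does not, so a repeated pair adds nothing.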

2025-12-27 23:35:55

Have a joyful #DayOfDionysos here at Erotic Mythology! 🍇

"[Silenus] great nurse of Bakkhos [. . .] surrounded by the nurses young and fair, Naiades and Bacchae who ivy bear, with all your Satyrs."

Orphic Hymn 54 to #Silenus

🏛

2025-12-23 22:05:21

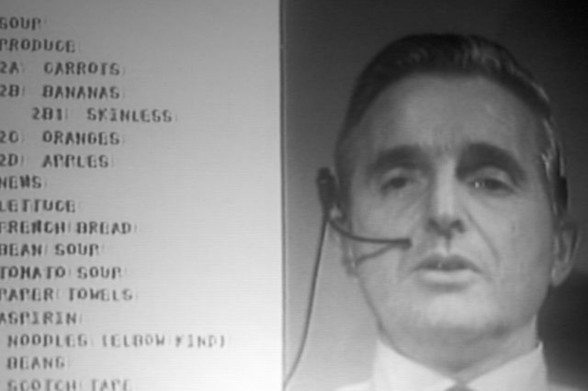

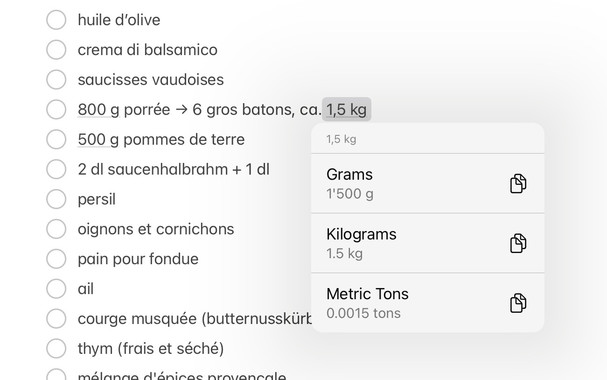

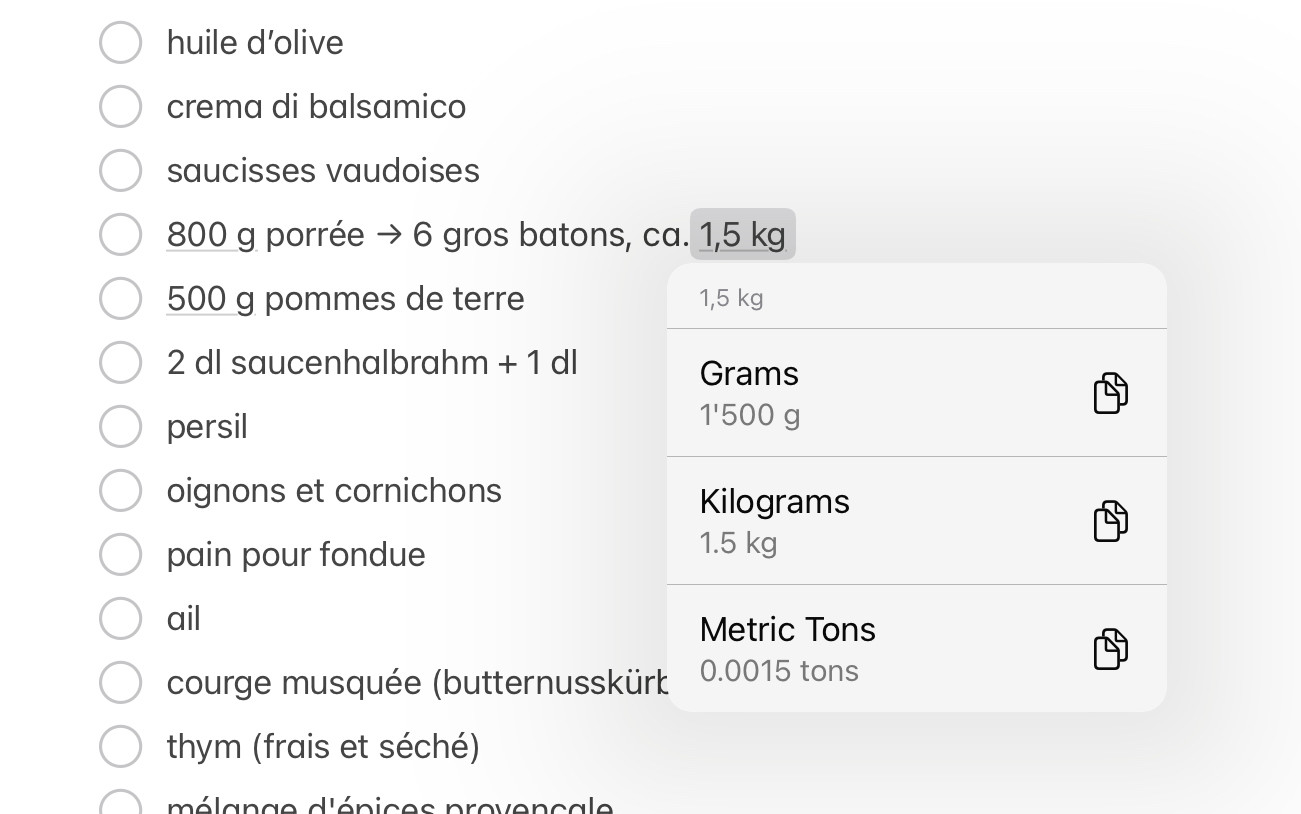

I rarely make electronic shopping lists, so I only discovered today that the Notes app has a handy feature that converts kilograms into tons. Even when using the comma as decimal separator! It doesn’t recognize “dl” (customary in Switzerland), though, and come to think of it, it has none of the features demoed by Doug Engelbart in 1968.

2025-12-22 20:24:34

One might wonder whether Denmark/Greenland would refuse to allow this person to enter Greenland.

If a government official from Sudan with a professed mission to annex Texas tried to enter the US, I would expect that person to be stopped at the entry kiosk and sent back.

"New Trump envoy says he will serve to make Greenland part of US"

2025-11-22 00:59:37

2026-01-05 11:44:42

If you are an anti-fascist, you are against petroleum. Petroleum funds fascism globally. It is at the heart of the military industrial complex driving global imperialism, from both the US and Russia. Motonormativity is fascist, both in its elitist roots and in its ties to historical fascism (Hitler hated bikes, just on principle). Oil is militarism.

Oil is the dominant resource which drives war, both in terms of it being the primary spoil wars are fought over and in terms of fueling the military vehicles and weapons that carry out those wars. Practically every war since (and including) WWII has been over oil. Genocides are carried out to secure oil. Gaza is over oil, in more ways than one.

Oil is the absolute enemy, and AI is simply an extension of that: an attempt to atomize us so we can't resist the oil-centric global order, one last grasp at the control over our lives oil has given to those whose power is now threatened by a solar punk future.

"I haven't written for a few weeks now. As I write the closing chapter and begin rewriting previous sections, everything feels both more distant and more immediate. The working title [Kairos] has only continued to feel more and more resonant, both during the writing and during my pause."

Now is the time to resist by making something different, by creating a world fundamentally opposed to these systems of oppression.

This is the last in my Kairos series. From here on out I'll be editing to try and make it more of a book than a series of posts. Thanks to everyone who has helped so far. All editing is welcome (typos, spell checks, questions and challenges). Between ADHD and dyslexia, it's always hard for my brain to notice mistakes in my own text so I always appreciate the support of those who can.

https://anarchoccultism.org/building-zion/kairos

2025-12-27 13:00:09

dbtropes_feature: Artistic works and their tropes

A bipartite network of artistic works (movies, novels, etc.) and their tropes (stylistic conventions or devices), as extracted from tvtropes.org. The date of this snapshot is uncertain.

This network has 152093 nodes and 3232134 edges.

Tags: Informational, Relatedness, Unweighted

https…
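A minimal Python sketch of how a bipartite network like this one can be held in memory, assuming the data arrives as (work, trope) pairs. The function names and toy IDs are hypothetical; the post does not describe the dataset's file format. Counting nodes as works plus tropes assumes the two sides use distinct IDs and that every node has at least one edge.

```python
from collections import defaultdict


def build_bipartite(work_trope_pairs):
    """Store a work-trope bipartite graph as two adjacency maps.

    Every edge joins a work to a trope; there are no work-work or
    trope-trope edges. work_trope_pairs is a hypothetical iterable.
    """
    tropes_of = defaultdict(set)   # work -> tropes it uses
    works_with = defaultdict(set)  # trope -> works that use it
    for work, trope in work_trope_pairs:
        tropes_of[work].add(trope)
        works_with[trope].add(work)
    return tropes_of, works_with


def shared_tropes(tropes_of, work_a, work_b):
    # .get avoids inserting unknown works into the defaultdict.
    return tropes_of.get(work_a, set()) & tropes_of.get(work_b, set())


# Toy data with hypothetical IDs.
pairs = [("work_1", "trope_x"), ("work_1", "trope_y"), ("work_2", "trope_x")]
tropes_of, works_with = build_bipartite(pairs)

num_nodes = len(tropes_of) + len(works_with)         # works + tropes
num_edges = sum(len(t) for t in tropes_of.values())  # one edge per (work, trope) pair
print(num_nodes, num_edges)                          # 4 3
print(shared_tropes(tropes_of, "work_1", "work_2"))  # {'trope_x'}
```

Intersecting two works' trope sets is one simple relatedness measure between works; it is illustrative only and not a claim about how the dataset itself was built.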

2025-11-26 00:00:13

amazon_copurchases: Amazon co-purchasing network (2003)

Network of items for sale on amazon.com in 2003 and the items they "recommend" (via the "Customers Who Bought This Item Also Bought" feature). If one item is frequently co-purchased with another, then the first item recommends the second.

This network has 400727 nodes and 3200440 edges.

Tags: Economic, Commerce, Unweighted

2025-11-25 11:00:15

amazon_copurchases: Amazon co-purchasing network (2003)

Network of items for sale on amazon.com in 2003 and the items they "recommend" (via the "Customers Who Bought This Item Also Bought" feature). If one item is frequently co-purchased with another, then the first item recommends the second.

This network has 403394 nodes and 3387388 edges.

Tags: Economic, Commerce, Unweighted