2025-11-19 06:07:23

Part of why #Trump has always been so hard to pin down politically is that he was always representing highly conflicting interests. Now, as that eats him alive, the GOP is fracturing into two main groups: the Pinochet/Franco wing and the Hitler wing.

The Pinochet/Franco wing (let's call them PF) is led by Vance. PF is also a coalition with some competing interests, but it's basically evangelical leaders, Opus Dei (fascist Catholics), tech fascists (Yarvinites), pharma, and the other usual big Republican donors. They support Israel, some because apartheid is extremely profitable and some because they support the genocide of Palestinians in order to bring about the end of the world. They are split between extremely antisemitic evangelicals and Zionists, who want similar things for completely different reasons. PF wants strong immigration enforcement because it lets them exploit immigrants; they don't want actual ethnic cleansing (just the constant threat of it). They want H1B visas because they want a precarious tech workforce. They want to end tariffs because they support free trade and don't actually care about things being made here.

The Hitler wing is led by Nick Fuentes. I think they're a more unified group, but they're going to try to pull together a coalition that I don't think can really work. They're against Israel because they believe in some batshit antisemitic conspiracy theory (which they are trying to inject alongside legitimate criticism of Israel). They are focused on the release of the #EpsteinFiles because they believe the files show that Epstein worked for Mossad. They don't think the ICE raids are going far enough, and they oppose H1Bs because they are racists. They want a full ethnic cleansing of the US where everyone who isn't "white" is either enslaved for menial labor, deported, or dead. But they're also critical of big business, partly because of conspiracy theories but also because they think their best option is to push for a white socialism (a red/brown alliance).

Both of them want to sink Trump because they see him as standing in the way of their objectives. Both see #Epstein as an opportunity. Both of them have absolutely terrifying visions of authoritarian dictatorship, but they're different dictatorships with opposing interests. Even within these groups there may be opportunities to fracture them further.

While these fractures decrease the likelihood of either group getting enough people together, each group's vision is clearer and thus more likely to succeed if they can make that happen. Now is absolutely *not* the time to just enjoy the collapse; we need to keep up or accelerate anti-fascist efforts to avoid repeating some of the mistakes of history.

Edit:

I should note that this isn't *totally* original analysis. I'll link a video later when I have time to find it.

Here it is:

#USPol

2025-12-15 20:16:03

Managed to put the new Pi music player together before leaving work today. Got it set up with remote control and did a quick playback test. Next stop: speaker mounting, and figuring out whether I should bet on physical security through obscurity (making it not easily visible) or use a moderately long RCA cable. What’s a reasonable cable length with decent quality cables?

2025-11-30 12:12:58

- 60 out of 100 times it’ll “sync” and freeze with all 4 LEDs on. The Wii U does a little beep but doesn’t show the controller and no inputs work.

- 38 out of 100 times the same thing will happen but with all LEDs off

- You need to remove the batteries in order to take the wiimotes out of these states

- 2 out of 100 times the wiimotes actually connect, but show up with 1 battery bar and almost no inputs go through (and the ones that do are extremely laggy, except for the power button)

- The manual states that if this happens you can remove the batteries, wait 3 minutes, and connect again (without syncing). That does fix it sometimes, but it really only worked once for each wiimote.

- A truly successful connection only happens after the “low battery” one, and even then it seems hard to achieve the first time.

- Afterwards you’re supposed to be able to reconnect without syncing and then not encounter the issues anymore. That was true for the pink wiimote, but the blue wiimote had to be synced again. I spent quite a few hours trying and wasn’t able to get a successful connection.

- If you connect the pink wiimote (the one that was working) while the other wiimote is in the 4-LED freeze state, it’ll break the other one’s connection and you’ll need to sync it again

- Now none of my AliExpress wiimotes are synced and idk when I’ll be able to sync them again

2026-01-05 00:30:02

Un Momento II 🕰️

一瞬 II 🕰️

📷 Zeiss Ikon Super Ikonta 533/16

🎞️ Lucky SHD 400

#filmphotography #Photography #blackandwhite

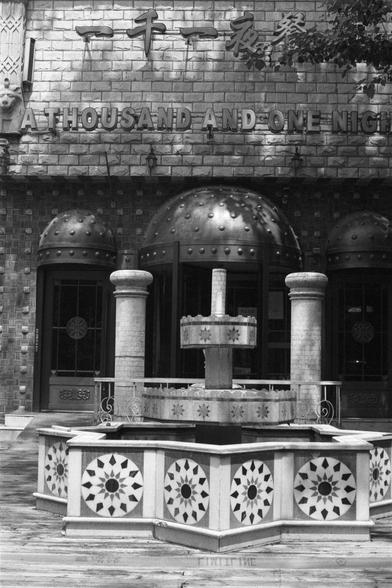

2026-01-06 00:30:01

Un Momento III 🕰️

一瞬 III 🕰️

📷 Nikon F4E

🎞️ ERA 100, expired 1993

#filmphotography #Photography #blackandwhite

2025-11-28 23:49:06

Just finished "Orsinian Tales" by Ursula K. Le Guin. It's... good, but not nearly as anarchist as a lot of her other work. These are short stories set mostly in a fictional Eastern European country during the Cold War, although some stretch farther back into history.

As is typical for Le Guin, there are a bunch of male protagonists and a few parts that might seem to excuse sexual assault, which I've always found an odd thing in Le Guin's work (the rape in "The Dispossessed" bothered me too; the lack of strong female characters in "A Wizard of Earthsea" also sticks out to me). On the other hand, I've read in an interview that she wrote "Earthsea" knowing exactly who her audience was (teenage boys) and intentionally writing something that would sell, which speaks to true mastery of her craft (I think the opening of "The Word for World is Forest" is an example of what an expert can do when wielding an intimate understanding of pulp science fiction tropes with intent).

In any case, she writes sublime similes and sparse characters who nevertheless seem to embody deep wisdom about the human condition. I feel that often enough just a few words or sentences in a story bear forth hefty wisdom while around them Le Guin constructs something like an austere painting in muted tones, full of rich details that one can easily miss.

#AmReading #ReadingNow

2025-11-27 23:08:00

What if we could harvest critical minerals from seaweed instead of digging them out of the ground?

Scientists at NREL and the University of Alaska are studying how seaweed naturally absorbs rare earth elements near Alaska's Bokan Mountain. They're developing cost-effective extraction methods that could lead to large-scale seaweed farms producing these essential minerals—offering a cleaner, more sustainable alternative to traditional mining.

2025-10-22 00:30:01

Moody Urbanity - Urban Geometry 📐

情绪化城市 - 都市几何 📐

📷 Pentax MX

🎞️ Kentmere Pan 400

#filmphotography #Photography #blackandwhite