2026-02-05 02:44:22

https://forensic-architecture.org/investigation/tear-gas-tuesday-in-downtown-portland

Interesting visualizations of 2020 teargas exposure in portland.

2026-02-03 01:55:46

If assumptions hold, SpaceX-xAI could own a full stack of capabilities, from launch to orbital bandwidth to frontier AI models, and offer AI on demand anywhere (Eric Berger/Ars Technica)

https://arstechnica.com/ai/2026/02/spacex-acq…

2026-01-05 00:30:02

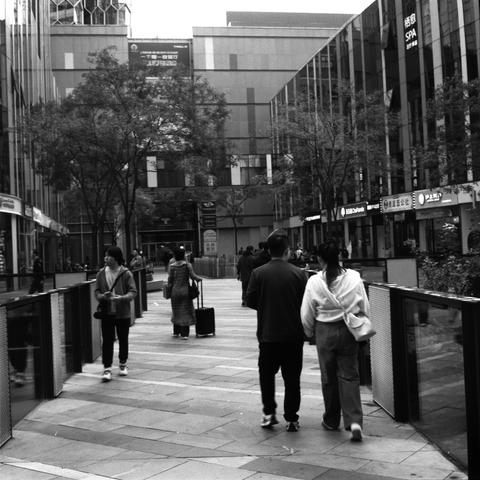

Un Momento II 🕰️

一瞬 II 🕰️

📷 Zeiss Ikon Super Ikonta 533/16

🎞️ Lucky SHD 400

#filmphotography #Photography #blackandwhite

2025-12-05 01:47:18

The Supreme Court, in a Thursday evening order, put on hold a lower court ruling that blocked Texas’ aggressive gerrymander,

a maneuver the state legislature carried out on orders from the Trump administration and the president himself,

hoping to preserve Republicans’ majority in the U.S. House in 2026.

In a brief order, the Court argued, among other things, that the District Court had intervened to block the maps too close to next year’s midterm election.

Justice Sam A…

2026-02-03 11:20:57

I have no idea what #musk has been huffing but clearly no one is willing to tell him no. #spacex have developed a really cool re-usable launch system but it seems like hubris to claim managing data centres in space gives you some sort of competitive advantage. Aside from making hardware swaps impossible…

2026-02-02 17:26:14

Rice University students create map exposing ICE raids across the country;

The new platform lets the public see ICE arrests unfolding across the U.S.

#ICE

2026-01-04 02:07:20

Un Momento 🕰️

一瞬 🕰️

📷 Zeiss Ikon Super Ikonta 533/16

🎞️ Lucky SHD 400

#filmphotography #Photography #blackandwhite

2026-01-31 01:07:38

Hell yes. We need more of Otto Lee and less of the quisling sheriff.

https://sanjosespotlight.com/will-santa-clara-county-arrest-ice-agents-at-super-bowl/

2025-12-23 11:32:52

2026-02-04 00:30:04

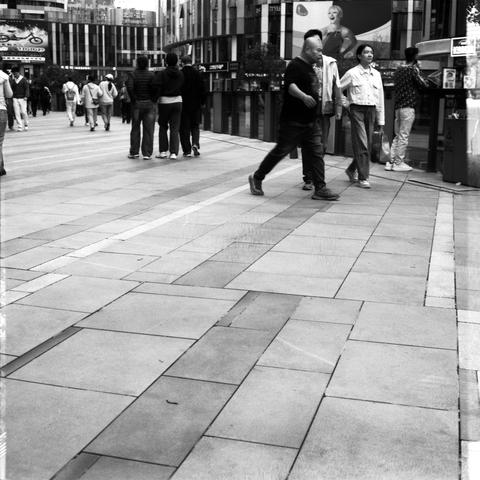

Different Corners V ▶️

不同的角落 V ▶️

📷 Nikon F4E

🎞️ Fujifilm NEOPAN SS, expired 1993

#filmphotography #Photography #blackandwhite