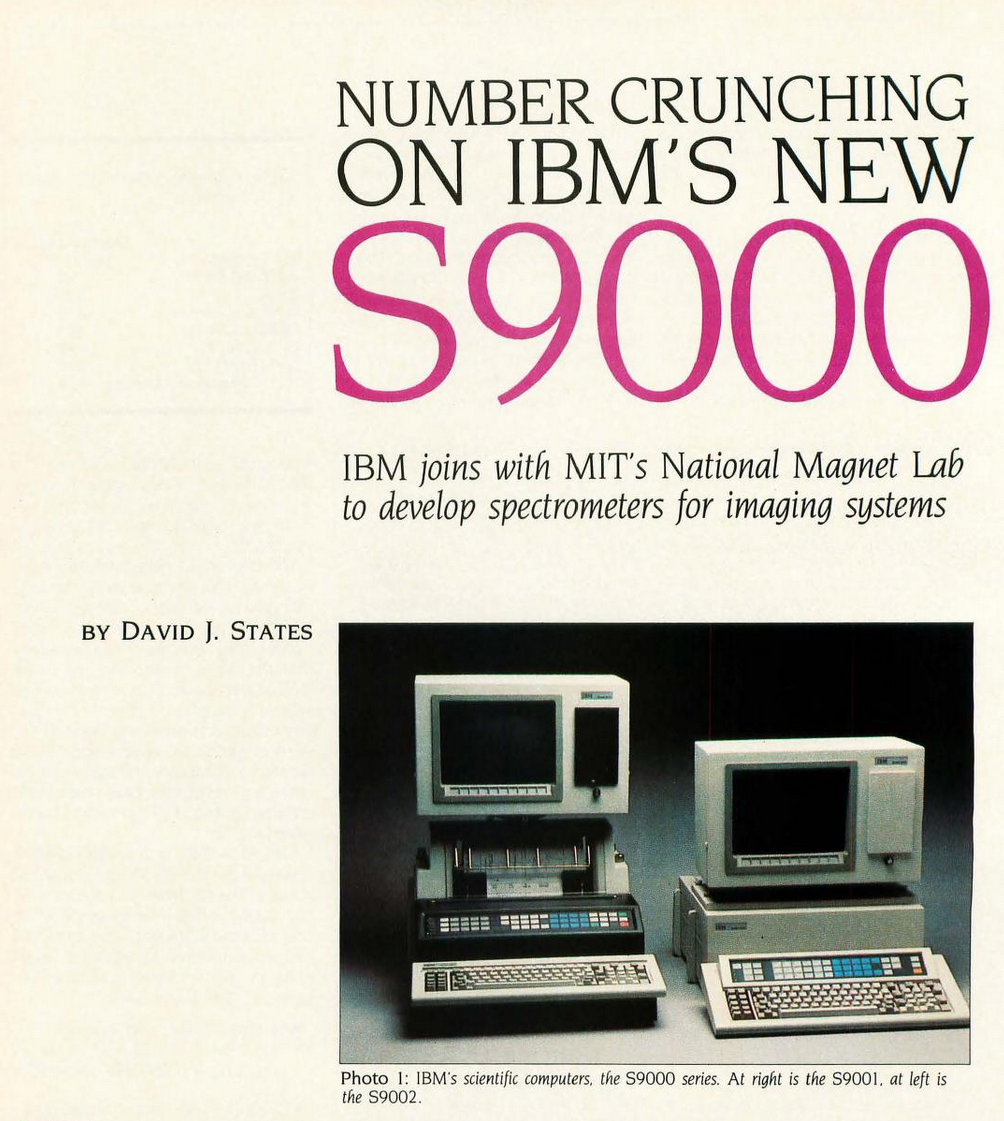

Has anyone made a good video on the obscure IBM System 9000?

It's a 68000-based architecture that can support "large amounts of RAM"; the article brags about "as much as 2 megabytes".

https://archive.org/details/byte-magazine-1984-09/page/n225/mode/2up

"ps. m88k has been the most worthwhile example, since some insane parts of that architecture permits Miod to find a MI bug weekly."

I just love that quote.

https://marc.info/?l=openbsd-misc&m=109160932504578&w=2

Multi-port programmable silicon photonics using low-loss phase change material Sb$_2$Se$_3$

Thomas W. Radford, Idris A Ajia, Latif Rozaqi, Priya Deoli, Xingzhao Yan, Mehdi Banakar, David J Thomson, Ioannis Zeimpekis, Alberto Politi, Otto L. Muskens

https://arxiv.org/abs/2511.18205 https://arxiv.org/pdf/2511.18205 https://arxiv.org/html/2511.18205

arXiv:2511.18205v1 Announce Type: new

Abstract: Reconfigurable photonic devices are rapidly emerging as a cornerstone of next generation optical technologies, with wide ranging applications in quantum simulation, neuromorphic computing, and large-scale photonic processors. A central challenge in this field is identifying an optimal platform to enable compact, efficient, and scalable reconfigurability. Optical phase-change materials (PCMs) offer a compelling solution by enabling non-volatile, reversible tuning of optical properties, compatible with a wide range of device platforms and current CMOS technologies. In particular, antimony tri-selenide ($\text{Sb}_{2}\text{Se}_{3}$) stands out for its ultra low-loss characteristics at telecommunication wavelengths and its reversible switching. In this work, we present an experimental platform capable of encoding multi-port operations onto the transmission matrix of a compact multimode interferometer architecture on standard 220 nm silicon photonics using *in-silico* designed digital patterns. The multi-port devices are clad with a thin film of $\text{Sb}_{2}\text{Se}_{3}$, which can be optically addressed using direct laser writing to provide local perturbations to the refractive index. A range of multi-port geometries from 2$\times$2 up to 5$\times$5 couplers are demonstrated, achieving simultaneous control of up to 25 matrix elements with programming accuracy of 90% relative to simulated patterns. Patterned devices remain stable with consistent optical performance across the C-band wavelengths. Our work establishes a pathway towards the development of large scale PCM-based reconfigurable multi-port devices which will allow implementing matrix operations on three orders of magnitude smaller areas than interferometer meshes.

toXiv_bot_toot
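The "transmission matrix" in the abstract above can be made concrete with a small sketch. Assuming an idealised, lossless device (a simplification; none of the paper's fabricated couplers are modelled here), an N×N multi-port maps input field amplitudes to output amplitudes by matrix multiplication, so a 5×5 coupler has 25 complex entries to program. The function names are hypothetical, and the 50:50 coupler matrix is a standard textbook example rather than a result from the paper.

```python
import math

# Hypothetical illustration, not code or data from the paper: an ideal,
# lossless 50:50 coupler is the textbook 2x2 transmission matrix
# T = (1/sqrt(2)) * [[1, i], [i, 1]].
def ideal_coupler_2x2():
    s = 1 / math.sqrt(2)
    return [[s, 1j * s],
            [1j * s, s]]


def apply_transmission(T, a_in):
    """Return output field amplitudes b = T a for an N x N matrix T."""
    n = len(T)
    return [sum(T[i][j] * a_in[j] for j in range(n)) for i in range(n)]


def port_powers(fields):
    """Power detected at each output port is |amplitude|^2."""
    return [abs(f) ** 2 for f in fields]


T = ideal_coupler_2x2()
out = apply_transmission(T, [1.0, 0.0])  # light launched into port 1 only
powers = port_powers(out)
print(powers)        # close to [0.5, 0.5]: an even split between the ports
print(sum(powers))   # close to 1.0: a lossless matrix conserves total power
print(len(T) ** 2)   # 4 here; a 5x5 coupler has 25 elements, as in the abstract
```

Programming such a device amounts to shaping the entries of T; per the abstract, the PCM approach does this by laser-writing local refractive-index perturbations into the $\text{Sb}_{2}\text{Se}_{3}$ cladding rather than by tuning a mesh of interferometers.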

Series C, Episode 12 - Death-Watch

AVON: We are short of time, Orac.

VILA: I'll say we are. It's not hanging about this time. [Screen display: 2. WAIT blinks out and is replaced by:]

[Computer display: 2. ARBITERS TAKE POSITION AND INDICATE READINESS]

https://blake.torpidity.net/m/312/544

Italian Americans and the Making of America: Design, Diaspora, and the Architecture of Belonging

https://ift.tt/18rRolG

updated: Monday, February 9, 2026 - 2:10pm

full name / name of organization: Italian American Studies…

via Input 4 RELCFP

Good Morning #Canada

February 3rd, 1916, a fire breaks out in Canada’s Parliament buildings, resulting in a total loss of the structure with only the library saved. In the midst of #WWI, German sabotage was suspected, but a later investigation found that a burning cigar in some furniture was the more likely cause. Our current Parliament building replaced the ruins and has served faithfully ever since. But approximately a year ago, the rehabilitation of Canada’s Centre Block on Parliament Hill started. Expected to cost $4.5B to $5B, it is a multi-year project targeted for completion by 2031. Crumbling mortar, outdated electrical and HVAC systems, and a weak supporting structure are all on the to-do list, along with modernizing the building.

I started watching this 2-part CPAC video last night (2 hours in total), and it's full of history and architectural details. Spoiler: there are no tips for your bathroom reno.

#CanadaIsAwesome #History

https://youtu.be/Y14ObQPGdho