2025-11-14 00:30:03

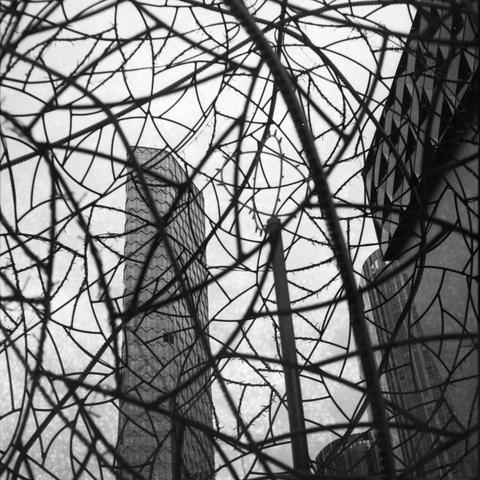

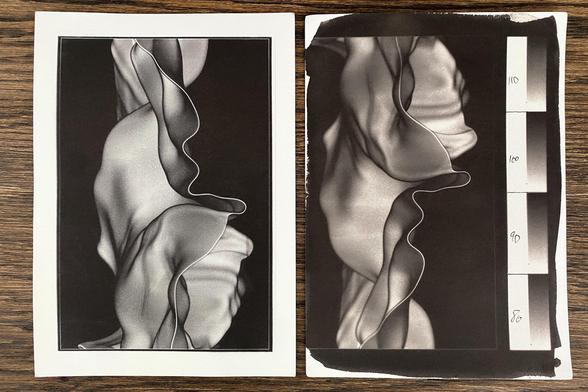

Moody Urbanity - Relations VI 🧬

情绪化城市 - 关系 VI 🧬

📷 Zeiss Ikon Super Ikonta 533/16

🎞️ Ilford HP5 400, expired 1993

#filmphotography #Photography #blackandwhite

2025-11-12 19:09:25

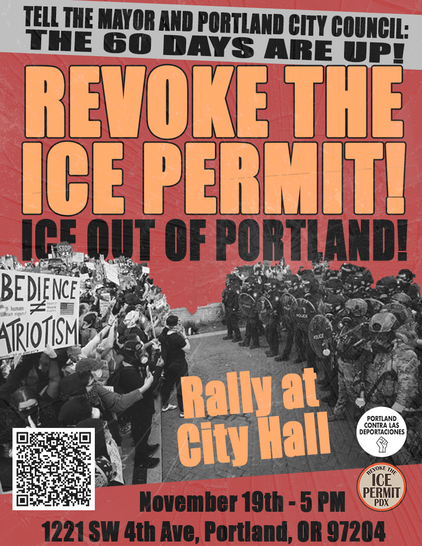

Tell Portland City Government: Revoke the ICE Permit!

This campaign was created to protect immigrants in Portland, OR, by revoking the ICE permit for the building at 4310 S Macadam Ave, Portland, OR 97239. Join us in emailing the Portland City Council, the Mayor, and the Permitting and Development Department to demand that they revoke the permit. Feel free to use the template provided, add a personal message, or both.

2026-01-10 02:42:07

The Co-Parent Diaries

Your go-to podcast for navigating life after separation, building healthy two-home families, and raising emotionally strong kids...

Great Australian Pods Podcast Directory: https://www.greataustralianpods.com/the-co-parent-diaries/

2026-01-12 20:15:24

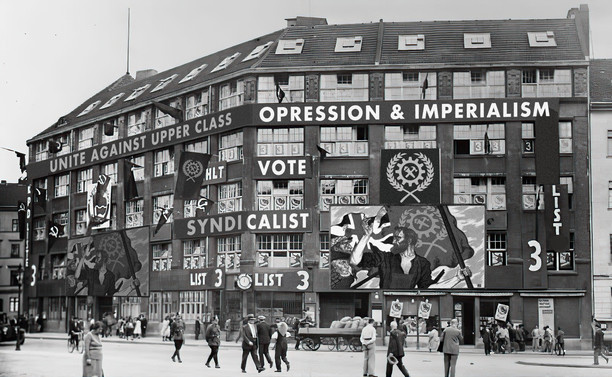

You do not vote syndicalist, you organize syndicalist, so treating it like an election choice in Kaiserreich is fundamentally silly.

Because of that, I’m buying myself Hearts of Iron IV just to install Kaiserreich and laugh at it.

Image is probably AI-generated; I grabbed it from DuckDuckGo and genuinely do not care.

#Humor

2025-11-14 09:50:00

(Adaptive) Scaled gradient methods beyond locally Hölder smoothness: Lyapunov analysis, convergence rate and complexity

Susan Ghaderi, Morteza Rahimi, Yves Moreau, Masoud Ahookhosh

https://arxiv.org/abs/2511.10425 https://arxiv.org/pdf/2511.10425 https://arxiv.org/html/2511.10425

arXiv:2511.10425v1 Announce Type: new

Abstract: This paper addresses the unconstrained minimization of smooth convex functions whose gradients are locally Hölder continuous. Building on these results, we analyze the Scaled Gradient Algorithm (SGA) under local smoothness assumptions, proving its global convergence and iteration complexity. Furthermore, under local strong convexity and the Kurdyka-Łojasiewicz (KL) inequality, we establish linear convergence rates and provide explicit complexity bounds. In particular, we show that when the gradient is locally Lipschitz continuous, SGA attains linear convergence for any KL exponent. We then introduce and analyze an adaptive variant of SGA (AdaSGA), which automatically adjusts the scaling and step-size parameters. For this method, we show global convergence, and derive local linear rates under strong convexity.


2026-01-08 10:33:55

My #AltProcess #Kallitype development/printmaking journey is already showing strong parallels to my software dev experience, i.e. a preference for avoiding monolithic frameworks and building more granular, reliable, understandable & controllable tooling myself, and get much better & mor…

2025-11-04 16:23:08

Understanding #Docker Internals: Building a Container Runtime in Python

https://muhammadraza.me/2024/building-container-runtime-python/

2025-12-09 04:04:54

2026-01-01 14:02:45

Here they are again: my thoughts and ideas on the #Jahreslosung (annual watchword) for 2026: "God says: Behold, I am making all things new." (Rev. 21:5)

https://thomas-ebinger.de/2026/01/geda

2026-01-05 00:30:02

Un Momento II 🕰️

一瞬 II 🕰️

📷 Zeiss Ikon Super Ikonta 533/16

🎞️ Lucky SHD 400

#filmphotography #Photography #blackandwhite