2025-12-04 09:00:04

board_directors: Norwegian Boards of Directors (2002-2011)

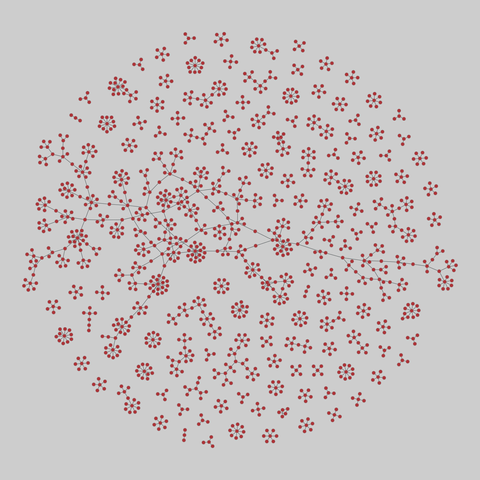

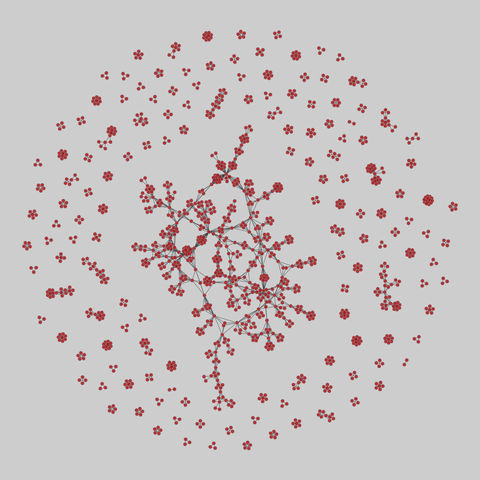

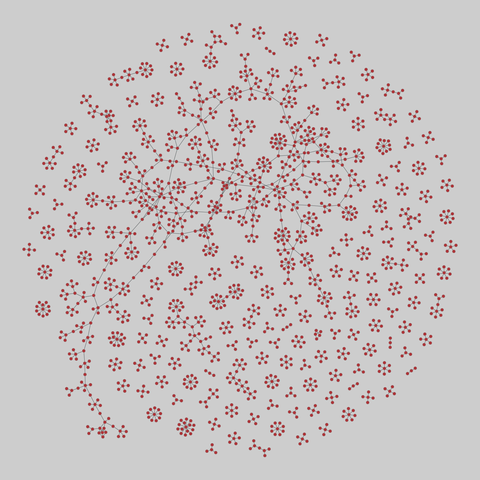

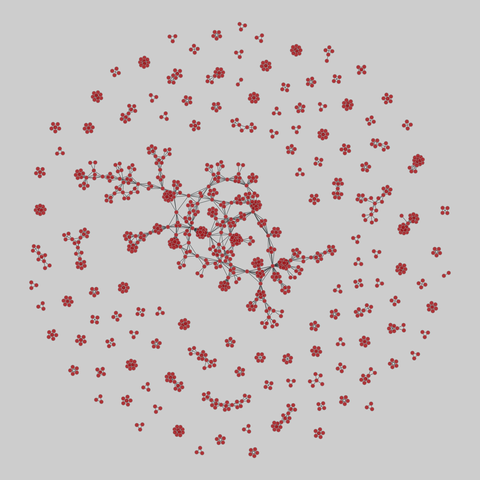

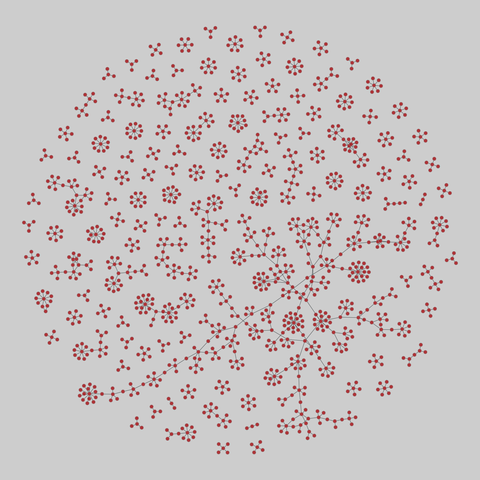

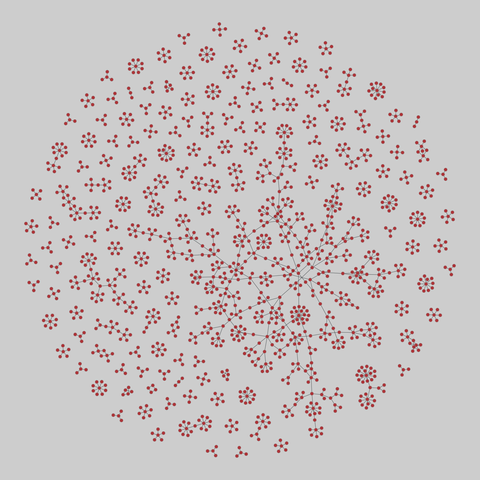

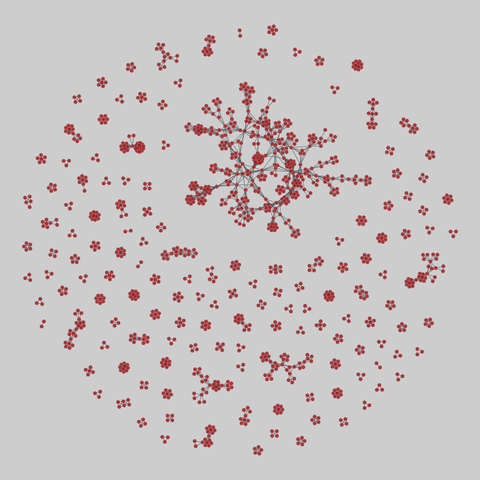

A collection of 224 networks of affiliations among board directors who sit on common boards of Norwegian public limited companies (as of 5 August 2009), recorded as monthly snapshots from May 2002 through August 2011. Some metadata is included: director and company names, the city and postal code of each company, and the gender of each director. The 'net2m' data are bipartite company-director networks, while the 'net1m' ar…
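The description ties directors to one another through the boards they share. As a minimal sketch of that idea, the snippet below takes a bipartite company-to-directors mapping and counts, for each pair of directors, how many boards they have in common. The company and director names are hypothetical toy values, not taken from the dataset, and the function `director_affiliations` is an illustrative helper; how the dataset's own files were actually constructed is not stated here.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical toy data: each company maps to the directors on its board.
# These names are placeholders, not records from the dataset.
boards = {
    "Company A": ["Director 1", "Director 2", "Director 3"],
    "Company B": ["Director 2", "Director 3"],
    "Company C": ["Director 4"],
}


def director_affiliations(boards):
    """Return {(d1, d2): shared_board_count} for every pair of directors
    who sit together on at least one board."""
    shared = defaultdict(int)
    for directors in boards.values():
        # Sort so each pair has one canonical ordering; set() drops duplicates.
        for d1, d2 in combinations(sorted(set(directors)), 2):
            shared[(d1, d2)] += 1
    return dict(shared)


edges = director_affiliations(boards)
for (d1, d2), weight in sorted(edges.items()):
    print(d1, "--", d2, "shared boards:", weight)
```

A director who shares no board with anyone (here, "Director 4") appears in the bipartite mapping but in none of the resulting director pairs.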