2025-10-14 09:01:08

On the Capacity of Distributed Quantum Storage

Hua Sun, Syed A. Jafar

https://arxiv.org/abs/2510.10568 https://arxiv.org/pdf/2510.10568

2025-12-14 00:30:01

2025-10-14 13:40:58

An Eulerian Perspective on Straight-Line Sampling

Panos Tsimpos, Youssef Marzouk

https://arxiv.org/abs/2510.11657 https://arxiv.org/pdf/2510.11657

2025-11-14 12:56:39

Replaced article(s) found for math.SG. https://arxiv.org/list/math.SG/new

[1/1]:

- Non-decomposable Lagrangian cobordisms between Legendrian knots

Roman Golovko, Daniel Komárek

https://arxiv.org/abs/2511.08731 https://mastoxiv.page/@arXiv_mathSG_bot/115541377678336506

- Spaces of Legendrian cables and Seifert fibered links

Eduardo Fernández, Hyunki Min

https://arxiv.org/abs/2310.12385 https://mastoxiv.page/@arXiv_mathGT_bot/111265563434686287

- Almost Hermitian structures on virtual moduli spaces of non-Abelian monopoles and applications to...

Paul M. N. Feehan, Thomas G. Leness

https://arxiv.org/abs/2410.13809 https://mastoxiv.page/@arXiv_mathDG_bot/113327305560976416

- Quantum cohomology, shift operators, and Coulomb branches

Ki Fung Chan, Kwokwai Chan, Chin Hang Eddie Lam

https://arxiv.org/abs/2505.23340 https://mastoxiv.page/@arXiv_mathAG_bot/114595582234065991

- One application of Duistermaat-Heckman measure in quantum information theory

Lin Zhang, Xiaohan Jiang, Bing Xie

https://arxiv.org/abs/2507.02369 https://mastoxiv.page/@arXiv_quantph_bot/114794376818737255

2026-01-14 04:54:50

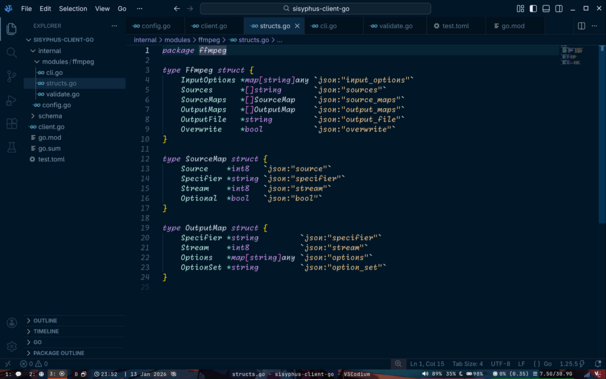

Started the official rewrite of the Sisyphus client in #golang. Currently working on getting the FFmpeg command-line tasks parsed and validated against the schema. This should make the client easier to distribute, since I can just ship static binaries.

#programming

2026-01-13 16:10:51

Scott Adams, creator of the Dilbert comic strip, syndicated to ~2K newspapers at its peak and dropped after he made racist comments in 2023, has died at 68 (Richard Sandomir/New York Times)

https://www.nytimes.com/2026/01/13/arts/scott-adams-dead.html

2025-10-14 09:49:08

Modeling the Impact of Communication and Human Uncertainties on Runway Capacity in Terminal Airspace

Yutian Pang, Andrew Kendall, John-Paul Clarke

https://arxiv.org/abs/2510.09943

2025-10-14 10:08:18

Detection of Axion Stars in Galactic Magnetic Fields

Kuldeep J. Purohit, Jitesh R. Bhatt, Subhendra Mohanty, Prashant K. Mehta

https://arxiv.org/abs/2510.11032

2025-11-14 00:30:03

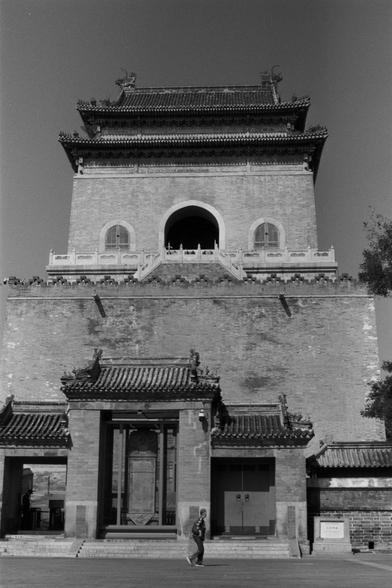

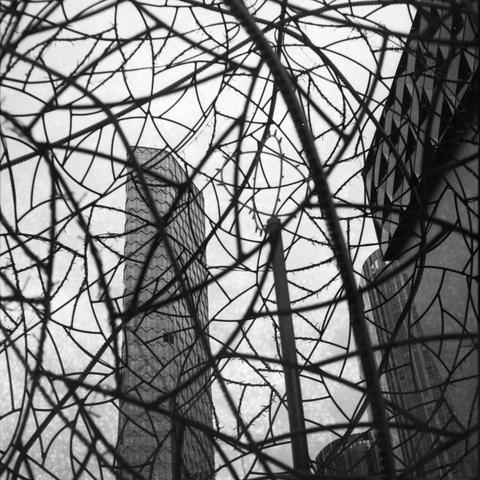

Moody Urbanity - Relations VI 🧬

📷 Zeiss IKON Super Ikonta 533/16

🎞️ Ilford HP5 400, expired 1993

#filmphotography #Photography #blackandwhite

2025-10-14 09:30:08

List Decoding Reed–Solomon Codes in the Lee, Euclidean, and Other Metrics

Chris Peikert, Alexandra Veliche Hostetler

https://arxiv.org/abs/2510.11453