2025-09-13 19:03:14

2025-11-05 15:45:09

MINORITIES NON-CHRISTIAN RELIGIONS REJECTION OF GREED ADVOCACY OF THE SOCIETAL WHOLE TRUE DEMOCRACY OMG WHAT ARE WE TO DO

#ReleasetheEpsteinfiles

✅ MAGA Melts Down Over Mamdani’s Mayoral Victory: “New York City Has Fallen” | Vanity Fair

2025-09-22 15:53:22

My new piece.

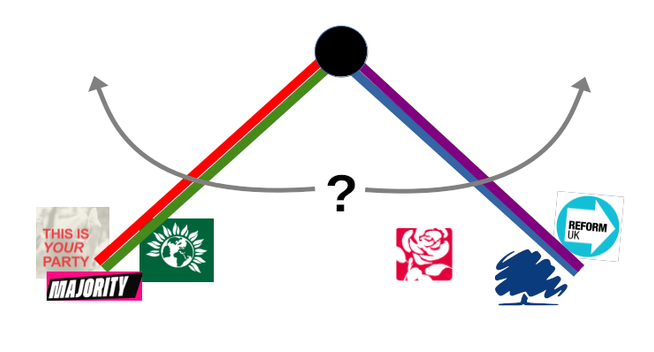

'As UK politics turns both right and left, how do we get degrowth onto the agenda?'

The problem is that neither of the two main British left alternatives (#GreenParty and nascent #YourParty) appears to be sufficiently facing up to the fundamental issue of

2025-09-10 11:45:07

Here are some key takeaways from implementing #PyPI attestations in #Gentoo:

• With OpenPGP, you need to validate the authenticity of a key. With attestations, you need to validate the authenticity of the identity (i.e. know the right GitHub repository). No problem really solved here.

• They verify that the artifact was created by the Continuous Deployment workflow of a given repository. A compromised workflow can produce valid attestations.

• They don't provide sufficient protection against PyPI being compromised. For example, you can't detect whether new releases have been hidden.

On the plus side, TOFU is easier here: we don't have to maintain hundreds of key packages, just short URLs on top of ebuilds.

Security-wise, I think PEP 740 itself summarizes it well in the "rationale and motivation" section. To paraphrase, maintainers wanted to create some signatures, and downstreams wanted to verify some signatures, so we gave them some signatures.

#security #Python
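To illustrate the "know the right GitHub repository" point above, here is a minimal sketch of a TOFU-style identity check. It assumes a provenance object shaped like the one described in PEP 740 (`attestation_bundles`, each with a `publisher`), and it assumes the GitHub publisher entry carries a `repository` field in `owner/name` form. The function names and the example repository are hypothetical. This only compares the claimed identity against a pinned URL; it does not verify any signature, which a real verifier has to do first.

```python
from urllib.parse import urlparse


def repo_slug(pinned_url: str) -> str:
    """Turn a pinned https://github.com/owner/name URL into 'owner/name'."""
    parsed = urlparse(pinned_url)
    if parsed.scheme != "https" or parsed.netloc.lower() != "github.com":
        raise ValueError(f"not a GitHub repository URL: {pinned_url!r}")
    parts = [p for p in parsed.path.split("/") if p]
    if len(parts) != 2:
        raise ValueError(f"expected owner/name in URL path: {pinned_url!r}")
    # GitHub treats owner and repository names case-insensitively.
    return "/".join(parts).lower()


def publisher_matches(provenance: dict, pinned_url: str) -> bool:
    """Return True if any bundle claims the pinned GitHub repository.

    Assumption: the 'kind' and 'repository' keys follow the layout sketched
    in the lead-in. No cryptographic verification happens here.
    """
    expected = repo_slug(pinned_url)
    for bundle in provenance.get("attestation_bundles", []):
        publisher = bundle.get("publisher") or {}
        if publisher.get("kind") != "GitHub":
            continue
        if str(publisher.get("repository", "")).lower() == expected:
            return True
    return False


# Hypothetical provenance data, for illustration only.
example_provenance = {
    "version": 1,
    "attestation_bundles": [
        {
            "publisher": {
                "kind": "GitHub",
                "repository": "example-org/example-project",
                "workflow": "release.yml",
            },
            "attestations": [],
        }
    ],
}

print(publisher_matches(example_provenance,
                        "https://github.com/example-org/example-project"))
```

The pinned URL plays the role a key fingerprint plays with OpenPGP: if it is wrong, or the workflow behind it is compromised, the check passes anyway.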

2025-09-20 20:37:33

We wanted to document what it sounds like inside a glacier.

There is sadness because you know all these sounds are disappearing right now.

Of course, melting is something natural for glaciers, but the problem is that nothing new is coming back

https://kottke.org/25/09/crying-glacier…

2025-09-25 09:40:52

On Brezis-Nirenberg problems: open questions and new results in dimension six

Fengliu Li, Giusi Vaira, Juncheng Wei, Yuanze Wu

https://arxiv.org/abs/2509.19863

2025-10-04 20:16:32

Let's be honest. I've been a strong supporter of #OpenPGP (or #PGP in general) for a long time. And I still can't think of any real alternative that exists right now. And I kept believing it's not "that hard", but it doesn't seem like it's getting any easier. The big problem with standards like that is the tools.

#WebOfTrust is hard, and impractical for a lot of people. The way many tools implement trust doesn't really help. I mean, I sometimes receive encrypted mail via #EvolutionMail, and Evolution makes it really hard for me to reply encrypted without permanently trusting the sender!

The whole SKS keyserver mess doesn't help PGP at all. Nowadays finding someone's key is often hard. If you're lucky, WKD will work. If you're not, you're in for searching a bunch of keyservers, GitHub, or perhaps random websites. And it definitely doesn't help that some of these may hold expired keys, with people uploading their new key only to a subset of them or forgetting to do it.

On top of that, we have interoperability issues. Definitely doesn't speak well when GnuPG can't import keys from popular keyservers over lack of UIDs. And that's just the tip of the iceberg.

Now with diverging OpenPGP standards around the corner, we're a step away from true interoperability problems. Just imagine convincing someone to use OpenPGP, only to tell them afterwards that they've used a non-portable tool or settings, and their key doesn't work for you.

That's really not how you advocate for #encryption.
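For reference, the WKD lookup mentioned above boils down to fetching a URL derived from the e-mail address. A minimal sketch, assuming the scheme from the OpenPGP Web Key Directory draft (SHA-1 of the lowercased local part, z-base-32 encoded, with an "advanced" and a "direct" URL form). The function names are mine, and the address is a placeholder. It only builds the URLs; it does not fetch or validate any key.

```python
import hashlib
from urllib.parse import urlencode

# z-base-32 alphabet (differs from the RFC 4648 base32 alphabet).
ZBASE32_ALPHABET = "ybndrfg8ejkmcpqxot1uwisza345h769"


def zbase32_encode(data: bytes) -> str:
    """Encode bytes as z-base-32, zero-padding the final 5-bit group."""
    bits = "".join(f"{byte:08b}" for byte in data)
    bits += "0" * (-len(bits) % 5)
    return "".join(
        ZBASE32_ALPHABET[int(bits[i:i + 5], 2)]
        for i in range(0, len(bits), 5)
    )


def ascii_lower(text: str) -> str:
    """Lowercase ASCII letters only, leaving other characters untouched."""
    return "".join(c.lower() if c.isascii() else c for c in text)


def wkd_urls(address: str) -> tuple[str, str]:
    """Return the (advanced, direct) WKD URLs for an e-mail address."""
    local, sep, domain = address.rpartition("@")
    if not sep or not local or not domain:
        raise ValueError(f"not an e-mail address: {address!r}")
    domain = domain.lower()
    digest = hashlib.sha1(ascii_lower(local).encode("utf-8")).digest()
    hashed = zbase32_encode(digest)  # 160 bits -> exactly 32 characters
    query = urlencode({"l": local})  # original local part, unhashed
    advanced = (f"https://openpgpkey.{domain}/.well-known/openpgpkey/"
                f"{domain}/hu/{hashed}?{query}")
    direct = f"https://{domain}/.well-known/openpgpkey/hu/{hashed}?{query}"
    return advanced, direct


for url in wkd_urls("Joe.Doe@Example.ORG"):
    print(url)
```

A client would try the advanced URL first and fall back to the direct one. If neither serves a key, you're back to the keyserver hunt.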

2025-08-28 04:52:36

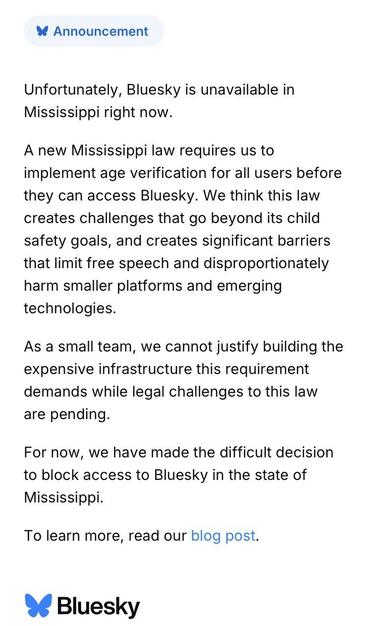

I’m not _in_ Mississippi, god damnit and thank god. I have an IP with a tn.comcast.net PTR, which comes from a /17 with an ARIN netname of “MEMPHIS9.” Your geolocation provider sucks ass, because _all_ geolocation providers suck ass, which is ultimately your problem and not mine because now I’m posting somewhere else. And fuck red states for getting us all into this censorious shitshow.

#Bluesky