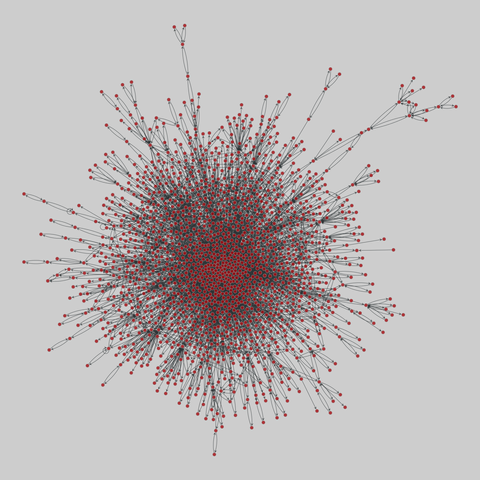

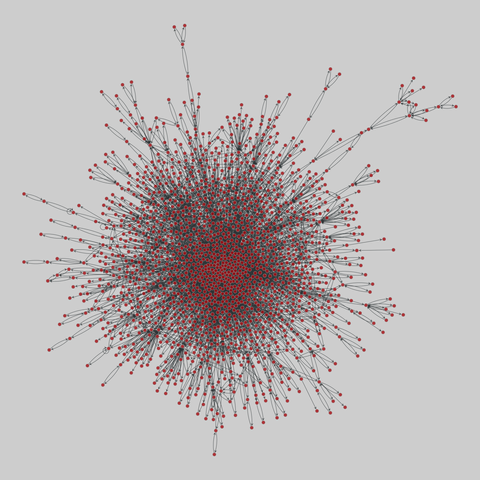

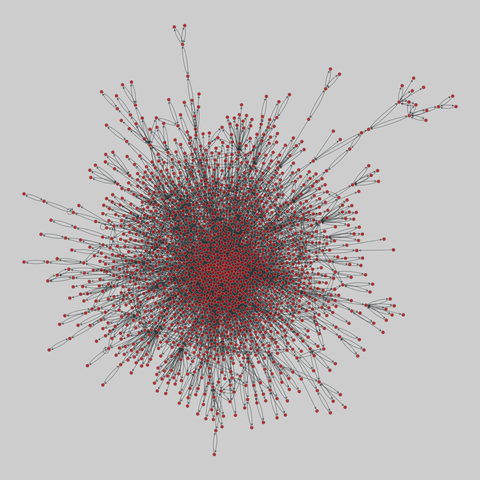

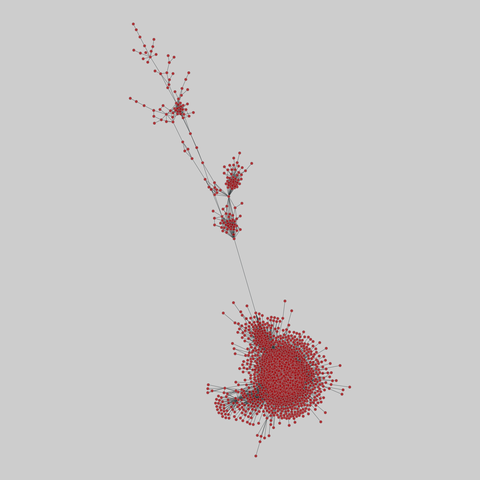

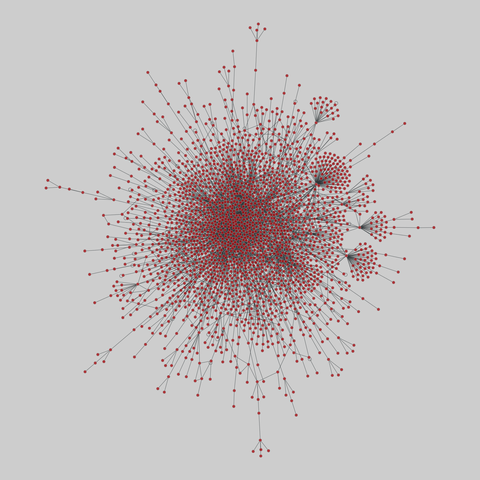

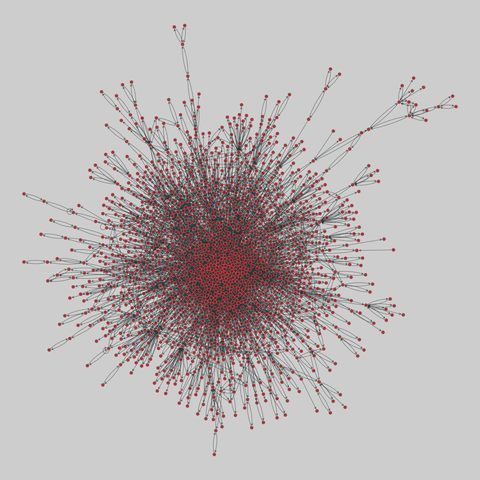

interactome_stelzl: Stelzl human interactome (2005)

A network of human proteins and their binding interactions. Nodes represent proteins and an edge represents an interaction between two proteins, as inferred via high-throughput Y2H experiments using bait and prey methodology.

This network has 1706 nodes and 6207 edges.

Tags: Biological, Protein interactions, Unweighted
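
As a quick sanity check on the figures above, the node and edge counts give the mean degree and edge density directly. This is a minimal sketch that assumes the network is treated as a simple undirected graph (no self-loops or multi-edges), which the post does not state; the function names are illustrative only.

```python
def mean_degree(num_nodes: int, num_edges: int) -> float:
    """Average number of edge endpoints per node in an undirected graph."""
    return 2 * num_edges / num_nodes


def density(num_nodes: int, num_edges: int) -> float:
    """Fraction of all possible node pairs that are joined by an edge.

    Assumes a simple undirected graph (an assumption, not stated in the post).
    """
    return 2 * num_edges / (num_nodes * (num_nodes - 1))


# Counts quoted in the post.
N, E = 1706, 6207
print(f"mean degree: {mean_degree(N, E):.2f}")
print(f"density:     {density(N, E):.4f}")
```

Each protein therefore has roughly seven interaction partners on average, while well under one percent of possible pairs are connected, which is typical of sparse interaction networks.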

Welcome to #TextModeTuesday! For the next few weeks I'll be posting some projects & experiments related to this just spontaneously made up hashtag. If you've got something related to interesting text-based art/experiments, patterns, ASCII-art, ANSI-art etc. please share — the more, the merrier...

To start with, here's an experiment from a few years ago to demonstrat…

Series A, Episode 07 - Mission To Destiny

KENDALL: My experiments show that radiation from our sun was deficient in certain specific wavelengths. The neutrotope will provide the necessary frequencies to kill the fungus.

BLAKE: And you'll mount this on a satellite to be activated by your sun?

https://blake.torpidity.net/m/1…

On Dynamic Programming Theory for Leader-Follower Stochastic Games

Jilles Steeve Dibangoye, Thibaut Le Marre, Ocan Sankur, François Schwarzentruber

https://arxiv.org/abs/2512.05667 https://arxiv.org/pdf/2512.05667 https://arxiv.org/html/2512.05667

arXiv:2512.05667v1 Announce Type: new

Abstract: Leader-follower general-sum stochastic games (LF-GSSGs) model sequential decision-making under asymmetric commitment, where a leader commits to a policy and a follower best responds, yielding a strong Stackelberg equilibrium (SSE) with leader-favourable tie-breaking. This paper introduces a dynamic programming (DP) framework that applies Bellman recursion over credible sets (state abstractions formally representing all rational follower best responses under partial leader commitments) to compute SSEs. We first prove that any LF-GSSG admits a lossless reduction to a Markov decision process (MDP) over credible sets. We further establish that synthesising an optimal memoryless deterministic leader policy is NP-hard, motivating the development of $\epsilon$-optimal DP algorithms with provable guarantees on leader exploitability. Experiments on standard mixed-motive benchmarks, including security games, resource allocation, and adversarial planning, demonstrate empirical gains in leader value and runtime scalability over state-of-the-art methods.

toXiv_bot_toot

Fine tuning the Lego spectrometer more.

Using a maglite as the source eliminates the need for a separate collimating lens.

I also switched away from the very flaky variable slit made of a sliding brick that wouldn't stay in place if you looked at it wrong.

After some failed experiments with aluminum foil, I discovered that the round tree trunk Duplo brick was a tiny bit smaller than a nominal square 2x2 brick. When placed next to a square brick and locked in place with …

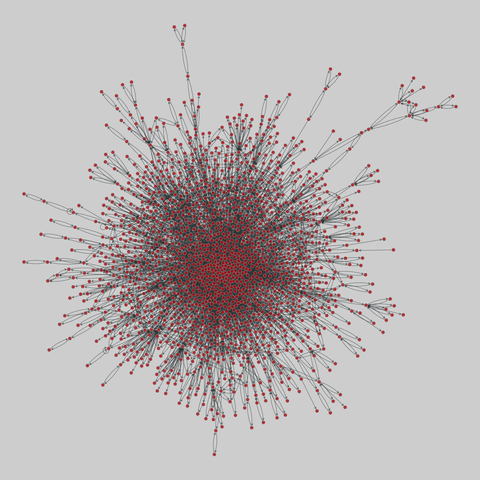

interactome_stelzl: Stelzl human interactome (2005)

A network of human proteins and their binding interactions. Nodes represent proteins and an edge represents an interaction between two proteins, as inferred via high-throughput Y2H experiments using bait and prey methodology.

This network has 1706 nodes and 6207 edges.

Tags: Biological, Protein interactions, Unweighted

Thinking of reinstalling my computer from scratch to iron out all its kinks and tardiness from years of software experiments and trials. Just need to make sure all files are backed up... as I don't want to reinstall the computer from a backup – which would defeat the purpose.

My other task is I want to turn my old Mac mini into a home server. But that needs many hours of focus... haven't done something like that before.

I think my main concern is getting tech issues ou…

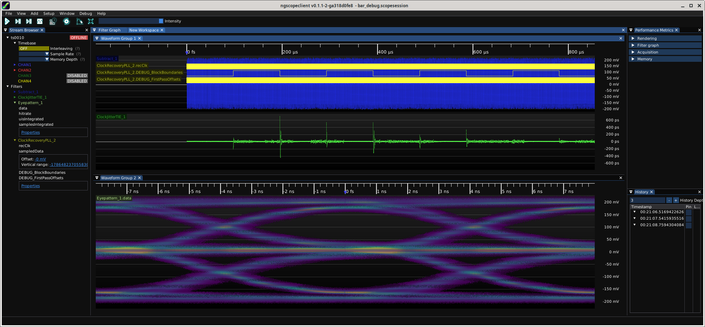

Initial experiments on a GPU-accelerated parallel CDR PLL filter.

Fundamentally, the problem is that a PLL is stateful so you can't process any given iteration of it without knowing the previous state. I'm trying to work around that by recognizing that the impulse response of the PLL loop filter tails off to effectively zero after a while, so we can truncate and samples older than that point will not materially affect the output.

What you see here is the first pass of wha…
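
The truncation idea described above can be illustrated on a much simpler stand-in. The sketch below is not the author's GPU code, and a real CDR PLL is a nonlinear feedback loop; it only assumes a hypothetical first-order linear filter `y[n] = a*y[n-1] + b*x[n]` to show why independent blocks become possible once the impulse response has decayed. Each block restarts from zero state a fixed number of `warmup` samples early, so blocks could be handed to separate GPU threads.

```python
import math
import random


def iir_sequential(x, a, b):
    """Reference: run the stateful filter over the whole input in order."""
    y, state = [], 0.0
    for sample in x:
        state = a * state + b * sample
        y.append(state)
    return y


def iir_block(x, start, end, a, b, warmup):
    """Compute outputs for x[start:end] without knowing the previous state.

    The state is rebuilt from zero using only the `warmup` samples before
    the block; older samples are ignored (the truncation).
    """
    state = 0.0
    for n in range(max(0, start - warmup), start):
        state = a * state + b * x[n]
    out = []
    for n in range(start, end):
        state = a * state + b * x[n]
        out.append(state)
    return out


def iir_blockwise(x, a, b, block_size, warmup):
    """Process every block independently; each call could run in parallel."""
    y = []
    for start in range(0, len(x), block_size):
        end = min(start + block_size, len(x))
        y.extend(iir_block(x, start, end, a, b, warmup))
    return y


random.seed(0)
x = [random.uniform(-1.0, 1.0) for _ in range(4096)]
a, b = 0.9, 0.1  # hypothetical filter coefficients
# Pick the warm-up length so the impulse response tail a**warmup is below 1e-9.
warmup = math.ceil(math.log(1e-9) / math.log(a))

reference = iir_sequential(x, a, b)
parallel = iir_blockwise(x, a, b, block_size=256, warmup=warmup)
max_err = max(abs(r - p) for r, p in zip(reference, parallel))
print(f"warmup = {warmup} samples, max error = {max_err:.3e}")
```

The cost of this approach is redundant work: every block recomputes its warm-up samples, so the block size should be large relative to the truncation length for the parallel version to pay off.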

"an astonishing wave of anti-Indian animus ... is fostered, in barely cloaked forms, by top Republican officials who accuse Indians of stealing American jobs."

Opinion | One of America’s Most Successful Experiments Is Coming to a Shuddering Halt - The New York Times

https://archive.ph/454nr#selection-763.0-765.459

#NowPlaying What’s old is new again. This 1989 vinyl LP can now be yours on cassette. When will they reissue The Judas & Natasha Experiments? https://tribetapes.bandcamp.com/album/drugs-are-n…

The youtube channel "Lensevision" has some nifty experiments with various homemade lens filters. All youtube shorts unfortunately but here's a really cool one with a rotating slit.

https://youtube.com/shorts/hgjK57kYGks?si=LUNmfez4rm-El25x

Cursor's recent experiment involved running hundreds of AI agents for nearly a week to build a web browser, writing 1M lines of code across 1,000 files (Simon Willison/Simon Willison's Weblog)

https://simonwillison.net/2026/Jan/19/scaling-long-running-a…

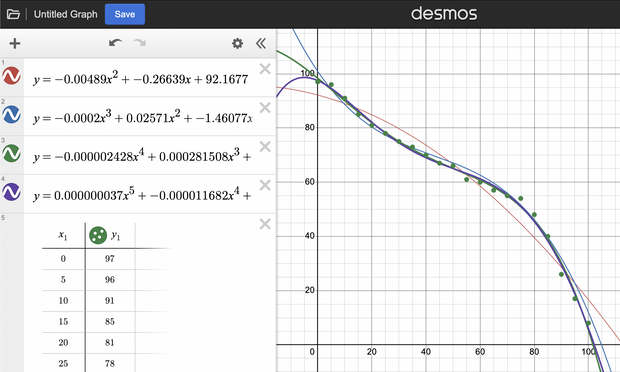

Researching DIY solutions for preparing digital negatives for my salt/kallitype printing experiments. Working on a little curve fitting tool/library (of course using https://thi.ng/umbrella) for computing tone mapping curves derived from sample swatches (here 5% steps of gray/density). The screenshot shows 2nd - 5th d…
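
A rough sketch of this kind of curve fitting, under loud assumptions: the post is cut off before describing the curves, so polynomial least-squares fits of degree 2 to 5 are assumed here purely for illustration, the swatch "measurements" are synthetic (a made-up power curve), and the code uses plain Python rather than thi.ng/umbrella.

```python
def polyfit(xs, ys, degree):
    """Least-squares polynomial coefficients, lowest power first."""
    n = degree + 1
    # Normal equations (A^T A) c = A^T y for the Vandermonde matrix A.
    ata = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    aty = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(ata[r][col]))
        ata[col], ata[pivot] = ata[pivot], ata[col]
        aty[col], aty[pivot] = aty[pivot], aty[col]
        for row in range(col + 1, n):
            factor = ata[row][col] / ata[col][col]
            for k in range(col, n):
                ata[row][k] -= factor * ata[col][k]
            aty[row] -= factor * aty[col]
    # Back substitution.
    coeffs = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(ata[i][k] * coeffs[k] for k in range(i + 1, n))
        coeffs[i] = (aty[i] - tail) / ata[i][i]
    return coeffs


def polyval(coeffs, x):
    """Evaluate the polynomial with Horner's rule."""
    result = 0.0
    for c in reversed(coeffs):
        result = result * x + c
    return result


def rms_error(coeffs, xs, ys):
    """Root-mean-square residual of the fit at the sample points."""
    total = sum((polyval(coeffs, x) - y) ** 2 for x, y in zip(xs, ys))
    return (total / len(xs)) ** 0.5


# 21 swatches in 5% steps; the measured values are synthetic placeholders.
targets = [i / 20 for i in range(21)]
measured = [t ** 1.8 for t in targets]

errors = {}
for degree in range(2, 6):
    coeffs = polyfit(targets, measured, degree)
    errors[degree] = rms_error(coeffs, targets, measured)
    print(f"degree {degree}: RMS error {errors[degree]:.2e}")
```

Solving the normal equations directly is fine for low degrees on a unit interval; for higher degrees an orthogonal basis or a QR-based solver would be the more robust choice.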

Genus-0 Surface Parameterization using Spherical Beltrami Differentials

Zhehao Xu, Lok Ming Lui

https://arxiv.org/abs/2602.01589 https://arxiv.org/pdf/2602.01589 https://arxiv.org/html/2602.01589

arXiv:2602.01589v1 Announce Type: new

Abstract: Spherical surface parameterization is a fundamental tool in geometry processing and imaging science. For a genus-0 closed surface, many efficient algorithms can map the surface to the sphere; consequently, a broad class of task-driven genus-0 mapping problems can be reduced to constructing a high-quality spherical self-map. However, existing approaches often face a trade-off between satisfying task objectives (e.g., landmark or feature alignment), maintaining bijectivity, and controlling geometric distortion. We introduce the Spherical Beltrami Differential (SBD), a two-chart representation of quasiconformal self-maps of the sphere, and establish its correspondence with spherical homeomorphisms up to conformal automorphisms. Building on the Spectral Beltrami Network (SBN), we propose a neural optimization framework BOOST that optimizes two Beltrami fields on hemispherical stereographic charts and enforces global consistency through explicit seam-aware constraints. Experiments on large-deformation landmark matching and intensity-based spherical registration demonstrate the effectiveness of our proposed framework. We further apply the method to brain cortical surface registration, aligning sulcal landmarks and jointly matching cortical sulci depth maps, showing improved task fidelity with controlled distortion and robust bijective behavior.

RE: https://graphics.social/@metin/115609395003766024

The claim "Evidence suggests early developing human brains are preconfigured with instructions for understanding the world" is not substantiated and impossible to base on experiments with brain …

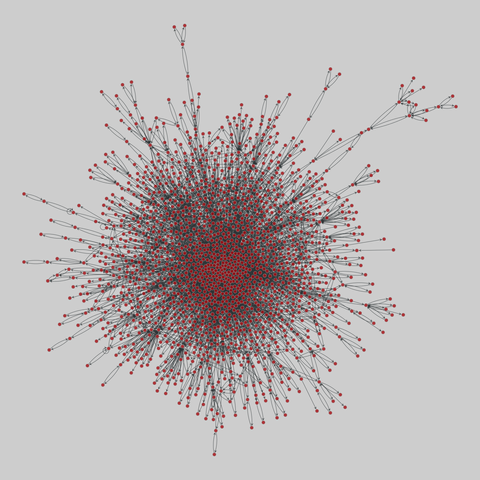

interactome_stelzl: Stelzl human interactome (2005)

A network of human proteins and their binding interactions. Nodes represent proteins and an edge represents an interaction between two proteins, as inferred via high-throughput Y2H experiments using bait and prey methodology.

This network has 1706 nodes and 6207 edges.

Tags: Biological, Protein interactions, Unweighted

Scattering in Time-Varying Drude-Lorentz Models

Bryce Dixon, Calvin M. Hooper, Ian R. Hooper, Simon A. R. Horsley

https://arxiv.org/abs/2511.19322 https://arxiv.org/pdf/2511.19322 https://arxiv.org/html/2511.19322

arXiv:2511.19322v1 Announce Type: new

Abstract: Motivated by recent experiments, the theoretical study of wave propagation in time varying materials is of current interest. Although significant in nearly all such experiments, material dispersion is commonly neglected in theoretical studies. Yet, as we show here, understanding the precise microscopic model for the material dispersion is crucial for predicting experimental outcomes. Here we study the temporal scattering coefficients of four different time-varying Drude-Lorentz models, exploring how an incident continuous wave splits into forward and backward waves due to an abrupt change in plasma frequency. The differences in the predicted scattering are unique to time-varying media, and arise from the exact way in which the time variation appears in the various model parameters. We verify our results using a custom finite difference time domain algorithm, concluding with a discussion of the limitations that arise from using these models with an abrupt change in plasma frequency.

A plant that looks like a fungus,

lives like a parasite,

and clones itself in the dark

—Balanophora may be one of evolution’s strangest experiments.

At the foot of moss-covered trees in the mountains of Taiwan and mainland Japan,

as well as within the subtropical forests of Okinawa,

an unusual organism emerges from the forest floor.

It is often mistaken for a mushroom,

yet it is actually a rare flowering plant that produces some of the smallest…

I’m excited to see the return of Steam Machine.

With my experiments of using the Linux Desktop mode with the Steamdeck … this absolutely puts a full Linux server in a lot of homes.

A good spot for a PDS, Tailscale, and a bunch of other things.

https://bsky.app/profile/jaypeters.net

celegans_interactomes: C. elegans interactomes (2009)

Ten networks of protein-protein interactions in Caenorhabditis elegans (nematode), from yeast two-hybrid experiments, biological process maps, literature curation, orthologous interactions, and genetic interactions. The WI8 network combines WI2004, WI2007 and BPmaps, while the Integrated Network combines data from all sources.

This network has 2436 nodes and 136930 edges.

Tags: Biological, Protein interactions, Unweighte…

Oh oh, I haven't done anything here for a really long time—but health comes first.

And to make matters worse, I lost a very, very good friend more than a week ago, who was taken by the damn MRSA germ... Rest in peace, my friend, you will never be forgotten! 😢

But during my own convalescence, I had enough time to return to my roots with Linux and switch my systems back to the tried and tested, beloved, and familiar. And I'm now leaving the time of experiments and trials …

Screen, Match, and Cache: A Training-Free Causality-Consistent Reference Frame Framework for Human Animation

Jianan Wang, Nailei Hei, Li He, Huanzhen Wang, Aoxing Li, Haofen Wang, Yan Wang, Wenqiang Zhang

https://arxiv.org/abs/2601.22160 https://arxiv.org/pdf/2601.22160 https://arxiv.org/html/2601.22160

arXiv:2601.22160v1 Announce Type: new

Abstract: Human animation aims to generate temporally coherent and visually consistent videos over long sequences, yet modeling long-range dependencies while preserving frame quality remains challenging. Inspired by the human ability to leverage past observations for interpreting ongoing actions, we propose FrameCache, a training-free three-stage framework consisting of Screen, Cache, and Match. In the Screen stage, a multi-dimensional, quality-aware mechanism with adaptive thresholds dynamically selects informative frames; the Cache stage maintains a reference pool using a dynamic replacement-hit strategy, preserving both diversity and relevance; and the Match stage extracts behavioral features to perform motion-consistent reference matching for coherent animation guidance. Extensive experiments on standard benchmarks demonstrate that FrameCache consistently improves temporal coherence and visual stability while integrating seamlessly with diverse baselines. Despite these encouraging results, further analysis reveals that its effectiveness depends on baseline temporal reasoning and real-synthetic consistency, motivating future work on compatibility conditions and adaptive cache mechanisms. Code will be made publicly available.

Development of a projectile charge state analyzer and 10 kV bipolar power supply for MeV energy ion - atom/molecule collision experiments

Sandeep Bajrangi Bari, Sahan Raghava Sykam, Ranojit Das, Rohit Tyagi, Aditya H. Kelkar

https://arxiv.org/abs/2511.18707

Medra, which programs robots with AI to conduct and improve biological experiments, raised $52M led by Human Capital, taking its total funding to $63M (Agnee Ghosh/Bloomberg)

https://www.bloomberg.com/news/articles/2025-12-11/…

"But they stop short of examining the forces that may be actually driving the minerals agenda: tech billionaires like Peter Thiel and Elon Musk, who see Greenland not just as a source of rare earths, but as a laboratory for their libertarian economic and social experiments. These tech-billionaires envision unregulated “freedom cities” in Greenland, free from democratic oversight, environmental laws, and labor protections."

Convergence Guarantees for Federated SARSA with Local Training and Heterogeneous Agents

Paul Mangold, Eloïse Berthier, Eric Moulines

https://arxiv.org/abs/2512.17688 https://arxiv.org/pdf/2512.17688 https://arxiv.org/html/2512.17688

arXiv:2512.17688v1 Announce Type: new

Abstract: We present a novel theoretical analysis of Federated SARSA (FedSARSA) with linear function approximation and local training. We establish convergence guarantees for FedSARSA in the presence of heterogeneity, both in local transitions and rewards, providing the first sample and communication complexity bounds in this setting. At the core of our analysis is a new, exact multi-step error expansion for single-agent SARSA, which is of independent interest. Our analysis precisely quantifies the impact of heterogeneity, demonstrating the convergence of FedSARSA with multiple local updates. Crucially, we show that FedSARSA achieves linear speed-up with respect to the number of agents, up to higher-order terms due to Markovian sampling. Numerical experiments support our theoretical findings.
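
To make the setting concrete, here is a toy sketch of federated SARSA with linear function approximation and local training. It is not the paper's algorithm or analysis: the two-state environment, the one-hot features, the step size, the epsilon-greedy policy, and the plain server-side averaging are all assumptions chosen for illustration. Heterogeneity is modelled only as a per-agent reward shift.

```python
import random

N_STATES, N_ACTIONS = 2, 2
DIM = N_STATES * N_ACTIONS


def features(state, action):
    """One-hot feature vector for a (state, action) pair (illustrative choice)."""
    phi = [0.0] * DIM
    phi[state * N_ACTIONS + action] = 1.0
    return phi


def q_value(w, state, action):
    """Linear action-value estimate: dot product of weights and features."""
    return sum(wi * pi for wi, pi in zip(w, features(state, action)))


def epsilon_greedy(w, state, rng, eps=0.1):
    if rng.random() < eps:
        return rng.randrange(N_ACTIONS)
    return max(range(N_ACTIONS), key=lambda a: q_value(w, state, a))


def step(state, action, reward_shift, rng):
    """Hypothetical environment: action 1 usually flips the state;
    landing in state 1 pays 1, plus an agent-specific reward shift."""
    flip_prob = 0.8 if action == 1 else 0.2
    next_state = 1 - state if rng.random() < flip_prob else state
    reward = (1.0 if next_state == 1 else 0.0) + reward_shift
    return next_state, reward


def local_sarsa(w, state, reward_shift, rng, steps, alpha=0.1, gamma=0.9):
    """Run `steps` on-policy SARSA updates starting from the shared weights."""
    w = list(w)
    action = epsilon_greedy(w, state, rng)
    for _ in range(steps):
        next_state, reward = step(state, action, reward_shift, rng)
        next_action = epsilon_greedy(w, next_state, rng)
        td_error = (reward + gamma * q_value(w, next_state, next_action)
                    - q_value(w, state, action))
        phi = features(state, action)
        w = [wi + alpha * td_error * pi for wi, pi in zip(w, phi)]
        state, action = next_state, next_action
    return w, state


def fed_sarsa(reward_shifts, rounds=200, local_steps=10, seed=0):
    """Alternate local training on each agent with server-side averaging."""
    rng = random.Random(seed)
    w_global = [0.0] * DIM
    states = [0] * len(reward_shifts)
    for _ in range(rounds):
        local_weights = []
        for i, shift in enumerate(reward_shifts):
            w_i, states[i] = local_sarsa(w_global, states[i], shift, rng, local_steps)
            local_weights.append(w_i)
        # Communication round: average the agents' weight vectors.
        w_global = [sum(col) / len(local_weights) for col in zip(*local_weights)]
    return w_global


w = fed_sarsa(reward_shifts=[-0.1, 0.0, 0.1])
print([round(v, 3) for v in w])
```

Raising `local_steps` reduces how often agents communicate but lets their weights drift apart between averaging rounds, which is the trade-off that communication-complexity bounds for this kind of method are concerned with.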

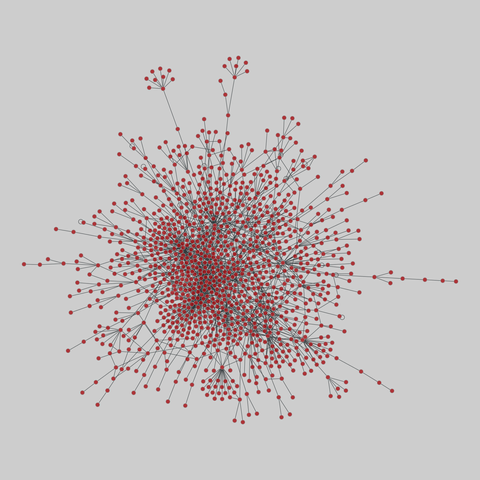

celegans_interactomes: C. elegans interactomes (2009)

Ten networks of protein-protein interactions in Caenorhabditis elegans (nematode), from yeast two-hybrid experiments, biological process maps, literature curation, orthologous interactions, and genetic interactions. The WI8 network combines WI2004, WI2007 and BPmaps, while the Integrated Network combines data from all sources.

This network has 1237 nodes and 1735 edges.

Tags: Biological, Protein interactions, Unweighted…

EAG-PT: Emission-Aware Gaussians and Path Tracing for Indoor Scene Reconstruction and Editing

Xijie Yang, Mulin Yu, Changjian Jiang, Kerui Ren, Tao Lu, Jiangmiao Pang, Dahua Lin, Bo Dai, Linning Xu

https://arxiv.org/abs/2601.23065 https://arxiv.org/pdf/2601.23065 https://arxiv.org/html/2601.23065

arXiv:2601.23065v1 Announce Type: new

Abstract: Recent reconstruction methods based on radiance field such as NeRF and 3DGS reproduce indoor scenes with high visual fidelity, but break down under scene editing due to baked illumination and the lack of explicit light transport. In contrast, physically based inverse rendering relies on mesh representations and path tracing, which enforce correct light transport but place strong requirements on geometric fidelity, becoming a practical bottleneck for real indoor scenes. In this work, we propose Emission-Aware Gaussians and Path Tracing (EAG-PT), aiming for physically based light transport with a unified 2D Gaussian representation. Our design is based on three cores: (1) using 2D Gaussians as a unified scene representation and transport-friendly geometry proxy that avoids reconstructed mesh, (2) explicitly separating emissive and non-emissive components during reconstruction for further scene editing, and (3) decoupling reconstruction from final rendering by using efficient single-bounce optimization and high-quality multi-bounce path tracing after scene editing. Experiments on synthetic and real indoor scenes show that EAG-PT produces more natural and physically consistent renders after editing than radiant scene reconstructions, while preserving finer geometric detail and avoiding mesh-induced artifacts compared to mesh-based inverse path tracing. These results suggest promising directions for future use in interior design, XR content creation, and embodied AI.

For #TextureTuesday some more WIP snapshots of STRATA, a generative system I've been on/off working on since 2014 (in Clojure/TypeScript/Zig, originally for the cover design of HOLO magazine), loosely based on 1950s research/experiments by Barricelli, somewhat related to cellular automata and extended to use a different and much larger set of "reproduction/collision rules" f…

Singapore-based ChemLex raised a $45M funding round led by Granite Asia to build an AI-powered, automated chemistry lab to accelerate drug discovery (Rachyl Jones/Semafor)

https://www.semafor.com/article/12/12/2025/singapore-startup-b…

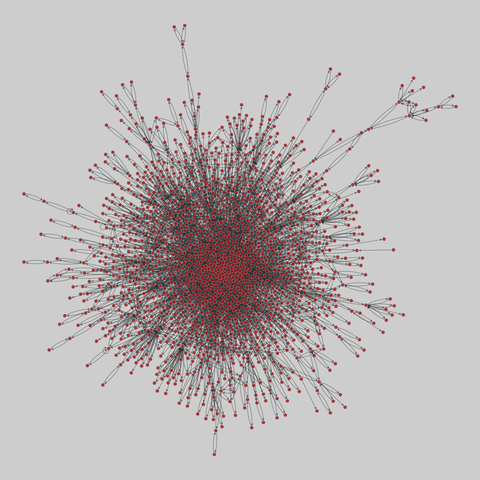

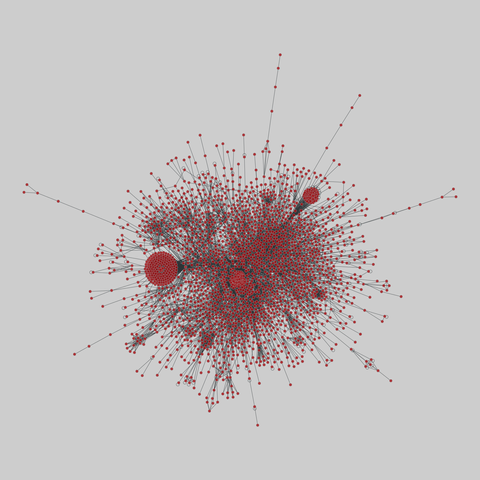

interactome_stelzl: Stelzl human interactome (2005)

A network of human proteins and their binding interactions. Nodes represent proteins and an edge represents an interaction between two proteins, as inferred via high-throughput Y2H experiments using bait and prey methodology.

This network has 1706 nodes and 6207 edges.

Tags: Biological, Protein interactions, Unweighted

dHPR: A Distributed Halpern Peaceman–Rachford Method for Non-smooth Distributed Optimization Problems

Zhangcheng Feng, Defeng Sun, Yancheng Yuan, Guojun Zhang

https://arxiv.org/abs/2511.10069 https://arxiv.org/pdf/2511.10069 https://arxiv.org/html/2511.10069

arXiv:2511.10069v1 Announce Type: new

Abstract: This paper introduces the distributed Halpern Peaceman–Rachford (dHPR) method, an efficient algorithm for solving distributed convex composite optimization problems with non-smooth objectives, which achieves a non-ergodic $O(1/k)$ iteration complexity regarding the Karush–Kuhn–Tucker residual. By leveraging the symmetric Gauss–Seidel decomposition, the dHPR effectively decouples the linear operators in the objective functions and consensus constraints while maintaining parallelizability and avoiding additional large proximal terms, leading to a decentralized implementation with provably fast convergence. The superior performance of dHPR is demonstrated through comprehensive numerical experiments on distributed LASSO, group LASSO, and $L_1$-regularized logistic regression problems.

Manipulation of the orbital angular momentum of soft x-ray beams by consecutive diffractive optics

Nazir Khan, Rahul Jangid, Taras Stanislavchuk, Aaron Stein, Oleg Chubar, Andi Barbour, Andrei Sirenko, Valery Kiryukhin, Claudio Mazzoli

https://arxiv.org/abs/2511.17768 https://arxiv.org/pdf/2511.17768 https://arxiv.org/html/2511.17768

arXiv:2511.17768v1 Announce Type: new

Abstract: Production and manipulation of orbital angular momentum (OAM) of coherent soft x-ray beams is demonstrated utilizing consecutive diffractive optics. OAM addition is observed upon passing the beam through consecutive fork gratings. The OAM of the beam was found to be decoupled from its spin angular momentum (SAM). Practical implementation of angular momentum control by consecutive devices in the x-ray regime opens new experimental opportunities, such as direct measurement of OAM beams without resorting to phase-sensitive techniques, including holography. OAM analyzers utilizing fork gratings can be used to characterize the beams produced by synchrotron and free-electron laser sources; they can also be used in scattering experiments.

NeuroSketch: An Effective Framework for Neural Decoding via Systematic Architectural Optimization

Gaorui Zhang, Zhizhang Yuan, Jialan Yang, Junru Chen, Li Meng, Yang Yang

https://arxiv.org/abs/2512.09524 https://arxiv.org/pdf/2512.09524 https://arxiv.org/html/2512.09524

arXiv:2512.09524v1 Announce Type: new

Abstract: Neural decoding, a critical component of Brain-Computer Interface (BCI), has recently attracted increasing research interest. Previous research has focused on leveraging signal processing and deep learning methods to enhance neural decoding performance. However, the in-depth exploration of model architectures remains underexplored, despite its proven effectiveness in other tasks such as energy forecasting and image classification. In this study, we propose NeuroSketch, an effective framework for neural decoding via systematic architecture optimization. Starting with the basic architecture study, we find that CNN-2D outperforms other architectures in neural decoding tasks and explore its effectiveness from temporal and spatial perspectives. Building on this, we optimize the architecture from macro- to micro-level, achieving improvements in performance at each step. The exploration process and model validations take over 5,000 experiments spanning three distinct modalities (visual, auditory, and speech), three types of brain signals (EEG, SEEG, and ECoG), and eight diverse decoding tasks. Experimental results indicate that NeuroSketch achieves state-of-the-art (SOTA) performance across all evaluated datasets, positioning it as a powerful tool for neural decoding. Our code and scripts are available at https://github.com/Galaxy-Dawn/NeuroSketch.

Synthesis of a high intensity, superthermal muonium beam for gravity and laser spectroscopy experiments

Jesse Zhang, Aldo Antognini, Marek Bartkowiak, Klaus Kirch, Andreas Knecht, Damian Goeldi, David Taqqu, Robert Waddy, Frederik Wauters, Paul Wegmann, Anna Soter

https://arxiv.org/abs/2512.19923 …

Regularized Random Fourier Features and Finite Element Reconstruction for Operator Learning in Sobolev Space

Xinyue Yu, Hayden Schaeffer

https://arxiv.org/abs/2512.17884 https://arxiv.org/pdf/2512.17884 https://arxiv.org/html/2512.17884

arXiv:2512.17884v1 Announce Type: new

Abstract: Operator learning is a data-driven approximation of mappings between infinite-dimensional function spaces, such as the solution operators of partial differential equations. Kernel-based operator learning can offer accurate, theoretically justified approximations that require less training than standard methods. However, they can become computationally prohibitive for large training sets and can be sensitive to noise. We propose a regularized random Fourier feature (RRFF) approach, coupled with a finite element reconstruction map (RRFF-FEM), for learning operators from noisy data. The method uses random features drawn from multivariate Student's $t$ distributions, together with frequency-weighted Tikhonov regularization that suppresses high-frequency noise. We establish high-probability bounds on the extreme singular values of the associated random feature matrix and show that when the number of features $N$ scales like $m \log m$ with the number of training samples $m$, the system is well-conditioned, which yields estimation and generalization guarantees. Detailed numerical experiments on benchmark PDE problems, including advection, Burgers', Darcy flow, Helmholtz, Navier-Stokes, and structural mechanics, demonstrate that RRFF and RRFF-FEM are robust to noise and achieve improved performance with reduced training time compared to the unregularized random feature model, while maintaining competitive accuracy relative to kernel and neural operator tests.

celegans_interactomes: C. elegans interactomes (2009)

Ten networks of protein-protein interactions in Caenorhabditis elegans (nematode), from yeast two-hybrid experiments, biological process maps, literature curation, orthologous interactions, and genetic interactions. The WI8 network combines WI2004, WI2007 and BPmaps, while the Integrated Network combines data from all sources.

This network has 2528 nodes and 3864 edges.

Tags: Biological, Protein interactions, Unweighted…

An inexact semismooth Newton-Krylov method for semilinear elliptic optimal control problem

Shiqi Chen, Xuesong Chen

https://arxiv.org/abs/2511.10058 https://arxiv.org/pdf/2511.10058 https://arxiv.org/html/2511.10058

arXiv:2511.10058v1 Announce Type: new

Abstract: An inexact semismooth Newton method is proposed in this paper for solving semilinear elliptic optimal control problems. This method incorporates the generalized minimal residual (GMRES) method, a type of Krylov subspace method, to solve the Newton equations and utilizes a nonmonotonic line search to adjust the iteration step size. The original problem is reformulated into a nonlinear equation through variational inequality principles and discretized using a second-order finite difference scheme. By leveraging slanting differentiability, the algorithm constructs semismooth Newton directions and employs the GMRES method to inexactly solve the Newton equations, significantly reducing computational overhead. A dynamic nonmonotonic line search strategy is introduced to adjust step sizes adaptively, ensuring global convergence while overcoming local stagnation. Theoretical analysis demonstrates that the algorithm achieves superlinear convergence near optimal solutions when the residual control parameter $\eta_k$ approaches 0. Numerical experiments validate the method's accuracy and efficiency in solving semilinear elliptic optimal control problems, corroborating theoretical insights.

LoD-Structured 3D Gaussian Splatting for Streaming Video Reconstruction

Xinhui Liu, Can Wang, Lei Liu, Zhenghao Chen, Wei Jiang, Wei Wang, Dong Xu

https://arxiv.org/abs/2601.18475 https://arxiv.org/pdf/2601.18475 https://arxiv.org/html/2601.18475

arXiv:2601.18475v1 Announce Type: new

Abstract: Free-Viewpoint Video (FVV) reconstruction enables photorealistic and interactive 3D scene visualization; however, real-time streaming is often bottlenecked by sparse-view inputs, prohibitive training costs, and bandwidth constraints. While recent 3D Gaussian Splatting (3DGS) has advanced FVV due to its superior rendering speed, Streaming Free-Viewpoint Video (SFVV) introduces additional demands for rapid optimization, high-fidelity reconstruction under sparse constraints, and minimal storage footprints. To bridge this gap, we propose StreamLoD-GS, an LoD-based Gaussian Splatting framework designed specifically for SFVV. Our approach integrates three core innovations: 1) an Anchor- and Octree-based LoD-structured 3DGS with a hierarchical Gaussian dropout technique to ensure efficient and stable optimization while maintaining high-quality rendering; 2) a GMM-based motion partitioning mechanism that separates dynamic and static content, refining dynamic regions while preserving background stability; and 3) a quantized residual refinement framework that significantly reduces storage requirements without compromising visual fidelity. Extensive experiments demonstrate that StreamLoD-GS achieves competitive or state-of-the-art performance in terms of quality, efficiency, and storage.

Now that Musk has offloaded Twitter and his AI experiments to SpaceX, he can be sure the government will bail it out when the AI bubble pops. The government's defense industry depends upon SpaceX for access to space.

This is probably the main reason for the "sale" transferring money from one of Musk's bank accounts to another of Musk's bank accounts.

The AI price bubble popping could have wiped out xAI and thus Twitter with it, but now it's cushioned against that by contracts with a government who can't afford to allow their only real space access to go bust.

So he can subsidize his failing AI business and his unprofitable media-manipulation efforts at Twitter with his government-protected failing exploding space-rocket project.

All protected by the lie which the media keep repeating uncritically that it makes any sense at all to have data centers in space.

What a great businessman.

#musk #spacex #twitter #xai

Spatially-informed transformers: Injecting geostatistical covariance biases into self-attention for spatio-temporal forecasting

Yuri Calleo

https://arxiv.org/abs/2512.17696 https://arxiv.org/pdf/2512.17696 https://arxiv.org/html/2512.17696

arXiv:2512.17696v1 Announce Type: new

Abstract: The modeling of high-dimensional spatio-temporal processes presents a fundamental dichotomy between the probabilistic rigor of classical geostatistics and the flexible, high-capacity representations of deep learning. While Gaussian processes offer theoretical consistency and exact uncertainty quantification, their prohibitive computational scaling renders them impractical for massive sensor networks. Conversely, modern transformer architectures excel at sequence modeling but inherently lack a geometric inductive bias, treating spatial sensors as permutation-invariant tokens without a native understanding of distance. In this work, we propose a spatially-informed transformer, a hybrid architecture that injects a geostatistical inductive bias directly into the self-attention mechanism via a learnable covariance kernel. By formally decomposing the attention structure into a stationary physical prior and a non-stationary data-driven residual, we impose a soft topological constraint that favors spatially proximal interactions while retaining the capacity to model complex dynamics. We demonstrate the phenomenon of "Deep Variography", where the network successfully recovers the true spatial decay parameters of the underlying process end-to-end via backpropagation. Extensive experiments on synthetic Gaussian random fields and real-world traffic benchmarks confirm that our method outperforms state-of-the-art graph neural networks. Furthermore, rigorous statistical validation confirms that the proposed method delivers not only superior predictive accuracy but also well-calibrated probabilistic forecasts, effectively bridging the gap between physics-aware modeling and data-driven learning.

Easy Adaptation: An Efficient Task-Specific Knowledge Injection Method for Large Models in Resource-Constrained Environments

Dong Chen, Zhengqing Hu, Shixing Zhao, Yibo Guo

https://arxiv.org/abs/2512.17771 https://arxiv.org/pdf/2512.17771 https://arxiv.org/html/2512.17771

arXiv:2512.17771v1 Announce Type: new

Abstract: While the enormous parameter scale endows Large Models (LMs) with unparalleled performance, it also limits their adaptability across specific tasks. Parameter-Efficient Fine-Tuning (PEFT) has emerged as a critical approach for effectively adapting LMs to a diverse range of downstream tasks. However, existing PEFT methods face two primary challenges: (1) High resource cost. Although PEFT methods significantly reduce resource demands compared to full fine-tuning, they still require substantial time and memory, making them impractical in resource-constrained environments. (2) Parameter dependency. PEFT methods heavily rely on updating a subset of parameters associated with LMs to incorporate task-specific knowledge. Yet, due to increasing competition in the LM landscape, many companies have adopted closed-source policies for their leading models, offering access only via Application Programming Interfaces (APIs). The expense, however, is often prohibitive and difficult to sustain, as the fine-tuning process of LMs is extremely slow. Even if small models perform far worse than LMs in general, they can achieve superior results on particular distributions while requiring only minimal resources. Motivated by this insight, we propose Easy Adaptation (EA), which designs Specific Small Models (SSMs) to complement the underfitted data distribution for LMs. Extensive experiments show that EA matches the performance of PEFT on diverse tasks without accessing LM parameters, and requires only minimal resources.

toXiv_bot_toot

Benders Decomposition for Passenger-Oriented Train Timetabling with Hybrid Periodicity

Zhiyuan Yao, Anita Schöbel, Lei Nie, Sven Jäger

https://arxiv.org/abs/2511.09892 https://arxiv.org/pdf/2511.09892 https://arxiv.org/html/2511.09892

arXiv:2511.09892v1 Announce Type: new

Abstract: Periodic timetables are widely adopted in passenger railway operations due to their regular service patterns and well-coordinated train connections. However, fluctuations in passenger demand require varying train services across different periods, necessitating adjustments to the periodic timetable. This study addresses a hybrid periodic train timetabling problem, which enhances the flexibility and demand responsiveness of a given periodic timetable through schedule adjustments and aperiodic train insertions, taking into account the rolling stock circulation. Since timetable modifications may affect initial passenger routes, passenger routing is incorporated into the problem to guide planning decisions towards a passenger-oriented objective. Using a time-space network representation, the problem is formulated as a dynamic railway service network design model with resource constraints. To handle the complexity of real-world instances, we propose a decomposition-based algorithm integrating Benders decomposition and column generation, enhanced with multiple preprocessing and accelerating techniques. Numerical experiments demonstrate the effectiveness of the algorithm and highlight the advantage of hybrid periodic timetables in reducing passenger travel costs.

toXiv_bot_toot

celegans_interactomes: C. elegans interactomes (2009)

Ten networks of protein-protein interactions in Caenorhabditis elegans (nematode), from yeast two-hybrid experiments, biological process maps, literature curation, orthologous interactions, and genetic interactions. The WI8 network combines WI2004, WI2007 and BPmaps, while the Integrated Network combines data from all sources.

This network has 2724 nodes and 14322 edges.

Tags: Biological, Protein interactions, Unweighted…

celegans_interactomes: C. elegans interactomes (2009)

Ten networks of protein-protein interactions in Caenorhabditis elegans (nematode), from yeast two-hybrid experiments, biological process maps, literature curation, orthologous interactions, and genetic interactions. The WI8 network combines WI2004, WI2007 and BPmaps, while the Integrated Network combines data from all sources.

This network has 912 nodes and 22738 edges.

Tags: Biological, Protein interactions, Unweighted…

Atomic and molecular systems for radiation thermometry

Stephen P. Eckel, Eric B. Norrgard, Christopher Holloway, Nikunjkumar Prajapati, Noah Schlossberger, Matthew Simons

https://arxiv.org/abs/2512.08668 https://arxiv.org/pdf/2512.08668 https://arxiv.org/html/2512.08668

arXiv:2512.08668v1 Announce Type: new

Abstract: Atoms and simple molecules are excellent candidates for new standards and sensors because they are all identical and their properties are determined by the immutable laws of quantum physics. Here, we introduce the concept of building a standard and sensor of radiative temperature using atoms and molecules. Such standards are based on precise measurement of the rate at which blackbody radiation (BBR) either excites or stimulates emission for a given atomic transition. We summarize the recent results of two experiments while detailing the rate equation models required for their interpretation. The cold atom thermometer (CAT) uses a gas of laser-cooled $^{85}$Rb Rydberg atoms to probe the BBR spectrum near 130 GHz. This primary, i.e., not traceable to a measurement of like kind, temperature measurement currently has a total uncertainty of approximately 1 %, with clear paths toward improvement. The compact blackbody radiation atomic sensor (CoBRAS) uses a vapour of $^{85}$Rb and monitors fluorescence from states that are either populated by BBR or populated by spontaneous emission to measure the blackbody spectrum near 24.5 THz. The CoBRAS has an excellent relative precision of $u(T)\approx 0.13$ K, with a clear path toward implementing a primary

toXiv_bot_toot
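As a rough illustration of why the two frequency bands mentioned above behave so differently, the sketch below evaluates the mean thermal photon number per mode from the Planck (Bose-Einstein) distribution, to which BBR-driven excitation and stimulated-emission rates are proportional. This is a textbook relation, not the authors' rate-equation model; the function name `bbr_photon_number` and the 300 K environment temperature are assumptions made for the example.

```python
import math

H = 6.62607015e-34   # Planck constant in J s (exact SI value)
K_B = 1.380649e-23   # Boltzmann constant in J/K (exact SI value)


def bbr_photon_number(freq_hz: float, temp_k: float) -> float:
    """Mean thermal photon number per mode: 1 / (exp(h*nu / (k_B*T)) - 1)."""
    x = H * freq_hz / (K_B * temp_k)
    # expm1 keeps precision when x is small (low frequency, high temperature).
    return 1.0 / math.expm1(x)


if __name__ == "__main__":
    room_temp_k = 300.0  # assumed environment temperature, not from the abstract
    for label, freq in (("130 GHz", 130e9), ("24.5 THz", 24.5e12)):
        n = bbr_photon_number(freq, room_temp_k)
        print(f"{label}: mean photon number = {n:.4g}")
```

Under these assumptions the 130 GHz mode holds tens of thermal photons while the 24.5 THz mode holds far fewer than one, so the sensitivity of each signal to temperature differs markedly between the two bands.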

CAG-Avatar: Cross-Attention Guided Gaussian Avatars for High-Fidelity Head Reconstruction

Zhe Chang, Haodong Jin, Yan Song, Hui Yu

https://arxiv.org/abs/2601.14844 https://arxiv.org/pdf/2601.14844 https://arxiv.org/html/2601.14844

arXiv:2601.14844v1 Announce Type: new

Abstract: Creating high-fidelity, real-time drivable 3D head avatars is a core challenge in digital animation. While 3D Gaussian Splatting (3D-GS) offers unprecedented rendering speed and quality, current animation techniques often rely on a "one-size-fits-all" global tuning approach, where all Gaussian primitives are uniformly driven by a single expression code. This simplistic approach fails to unravel the distinct dynamics of different facial regions, such as deformable skin versus rigid teeth, leading to significant blurring and distortion artifacts. We introduce Conditionally-Adaptive Gaussian Avatars (CAG-Avatar), a framework that resolves this key limitation. At its core is a Conditionally Adaptive Fusion Module built on cross-attention. This mechanism empowers each 3D Gaussian to act as a query, adaptively extracting relevant driving signals from the global expression code based on its canonical position. This "tailor-made" conditioning strategy drastically enhances the modeling of fine-grained, localized dynamics. Our experiments confirm a significant improvement in reconstruction fidelity, particularly for challenging regions such as teeth, while preserving real-time rendering performance.

toXiv_bot_toot

Minimizing smooth Kurdyka-Łojasiewicz functions via generalized descent methods: Convergence rate and complexity

Masoud Ahookhosh, Susan Ghaderi, Alireza Kabgani, Morteza Rahimi

https://arxiv.org/abs/2511.10414 https://arxiv.org/pdf/2511.10414 https://arxiv.org/html/2511.10414

arXiv:2511.10414v1 Announce Type: new

Abstract: This paper addresses the generalized descent algorithm (DEAL) for minimizing smooth functions, which is analyzed under the Kurdyka-Łojasiewicz (KL) inequality. In particular, the suggested algorithm guarantees a sufficient decrease by adapting to the cost function's geometry. We leverage the KL property to establish global convergence, convergence rates, and complexity. A particular focus is placed on the linear convergence of generalized descent methods. We show that the constant step-size and Armijo line search strategies along a generalized descent direction satisfy our generalized descent condition. Additionally, for nonsmooth functions, by leveraging smoothing techniques such as the forward-backward and high-order Moreau envelopes, we show that the boosted proximal gradient method (BPGA) and the boosted high-order proximal-point method (BPPA), respectively, are also specific cases of DEAL. Notably, if the order of the high-order proximal term is chosen in a certain way (depending on the KL exponent), then the sequence generated by BPPA converges linearly for an arbitrary KL exponent. Our preliminary numerical experiments on inverse problems and LASSO demonstrate the efficiency of the proposed methods, validating our theoretical findings.
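To make the Armijo line search mentioned in the abstract concrete, here is a minimal sketch of backtracking along the steepest-descent direction, which is only the simplest instance of a descent direction and is not the paper's DEAL algorithm. The function name `armijo_descent`, its parameters, and the quadratic test problem are all hypothetical choices for illustration.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def armijo_descent(
    f: Callable[[Sequence[float]], float],
    grad: Callable[[Sequence[float]], Vector],
    x0: Sequence[float],
    step0: float = 1.0,
    c1: float = 1e-4,
    shrink: float = 0.5,
    tol: float = 1e-8,
    max_iter: int = 1000,
) -> Vector:
    """Gradient descent with Armijo backtracking (sufficient-decrease test)."""
    x = list(x0)
    for _ in range(max_iter):
        g = grad(x)
        gnorm2 = sum(gi * gi for gi in g)
        if gnorm2 ** 0.5 < tol:
            break  # gradient is numerically zero: stationary point reached
        fx = f(x)
        t = step0
        x_new = [xi - t * gi for xi, gi in zip(x, g)]
        # Shrink t until f decreases by at least c1 * t * ||grad||^2.
        while f(x_new) > fx - c1 * t * gnorm2 and t > 1e-16:
            t *= shrink
            x_new = [xi - t * gi for xi, gi in zip(x, g)]
        x = x_new
    return x


def quad(x: Sequence[float]) -> float:
    """Hypothetical smooth test function with minimizer (1, -2)."""
    return (x[0] - 1.0) ** 2 + 10.0 * (x[1] + 2.0) ** 2


def quad_grad(x: Sequence[float]) -> Vector:
    return [2.0 * (x[0] - 1.0), 20.0 * (x[1] + 2.0)]


if __name__ == "__main__":
    print(armijo_descent(quad, quad_grad, [0.0, 0.0]))
```

The inner loop is the sufficient-decrease condition: a trial step is accepted only when the objective drops by a fixed fraction of what the gradient predicts, which is the kind of guarantee the abstract refers to.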

toXiv_bot_toot

celegans_interactomes: C. elegans interactomes (2009)

Ten networks of protein-protein interactions in Caenorhabditis elegans (nematode), from yeast two-hybrid experiments, biological process maps, literature curation, orthologous interactions, and genetic interactions. The WI8 network combines WI2004, WI2007 and BPmaps, while the Integrated Network combines data from all sources.

This network has 759 nodes and 1593 edges.

Tags: Biological, Protein interactions, Unweighted
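Node and edge counts like the ones above translate directly into two basic summary statistics, mean degree and edge density. The sketch below assumes the network is undirected and simple (no self-loops or multi-edges), which the post does not state; the function names are illustrative only.

```python
def mean_degree(n_nodes: int, n_edges: int) -> float:
    """Average degree of an undirected graph: each edge touches two nodes."""
    return 2.0 * n_edges / n_nodes


def density(n_nodes: int, n_edges: int) -> float:
    """Fraction of possible node pairs that are linked (undirected, simple)."""
    return 2.0 * n_edges / (n_nodes * (n_nodes - 1))


if __name__ == "__main__":
    nodes, edges = 759, 1593  # counts quoted in the post above
    print(f"mean degree: {mean_degree(nodes, edges):.3f}")
    print(f"density:     {density(nodes, edges):.5f}")
```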

PAColorHolo: A Perceptually-Aware Color Management Framework for Holographic Displays

Chun Chen, Minseok Chae, Seung-Woo Nam, Myeong-Ho Choi, Minseong Kim, Eunbi Lee, Yoonchan Jeong, Jae-Hyeung Park

https://arxiv.org/abs/2601.14766 https://arxiv.org/pdf/2601.14766 https://arxiv.org/html/2601.14766

arXiv:2601.14766v1 Announce Type: new

Abstract: Holographic displays offer significant potential for augmented and virtual reality applications by reconstructing wavefronts that enable continuous depth cues and natural parallax without vergence-accommodation conflict. However, despite advances in pixel-level image quality, current systems struggle to achieve perceptually accurate color reproduction, an essential component of visual realism. These challenges arise from complex system-level distortions caused by coherent laser illumination, spatial light modulator imperfections, chromatic aberrations, and camera-induced color biases. In this work, we propose a perceptually-aware color management framework for holographic displays that jointly addresses input-output color inconsistencies through color space transformation, adaptive illumination control, and neural network-based perceptual modeling of the camera's color response. We validate the effectiveness of our approach through numerical simulations, optical experiments, and a controlled user study. The results demonstrate substantial improvements in perceptual color fidelity, laying the groundwork for perceptually driven holographic rendering in future systems.

toXiv_bot_toot

Riccati-ZORO: An efficient algorithm for heuristic online optimization of internal feedback laws in robust and stochastic model predictive control

Florian Messerer, Yunfan Gao, Jonathan Frey, Moritz Diehl

https://arxiv.org/abs/2511.10473 https://arxiv.org/pdf/2511.10473 https://arxiv.org/html/2511.10473

arXiv:2511.10473v1 Announce Type: new

Abstract: We present Riccati-ZORO, an algorithm for tube-based optimal control problems (OCP). Tube OCPs predict a tube of trajectories in order to capture predictive uncertainty. The tube induces a constraint tightening via additional backoff terms. This backoff can significantly affect the performance, and thus implicitly defines a cost of uncertainty. Optimizing the feedback law used to predict the tube can significantly reduce the backoffs, but its online computation is challenging.

Riccati-ZORO jointly optimizes the nominal trajectory and uncertainty tube based on a heuristic uncertainty cost design. The algorithm alternates between two subproblems: (i) a nominal OCP with fixed backoffs, (ii) an unconstrained tube OCP, which optimizes the feedback gains for a fixed nominal trajectory. For the tube optimization, we propose a cost function informed by the proximity of the nominal trajectory to constraints, prioritizing reduction of the corresponding backoffs. These ideas are developed in detail for ellipsoidal tubes under linear state feedback. In this case, the decomposition into the two subproblems yields a substantial reduction of the computational complexity with respect to the state dimension from $\mathcal{O}(n_x^6)$ to $\mathcal{O}(n_x^3)$, i.e., the complexity of a nominal OCP.

We investigate the algorithm in numerical experiments, and provide two open-source implementations: a prototyping version in CasADi and a high-performance implementation integrated into the acados OCP solver.
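For readers unfamiliar with ellipsoidal tubes under linear state feedback, the relations below sketch the usual textbook form of the tube propagation and the resulting constraint backoff. The notation ($A_k$, $B_k$, $K_k$, $P_k$, $W_k$, $h_i$, $\beta_{i,k}$) is assumed for illustration and may differ from the paper's.

```latex
\begin{aligned}
u_k &= \bar{u}_k + K_k \,(x_k - \bar{x}_k)
  && \text{(linear state feedback around the nominal trajectory)} \\
P_{k+1} &= (A_k + B_k K_k)\, P_k \,(A_k + B_k K_k)^{\top} + W_k
  && \text{(propagation of the ellipsoid shape matrix)} \\
h_i(\bar{x}_k) + \beta_{i,k} &\le 0, \qquad
\beta_{i,k} = \sqrt{\nabla h_i(\bar{x}_k)^{\top} P_k \,\nabla h_i(\bar{x}_k)}
  && \text{(tightened constraint with backoff)}
\end{aligned}
```

In this form, subproblem (i) keeps $\beta_{i,k}$ fixed and optimizes $(\bar{x}_k, \bar{u}_k)$, while subproblem (ii) keeps the nominal trajectory fixed and optimizes the gains $K_k$. One plausible reading of the $\mathcal{O}(n_x^6)$ figure is that a joint formulation carries the roughly $n_x^2$ entries of $P_k$ as additional states, and Riccati-type solvers scale cubically in the state dimension.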

toXiv_bot_toot

Thermal one-loop self-energy correction for hydrogen-like systems: relativistic approach

M. Reiter, D. Solovyev, A. Bobylev, D. Glazov, T. Zalialiutdinov

https://arxiv.org/abs/2512.06828 https://arxiv.org/pdf/2512.06828 https://arxiv.org/html/2512.06828

arXiv:2512.06828v1 Announce Type: new

Abstract: Within a fully relativistic framework, the one-loop self-energy correction for a bound electron is derived and extended to incorporate the effects of external thermal radiation. In a series of previous works, it was shown that in quantum electrodynamics (QED) at finite temperature, the description of effects caused by blackbody radiation can be reduced to using the thermal part of the photon propagator. As a consequence of the non-relativistic approximation in the calculation of the thermal one-loop self-energy correction, well-known quantum-mechanical (QM) phenomena emerge at successive orders: the Stark effect arises at leading order in $\alpha Z$, the Zeeman effect appears in the next-to-leading non-relativistic correction, accompanied by diamagnetic contributions and their relativistic refinements, among other perturbative corrections. The fully relativistic approach used in this work for calculating the self-energy (SE) contribution allows for accurate calculations of the thermal shift of atomic levels, in which all these effects are automatically taken into account. The hydrogen atom serves as the basis for testing a fully relativistic approach to such calculations. Additionally, an analysis is presented of the behavior of the thermal shift caused by the thermal one-loop correction to the self-energy of a bound electron for hydrogen-like ions with an arbitrary nuclear charge $Z$. The significance of these calculations lies in their relevance to contemporary high-precision experiments, where thermal radiation constitutes one of the major contributions to the overall uncertainty budget.

toXiv_bot_toot

S-D-RSM: Stochastic Distributed Regularized Splitting Method for Large-Scale Convex Optimization Problems

Maoran Wang, Xingju Cai, Yongxin Chen

https://arxiv.org/abs/2511.10133 https://arxiv.org/pdf/2511.10133 https://arxiv.org/html/2511.10133

arXiv:2511.10133v1 Announce Type: new

Abstract: This paper investigates large-scale distributed composite convex optimization problems, with motivations from a broad range of applications, including multi-agent systems, federated learning, smart grids, wireless sensor networks, and compressed sensing. Stochastic gradient descent (SGD) and its variants are commonly employed to solve such problems. However, existing algorithms often rely on vanishing step sizes or strong convexity assumptions, or entail substantial computational overhead to ensure convergence or obtain favorable complexity. To bridge the gap between theory and practice, we integrate consensus optimization and operator splitting techniques (see Problem Reformulation) to develop a novel stochastic splitting algorithm, termed the stochastic distributed regularized splitting method (S-D-RSM). In practice, S-D-RSM performs parallel updates of proximal mappings and gradient information for only a randomly selected subset of agents at each iteration. By introducing regularization terms, it effectively mitigates consensus discrepancies among distributed nodes. In contrast to conventional stochastic methods, our theoretical analysis establishes that S-D-RSM achieves global convergence without requiring diminishing step sizes or strong convexity assumptions. Furthermore, it achieves an iteration complexity of $\mathcal{O}(1/\epsilon)$ with respect to both the objective function value and the consensus error. Numerical experiments show that S-D-RSM achieves up to a 2-3× speedup compared to state-of-the-art baselines, while maintaining comparable or better accuracy. These results not only validate the algorithm's theoretical guarantees but also demonstrate its effectiveness in practical tasks such as compressed sensing and empirical risk minimization.
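The "proximal mappings" mentioned in the abstract have a closed form in the compressed-sensing setting, where the nonsmooth term is typically an L1 penalty: the proximal mapping of $\lambda\|\cdot\|_1$ is elementwise soft-thresholding. The sketch below shows only that building block, under the assumption of an L1 regularizer; it is not the S-D-RSM update, and the name `soft_threshold` is illustrative.

```python
import math
from typing import List, Sequence


def soft_threshold(v: Sequence[float], lam: float) -> List[float]:
    """Proximal mapping of lam * ||x||_1: shrink each entry toward zero by lam."""
    out = []
    for x in v:
        magnitude = abs(x) - lam
        # Entries no larger than lam in magnitude are set exactly to zero.
        out.append(math.copysign(magnitude, x) if magnitude > 0.0 else 0.0)
    return out


if __name__ == "__main__":
    print(soft_threshold([3.0, -0.5, -2.0, 0.2], 1.0))
```

Because the mapping acts on each coordinate separately, it is cheap to evaluate per agent, which is one reason splitting methods that rely on proximal steps parallelize well.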

toXiv_bot_toot