2026-01-27 06:44:19

2025-12-25 18:40:01

CATL is making major moves on two continents. The world's largest EV battery maker is partnering with Stellantis on a carbon-neutral 50 GWh factory in Spain, creating thousands of jobs.

Meanwhile, they're restarting a lithium mine in China that could impact global prices and potentially lower battery costs by 2026.

2026-01-30 00:30:01

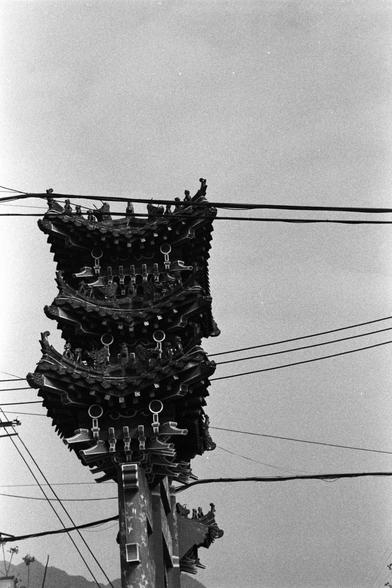

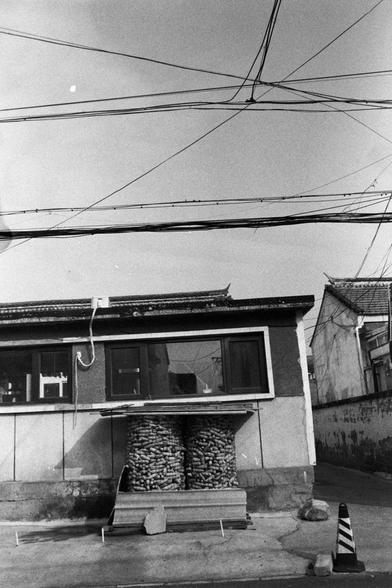

Some City Some Nature VII 🏙️

📷 Nikon F4E

🎞️ Fujifilm NEOPAN SS, expired 1993

#filmphotography #Photography #blackandwhite

2025-11-27 07:22:01

shots fired:

> it’s abundantly clear that the talented folks who used to work on the product have moved on to bigger and better things, with the remaining rookies eager to inflict some kind of bloated, buggy JavaScript framework on us in the name of progress. Stuff that used to be snappy is now sluggish and often entirely broken.

…

2026-01-28 16:41:05

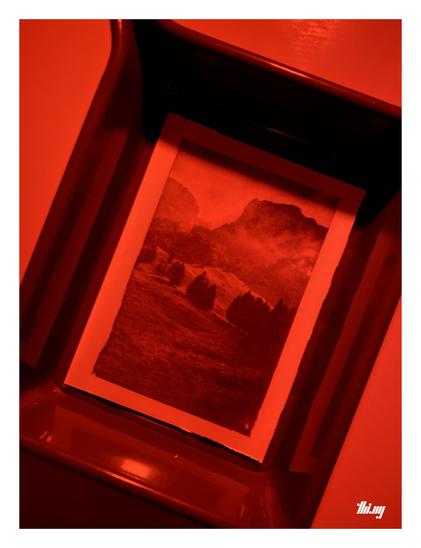

Me. That's the actual work in progress. 🚧

#wip #wipWednesday

2025-12-14 09:16:17

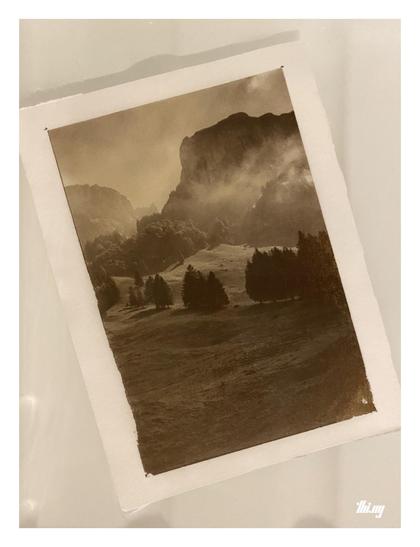

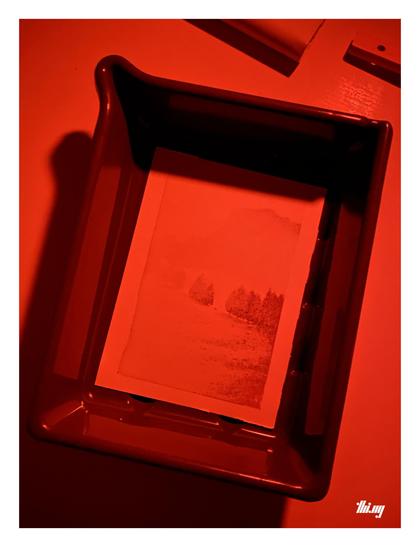

A silent scenery in four acts...

(Selenium-toned[1] 4x6 salt print, still in the final wash, plus earlier work-in-progress stages; see alt text for details.)

The picture/motif itself is one of my personal favorites. It was taken 4 years ago in the Alpstein massif, when clouds were spontaneously forming around us, creating wonderful light, scenery and drama, and a dear memory... now also as a print that will likely outlast me.

[1] The tones will become more neutral once dry...…

2025-11-19 13:14:40

2025-12-19 03:10:02

Strikes run on pizza.

Turns out they also can begin to end on them.

Several pies, in fact, arrived on North Shore Drive on the brisk Tuesday that was Oct. 18, 2022, when the strikers first walked out of the Pittsburgh Post-Gazette newsroom because the PG had violated federal labor law.

The workers said they would not go back to their jobs until their employer followed the rules.

They had no idea it would take three years and become the nation’s lon…

2025-12-24 14:02:11

My work in progress for a playlist for Christmas (without ick) #Music

2025-12-09 04:20:57

Scientists at NeurIPS, which drew a record 26,000 attendees this year, say key questions about how AI models work and how to measure them remain unresolved (Jared Perlo/NBC News)

https://www.nbcnews.com/tech/tech-news/ai-progress-surges-resea…

2025-12-19 16:23:18

Progress! Only 3 VMs left to upgrade to Trixie in the next day or two, plus my office workstation and core router, which are scheduled for upgrade during the maintenance outage later.

And I only have 8 VMs to switch over to the new storage setup before I can work on upgrading the hypervisor.

2025-12-22 13:00:41

2026-01-19 14:40:41

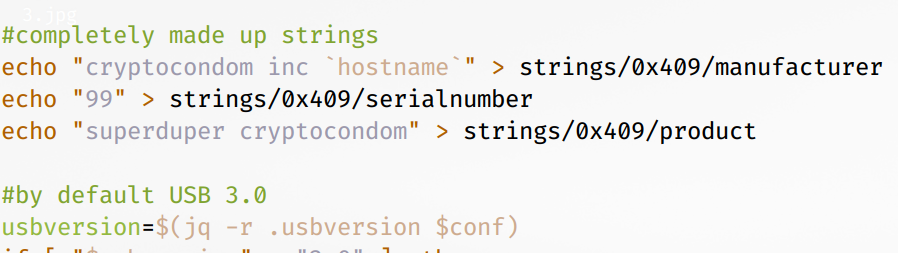

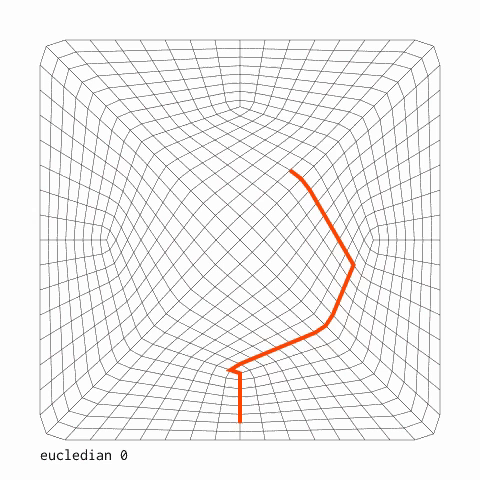

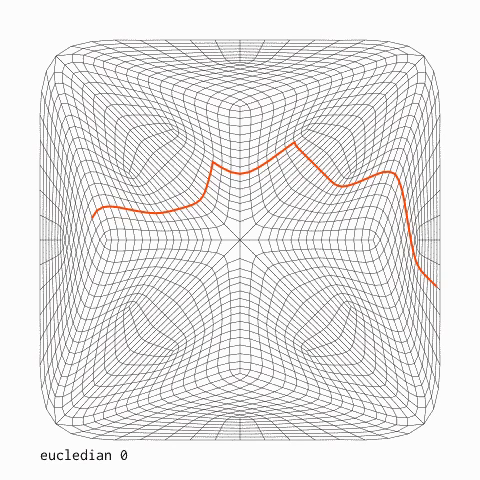

This was an interesting problem to work on (back in 2017): a visualization of a path planner for 3D printing (FDM) a single layer/mesh structure of a multi-layer textile. Shown are two setups of the same path strategy, which optimized for the longest continuous sub-paths and minimum rapids (travel distance without filament extrusion) between sub-paths. The planner supported six strategies in total, incl. optimizing for straight sub-paths and a minimum number of "recent" crossings (to allow filament to co…
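The "minimum rapids" objective can be approximated with a greedy nearest-neighbour ordering of sub-paths. The following is a minimal Python sketch, assuming sub-paths are 2D polylines that may be printed in either direction. `order_subpaths` is a hypothetical name, and this greedy heuristic is a stand-in, not the 2017 planner itself: it ignores the longest-sub-path and crossing objectives entirely.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Path = List[Point]

def order_subpaths(subpaths: List[Path], start: Point = (0.0, 0.0)) -> Tuple[List[Path], float]:
    """Hypothetical greedy ordering: always jump to the nearest sub-path end,
    reversing the sub-path if its far end is closer. Returns the ordered
    sub-paths and the total rapid (non-extruding travel) distance."""
    remaining = [list(p) for p in subpaths]
    ordered: List[Path] = []
    pos = start
    rapid_total = 0.0
    while remaining:
        best_i, best_rev, best_d = 0, False, math.inf
        for i, p in enumerate(remaining):
            d_fwd = math.dist(pos, p[0])   # enter at the first point
            d_rev = math.dist(pos, p[-1])  # enter at the last point (print reversed)
            if d_fwd < best_d:
                best_i, best_rev, best_d = i, False, d_fwd
            if d_rev < best_d:
                best_i, best_rev, best_d = i, True, d_rev
        chosen = remaining.pop(best_i)
        if best_rev:
            chosen.reverse()
        ordered.append(chosen)
        rapid_total += best_d
        pos = chosen[-1]  # the nozzle ends at the sub-path's last point
    return ordered, rapid_total
```

Greedy ordering is cheap (quadratic in the number of sub-paths) but not optimal; a real planner would likely combine it with the other strategy objectives or a local-improvement pass.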

2025-12-24 02:58:47

On November 20th, women stop being paid relative to men.

This is the point in the year when, on average, women effectively work for free.

The gender pay gap is now 11.3%, up from 10.7% last year.

It’s a stark reminder that while progress has been made, gender pay inequality persists, and may be higher than we thought.
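The date follows from simple arithmetic if one assumes that the unpaid share of the calendar year equals the pay gap. Below is a minimal Python sketch under that assumption. The function name `equal_pay_day` and the 2025 calendar year are illustrative choices, not taken from the post.

```python
from datetime import date, timedelta

def equal_pay_day(gap_pct: float, year: int) -> date:
    """Hypothetical helper: last 'paid' day of `year`, assuming the unpaid
    share of the calendar year equals the pay gap percentage."""
    days_in_year = (date(year + 1, 1, 1) - date(year, 1, 1)).days
    # Number of paid days, rounded to the nearest whole day.
    paid_days = round(days_in_year * (1 - gap_pct / 100))
    # January 1st is day 1, so the last paid day is paid_days - 1 days after it.
    return date(year, 1, 1) + timedelta(days=paid_days - 1)

if __name__ == "__main__":
    d = equal_pay_day(11.3, 2025)
    unpaid = (date(2025, 12, 31) - d).days
    print(d, unpaid)  # 2025-11-20 41
```

Applied to the same calendar year, last year's 10.7% gap would put the date two days later, on November 22nd.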

2025-12-05 14:51:22

Ethical Considerations Around Machine Learning-Engaged Online Participatory Research - poster from Zooniverse community at #FF2025 https://zenodo.org/records/17779992

2025-11-07 07:25:01

2025-11-20 01:17:54

Raiders’ Ashton Jeanty Speaks Up on Rookie Season https://www.si.com/nfl/raiders/onsi/las-vegas-ashton-jeanty-speaks-up-rookie-season

2025-12-13 17:08:58

@… same! Can't wait to get it up and running. Turns out talos.dev needs some work-in-progress fixes to support the Pi 5 😅

2025-12-08 08:03:50

Strategyproof Tournament Rules for Teams with a Constant Degree of Selfishness

David Pennock, Daniel Schoepflin, Kangning Wang

https://arxiv.org/abs/2512.05235 https://arxiv.org/pdf/2512.05235 https://arxiv.org/html/2512.05235

arXiv:2512.05235v1 Announce Type: new

Abstract: We revisit the well-studied problem of designing fair and manipulation-resistant tournament rules. In this problem, we seek a mechanism that (probabilistically) identifies the winner of a tournament after observing round-robin play among $n$ teams in a league. Such a mechanism should satisfy the natural properties of monotonicity and Condorcet consistency. Moreover, from the league's perspective, the winner-determination tournament rule should be strategyproof, meaning that no team can do better by losing a game on purpose.

Past work considered settings in which each team is fully selfish, caring only about its own probability of winning, and settings in which each team is fully selfless, caring only about the total winning probability of itself and the team to which it deliberately loses. More recently, researchers considered a mixture of these two settings with a parameter $\lambda$. Intermediate selfishness $\lambda$ means that a team will not lose on purpose unless its pair gains at least $\lambda s$ winning probability, where $s$ is the individual team's sacrifice from its own winning probability. All of the dozens of previously known tournament rules require $\lambda = \Omega(n)$ to be strategyproof, and it has been an open problem to find such a rule with the smallest $\lambda$.

In this work, we make significant progress by designing a tournament rule that is strategyproof with $\lambda = 11$. Along the way, we propose a new notion of multiplicative pairwise non-manipulability that ensures that two teams cannot manipulate the outcome of a game to increase the sum of their winning probabilities by more than a multiplicative factor $\delta$ and provide a rule which is multiplicatively pairwise non-manipulable for $\delta = 3.5$.
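The multiplicative condition from the abstract can be written as an inequality, following one plain reading of its wording. The notation is an assumption and is not taken from the paper: $r_k(T)$ is the winning probability the rule assigns to team $k$ in tournament $T$, and $T'$ is $T$ with only the game between teams $i$ and $j$ flipped.

```latex
r_i(T') + r_j(T') \;\le\; \delta \,\bigl(r_i(T) + r_j(T)\bigr)
\qquad \text{for all } T \text{ and all pairs } i \neq j,
\qquad \delta = 3.5 \text{ for the proposed rule}
```

Read this way, a pair whose combined winning probability is 0.1 could raise it to at most 0.35 by manipulating their game.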


2026-01-18 16:31:00

Eleven organizations just won a combined $5.9M for solutions that are actually changing lives.

The 2026 Zayed Sustainability Prize winners are tackling everything from AI diagnostics to eco-brick production. Since 2008, this prize has impacted over 411 million people through innovations in health, water, energy, and climate action.

2026-01-13 16:31:39

Cowboys Urged to Make Shocking Pick on Star College Coach https://heavy.com/sports/nfl/dallas-cowboys/corey-hetherman-pick-on-star-college-coach/

2025-12-10 23:01:01

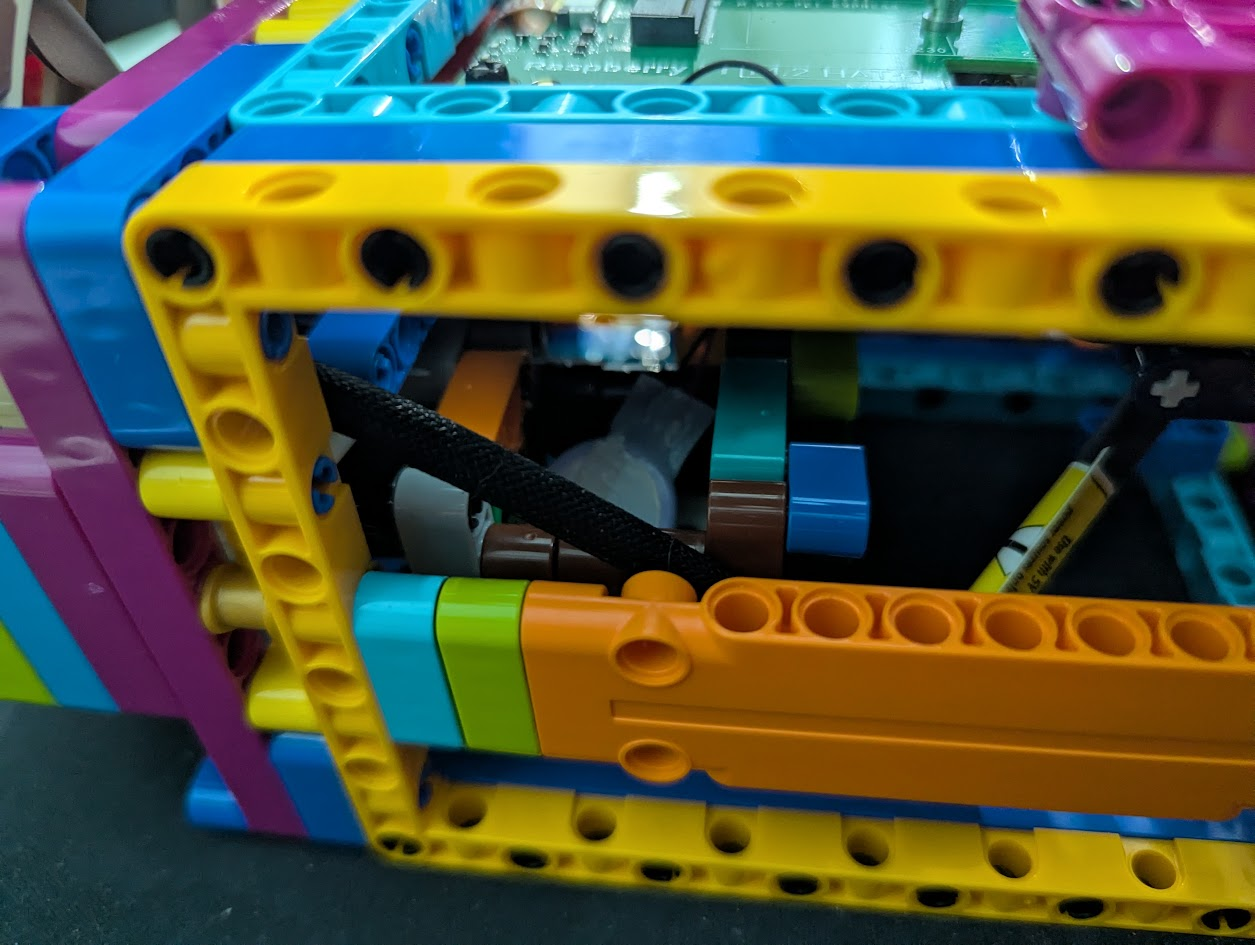

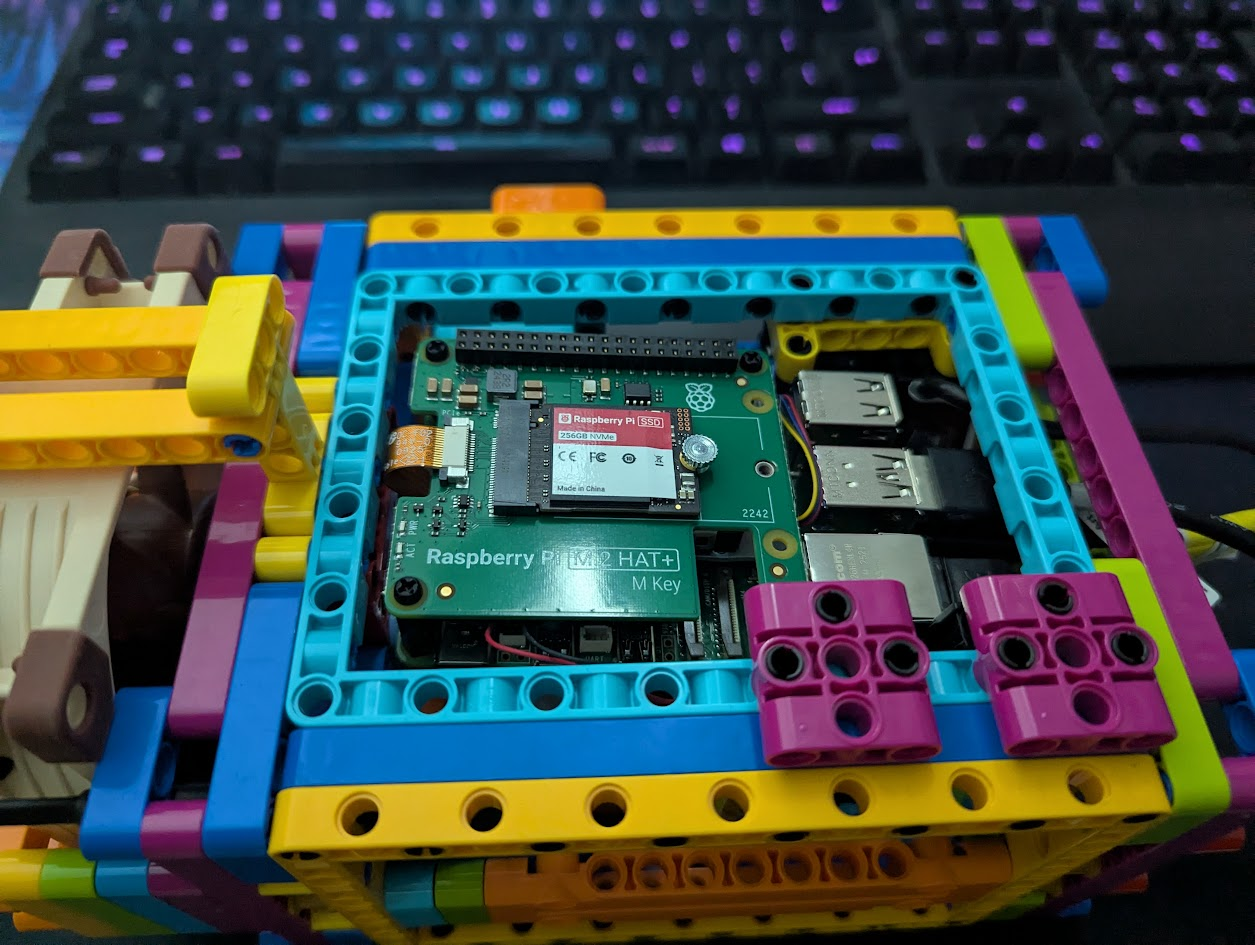

Preview of a new 🌈 node joining the cluster, once talos.dev fully supports the #RaspberryPi 5. It's a work in progress, and there are temporary options: https://github.com/siderolabs/sbc-rasp

2025-11-14 23:57:47

How Raiders' Chip Kelly Is Conducting Brock Bowers' Impact https://www.si.com/nfl/raiders/onsi/las-vegas-chip-kelly-conducting-brock-bowers-impact