2025-07-06 19:29:10

2025-07-05 22:26:50

Understanding Japan’s AI Promotion Act: An “Innovation-First” Blueprint for AI Regulation

https://fpf.org/blog/understanding-japans-ai-promotion-act-an-innovation-first-blueprint-for-ai-regulation/

2025-06-07 06:21:10

AI research nonprofit EleutherAI releases the Common Pile v0.1, an 8TB dataset of licensed and open-domain text for AI models that it says is one of the largest (Kyle Wiggers/TechCrunch)

https://techcrunch.com/2025/06/06/eleu

2025-08-04 15:49:00

Should we teach vibe coding? Here's why not.

Should AI coding be taught in undergrad CS education?

1/2

I teach undergraduate computer science labs, including for intro and more-advanced core courses. I don't publish (non-negligible) scholarly work in the area, but I've got years of craft expertise in course design, and I do follow the academic literature to some degree. In other words, I'm not the world's leading expert, but I have spent a lot of time thinking about course design, and consider myself competent at it, with plenty of direct experience in what knowledge & skills I can expect from students as they move through the curriculum.

I'm also strongly against most uses of what's called "AI" these days (specifically, generative deep neural networks as supplied by our current cadre of techbros). There are a surprising number of completely orthogonal reasons to oppose the use of these systems, and a very limited number of reasonable exceptions (overcoming accessibility barriers is an example). On the grounds of environmental and digital-commons-pollution costs alone, using specifically the largest/newest models is unethical in most cases.

But as any good teacher should, I constantly question these evaluations, because I worry about the impact on my students should I eschew teaching relevant tech for bad reasons (and even for good reasons). I also want to make my reasoning clear to students, who should absolutely question me on this. That inspired me to ask a simple question: ignoring for one moment the ethical objections (which we shouldn't, of course; they're very stark), at what level in the CS major could I expect to teach a course about programming with AI assistance, and expect students to succeed at a more technically demanding final project than a course at the same level where students were banned from using AI? In other words, at what level would I expect students to actually benefit from AI coding "assistance?"

To be clear, I'm assuming that students aren't using AI in other aspects of coursework: the topic of using AI to "help you study" is a separate one (TL;DR its gross value is not negative, but it's mostly not worth the harm to your metacognitive abilities, which AI-induced changes to the digital commons are making more important than ever).

So what's my answer to this question?

If I'm being incredibly optimistic, senior year. Slightly less optimistic, second year of a masters program. Realistic? Maybe never.

The interesting bit for you-the-reader is: why is this my answer? (Especially given that students would probably self-report significant gains at lower levels.) To start with, [this paper where experienced developers thought that AI assistance sped up their work on real tasks when in fact it slowed it down](https://arxiv.org/abs/2507.09089) is informative. There are a lot of differences in task between experienced devs solving real bugs and students working on a class project, but it's important to understand that we shouldn't have a baseline expectation that AI coding "assistants" will speed things up in the best of circumstances, and we shouldn't trust self-reports of productivity (or the AI hype machine in general).

Now we might imagine that coding assistants will be better at helping with a student project than at helping with fixing bugs in open-source software, since it's a much easier task. For many programming assignments that have a fixed answer, we know that many AI assistants can just spit out a solution based on prompting them with the problem description (there's another elephant in the room here to do with learning outcomes regardless of project success, but we'll ignore this one too; my focus here is on project complexity reach, not learning outcomes). My question is about more open-ended projects, not assignments with an expected answer. Here's a second study (by one of my colleagues) about novices using AI assistance for programming tasks. It showcases how difficult it is to use AI tools well, and some of the stumbling blocks that novices in particular face.

But what about intermediate students? Might there be some level where the AI is helpful because the task is still relatively simple and the students are good enough to handle it? The problem with this is that as task complexity increases, so does the likelihood of the AI generating (or copying) code that uses more complex constructs which a student doesn't understand. Let's say I have second year students writing interactive websites with JavaScript. Without a level of care that those students don't yet know how to exercise, the AI is likely to suggest code that depends on several different frameworks, from React to jQuery, without actually setting up or including those frameworks, and of course these students would be way out of their depth trying to do that. This is a general problem: each programming class carefully limits the specific code frameworks and constructs it expects students to know based on the material it covers. There is no feasible way to limit an AI assistant to a fixed set of constructs or frameworks, using current designs. There are alternate designs where this would be possible (like AI search through adaptation from a controlled library of snippets) but those would be entirely different tools.

So what happens on a sizeable class project where the AI has dropped in buggy code, especially if it uses code constructs the students don't understand? Best case, they understand that they don't understand and re-prompt, or quickly ask an instructor or TA for help, who helps them get rid of the stuff they don't understand and re-prompt or manually add stuff they do. Average case: they waste several hours and/or sweep the bugs partly under the rug, resulting in a project with significant defects. Students in their second and even third years of a CS major still have a lot to learn about debugging, and usually have significant gaps in their knowledge of even their most comfortable programming language. I do think regardless of AI we as teachers need to get better at teaching debugging skills, but the knowledge gaps are inevitable because there's just too much to know. In Python, for example, the LLM is going to spit out yields, async functions, try/finally, maybe even something like a while/else, or with recent training data, the walrus operator. I can't expect even a fraction of 3rd year students who have worked with Python since their first year to know about all these things, and based on how students approach projects where they have studied all the relevant constructs but have forgotten some, I'm not optimistic that seeing these things will magically become learning opportunities. Student projects are better off working with a limited subset of full programming languages that the students have actually learned, and using AI coding assistants as currently designed makes this impossible. Beyond that, even when the "assistant" just introduces bugs using syntax the students understand, even through their 4th year many students struggle to understand the operation of moderately complex code they've written themselves, let alone code written by someone else. Having access to an AI that will confidently offer incorrect explanations for bugs will make this worse.
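To make the Python example concrete, here is a minimal sketch that scans a piece of source code for the constructs named above. The `SAMPLE` snippet is hypothetical (written for illustration, not captured from any real assistant), and the function name `advanced_constructs` is an assumption of this sketch. It only detects constructs after the fact using the standard-library `ast` module; it does nothing to constrain what an assistant generates.

```python
import ast

# Hypothetical snippet of the kind described above; it is only parsed, never run.
SAMPLE = '''
import asyncio

def read_chunks(lines):
    try:
        for line in lines:
            yield line.strip()
    finally:
        print("done")

async def fetch(x):
    await asyncio.sleep(0)
    return x

def first_long(words):
    i = 0
    while i < len(words):
        if (n := len(words[i])) > 5:
            break
        i += 1
    else:
        return None
    return words[i], n
'''


def advanced_constructs(source):
    """Return sorted (line, label) pairs for the constructs named in the post."""
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.Yield, ast.YieldFrom)):
            found.add((node.lineno, "yield"))
        elif isinstance(node, ast.AsyncFunctionDef):
            found.add((node.lineno, "async function"))
        elif isinstance(node, ast.Try) and node.finalbody:
            found.add((node.lineno, "try/finally"))
        elif isinstance(node, ast.While) and node.orelse:
            found.add((node.lineno, "while/else"))
        elif isinstance(node, ast.NamedExpr):
            found.add((node.lineno, "walrus operator"))
    return sorted(found)


if __name__ == "__main__":
    for line, label in advanced_constructs(SAMPLE):
        print(f"line {line}: {label}")
```

A twenty-line snippet like this can contain all five constructs at once, which is roughly the situation a student faces when they have only been taught plain loops, functions, and basic exception handling.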

To be sure, a small minority of students will be able to overcome these problems, but that minority is the group that has a good grasp of the fundamentals and has broadened their knowledge through self-study, which earlier AI-reliant classes would make less likely to happen. In any case, I care about the average student, since we already have plenty of stuff about our institutions that makes life easier for a favored few while being worse for the average student (note that our construction of that favored few as the "good" students is a large part of this problem).

To summarize: because AI assistants introduce excess code complexity and difficult-to-debug bugs, they'll slow down rather than speed up project progress for the average student on moderately complex projects. On a fixed deadline, they'll result in worse projects, or necessitate less ambitious project scoping to ensure adequate completion, and I expect this remains broadly true through 4-6 years of study in most programs (don't take this as an endorsement of AI "assistants" for masters students; we've ignored a lot of other problems along the way).

There's a related problem: solving open-ended project assignments well ultimately depends on deeply understanding the problem, and AI "assistants" allow students to put a lot of code in their file without spending much time thinking about the problem or building an understanding of it. This is awful for learning outcomes, but also bad for project success. Getting students to see the value of thinking deeply about a problem is a thorny pedagogical puzzle at the best of times, and allowing the use of AI "assistants" makes the problem much much worse. This is another area I hope to see (or even drive) pedagogical improvement in, for what it's worth.

1/2

2025-09-05 19:26:02

AI enhanced psychosis is not a great thing.

AI Psychosis sounds like a sci-fi problem, but it is real, and present tense.

Turns out AIs like ChatGPT, with their propensity to lie and their lack of actual intelligence, come with costs.

https://www.cnn.com/2025/09/05/tech/ai-spa

2025-08-06 19:55:06

“Cocaine doesn’t make you a business genius — it just makes you think you’re a business genius. Same for AI.”

Generative AI runs on gambling addiction — just one more prompt, bro! – Pivot to AI https://pivot-to-ai.com/2025/06/05/generativ…

2025-08-06 09:09:07

GitHub CEO: Embrace AI or get out.

https://www.businessinsider.com/github-ceo-developers-embrace-ai-or-get-out-2025-8

PS. Here’s where to go: @…

2025-07-06 11:42:00

2025-09-05 16:03:16

MIT says AI isn’t replacing you… it’s just wasting your boss’s money

https://www.interviewquery.com/p/mit-ai-isnt-replacing-workers-just-wasting-money

2025-08-06 02:30:44

In its Q4 earnings report, News Corp warned President Trump that his books "are being consumed by AI engines which profit from his thoughts" (Lauren Aratani/The Guardian)

https://www.theguardian.com/us-news/2025/aug/05/news-corp-trump-ai-art-of-th…

2025-08-05 23:54:28

Teaching with AI: Human Days and AI Days

In my previous post I outlined a plan for a no-tech pedagogy that would prevent students from using AI to do the assignments. However, my colleagues tell me that current policy at the university where I used to teach requires the use of AI in some classes. They have also eliminated the budget for photocopying handouts and texts. Everything must be delivered through the Learning Management System, which in this case is Canvas.

2025-09-06 02:45:10

Anthropic to pay $1.5 billion to authors in landmark #AI settlement ... “believed to be the largest publicly reported recovery in the history of US copyright litigation.”

https://www.…

2025-08-07 07:02:22

https://www.wired.com/story/inside-the-biden-administrations-unpublished-report-on-ai-safety/

“The document might have helped companies assess their own AI systems”

2025-09-05 14:22:35

> As currently constructed, AI is an oligarchy-enriching, worker-immiserating, energy-depleting, brain-rotting economic bubble in waiting. Democrats can get on the public’s side here.

I haven't even read the article but (considering how strongly I agree with the premises here) I'm mostly just sad about how clearly this highlights the financial capture of the DNC

---

Democrats Must Oppose the AI Industry - The American Prospect

2025-07-07 10:20:05

RT: @PsyPost (X)

A new study suggests that people who view artificial intelligence positively may be more likely to overuse social media. The findings highlight a potential link between attitudes toward AI and problematic online behavior, especially among male users.

https://www.

2025-07-04 16:17:52

Lovely to give the keynote 'On Epistemic Humility: storytelling, positionalities, and just survival in/through research', for the Higher Degree Research Forum, University of South Australia.

I draw on post-colonial, Black, and Indigenous scholarship to problematise what we know and how we know.

#HigherEducation

2025-09-06 13:39:09

Anthropic uses questionable dark patterns to obtain users’ consent to the use of AI data in Claude: https://the-decoder.com/anthropic-uses-a-questionable-dark-pattern-to-obtain-user-consent-for-ai-data-use-in-claude/

2025-09-06 16:27:34

2025-09-05 15:16:53

On Saturday, the Oakland Ballers manager Aaron Miles will cede his decision-making duties to an AI.

It is believed to be the first time a professional sports team will be managed by an AI in a regular-season game.

The AI will determine most of the regular in-game decisions a typical manager would make.

It isn’t choosing the starting pitcher since the Ballers are on a set rotation,

but the AI will create the starting lineup, decide when pitchers need to be replaced and …

2025-07-07 00:31:17

The AI music problem on Spotify (and other streaming platforms) is worse than you think

https://www.musicbusinessworldwide.com/the

2025-09-05 12:35:50

ProRata.ai, which lets publishers embed custom AI search on their sites with a 50/50 revenue share, raised a $40M Series B, bringing its total raised to $70M (Kerry Flynn/Axios)

https://www.axios.com/2025/09/05/prorata-ai-search-tool-publishers

2025-08-05 11:11:40

Effect of AI Performance, Risk Perception, and Trust on Human Dependence in Deepfake Detection AI system

Yingfan Zhou, Ester Chen, Manasa Pisipati, Aiping Xiong, Sarah Rajtmajer

https://arxiv.org/abs/2508.01906

2025-08-05 17:15:50

“Assuming we steward it safely and responsibly into the world…”—Right, bro, as if.

https://www.theguardian.com/technology/2025/aug/04/demis-hassabis-ai-future-10-times-bigger-than-industrial-revolution…

2025-07-06 13:55:21

Here is a poll about #GenAI since that's all we're talking about at the moment:

Do you believe that you can detect AI-generated text?

If so, what are your tips to detect it? I found this article which has a few suggestions:

2025-09-05 13:02:46

Replaced article(s) found for cs.AI. https://arxiv.org/list/cs.AI/new

[4/4]:

- Pilot Study on Generative AI and Critical Thinking in Higher Education Classrooms

W. F. Lamberti, S. R. Lawrence, D. White, S. Kim, S. Abdullah

2025-07-05 16:02:54

Honestly, between the fascism, climate records breaking monthly, and AI I'd say we've arrived at the singularity.

Since the pandemic I've been feeling it's pointless, but every day I'm more and more certain it's not worth investing anything "for the future" any more.

So yeah I'm probably buying a guitar

2025-08-06 09:34:40

Beyond risk: A proto-framework for assessing the societal impact of AI systems

Willem Fourie

https://arxiv.org/abs/2508.03666 https://arxiv.org/pdf/2508.03…

2025-07-05 15:50:40

❝Cloudflare, along with a majority of the world's leading publishers and AI companies, is changing the default to block AI crawlers unless they pay creators for their content.❞

Oh!

❝But that's just the beginning. Next, we'll work on a marketplace where content creators and AI companies, large and small, can come together.❞

Oh.

First quote sounds like a strike. Second quote sounds more like a company trying to situate itself as an unavoidable middle player who can control both sides of a market.

https://mastodon.acm.org/@avandeursen/114799313365332691

2025-09-05 12:56:18

Chiefs vs. Chargers NFL props, odds, SportsLine Machine Learning Model AI predictions, Brazil game SGP picks

https://www.cbssports.com/nfl/news/chiefs-

2025-08-05 17:26:35

2025-08-06 13:04:00

KI-Update: New OpenAI models, AI at the chessboard, AI slop, Genie 3, AI bunnies

The "KI-Update" delivers a summary of the most important AI developments every working day.

https://www.…

2025-09-05 12:45:55

ProRata.ai, which lets publishers embed custom AI search on their sites with a 50/50 revenue share, raised a $40M Series B, bringing its total raised to $75M (Kerry Flynn/Axios)

https://www.axios.com/2025/09/05/prorata-ai-search-tool-publishers

2025-09-06 05:25:48

C3 AI, whose NYSE ticker symbol is AI, has fallen 32% in the past month on growth concerns, after its Q1 revenue fell 19% YoY to $70.3M, below $100M est. (Hyunsoo Rim/Sherwood News)

https://sherwood.news/markets/the-company-with-t…

2025-07-05 15:47:25

You're not being forced to use AI because your boss thinks it will make you more productive. You're being forced to use AI because either your boss is invested in the AI hype and wants to drive usage numbers up, or because your boss needs training data from your specific role so they can eventually replace you with an AI, or both.

Either way, it's not in your interests to actually use it, which is convenient, because using it is also harmful in 4-5 different ways (briefly: resource overuse, data laborer abuse, commons abuse, psychological hazard, bubble inflation, etc.)

#AI

2025-08-05 08:12:03

2025-07-05 07:35:51

More time should be devoted to the (near-)future business models of AI and how it collects data/content. Just trying to prevent AI models from scraping data will be futile.

https://blog.cloudflare.com/content-independence-day-no-ai-crawl-without-compen…

2025-07-06 17:03:54

Malaysia Charts Its Digital Course: A Guide to the New Frameworks for Data Protection and AI Ethics

https://fpf.org/blog/malaysia-charts-its-digital-course-a-guide-to-the-new-frameworks-for-data-protection-and-ai-ethics/…

2025-09-05 17:01:38

Isotopes, co-founded by Scale AI's former CTO Arun Murthy to build an AI agent for enterprise business analytics, raised a $20M seed (Julie Bort/TechCrunch)

https://techcrunch.com/2025/09/05/scale-ais-former-cto-launche…

2025-08-05 19:19:48

Replaced article(s) found for cs.AI. https://arxiv.org/list/cs.AI/new

[2/9]:

- From Semantic Web and MAS to Agentic AI: A Unified Narrative of the Web of Agents

Tatiana Petrova, Boris Bliznioukov, Aleksandr Puzikov, Radu State

2025-08-07 09:29:14

Human-Centered Human-AI Interaction (HC-HAII): A Human-Centered AI Perspective

Wei Xu

https://arxiv.org/abs/2508.03969 https://arxiv.org/pdf/2508.03969

2025-09-06 19:11:08

NFL player props, odds, bets: Week 1, 2025 NFL picks, SportsLine Machine Learning Model AI predictions, SGP

https://www.cbssports.com/nfl/news/nfl-pla

2025-08-06 17:40:52

Google says total organic click volume from Search to websites has been "relatively stable" YoY and it's sending "slightly more quality clicks" than a year ago (Liz Reid/The Keyword)

https://blog.google/products/search/ai-sear…

2025-07-04 13:04:00

KI-Update DeepDive: Where does the AI Act stand?

The AI Act is supposed to regulate GPAI starting in August, meaning AI models such as those from OpenAI, Meta, and Google. But there are doubts about whether it will turn out that way.

https://www.

2025-08-07 02:45:55

Sydney-based Lorikeet, which provides AI agents as "customer concierges", raised a AU$54M Series A led by QED Investors at a AU$200M valuation (Paul Smith/Australian Financial Review)

https://www.afr.com/technology/ai-agent-bo

2025-09-06 10:09:16

2025-07-05 19:30:53

A look at India's push to compete in the global AI race, as the country's vast linguistic diversity poses a core challenge to building foundational AI models (Shadma Shaikh/MIT Technology Review)

https://www.technologyreview.com/2025/07/0

2025-09-05 19:31:27

This was always how it was going to end, just a financial settlement and AI companies can continue.

https://www.nytimes.com/2025/09/05/technology/anth…

2025-08-06 16:56:02

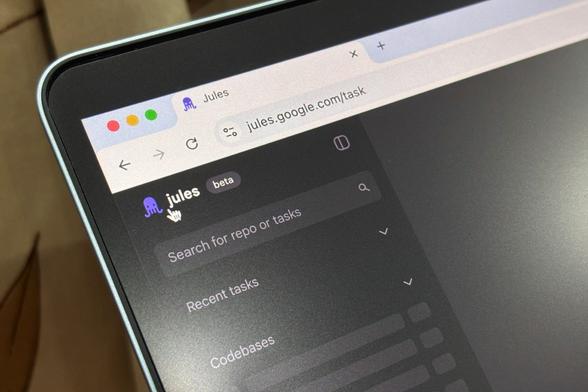

Google launches its asynchronous coding agent Jules out of beta, with a free plan capped at 15 daily tasks and higher limits for Google AI Pro and Ultra users (Jagmeet Singh/TechCrunch)

https://techcrunch.com/2025/08/06/googles-ai-coding-agent-jules-is-now…

2025-08-06 08:01:26

Wikipedia editors adopt a policy giving admins the authority to quickly delete AI-generated articles that meet certain criteria, like incorrect citations (Emanuel Maiberg/404 Media)

https://www.404media.co/wikipedia-editors-adopt-speedy-deletion-p…

2025-08-04 15:49:39

Should we teach vibe coding? Here's why not.

2/2

To address the bigger question I started with ("should we teach AI-"assisted" coding?"), my answer is: "No, except enough to show students directly what its pitfalls are." We have little enough time as it is to cover the core knowledge that they'll need, which has become more urgent now that they're going to be expected to clean up AI bugs and they'll have less time to develop an understanding of the problems they're supposed to be solving. The skill of prompt engineering & other skills of working with AI are relatively easy to pick up on your own, given a decent not-even-mathematical understanding of how a neural network works, which is something we should be giving to all students, not just our majors.

Reasonable learning objectives for CS majors might include explaining what types of bugs an AI "assistant" is most likely to introduce, explaining the difference between software engineering and writing code, explaining why using an AI "assistant" is likely to violate open-source licenses, listing at least three independent ethical objections to contemporary LLMs and explaining the evidence for/reasoning behind them, explaining why we should expect AI "assistants" to be better at generating code from scratch than at fixing bugs in existing code (and why they'll confidently "claim" to have fixed problems they haven't), and even fixing bugs in AI generated code (without AI "assistance").

If we lived in a world where the underlying environmental, labor, and data commons issues with AI weren't as bad, or if we could find and use systems that effectively mitigate these issues (there's lots of piecemeal progress on several of these) then we should probably start teaching an elective on coding with an assistant to students who have mastered programming basics, but such a class should probably spend a good chunk of time on non-assisted debugging.

#AI #LLMs #VibeCoding

2025-08-07 03:46:03

Sources: NIST withheld publishing an AI safety report and several other AI documents at the end of Biden's term to avoid clashing with the Trump administration (Will Knight/Wired)

https://www.wired.com/story/inside-the-biden-administrations-u…

2025-06-07 08:07:20

Interesting, "GPT-style models have a fixed memorization capacity of approximately 3.6 bits per parameter."

https://venturebeat.com/ai/how-much-information-do-llms-really-memorize-now-we-know-thanks-to-met…
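
For a sense of scale, here is a rough back-of-the-envelope sketch of what the quoted 3.6 bits per parameter would imply. The parameter counts are hypothetical round numbers chosen for illustration, and the function name is an assumption of this sketch; the only figure taken from the post is 3.6.

```python
BITS_PER_PARAM = 3.6  # figure quoted in the post above


def memorization_capacity_bytes(n_params):
    """Capacity implied by the quoted figure: parameters * bits each / 8 bits per byte."""
    return n_params * BITS_PER_PARAM / 8


if __name__ == "__main__":
    # Hypothetical model sizes, not tied to any specific model.
    for n_params in (1_000_000_000, 10_000_000_000):
        megabytes = memorization_capacity_bytes(n_params) / 1_000_000
        print(f"{n_params:,} parameters: about {megabytes:,.0f} MB")
```

Under that figure, a model with one billion parameters would top out at roughly 450 MB of memorized content (decimal megabytes).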

2025-07-05 12:25:53

Sources: Google told publishers it is hiring new staff to market its ad tech to big advertisers and ad agencies, signaling a renewed focus on publisher ad tech (Catherine Perloff/The Information)

https://www.theinformation.com/articles/go

2025-09-06 06:01:25

Public records show C3 AI's Project Sherlock, the company's flagship contract to speed up policing in San Mateo County, has struggled with usability issues and more (Thomas Brewster/Forbes)

https://www.forbes.com/sites/thomasbrewste

2025-09-05 21:41:16

Business Insider deleted at least 34 essays written under 13 bylines; a memo by the EIC said they were removed over concerns about authors' identity or veracity (Josh Fiallo/The Daily Beast)

https://www.thedailybeast.com/business-insider-del…

2025-07-07 00:50:30

Tokyo-based Sakana AI details a new Monte Carlo tree search-based technique that lets multiple LLMs cooperate on a single task, outperforming individual models (Ben Dickson/VentureBeat)

https://venturebeat.com/ai/sakana-ais-

2025-08-07 01:51:11

Tavily, which is helping AI agents connect to live web data, raised a $20M Series A led by Insight Partners and Alpha Wave, taking its total funding to $25M (Marina Temkin/TechCrunch)

https://techcrunch.com/2025/08/06/tavily-raises-25m-to-connect-ai-agent…

2025-08-06 17:20:58

Universal Pictures has started adding a legal warning at the end credits of its films stating that their titles "may not be used to train AI" (Winston Cho/The Hollywood Reporter)

https://www.hollywoodreporter.com/business

2025-09-06 00:30:49

Nvidia opposes the GAIN AI Act, saying the proposed US law forcing AI chipmakers to sell to US buyers first is "just another variation of the AI Diffusion Rule" (Zaheer Kachwala/Reuters)

https://www.reuters.com/world/china/nvidia

2025-08-05 14:31:01

Google DeepMind releases its Genie 3 model, which can generate 3D worlds from a prompt and has enough visual memory for a few minutes of continuous interaction (Jay Peters/The Verge)

https://www.theverge.com/news/718723/google-ai-genie-3-model-video-g…

2025-07-05 18:40:50

Investigation: preprint research papers on arXiv from 14 academic institutions in eight countries had hidden prompts telling AI tools to give positive reviews (Nikkei Asia)

https://asia.nikkei.com/Business/Techn

2025-07-07 09:01:16

France's Capgemini plans to acquire US-listed IT outsourcing company WNS for $3.3B in cash, seeking to boost its agentic AI services; WNS serves 600 clients (Bloomberg)

https://www.bloomberg.com/news/articles/2025-07…

2025-07-06 01:25:53

How Clorox is using generative AI for ad creation, brainstorming new products, and analyzing consumer reviews, as part of a five-year, $580M digital overhaul (Christopher Mims/Wall Street Journal)

https://www.wsj.com/tech/ai/clorox-ai-hidd

2025-08-04 19:55:43

Google unveils Kaggle Game Arena, a benchmarking platform where AI models compete head-to-head in strategic games, starting with a chess tournament this week (Mike Wheatley/SiliconANGLE)

https://siliconangle.com/2025/08/04/go

2025-09-05 13:40:43

Baseten, which helps companies launch open-source or custom AI models, raised a $150M Series D led by Bond at a $2.15B valuation, up from $825M in February (Allie Garfinkle/Fortune)

https://fortune.com/2025/09/05/exclusive-b…

2025-09-05 18:20:58

Roblox launches text-to-speech and speech-to-text APIs, and AI tools, including letting creators generate fully functional 3D objects from prompts, and more (Aisha Malik/TechCrunch)

https://techcrunch.com/2025/09/05/robl

2025-08-03 17:25:50

Hugging Face CEO Clément Delangue says open-source AI is vital for US innovation and the US risks losing the AI race to China if it falls behind in open source (Clément Delangue/VentureBeat)

https://venturebeat.com/ai/why-open-source-ai-became-an-american-…

2025-07-06 04:30:46

Analysts estimate AI services within Microsoft Azure generated $11.5B in revenue in the just-ended fiscal year, up 100% YoY but only ~4% of Microsoft's revenue (Wall Street Journal)

https://www.wsj.com/tech/ai/nvidia-microso

2025-08-05 21:15:53

Alibaba's Qwen releases Qwen-Image, an AI image generation model focused on accurate text rendering, with support for alphabetic and logographic scripts (Carl Franzen/VentureBeat)

https://venturebeat.com/ai/qwen-image-

2025-08-07 03:21:00

Sources: Mustafa Suleyman has been calling Google DeepMind recruits to work at Microsoft AI, offering higher pay and a more startup-like workplace than DeepMind (Wall Street Journal)

https://www.wsj.com/tech/ai/microsoft-goog

2025-07-05 00:25:54

PitchBook: AI startups received 53% of all global VC dollars invested in H1 2025, rising to 64% in the US, and accounted for 29% of all global startups funded (Dan Primack/Axios)

https://www.axios.com/2025/07/03/ai-startups-vc-investments

2025-09-05 00:01:45

Amazon, Microsoft, Google, Code.org, IBM, and other companies pledged new commitments for AI in education as part of a White House event hosted by Melania Trump (Ashley Gold/Axios)

https://www.axios.com/2025/09/04/melania-trump-ai-in-education-the-robots-ar…

2025-09-04 10:05:48

Tencent releases HunyuanWorld-Voyager, an open-weights AI model that generates 3D-consistent video sequences from a single image, trained on 100K video clips (Benj Edwards/Ars Technica)

https://arstechnica.com/ai/2025/09/new-ai-model-turn…

2025-08-07 10:16:19

Internal OpenAI code suggests a tiered GPT-5 rollout: free users get basic GPT-5, Plus users get advanced reasoning, and Pro gets research-level performance (Alexey Shabanov/TestingCatalog)

https://www.testingcatalog.com/leaked-details-revea…

2025-09-04 16:25:41

A profile of Anthropic's Frontier Red Team, which is unique among AI companies in having a mandate to both evaluate its AI models and publicize findings widely (Sharon Goldman/Fortune)

https://fortune.com/2025/09/04/anthrop

2025-07-06 13:35:43

2025-08-06 17:10:58

Google unveils a Guided Learning mode within its Gemini chatbot to help students and commits $1B over three years to AI education and training efforts in the US (Mark Sullivan/Fast Company)

https://www.fastcompany.com/91380890/google-unveils-n…

2025-09-06 09:45:50

Thailand, aiming to become the second-largest PCB production hub, is witnessing a tech manufacturing boom as the PCB supply chain shifts from China and Taiwan (Nikkei Asia)

https://asia.nikkei.com/business/technology/…

2025-08-06 23:31:08

Rillet, which is building AI ledger software to automate accounting tasks, raised a $70M Series B co-led by a16z and Iconiq, a source says at a ~$500M valuation (Aditya Soni/Reuters)

https://www.reuters.com/technology/ai-acco

2025-09-06 03:26:06

Alibaba debuts Qwen3-Max-Preview, its largest AI model with over 1T parameters, showcasing strong benchmark performance; the model is not open source (Carl Franzen/VentureBeat)

https://venturebeat.com/ai/qwen3-max-arrives-in-preview-with-1-tril…

2025-08-05 13:11:08

Patreon CEO Jack Conte says the platform paid out $10B to creators since its 2013 founding, creators now get $2B annually, and there are 25M paid memberships (Sara Fischer/Axios)

https://www.axios.com/2025/08/05/patreon-10-billion-creator-economy-ai

…

2025-09-05 15:45:51

OpenAI acqui-hires the team behind Alex Codes, a Y-Combinator-backed startup whose tool lets developers use AI models within Apple's development suite Xcode (Ivan Mehta/TechCrunch)

https://techcrunch.com/2025/09/05/openai-hires-the-team-b…

2025-09-03 18:25:57

Scale AI sues a former employee and Mercor, his current employer and one of Scale's key competitors, for allegedly stealing more than 100 confidential documents (Hayden Field/The Verge)

https://www.theverge.com/ai-artificial-int

2025-08-05 00:45:41

Google agrees with two power utilities to pause non-essential AI workloads during peak demand or adverse weather events that reduce supply (Tobias Mann/The Register)

https://www.theregister.com/2025/08/04/google_ai_datacenter_grid/