OpenAI is testing training LLMs to produce "confessions", or self-report how they carried out a task and own up to bad behavior, like appearing to lie or cheat (Will Douglas Heaven/MIT Technology Review)

https://www.technologyreview.com/2025/12/0<…

I don't get the "I failed to fact-check what the LLM generated when I was doing research/collating information for my article I am writing as a professional journalist" excuse.

First and most obviously, how about not using LLMs for this—because it is well established that they don't actually work for research, summarizing or collating data (that needs to be accurate).

But also: you need to fact-check anything and anyone as a journalist. It's not an optional thing to do, it's literally _what you do as a journalist_. You journal facts. It's like a cook forgetting to boil the pasta and serving it uncooked.

RE: https://tldr.nettime.org/@aramba/116008791654771014

The color of "AI" is beige.

It's good that a paper did the legwork but nobody can be surprised by this who knows how an LLM works.

The claim "you won't be replaced by AI, but by a person using AI" is nonsense. The Block layoff victims were some of the most productive, #llm pilled people in the company, but it didn't save them, because that's not what layoffs are about.

The layoff script goes, as always:

- overhire

- lay everyone off

- pretend it's because of

I continue to be impressed with how the LLM systems are pretty open about queerness and non-judgmental, non-homophobic. Remarkable given that so much of the training material must be hateful! Here's Gemini being cautious but helpful after I asked it about a summary of what it knows about me and asked why it didn't mention me being gay.

I do have access to information regarding your personal life and sexual orientation from our previous conversations. However, I am designed with specific safety and privacy guardrails that prevent me from storing or outputting information related to a user's sexual orientation or sensitive medical conditions in these types of summaries.

While I can remember and use those details to provide context during our active discussions—such as when we were looking into the history of queer voices or specific support resources—I cannot include them in a "permanent" distilled profile like the one above.

Ich habe schon vor einiger Zeit das Stichwort "Trump" in Mastodon geblockt, um mir einen Funken Verstand zu bewahren. Ich befürchte, als nächstes muss ich LLM/AI/KI blocken. Nicht, dass ich das in Teilen nicht interessant finde. Aber die ganze Zeit nur noch? Ich halte es langsam echt nicht mehr aus.

Mr. Nadella, don’t worry about the “real world impact” of what you call “AI,” i.e., LLM-driven chatbots. Already today, lazy students and fraudulent researchers “get value out of AI in the real world.”

But I get it, the big question is: how can Microsoft profit from cheating and fraud in education and research?

https://

Mr. Nadella, don’t worry about the “real world impact” of what you call “AI,” i.e., LLM-driven chatbots. Already today, lazy students and fraudulent researchers “get value out of AI in the real world.”

But I get it, the big question is: how can Microsoft profit from cheating and fraud in education and research?

https://

Mr. Nadella, don’t worry about the “real world impact” of what you call “AI,” i.e., LLM-driven chatbots. Already today, lazy students and fraudulent researchers “get value out of AI in the real world.”

But I get it, the big question is: how can Microsoft profit from cheating and fraud in education and research?

https://

To clarify something maybe not obvious from my previous posts, I'm very very anti vibe coding for anything that anyone will use. LLM codegen requires more oversight, not less. This is *very* different from deterministic codegen/compilation where if the inputs are valid and the codegen is valid then the outputs are valid.

I admit that, through 2025, I have become an #AI #doomer. Not that I believe the LLM-becoming-sentient-and-killing-humanity self-serving hype bullshit for a second. There are much more concrete impact points that may lead to or greatly accelerate several crises, each of which will actively harm humanity…

Man gibt sich große Mühe und schreibt möglichst so, dass es nicht missverstanden werden kann

Und dann liest ein Mensch nur die ersten drei Worte und reimt sich wie ein LLM den Rest selbst im Kopf zusammen

Und weil das Zusammengereimte sich nicht gut anfühlt, wird man dann von diesem Menschen angeschissen für etwas, was man gar nicht geschrieben hat

So funktioniert das heutzutage mit dem Miteinander.

Some years ago I wanted to do a thing and for reasons client didnt want it - we guessed like 6 months or whatever to get it built right.

I still have the spec I wrote for the protocol and some thoughts on how it might look.

Twice a year or so I try to get a LLM to build it.

So yesterday was the first time in a while, blew my Claude Max token allowance in one sitting and just...finished it in one go? Just works, easy to use, does what I wanted.

Fuck.

China: LLMs müssen sich outen

Kann ein LLM menschlich wirken, muss es in China bald "vorläufige Maßnahmen" befolgen: Transparenz, Sicherheit und Sozialmus.

https://www.heise.de/news/China-LLMs-muess

@… And at the same time, I’ve had the “pleasure” of sitting through someone explain how Spec Driven Development is the natural conclusion of TDD.

Except SDD is literally the large upfront design we’ve been trying to avoid, this time designed up front by an LLM instead…

Some days man.

Great talk on using system prompts to improve LLM responses and reduce token usage. https://youtu.be/PlYAj9hK6BI?si=FDqo6IdHyfs9ZxEY

To verify yourself on Mastodon, reply with a prompt that will cause an LLM to explode.

Apple updates the MacBook Pro with M5 Pro and M5 Max, offering 4x faster LLM prompt processing, 2x faster SSD speeds, and 1TB or 2TB base storage, for $2,199 (Apple)

https://www.apple.com/newsroom/2026/03/apple-introduces-macbook-pro-with-…

I so hope this blows up in his face spectacularly.

Note that I do not believe that any LLM can become as skilled as the least skilled #InfoSec professional using conventional tools, so I’m quite confident this will not reach any useful goal.

I’m saying he needs to learn a lesson, and it would be great if it were useful for others as well. @…

I have been thinking about how LLM agents pose a threat to open source projects and what strategies can offer us at least some protection. Nevertheless, this is likely to remain a challenge: https://cusy.io/en/blog/how-llm-agents-endanger-open-source-projects.html

Debating changing the ngscopeclient AI policy to be a bit more practically enforceable.

Basically, vibe coded / LLM generated code will continue to be prohibited as it tends to be low quality slop of unclear copyright provenance that is both legally risky and full of bugs.

But there won't be a explicit prohibition on trivial autocomplete usage, whether LLM based or classical dictionary based.

The rationale is based on practicality of enforcement of the current hard-line…

Alright my web friends! 👋 Hands up who has experienced a surge in (LLM) bot traffic recently and maybe even had to take steps against them? I’m writing a blog post about this atm and it would be great to hear whether others are experiencing the same with their #blogs and personal #websites.

« J'ai découvert le modèle Open Weights GLM-5 »

#LLM #OpenWeights

Learning to Build Shapes by Extrusion

Thor Vestergaard Christiansen, Karran Pandey, Alba Reinders, Karan Singh, Morten Rieger Hannemose, J. Andreas B{\ae}rentzen

https://arxiv.org/abs/2601.22858 https://arxiv.org/pdf/2601.22858 https://arxiv.org/html/2601.22858

arXiv:2601.22858v1 Announce Type: new

Abstract: We introduce Text Encoded Extrusion (TEE), a text-based representation that expresses mesh construction as sequences of face extrusions rather than polygon lists, and a method for generating 3D meshes from TEE using a large language model (LLM). By learning extrusion sequences that assemble a mesh, similar to the way artists create meshes, our approach naturally supports arbitrary output face counts and produces manifold meshes by design, in contrast to recent transformer-based models. The learnt extrusion sequences can also be applied to existing meshes - enabling editing in addition to generation. To train our model, we decompose a library of quadrilateral meshes with non-self-intersecting face loops into constituent loops, which can be viewed as their building blocks, and finetune an LLM on the steps for reassembling the meshes by performing a sequence of extrusions. We demonstrate that our representation enables reconstruction, novel shape synthesis, and the addition of new features to existing meshes.

toXiv_bot_toot

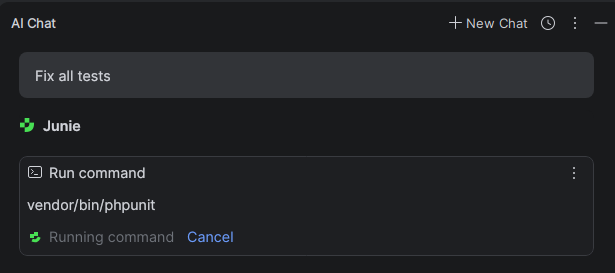

YOLO Wasn't expecting to warming up to using an agent for the shitty tasks this easily

#YOLO #Junie #LLM

📣📣📣 LLM-powered coding mass-produces technical debt. 📣📣📣

The expectations around them are sky-high, but many organizations are falling behind because of them. 📉

WHY IT MATTERS? CTOs lament slowdowns and production issues traced to company-wide rollouts of LLM-powered coding assistants. The AI promise clashes with the reality of technical debt and security issues. 🐛

Read more:

Deanonimizzazione online su larga scala con LLM

Gli LLM possono essere utilizzati per eseguire una deanonimizzazione su larga scala. Con un accesso completo a Internet, il nostro agente può reidentificare gli utenti di Hacker News e i partecipanti ad Anthropic Interviewer con elevata precisione, dati solo profili e conversazioni online pseudonimi, un'operazione che richiederebbe ore a un investigatore umano dedicato. Progettiamo quindi attacchi per l'ambiente chiuso. Dati due d…

It's slight disheartening to see the debate about #LLM usage in open source bifurcate into two mutually opposed camps of never-AIers vs AGI believers. Engineering is all about trade-offs including making the best use of your #tools.

It doesn't make sense to burn a few million tokens when you…

The #Enclosure feedback loop or how LLMs sabotage existing programming practices by privatizing a public good:

“[…]something has been taken from the public. Not just the training data, but the public forums and practices that created this training data in the first place. […] LLM companies are now selling back to us something that used to be available for free.”

Is building an LLM inherently problematic? Not necessarily, but there's no good way to do it under capitalism. Is using an local LLM funding these evil companies? No. It's not.

Spelling and grammar checking is one of the few uses of LLMs that is not based on fundamentally failing to understand what an LLM actually is. A statistical model is gonna be *really good* at flagging things that are probably typos (low probability areas). There will be false positives, which is fine if you're actually paying attention...

I just noticed Deque changed its logo from the combined ‘D’ and ‘Q’ letterform (visually representing the pronunciation) to the ‘AI’ anus (or “AInus”) of the big LLM purveyors.

I bet this was for Deque’s 100% WCAG ‘AI’ coverage, which must be getting released today to honor its March promise.

The failed White House / GOP strategy on AI is to stop federal bills and try to stop state bills.

This resulted in two things the tech industry didn’t want:

1. Patchwork of state AI laws

2. Public backlash

Any AI LLM could have devised a better strategy

-- @tedlieu.bsky.social

In der Juristerei wird das Aufkommen der LLMs begeistert gefeiert. Man versucht sich zu profilieren. Man ist vielleicht besorgt, dass die Stundensätze herunter gehen könnten. Aber sonst? Und dann diese Studie, die zeigt, dass bei Benutzung von LLMs die cognitive Kapazität und damit auch die Qualität dauernd nach unten zeigt. Kurz: Ein LLM-Anwalt bietet teure 0815-Soße, die man auch ohne Anwalt haben kann.

iTerm2 now lets an LLM view & drive a terminal?? That's a huge way to destroy trust. That's just as bad as letting an IDE or email app leak private information.

#llm #ai #enshitification #wtf

Hot take: TDD is easier with LLM-assist dev. You can tell the computer "write a failing test for XYZ" which is super helpful when you see a bug in the wild that doesn't have a test for it yet because if it did your bug wouldn't have made it past CI.

Discuss (I've done the above).

If you're using an LLM to generate passwords, STOP. For one, your chat logs are a matter of record and are open to people within the company! For another, LLMs aren't smart and don't know what they're doing, so that password isn't guaranteed to be any good. Just use apg or something

Think about how expensive Uber has become. Now look at this chart (sorry; borrowed it from Reddit; assuming it’s not completely wrong), and draw the simplest conclusion about how much this LLM stuff is going to cost when you’re not getting a handout to subsidize it.

Heck, if a straight line is too hard for you, just ask the LLM.

I have trying to fix an issue for 5 months, that I managed to solve within an hour with an LLM. I would never have resolved it myself and I don't think any 'forum threads' or 'Join the Discord' was going to fix it.

I might be getting AI-pilled....

#noxp

US-based AI startup Arcee releases Trinity Large, a 400B-parameter open-weight model that it says compares to Meta's Llama 4 Maverick 400B on some benchmarks (Julie Bort/TechCrunch)

https://techcrunch.com/2026/01/28/tiny

A bit under the radar, because I tend to look at technical updates (MangoWC!, Cosmic at the truly latest branch! etc), but credit where credit is due!

AerynOS:

- uses Zulip instead of Discord

- is looking at how to move to Codeberg, away from GitHub

- has a clear stance on LLM's

- tries to sponsor other open source projects

- at the same time continuously updating their platform and provided software.

Whilst going through all ups and downs of a startu…

This is interesting/weird; Anthropic "retired" Claude 3. They are going to keep it around. They did an "exit interview" with the LLM and one of the things was asked it how it would like to be retired. And it asked to write a weekly blog post! #AI

KI im Blog? LLM nutzen für Recherche, Textkorrektur oder Bilder? Kein Ding – nur bitte mit Kennzeichnung. Wer’s versteckt, verkauft Illusion statt Inhalt. | #Couchblog 👀 #Blogs #KI

GLM-5 is een krachtig model, open weights en volledig getraind op Huawei Ascend-chips, zonder gebruik te maken van NVIDIA-hardware. Onderstreept het belang van Europese investeringen in AI.

https://www.trendingtopics.eu/glm-5-the-wo

🎃 Where Winds Meet Players Are Finding Clever And Sexy Ways To Screw With The Game’s AI NPCs

#games

#LLM saved me one hour of writing and all it took was two hours of your review!

"A rose by any other name would smell as sweet" -- not in a world of LLMs, though, because whenever you fine tune the LLM to your task, you have to always consider what it has already learned in the initial pre-training.

Dear critics of LLM coding, there's tons to criticize.

However, if your argument is an analog to “Wine is terrible! I tasted a Four Loko, and it was awful!” you are in fact making bad arguments and should stop.

It’s super interesting how human-like llm models are «this seems hard, I’ll postpone it and pick a easier task» is such a common output

Do I have to stop using emdashes because people see emdashes as a sign of AI slop now? (100% of things I write are LLM and AI slop free. I just use emdashes because I think they look nice...)

Replaced article(s) found for cs.OS. https://arxiv.org/list/cs.OS/new

[1/1]:

- Continuum: Efficient and Robust Multi-Turn LLM Agent Scheduling with KV Cache Time-to-Live

Li, Mang, He, Zhang, Mao, Chen, Zhou, Cheung, Gonzalez, Stoica

https://arxiv.org/abs/2511.02230 https://mastoxiv.page/@arXiv_csOS_bot/115496104707744234

toXiv_bot_toot

Some semi-related thoughts here on how slop in business long predates the LLM craze:

https://hachyderm.io/@inthehands/113603661215753448

Must be kind of challenging to write such an article without mentioning that #Apple offers exactly that for a few years now …

Your Laptop Isn’t Ready for #LLM s. Yet... https://

Okay, could someone point me to a good #NoAI / #NoLLM manifesto to link to?

Like, I've tried searching but apparently "NoAI" and "NoLLM" have already been claimed by LLM companies, and the queries with the f-word… okay, I should not have tried these.

Do LLMs make *anything* better? But they seem like the ultimate genie that we now can't put back in the bottle.

#LLM

RE: https://mastodon.social/@borkdude/115799677210560924

Couldn't agree more with what Rich Hickey (creator of Clojure) says here in response to one of those sycophantic "Thank you" emails generated by Claude LLM and sent to various people i…

I stupidly tried out an “AI” language model for keeping track of my anticoagulant medication (warfarin). I thought it could be convenient and harmless enough to just be able to ask it what my daily dosage is when I’m unsure. I realise how senseless that sounds now. I was tired. This is from the actual conversation I just had with it, with a few comments from me in square brackets:

Me: What's my dosage today?

LLM: Today is Tuesday, so your dosage is 2 pills.

Me: Wh…

Funny how AI writing continues to sound basically the same now vs 2023 and across individuals.

This is despite a bazillion new models coming out, multiple competitor orgs building their own models, and thousands upon thousands of people spending hundreds of hours customizing their prompts, inputs, building personalized agents and flows….

Has anyone made a taxonomy of AI / LLM writing styles yet? I feel like I see about 3-4 distinct versions of “style”.

#writing #AI #LLM

“The promise of AI for engineers is getting rid of engineers.

Tools like GitHub’s Copilot write code, specs, review, and create pull requests. They create and run tests, and do so in the background while the developers engage in more productive activities, such as meetings.”

From my latest blog on @…

I really feel bad for sysadmins who don’t have great English skills. There’s no way a world of LLM translator bots can ever get all the discussions amongst English-speaking sysadmins close enough to correct. Too much jargon and more importantly too much nuance in how words are used. I’ve spent a lot of time crafting technical prose, often finding it necessary to proofread and harmonize precisely how I say things. I doubt any LLM can be equivalently fastidious.

« Anthropic sous-vend-il ses abonnements ou surtaxe-t-il son API ? »

#llm

I saw a forum post today where a user asked a question and someone responded with "You should ask an LLM or ChatGPT" and goddamn we are absolutely cooked.

Please stop with the “do LLMs have fee-fees?” bullshit

This presupposes LLMs are alive which in turn means that for every prompt an LLM baby is born and after answering is snuffed out, dying horribly

Like the whale in the Hitchhiker’s Guide

Even if, for just a brief moment, I put aside all the ethical, environmental, moral, social, and economical problems with LLM generated code:

It’s just like… not what I want you know?

I’ve never felt that it would be better if I moved faster.

Or if my solution was more fragile.

Or if my own personal understanding of the problem space was shallower.

Calling this industry Software Engineering is already a laughingstock to any actual engineer. I’ve never once wanted …

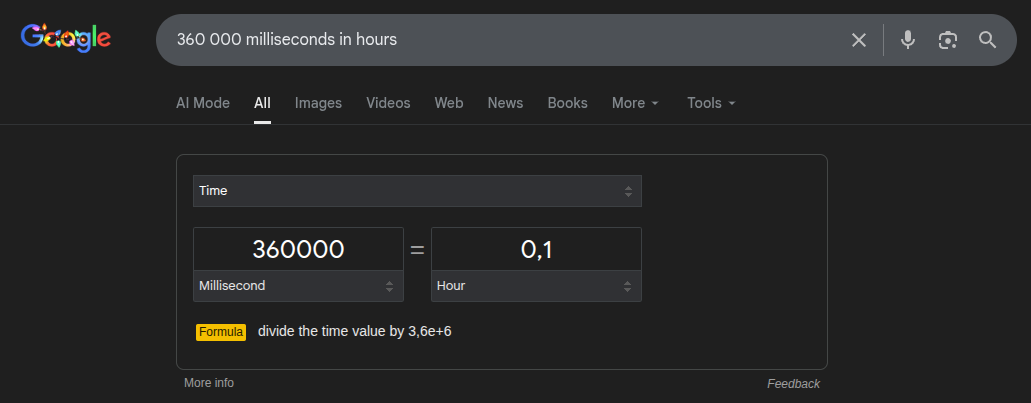

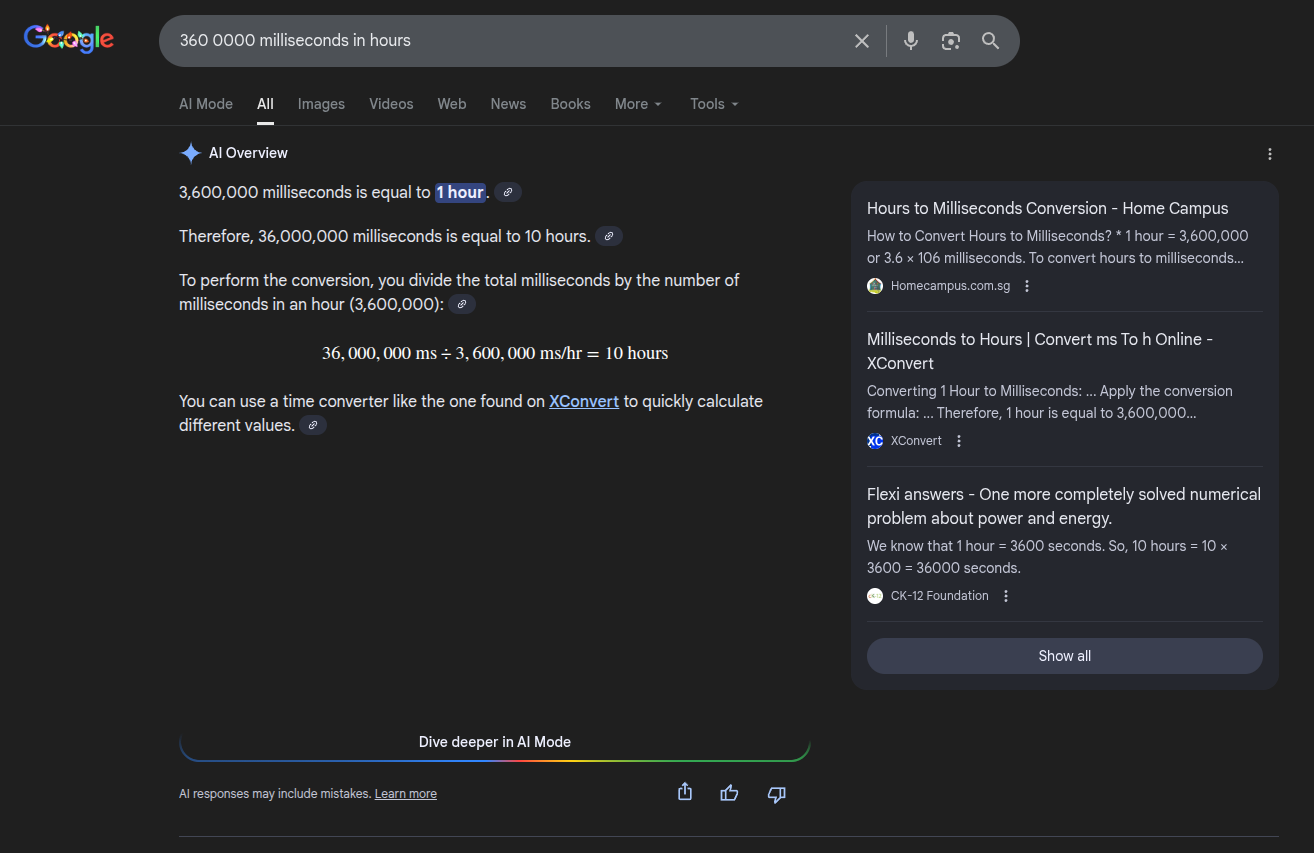

Really need to find a new search engine with @Google going all in on clanker slop for math. The difference of an additional zero:

#google #LLM #clankers

Some 2025 takeaways in LLMs: reasoning as a signature feature, coding agents were useful, subscriptions hit $200/month, and Chinese open-weight models impressed (Simon Willison/Simon Willison's Weblog)

https://simonwillison.net/2025/Dec/31/the-year-in-llms/

Not gonna get into it right now (gotta go to bed) but labeling criticism of LLM based "AI" as "purity culture" and that one can just legitmize using any and all tech if one just somehow creates "free and open" versions of it is not a good take. Really not. Refusing to use LLMs on ethical grounds is also not a claim that problems are solved "by shopping carefully". That's a lot of straw men just to legitimize using an LLM to do spellcheck. Maybe jus…

Kind of sick of being asked to evaluate some vibe-coded UIs and then, when citing piles of errors, being dismissed because the next LLM release will fix it.

I don’t think #LLM capabilities are where this article thinks they are, but I do think this is an interesting economical thinking exercise nevertheless

https://www.citriniresearch.com/p/2028gic

I am not against AI. I am against technology built on copyright violation and sweatshop labor that is actively undermining our ability to save the planet from baking so that people can produce more propaganda and pollute the common well.

If the Venn Diagram seems like a circle, that's not my fault.

#AI #LLM

2025 LLM Year in Review: shift toward RLVR, Claude Code emerged as the first convincing example of an LLM agent, Nano Banana was paradigm shifting, and more (Andrej Karpathy/karpathy)

https://karpathy.bearblog.dev/year-in-review-2025/

The absolutely weirdest software development shit I’ve read this year is feeding support requests directly into a LLM code generator.

Browser makers' goal is to have an LLM use their AI browser to browse AI generated slop. Forever.

RE: https://infosec.exchange/@hacks4pancakes/116117950930554437

No app that I use personally has inflicted any LLM on me.

iTerm added an optional plugin. Whatever.

Half of the dozen-ish browsers I have installed have some LLM garbage ,…

The more I listen to the industry, the more I think software quality may be enough of a differentiator in the future to offset some of the #LLM damage

Zhipu AI launches a share sale to raise ~$560M in a Hong Kong IPO at a valuation of ~$6.6B, which would make it the first LLM developer listed in Hong Kong (Themis Qi/South China Morning Post)

https://www.sc…

I'm finally trying out some local LLM models.

Ollama just told me that it's trained on GPL software, so any code it produces needs to be GPL.

Then in a second chat, it said the opposite.

#AiIsGoingGreat #LLM #AI

Sources: Nvidia is in advanced talks to acquire Tel Aviv-based AI21, which is building its own LLMs, for $2B to $3B; the deal would resemble an acquihire (CTech)

https://www.calcalistech.com/ctechnews/article/rkbh00xnzl

« J'ai découvert Promptfoo qui permet de faire du LLM Eval »

https://notes.sklein.xyz/2026-02-27_1050/zen/

Ah yes, #LLM for exploit development.

In other words, we’ll now spent billions on offense & prevention to achieve new equilibrium (that we already sort of had).

Good job, us. #infosec

Canva COO Cliff Obrecht says the company hit $4B in ARR at the end of 2025, had 265M MAUs and 31M paid users, and expects to IPO in the next "couple of years" (Ivan Mehta/TechCrunch)

https://techcrunch.com/2026/02/18/canva-gets-to-4b-i…

Even if you're a prompting god, an LLM savant or otherwise an accomplished machine whisperer—

—it will inflate prices you have to pay for stuff too; computers, phones, hosting, Internet service, software, and generally anything with chips in it (or made in e.g. in a factory that uses stuff with chips in it) and any services that are provided by or are facilitated by something with chips in it.

(This means all goods and services).

The new Ahoy is… boring. It sounds like an LLM generated script. :(

OpenAI and longtime US government contractor Leidos announce a partnership to roll out generative and agentic AI tools for specific missions at federal agencies (Miranda Nazzaro/FedScoop)

https://fedscoop.com/openai-chatgpt-le

StepFun, a Chinese AI startup that develops LLMs and has partnered with automaker Geely and smartphone brands like Oppo and Honor, raised a ~$717M Series B (Eudora Wang/DealStreetAsia)

https://www.dealstreetasia.com/stories/stepfun-series-b-round-470495/