2026-01-15 17:00:05

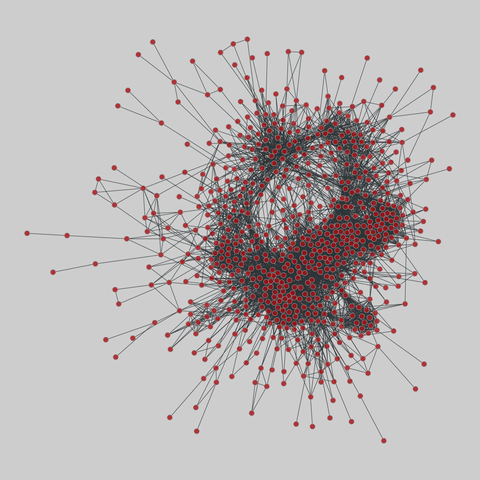

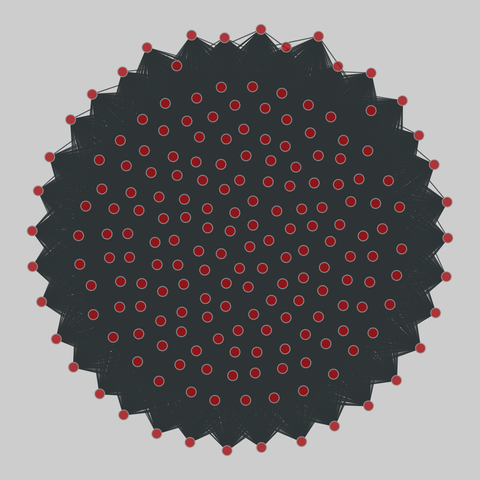

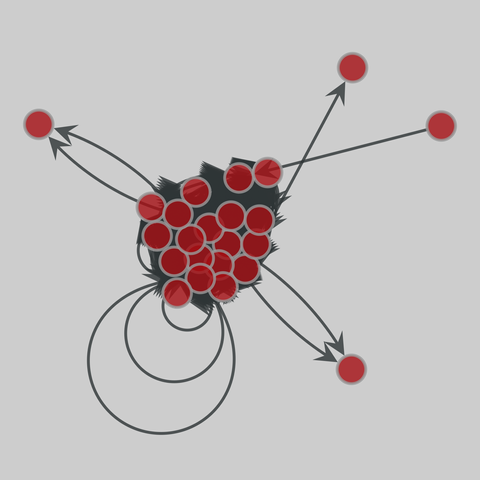

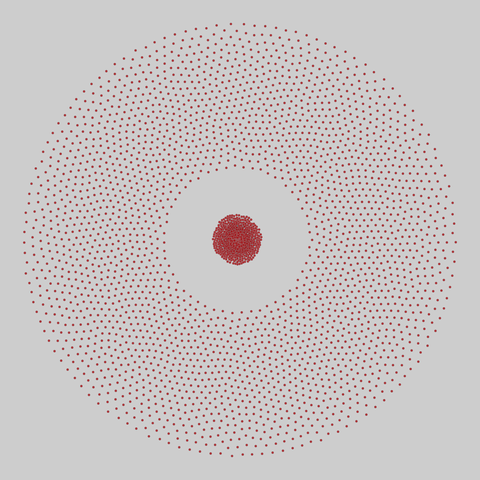

wiki_science: Wikipedia Map of Science (2020)

A network of scientific fields, extracted from the English Wikipedia in early 2020. Nodes are Wikipedia pages representing natural, formal, social and applied sciences, and two nodes are linked if the cosine similarity of their page contents is above a threshold. See <http://www.s…
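The linking rule above (connect two pages when the cosine similarity of their contents exceeds a threshold) can be sketched as follows. This is a minimal illustration, not the procedure used to build wiki_science. It assumes each page is represented as a lowercase bag-of-words count vector. The threshold of 0.4, the function names `term_vector`, `cosine_similarity` and `similarity_edges`, and the sample pages are all hypothetical, because the description does not state the text representation or the threshold value.

```python
import math
import re
from collections import Counter
from itertools import combinations


def term_vector(text):
    # Hypothetical representation: raw counts of lowercase alphabetic tokens.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine_similarity(a, b):
    # Dot product over shared terms, divided by the product of the vector norms.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def similarity_edges(pages, threshold):
    # pages: dict mapping page title -> page text (hypothetical input format).
    # Returns undirected edges (u, v), u < v, whose similarity exceeds threshold.
    vectors = {title: term_vector(text) for title, text in pages.items()}
    edges = []
    for u, v in combinations(sorted(vectors), 2):
        if cosine_similarity(vectors[u], vectors[v]) > threshold:
            edges.append((u, v))
    return edges


# Illustrative toy pages, not real Wikipedia content.
pages = {
    "Physics": "physics studies matter energy and motion",
    "Chemistry": "chemistry studies matter and its reactions",
    "Sociology": "sociology studies society and social behavior",
}
print(similarity_edges(pages, threshold=0.4))  # [('Chemistry', 'Physics')]
```

Here Physics and Chemistry share three of six terms (similarity 0.5), while each shares only two with Sociology (about 0.33), so only one edge clears the assumed threshold. A weighted representation such as TF-IDF could be substituted in `term_vector` without changing the thresholding step.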