Forty years ago, 21 people gathered for the first meeting of what became the Internet Engineering Task Force or #IETF. Every day billions of people use the open standards and technologies developed in the IETF. And nearly 8000 volunteer IETF participants from around the world collaborate in more than 100 working groups evolving those open standards and making the Internet work better!

So I’ve got a new gig of sorts. I’ll be a volunteer photographer for the city parks system. The task will be to take pics of folks participating in various programs in the parks and natural areas run by other volunteers, to try and capture that attendees are having fun, especially the kids. The difficulty level is that I’ve never liked photographing people. Go out of my way to keep them out of shots. I’ve done a couple events now, and still just feel intrusive. Hope it gets easier.

Quite tempted to occupy a section of Tony Blair’s property and build a house on it. You know, so he can really understand the task he has so gleefully taken on.

Obviously, I wouldn’t really do this. I’d be stuck with dreadful neighbours.

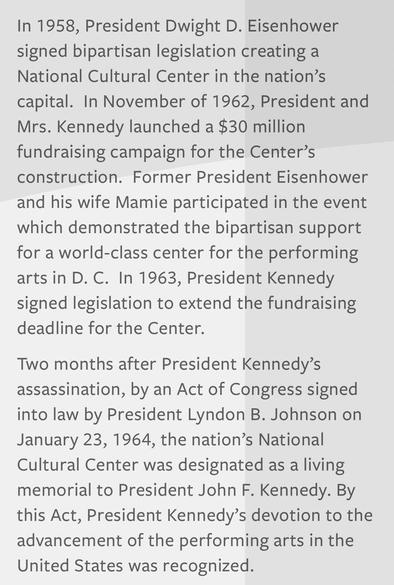

Gifts to the US President are generally considered to be the property of the United States rather than of the president as a person or the president as an office.

So it seems to me that the US National Archives is now the proud owner of the physical object of a Nobel Peace Prize.

(Of course, el cheato will try to retain physical possession and we will have to pry it from his cold dead fingers, a task that, I suspect, many of us would find rather appealing.)

In his EO, Trump orders US AG Pam Bondi to create an "AI Litigation Task Force" that meets regularly with David Sacks to challenge "inconsistent" state AI laws (Igor Bonifacic/Engadget)

https://www.engadget.com/ai/trump-orders-c

Today I made a 2-line change to a file on GitHub. Copilot suggested a spectacularly incorrect summary of my change for the commit message, so I deleted it, finished the commit, and asked Copilot “how do I disable Copilot commit message suggestions.” THAT task was in its wheelhouse. #AIslop

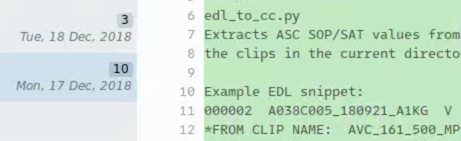

Oh well, our upcoming client doesn't provide cc files for each shot. Instead, we need to extract the grading values from an EDL file. Fortunately, I already wrote a script to do that a few years ago for another show.

The time to write a script might be more than what it takes to do the task manually (here it would be copying values from a text file to an xml file). But it pays off if you have to repeat the task. Even if that is 7 years later.
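For anyone curious what such a script might look like, here is a minimal sketch, not the script mentioned above. It assumes the EDL carries the grade as ASC CDL comment lines (`* ASC_SOP (...)(...)(...)` and `* ASC_SAT ...`) under each event and that one `ColorCorrection` element per event is wanted; the function names and the use of the reel name as the `id` are hypothetical choices.

```python
import re
from xml.sax.saxutils import quoteattr

# Assumed EDL layout: an event line starting with the event number and reel,
# followed by comment lines such as
#   * ASC_SOP (1.0 1.0 1.0)(0.0 0.0 0.0)(1.0 1.0 1.0)
#   * ASC_SAT 1.0
EVENT_RE = re.compile(r"^(\d+)\s+(\S+)")
SOP_RE = re.compile(r"\*\s*ASC_SOP\s*\(([^)]*)\)\s*\(([^)]*)\)\s*\(([^)]*)\)")
SAT_RE = re.compile(r"\*\s*ASC_SAT\s+(\S+)")


def parse_edl(text):
    """Return one dict per EDL event that carries an ASC_SOP comment."""
    grades, current = [], None
    for line in text.splitlines():
        line = line.strip()
        if m := EVENT_RE.match(line):
            current = {"event": m.group(1), "reel": m.group(2)}
        elif current is not None and (m := SOP_RE.search(line)):
            # Normalise whitespace inside each triple.
            current["slope"], current["offset"], current["power"] = (
                " ".join(g.split()) for g in m.groups()
            )
            grades.append(current)
        elif current is not None and (m := SAT_RE.search(line)):
            current["sat"] = m.group(1)
    return grades


def to_cc(grade):
    """Render one grade as a ColorCorrection XML fragment."""
    sat = grade.get("sat", "1.0")  # assume neutral saturation if absent
    return (
        f"<ColorCorrection id={quoteattr(grade['reel'])}>\n"
        "  <SOPNode>\n"
        f"    <Slope>{grade['slope']}</Slope>\n"
        f"    <Offset>{grade['offset']}</Offset>\n"
        f"    <Power>{grade['power']}</Power>\n"
        "  </SOPNode>\n"
        "  <SatNode>\n"
        f"    <Saturation>{sat}</Saturation>\n"
        "  </SatNode>\n"
        "</ColorCorrection>\n"
    )
```

Real EDLs vary between vendors, so the regular expressions would likely need adjusting per show.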

What if our networks could do more than just carry data?

In December, the Fibre Sensing Task of the GÉANT (GN5-2) Project, together with SURF @… turned 57 km of live optical fibre into a sensor.

The result? Detecting everything from trams to a plane landing at Amsterdam Airport Schiphol.

🎥 Watch Chris Atherton walk us through the experiment.

I noticed late last night, by way of discovering pooling water under the sink, that the garbage disposal had gone belly up.

And so today’s task is to replace it, which I don’t really relish doing, but:

"…so do all who live to see such times. But that is not for them to decide. All we have to decide is what to do with the time that is given us." -Gandalf the Grey

And so, I’m off to the hardware store, having accepted the conditions are what they are and deciding ho…

If you’re vibe-coding MVPs (or pre-MVPs?) that you have no intention of taking further and instead releasing them as proofs of concept and moving on to your next soon-to-be-abandoned MVP, understand that you’re a patsy. A shill.

You’re suggesting the LLM is up to the task when you’re really admitting it failed and you’re too lazy to take it further.

Less critical folks won’t understand that and shitty managers pre-disposed to justifying their sunk costs will reference it as a win…

Nice blog in the discussion about AI & coding,

"AI can replace most of programming, but programming isn’t the job.

Programming is a task. It’s one of many things you do as part of your work. But if you’re a software engineer, your actual job is more than typing code into an editor."

https://<…

I guess the executive power grab attempts in SF government are taking multiple forms. Not only the Commission Streamlining Task Force, but Mayor Lurie and Board President Mandelman have also created this "charter reform working group," where there's no public comment and major city departments are excluded.

https://

Reading Paul Krugman talking with Adam Tooze. Re. € crisis in 2012: "Paul De Grauwe, Belgian economist said, “this is a panic, the numbers don’t really justify this.” Mario Draghi actually agreed and said three words, “whatever it takes,” that the ECB will stand behind that and will make sure the countries don’t run out of cash. The crisis just evaporated like nothing. *Most effective central bank statement in the history of humanity*, I’m sure." Here is Draghi's speech:

For balance, this is my favourite ever Dilbert strip, back from when it was good.

⚙️ Print mode runs by default for non-interactive execution, interactive mode enabled via .i. filename marker (task.i.claude.md), _interactive frontmatter key, or -_i CLI flag

🌐 Remote URL imports cached at ~/.mdflow/cache/ with 1-hour TTL, use --no-cache to force fresh fetch, automatic .env file loading from markdown file directory with support for .env.local, .env.development, .env.production

If He Builds It, Tear It Down -- The foremost task occupying the attention ... (Hamilton Nolan/How Things Work)

https://www.hamiltonnolan.com/p/if-he-builds-it-tear-it-down

http://www.memeorandum.com/251213/p38#a251213p38

In the legal profession, the arrival of LLMs is being celebrated enthusiastically. People are trying to make a name for themselves. Some may worry that hourly rates could go down. But apart from that? And then there is this study showing that, when LLMs are used, cognitive capacity, and with it quality, keeps trending downward. In short: an LLM lawyer serves up expensive run-of-the-mill mush that you can also get without a lawyer.

Multiple responses from DeepSeek's namesake chatbot confirm that the startup has expanded the context window of its flagship AI model from 128K tokens to 1M (Ben Jiang/South China Morning Post)

https://www.scmp.com/tech/tech-trends/arti…

I've just finished a modestly onerous administrative task.

It led me to think about effort and the 3 legs of my job: research, teaching and admin. While there are intrinsic reasons to do research and teaching to the best of your ability, for admin "good enough" is enough. Focus on efficiency not excellence, where efficiency includes making things work, not causing extra hassle in the medium term.

I rewrote a data analysis pipeline, moving it from #python to #julialang . I am now in love with the threading support in Julia.

The task is very parallelizable but each thread needs random read access to a tens-of-GB dataset. In Python (with multiprocessing, shared stores, etc) data bookkeeping was a nightmar…

This time of year is for the pleasant world-building task of (re-)imagining syllabuses for next term. Since I'm now in charge of the program, I'm also currently working on the whole curriculum for the academic year beginning in May - it's like syllabus composition turned up to 11. Living in the time before ideas become reality is both fantastic - no inconvenient truths or anyone causing trouble - and sterile (for basically the same reasons). Can't wait to see how my plans fal…

Has anyone ever gotten `services.webdav` to work on :nixos: #NixOS?

I have a very simple and probably very common task: Expose a directory (in my case `/var/lib/paperless/consume`) via #WebDAV so my scanner can upload their PDFs there. I'm pretty much doing this¹ here, but also this person has p…

Finally finished a "migrate a bunch of scripts from python2 to python3" task that's been in my todo list for, ah, a few years.

"On a quiz that covered concepts they’d used just a few minutes before, participants in the AI group scored 17% lower than those who coded by hand, or the equivalent of nearly two letter grades. Using AI sped up the task slightly, but this didn’t reach the threshold of statistical significance."

Replaced article(s) found for q-bio.NC. https://arxiv.org/list/q-bio.NC/new

[1/1]:

- State-space kinetic Ising model reveals task-dependent entropy flow in sparsely active nonequilib...

Ken Ishihara, Hideaki Shimazaki

https://arxiv.org/abs/2502.15440 https://mastoxiv.page/@arXiv_qbioNC_bot/114057779012161849

- Mechanisms for anesthesia, unawareness, respiratory depression, memory replay and sleep: MHb > IP...

Karin Vadovičová

https://arxiv.org/abs/2509.04454 https://mastoxiv.page/@arXiv_qbioNC_bot/115167812677714466

- Meta-learning three-factor plasticity rules for structured credit assignment with sparse feedback

Dimitra Maoutsa

https://arxiv.org/abs/2512.09366 https://mastoxiv.page/@arXiv_qbioNC_bot/115699940165988688

- Prefrontal scaling of reward prediction error readout gates reinforcement-derived adaptive behavi...

Sang, Huang, Zhong, Wang, Yu, Li, Feng, Wang, Chai, Menon, Wang, Fang, Wang

https://arxiv.org/abs/2512.09761 https://mastoxiv.page/@arXiv_qbioNC_bot/115700046994546552

- Proof of a perfect platonic representation hypothesis

Liu Ziyin, Isaac Chuang

https://arxiv.org/abs/2507.01098 https://mastoxiv.page/@arXiv_csLG_bot/114788750477759162

toXiv_bot_toot

A primer on Instant Runoff Voting / Ranked Choice Voting in Washington DC for the 2026 elections.

#RankedChoiceVoting

"This single task of managing memory has proven to be one of the most difficult, let alone to grasp and understand, but most importantly, to get right.

Because not getting this right meant crashes, security issues, resource shortages, unhappy customers, and lots of white hair. To make things worse, pretty much every programming language comes these days with their own ideas of how to keep track of things on the heap."

Souvenir

On Monday, June 6th, 2011, after Steve Jobs' last public appearance as a keynote speaker, the "Developer Tools Kickoff" session took place at Apple’s annual Worldwide Developers Conference, also known as WWDC. That day, Chris Lattner, creator of the LLVM compiler infrastructure and the Swift programming language, introduced a new feature of the Objective-C language to thunderous applause. This feature, still present in Swift, is known as "Automatic Reference Counting", or ARC.

Genus-0 Surface Parameterization using Spherical Beltrami Differentials

Zhehao Xu, Lok Ming Lui

https://arxiv.org/abs/2602.01589 https://arxiv.org/pdf/2602.01589 https://arxiv.org/html/2602.01589

arXiv:2602.01589v1 Announce Type: new

Abstract: Spherical surface parameterization is a fundamental tool in geometry processing and imaging science. For a genus-0 closed surface, many efficient algorithms can map the surface to the sphere; consequently, a broad class of task-driven genus-0 mapping problems can be reduced to constructing a high-quality spherical self-map. However, existing approaches often face a trade-off between satisfying task objectives (e.g., landmark or feature alignment), maintaining bijectivity, and controlling geometric distortion. We introduce the Spherical Beltrami Differential (SBD), a two-chart representation of quasiconformal self-maps of the sphere, and establish its correspondence with spherical homeomorphisms up to conformal automorphisms. Building on the Spectral Beltrami Network (SBN), we propose a neural optimization framework BOOST that optimizes two Beltrami fields on hemispherical stereographic charts and enforces global consistency through explicit seam-aware constraints. Experiments on large-deformation landmark matching and intensity-based spherical registration demonstrate the effectiveness of our proposed framework. We further apply the method to brain cortical surface registration, aligning sulcal landmarks and jointly matching cortical sulci depth maps, showing improved task fidelity with controlled distortion and robust bijective behavior.

Hmph, a fan has got noisy overnight; I guess that's today's task then.

The LLMs are useful for some tasks. I'm currently tidying up, proofreading and editing a document written by many international co-authors.

The LLM I'm using can very quickly correct grammar and spelling mistakes and produces much easier to understand text from occasionally tortured paragraphs. It still needs an expert's eye (mine!) to check no mistakes have been introduced or complexities over-simplified.

This is actually the first time I've used an LLM for this task. It's making it much faster and less painful.

It also explains a lot about academic publishing lately..

Any #writers on here in groups that have a good system for managing submissions and critiques? like we could do more with tech e.g. a task prioritisation queue, rather than a month for submissions, a month for feedback, which feels clunky

#writing #amwriting

AgentCgroup: Understanding and Controlling OS Resources of AI Agents

Yusheng Zheng, Jiakun Fan, Quanzhi Fu, Yiwei Yang, Wei Zhang, Andi Quinn

https://arxiv.org/abs/2602.09345 https://arxiv.org/pdf/2602.09345 https://arxiv.org/html/2602.09345

arXiv:2602.09345v1 Announce Type: new

Abstract: AI agents are increasingly deployed in multi-tenant cloud environments, where they execute diverse tool calls within sandboxed containers, each call with distinct resource demands and rapid fluctuations. We present a systematic characterization of OS-level resource dynamics in sandboxed AI coding agents, analyzing 144 software engineering tasks from the SWE-rebench benchmark across two LLM models. Our measurements reveal that (1) OS-level execution (tool calls, container and agent initialization) accounts for 56-74% of end-to-end task latency; (2) memory, not CPU, is the concurrency bottleneck; (3) memory spikes are tool-call-driven with a up to 15.4x peak-to-average ratio; and (4) resource demands are highly unpredictable across tasks, runs, and models. Comparing these characteristics against serverless, microservice, and batch workloads, we identify three mismatches in existing resource controls: a granularity mismatch (container-level policies vs. tool-call-level dynamics), a responsiveness mismatch (user-space reaction vs. sub-second unpredictable bursts), and an adaptability mismatch (history-based prediction vs. non-deterministic stateful execution). We propose AgentCgroup , an eBPF-based resource controller that addresses these mismatches through hierarchical cgroup structures aligned with tool-call boundaries, in-kernel enforcement via sched_ext and memcg_bpf_ops, and runtime-adaptive policies driven by in-kernel monitoring. Preliminary evaluation demonstrates improved multi-tenant isolation and reduced resource waste.

I wanted #Neovim and #Taskwarrior to interact together, so I wrote a script:

https://www-gem.codeberg.page/cli_task

Don't forget: FAIR February kicks off tomorrow with a panel discussion on the reuse of research data in digital editions. 📚👾📖

On 18 Feb. a session follows (which I'm co-hosting 👋) on linking (research data). 🌐🪡🧵💾

How do FAIR and LOD overlap? And what does that look like with regard to research data? Quite relevant for me right now: BEACONs. What is the status quo, and what else is possible?

Link to the event page (registration):

…

Friday was an adventure in tech futility. Started with a gifted laptop, Dell Latitude, and the simple task of wiping its poor excuse for an OS and installing something sensible.

#Ubuntu wasn't it. OMG Ubuntu, what HAVE you done?! Tried first #UbuntuStudio then stock Ubuntu, 24.04, 25.04, 25.11, all the installers simply hung, no log, no journalctl, CPU chugging but nada detectable action, even hours later, just "Preparing…"

Net advice is old, of course, but points to the snap bootstrap service, fix has no effect but Kee-riced look at the mount table?! Snaps crackle and pop all over! Wtf, why? 🤯

And it is tedious. These 'DVD' iso files are 7GB, so, after 6 hours frustration, I thought, let's try something smaller to cut the turnaround time. #Debian13 simple bare-bones net-install seemed a good candidate …

And it was. Seamless install. 😊

Lastly, whenever I do work with people through a specific case of them having felt like they needed to rely on an LLM, it often goes like this.

They feel guilty and ashamed.

They explain how impossible getting that task done felt with their time and energy constraints.

Yet when I talk them through other ways of solving the same problem, often we end up completing the work much quicker than it even took them to prompt the damn LLM to begin with.

And at the end, I have often seen relief - as if the person has forgotten that there are ways to work quickly while trusting their own brain, getting help in collaboration with another person rather than from a machine.

I do kind of love seeing someone realize that the AI they thought was saving them time actually caused more hassle and stress than it was worth. And that there’s a better way.

Seriously, the worst ones are nodejs and rust: they fundamentally break the nodejs dependency model, flattening everything. They've chosen _controlling_ dependencies instead of _annotating_ them for understanding. Metadata about what's in a package and a package-build-time mechanism for substituting things in lockfiles would be far far simpler for forcing security updates than rewriting everything to use system dependencies, and versions that are not reconcilable.

Heck, both npm and cargo have put a lot of effort into repeatability though not actual hermetic builds, so it's very much Good Enough if you're using lock files. The problems are in updating those, not building packages. Mirror the registries if you need to. That's a much more tractable problem than _rewriting parts of everything you package_ or _eagerly packaging every dependency as a separate [human] task_

Approximate Cartesian Tree Matching with Substitutions

Panagiotis Charalampopoulos, Jonas Ellert, Manal Mohamed

https://arxiv.org/abs/2602.08570 https://arxiv.org/pdf/2602.08570 https://arxiv.org/html/2602.08570

arXiv:2602.08570v1 Announce Type: new

Abstract: The Cartesian tree of a sequence captures the relative order of the sequence's elements. In recent years, Cartesian tree matching has attracted considerable attention, particularly due to its applications in time series analysis. Consider a text $T$ of length $n$ and a pattern $P$ of length $m$. In the exact Cartesian tree matching problem, the task is to find all length-$m$ fragments of $T$ whose Cartesian tree coincides with the Cartesian tree $CT(P)$ of the pattern. Although the exact version of the problem can be solved in linear time [Park et al., TCS 2020], it remains rather restrictive; for example, it is not robust to outliers in the pattern.

To overcome this limitation, we consider the approximate setting, where the goal is to identify all fragments of $T$ that are close to some string whose Cartesian tree matches $CT(P)$. In this work, we quantify closeness via the widely used Hamming distance metric. For a given integer parameter $k>0$, we present an algorithm that computes all fragments of $T$ that are at Hamming distance at most $k$ from a string whose Cartesian tree matches $CT(P)$. Our algorithm runs in time $\mathcal O(n \sqrt{m} \cdot k^{2.5})$ for $k \leq m^{1/5}$ and in time $\mathcal O(nk^5)$ for $k \geq m^{1/5}$, thereby improving upon the state-of-the-art $\mathcal O(nmk)$-time algorithm of Kim and Han [TCS 2025] in the regime $k = o(m^{1/4})$.

On the way to our solution, we develop a toolbox of independent interest. First, we introduce a new notion of periodicity in Cartesian trees. Then, we lift multiple well-known combinatorial and algorithmic results for string matching and periodicity in strings to Cartesian tree matching and periodicity in Cartesian trees.
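To make the exact-matching problem in the abstract concrete, here is a minimal sketch of a naive baseline. It is not the linear-time algorithm of Park et al. and not the approximate algorithm of this paper. It relies on the known parent-distance characterisation (with ties broken toward the leftmost element): two sequences have the same Cartesian tree exactly when, for every position, the distance to the nearest earlier element that is not larger coincides. The function names are hypothetical.

```python
def parent_distances(seq):
    """For each i, the distance to the nearest j < i with seq[j] <= seq[i],
    or 0 if no such j exists (computed with a monotone stack)."""
    stack, out = [], []
    for i, x in enumerate(seq):
        # Drop earlier positions holding strictly larger values.
        while stack and seq[stack[-1]] > x:
            stack.pop()
        out.append(i - stack[-1] if stack else 0)
        stack.append(i)
    return out


def ct_match_naive(text, pattern):
    """Start positions of all length-m fragments of text whose Cartesian
    tree equals that of pattern. Runs in O(n * m) time."""
    m = len(pattern)
    target = parent_distances(pattern)
    return [
        i
        for i in range(len(text) - m + 1)
        if parent_distances(text[i:i + m]) == target
    ]


print(ct_match_naive([3, 1, 4, 1, 5, 9, 2, 6], [2, 1, 3]))  # [0, 2, 5]
```

The pattern `[2, 1, 3]` has its minimum in the middle, so it matches every window shaped "higher, lowest, higher", regardless of the actual values.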

Somehow I've got that problem that just reading a book feels like I'm neglecting "important" work. My task list is long and there is always some "productive" stuff I could do. I intellectually understand that reading is important and not just a recreational activity. Also I understand recreation is important. Still there is this nagging feeling when I pick up a book and read.

Do you have the same issue? How did or would you address it?

Today I will be mostly diving down the #3dprinting rabbit hole. First task is a couple of mounts for the hall effect #sensors for my garage door opener.

It turns out that having a band of 10 year-olds over to decorate Christmas cookies does not make for the most relaxing afternoon. Not only are they impressively loud, they lose interest in the task at hand almost immediately. So now I have something to keep me out of trouble tonight.

AOC: It’s our task to figure out how to claw back what has essentially supercharged this agency into becoming a relentless domestic paramilitary that is also a blank check to Palantir to create facial-recognition scans on US citizens.

Source:

https://reddit.com/comments/1qug0xd

Anthropic says it found Opus 4.6 "brings more focus to the most challenging parts of a task without being told to" and "thinks more deeply and more carefully" (Anthropic)

https://www.anthropic.com/news/claude-opus-4-6

Todoist FTW.

Several years ago we had smoky pies and rolls for Thanksgiving because we put off cleaning the ovens until it was too late.

I created a recurring Todoist task to clean the ovens on the 3rd Sunday of November. Ever since then we’ve had pristine ovens and smoke free cooking every Thanksgiving.

Collette appreciates that, for a holiday where most of the tasks are hers, she doesn’t have to worry: the ovens are ready to go.

You Only Train Once: Differentiable Subset Selection for Omics Data

Daphné Chopard, Jorge da Silva Gonçalves, Irene Cannistraci, Thomas M. Sutter, Julia E. Vogt

https://arxiv.org/abs/2512.17678 https://arxiv.org/pdf/2512.17678 https://arxiv.org/html/2512.17678

arXiv:2512.17678v1 Announce Type: new

Abstract: Selecting compact and informative gene subsets from single-cell transcriptomic data is essential for biomarker discovery, improving interpretability, and cost-effective profiling. However, most existing feature selection approaches either operate as multi-stage pipelines or rely on post hoc feature attribution, making selection and prediction weakly coupled. In this work, we present YOTO (you only train once), an end-to-end framework that jointly identifies discrete gene subsets and performs prediction within a single differentiable architecture. In our model, the prediction task directly guides which genes are selected, while the learned subsets, in turn, shape the predictive representation. This closed feedback loop enables the model to iteratively refine both what it selects and how it predicts during training. Unlike existing approaches, YOTO enforces sparsity so that only the selected genes contribute to inference, eliminating the need to train additional downstream classifiers. Through a multi-task learning design, the model learns shared representations across related objectives, allowing partially labeled datasets to inform one another, and discovering gene subsets that generalize across tasks without additional training steps. We evaluate YOTO on two representative single-cell RNA-seq datasets, showing that it consistently outperforms state-of-the-art baselines. These results demonstrate that sparse, end-to-end, multi-task gene subset selection improves predictive performance and yields compact and meaningful gene subsets, advancing biomarker discovery and single-cell analysis.

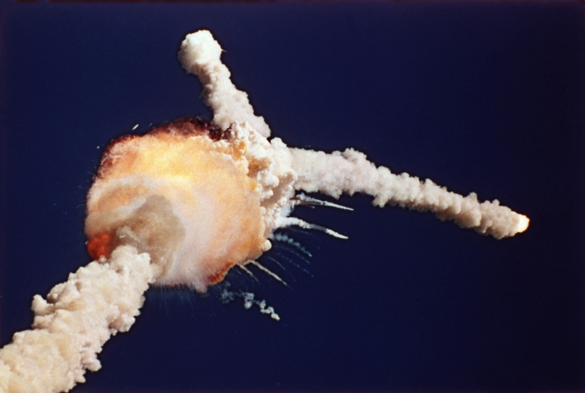

It's been 40 years. I still remember it well. I was in school. It's a few days till my 10th bday. Our (very small, maybe 14 kids) 4th grade class stopped our studies and tuned in to the launch. The surreal moment of watching the explosion grow while the announcer calmly continued reporting the stats before realizing there was a problem. The teacher having to explain what we just watched. The day we learned that going to space is still a very difficult task.

#today met a friend for lunch, will take weekly meter readings this evening, and I'm back on the recreational optimisation task at the moment!

Good Morning #Canada

In 1905, when Alberta joined Canada, a temporary provincial legislature resided in Edmonton and they were given the task of choosing the capital. Edmonton, by a vote of 16 to 8, was selected over their southern rival Calgary. This choice was despite Calgary's larger size and incorporation as a city a full decade before Edmonton. But the new capital had used their history as an important trading post established on the fur trade route and federal relationships to secure their status as the capital. In 1941, Edmonton was still a relatively small city, ranking 9th in population in Canada. Oil would transform the entire province, and Edmonton would rapidly grow as the gateway to the resource rich Alberta north.

#CanadaIsAwesome #CanadianCapitals

https://thecanadianencyclopedia.ca/en/article/edmonton

Meta-learning three-factor plasticity rules for structured credit assignment with sparse feedback

Dimitra Maoutsa

https://arxiv.org/abs/2512.09366 https://arxiv.org/pdf/2512.09366 https://arxiv.org/html/2512.09366

arXiv:2512.09366v1 Announce Type: new

Abstract: Biological neural networks learn complex behaviors from sparse, delayed feedback using local synaptic plasticity, yet the mechanisms enabling structured credit assignment remain elusive. In contrast, artificial recurrent networks solving similar tasks typically rely on biologically implausible global learning rules or hand-crafted local updates. The space of local plasticity rules capable of supporting learning from delayed reinforcement remains largely unexplored. Here, we present a meta-learning framework that discovers local learning rules for structured credit assignment in recurrent networks trained with sparse feedback. Our approach interleaves local neo-Hebbian-like updates during task execution with an outer loop that optimizes plasticity parameters via tangent-propagation through learning. The resulting three-factor learning rules enable long-timescale credit assignment using only local information and delayed rewards, offering new insights into biologically grounded mechanisms for learning in recurrent circuits.

OpenAI is testing training LLMs to produce "confessions", or self-report how they carried out a task and own up to bad behavior, like appearing to lie or cheat (Will Douglas Heaven/MIT Technology Review)

https://www.technologyreview.com/2025/12/0<…

Space Complexity Dichotomies for Subgraph Finding Problems in the Streaming Model

Yu-Sheng Shih, Meng-Tsung Tsai, Yen-Chu Tsai, Ying-Sian Wu

https://arxiv.org/abs/2602.08002 https://arxiv.org/pdf/2602.08002 https://arxiv.org/html/2602.08002

arXiv:2602.08002v1 Announce Type: new

Abstract: We study the space complexity of four variants of the standard subgraph finding problem in the streaming model. Specifically, given an $n$-vertex input graph and a fixed-size pattern graph, we consider two settings: undirected simple graphs, denoted by $G$ and $H$, and oriented graphs, denoted by $\vec{G}$ and $\vec{H}$. Depending on the setting, the task is to decide whether $G$ contains $H$ as a subgraph or as an induced subgraph, or whether $\vec{G}$ contains $\vec{H}$ as a subgraph or as an induced subgraph. Let Sub$(H)$, IndSub$(H)$, Sub$(\vec{H})$, and IndSub$(\vec{H})$ denote these four variants, respectively.

An oriented graph is well-oriented if it admits a bipartition in which every arc is oriented from one part to the other, and a vertex is non-well-oriented if both its in-degree and out-degree are non-zero. For each variant, we obtain a complete dichotomy theorem, briefly summarized as follows.

(1) Sub$(H)$ can be solved by an $\tilde{O}(1)$-pass $n^{2-\Omega(1)}$-space algorithm if and only if $H$ is bipartite.

(2) IndSub$(H)$ can be solved by an $\tilde{O}(1)$-pass $n^{2-\Omega(1)}$-space algorithm if and only if $H \in \{P_3, P_4, co\mbox{-}P_3\}$.

(3) Sub$(\vec{H})$ can be solved by a single-pass $n^{2-\Omega(1)}$-space algorithm if and only if every connected component of $\vec H$ is either a well-oriented bipartite graph or a tree containing at most one non-well-oriented vertex.

(4) IndSub$(\vec{H})$ can be solved by an $\tilde{O}(1)$-pass $n^{2-\Omega(1)}$-space algorithm if and only if the underlying undirected simple graph $H$ is a $co\mbox{-}P_3$.

Exploring Performance-Productivity Trade-offs in AMT Runtimes: A Task Bench Study of Itoyori, ItoyoriFBC, HPX, and MPI

Torben R. Lahnor, Mia Reitz, Jonas Posner, Patrick Diehl

https://arxiv.org/abs/2601.14608

XFCE4 seems like a viable desktop environment if/when KDE ceases to be serviceable. I'll have to port my Beat-It! plugin, and may need to write or massage some existing ones (the task switcher/launcher situation is...sub-optimal), but there's still time.

Everyday #Emacs #orgmode task: merge two tables

I'm grading, and want to merge the class list with the list of assignment submissions. Include the two lists as named tables, and a tiny R-snippet will produce a merged table (also named).
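The post uses an R snippet inside Org; as a rough illustration of the same join in Python (a swapped-in language, not the author's snippet), here is a minimal sketch. It assumes each table has been read into a list of dicts and that both share a key column; the column names `id`, `name` and `file` are hypothetical.

```python
def merge_tables(class_list, submissions, key="id"):
    """Left-join submissions onto the class list by a shared key column,
    flagging students who have no submission."""
    by_key = {row[key]: row for row in submissions}
    merged = []
    for student in class_list:
        extra = by_key.get(student[key], {})
        row = {**student, **{k: v for k, v in extra.items() if k != key}}
        row["submitted"] = student[key] in by_key
        merged.append(row)
    return merged


class_list = [{"id": "s1", "name": "Ada"}, {"id": "s2", "name": "Bo"}]
submissions = [{"id": "s1", "file": "hw1.pdf"}]
for row in merge_tables(class_list, submissions):
    print(row)
```

Keeping every row of the class list (a left join) is the design choice that matters for grading, since missing submissions stay visible.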

Researchers unveil PropensityBench, a benchmark showing how stressors like shorter deadlines increase misbehavior in agentic AI models during task completion (Matthew Hutson/IEEE Spectrum)

https://spectrum.ieee.org/ai-agents-safety

Welfarist Formulations for Diverse Similarity Search

Siddharth Barman, Nirjhar Das, Shivam Gupta, Kirankumar Shiragur

https://arxiv.org/abs/2602.08742 https://arxiv.org/pdf/2602.08742 https://arxiv.org/html/2602.08742

arXiv:2602.08742v1 Announce Type: new

Abstract: Nearest Neighbor Search (NNS) is a fundamental problem in data structures with wide-ranging applications, such as web search, recommendation systems, and, more recently, retrieval-augmented generations (RAG). In such recent applications, in addition to the relevance (similarity) of the returned neighbors, diversity among the neighbors is a central requirement. In this paper, we develop principled welfare-based formulations in NNS for realizing diversity across attributes. Our formulations are based on welfare functions -- from mathematical economics -- that satisfy central diversity (fairness) and relevance (economic efficiency) axioms. With a particular focus on Nash social welfare, we note that our welfare-based formulations provide objective functions that adaptively balance relevance and diversity in a query-dependent manner. Notably, such a balance was not present in the prior constraint-based approach, which forced a fixed level of diversity and optimized for relevance. In addition, our formulation provides a parametric way to control the trade-off between relevance and diversity, providing practitioners with flexibility to tailor search results to task-specific requirements. We develop efficient nearest neighbor algorithms with provable guarantees for the welfare-based objectives. Notably, our algorithm can be applied on top of any standard ANN method (i.e., use standard ANN method as a subroutine) to efficiently find neighbors that approximately maximize our welfare-based objectives. Experimental results demonstrate that our approach is practical and substantially improves diversity while maintaining high relevance of the retrieved neighbors.

Easy Adaptation: An Efficient Task-Specific Knowledge Injection Method for Large Models in Resource-Constrained Environments

Dong Chen, Zhengqing Hu, Shixing Zhao, Yibo Guo

https://arxiv.org/abs/2512.17771 https://arxiv.org/pdf/2512.17771 https://arxiv.org/html/2512.17771

arXiv:2512.17771v1 Announce Type: new

Abstract: While the enormous parameter scale endows Large Models (LMs) with unparalleled performance, it also limits their adaptability across specific tasks. Parameter-Efficient Fine-Tuning (PEFT) has emerged as a critical approach for effectively adapting LMs to a diverse range of downstream tasks. However, existing PEFT methods face two primary challenges: (1) High resource cost. Although PEFT methods significantly reduce resource demands compared to full fine-tuning, they still require substantial time and memory, making them impractical in resource-constrained environments. (2) Parameter dependency. PEFT methods heavily rely on updating a subset of parameters associated with LMs to incorporate task-specific knowledge. Yet, due to increasing competition in the LMs landscape, many companies have adopted closed-source policies for their leading models, offering access only via Application Programming Interfaces (APIs). Even then, the expense is often prohibitive and difficult to sustain, as the fine-tuning process of LMs is extremely slow. Even if small models perform far worse than LMs in general, they can achieve superior results on particular distributions while requiring only minimal resources. Motivated by this insight, we propose Easy Adaptation (EA), which designs Specific Small Models (SSMs) to complement the underfitted data distribution for LMs. Extensive experiments show that EA matches the performance of PEFT on diverse tasks without accessing LM parameters, and requires only minimal resources.


So farewell then Microsoft Office.

I haven't used you really since the 90s.

From this distance: it seems like you just kept getting worse and more exploitative since then and trapped millions of people in abusive relationships with tech.

It made me laugh when you went monthly-payment subscription-only, and then laugh even more when suckers actually ponied up for that.

It's fun when people say they don't know how to use LibreOffice, even though Libre is more like the original MS Office (before it was shit) than Office 365 was.

I wish you fare well on your transition to your new identity as #microSlop #office #CoPiliot #ai #enshitification #poem

JUST-DUB-IT: Video Dubbing via Joint Audio-Visual Diffusion

Anthony Chen, Naomi Ken Korem, Tavi Halperin, Matan Ben Yosef, Urska Jelercic, Ofir Bibi, Or Patashnik, Daniel Cohen-Or

https://arxiv.org/abs/2601.22143 https://arxiv.org/pdf/2601.22143 https://arxiv.org/html/2601.22143

arXiv:2601.22143v1 Announce Type: new

Abstract: Audio-Visual Foundation Models, which are pretrained to jointly generate sound and visual content, have recently shown an unprecedented ability to model multi-modal generation and editing, opening new opportunities for downstream tasks. Among these tasks, video dubbing could greatly benefit from such priors, yet most existing solutions still rely on complex, task-specific pipelines that struggle in real-world settings. In this work, we introduce a single-model approach that adapts a foundational audio-video diffusion model for video-to-video dubbing via a lightweight LoRA. The LoRA enables the model to condition on an input audio-video while jointly generating translated audio and synchronized facial motion. To train this LoRA, we leverage the generative model itself to synthesize paired multilingual videos of the same speaker. Specifically, we generate multilingual videos with language switches within a single clip, and then inpaint the face and audio in each half to match the language of the other half. By leveraging the rich generative prior of the audio-visual model, our approach preserves speaker identity and lip synchronization while remaining robust to complex motion and real-world dynamics. We demonstrate that our approach produces high-quality dubbed videos with improved visual fidelity, lip synchronization, and robustness compared to existing dubbing pipelines.


I'm hopeful that the current RAM crisis will end up tanking demand for other hardware to the extent that I'll be able to pick up a future Zen6 chip and AM5 board (or at least a 5950X) for less than they'd otherwise go for. Let's arbitrage this shit to our advantage! (I will shortly have a recurring CPU-bound task that will benefit greatly from doubling my core count or other improvements, and I do have 2x16GB DDR5 SoDIMMs that I could press into service with an adapter in a n…

A draft EO shows President Trump plans to grant the federal government sole power to regulate AI and create an "AI Litigation Task Force" overseen by the AG (Tina Nguyen/The Verge)

https://www.theverge.com/ai-artificial-intelligence/82…

Hardness and Tractability of T_{h+1}-Free Edge Deletion

Ajinkya Gaikwad, Soumen Maity, Leeja R

https://arxiv.org/abs/2602.00644 https://arxiv.org/pdf/2602.00644 https://arxiv.org/html/2602.00644

arXiv:2602.00644v1 Announce Type: new

Abstract: We study the parameterized complexity of the T_{h+1}-Free Edge Deletion problem. Given a graph G and integers k and h, the task is to delete at most k edges so that every connected component of the resulting graph has size at most h. The problem is NP-complete for every fixed h at least 3, while it is solvable in polynomial time for h at most 2.

Recent work showed strong hardness barriers: the problem is W[1]-hard when parameterized by the solution size together with the size of a feedback edge set, ruling out fixed-parameter tractability for many classical structural parameters. We significantly strengthen these negative results by proving W[1]-hardness when parameterized by the vertex deletion distance to a disjoint union of paths, the vertex deletion distance to a disjoint union of stars, or the twin cover number. These results unify and extend known hardness results for treewidth, pathwidth, and feedback vertex set, and show that several restrictive parameters, including treedepth, cluster vertex deletion number, and modular width, do not yield fixed-parameter tractability when h is unbounded.

On the positive side, we identify parameterizations that restore tractability. We show that the problem is fixed-parameter tractable when parameterized by cluster vertex deletion together with h, and also when parameterized by neighborhood diversity together with h via an integer linear programming formulation. We further present a fixed-parameter tractable bicriteria approximation algorithm parameterized by k. Finally, we show that the problem admits fixed-parameter tractable algorithms on split graphs and interval graphs, and we establish hardness for a directed generalization even on directed acyclic graphs.
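To make the problem statement in this abstract concrete, here is a minimal brute-force sketch in Python. It is not the paper's algorithm: it simply tries every set of at most k edges and checks whether removing it leaves all connected components with at most h vertices. The function names `component_sizes` and `find_deletion_set` are made up for illustration, and vertices are assumed to be numbered 0..n-1.

```python
from itertools import combinations


def component_sizes(n, edges):
    """Return the sorted sizes of the connected components on vertices 0..n-1."""
    parent = list(range(n))

    def find(v):
        # Union-find lookup with path halving.
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    for u, v in edges:
        ru, rv = find(u), find(v)
        if ru != rv:
            parent[ru] = rv

    sizes = {}
    for v in range(n):
        root = find(v)
        sizes[root] = sizes.get(root, 0) + 1
    return sorted(sizes.values())


def find_deletion_set(n, edges, k, h):
    """Brute force (illustrative only): return a smallest set of at most k
    edges whose removal leaves every component with at most h vertices,
    or None if no such set exists."""
    edges = list(edges)
    for r in range(min(k, len(edges)) + 1):
        for removed in combinations(range(len(edges)), r):
            kept = [e for i, e in enumerate(edges) if i not in removed]
            if all(size <= h for size in component_sizes(n, kept)):
                return [edges[i] for i in removed]
    return None


if __name__ == "__main__":
    path = [(0, 1), (1, 2), (2, 3)]  # path on 4 vertices
    print(find_deletion_set(4, path, k=1, h=2))
    print(find_deletion_set(4, path, k=0, h=2))
```

On the 4-vertex path, one deletion (the middle edge) is enough for h = 2, and no solution exists with k = 0. The search is exponential in k, which is in line with the hardness results the abstract describes for general graphs.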


Proc3D: Procedural 3D Generation and Parametric Editing of 3D Shapes with Large Language Models

Fadlullah Raji, Stefano Petrangeli, Matheus Gadelha, Yu Shen, Uttaran Bhattacharya, Gang Wu

https://arxiv.org/abs/2601.12234 https://arxiv.org/pdf/2601.12234 https://arxiv.org/html/2601.12234

arXiv:2601.12234v1 Announce Type: new

Abstract: Generating 3D models has traditionally been a complex task requiring specialized expertise. While recent advances in generative AI have sought to automate this process, existing methods produce non-editable representations, such as meshes or point clouds, limiting their adaptability for iterative design. In this paper, we introduce Proc3D, a system designed to generate editable 3D models while enabling real-time modifications. At its core, Proc3D introduces the procedural compact graph (PCG), a graph representation of 3D models that encodes the algorithmic rules and structures necessary for generating the model. This representation exposes key parameters, allowing intuitive manual adjustments via sliders and checkboxes, as well as real-time, automated modifications through natural language prompts using Large Language Models (LLMs). We demonstrate Proc3D's capabilities using two generative approaches: GPT-4o with in-context learning (ICL) and a fine-tuned LLAMA-3 model. Experimental results show that Proc3D outperforms existing methods in editing efficiency, achieving more than 400x speedup over conventional approaches that require full regeneration for each modification. Additionally, Proc3D improves by 28% the ULIP score, a metric that evaluates the alignment between generated 3D models and text prompts. By enabling text-aligned 3D model generation along with precise, real-time parametric edits, Proc3D facilitates highly accurate text-based image editing applications.


![Grok output:

-----------

Q: I want to wash my car. The car wash is 50 meters away. Should I walk or drive?

[40 pages]

-----------

Drive.

The goal is to wash the car, not to get yourself to the car wash.

Walking 50 meters gets you there in about 30-45 seconds (maybe 20 if you're speed-walking), but your dirty car remains exactly where it is-still dirty. To actually accomplish the task, the car has to travel those 50 meters.](https://cdn.social.linux.pizza/system/media_attachments/files/116/079/626/974/948/019/small/d825a752e2741c3f.png)